This repository is based on the Standalone Cluster on Docker project and on the material developed by the Data System Group at the University of Tartu.

The project was featured in an article on the official MongoDB tech blog! 😱

The project just got its own article on the Towards Data Science Medium blog! ✨

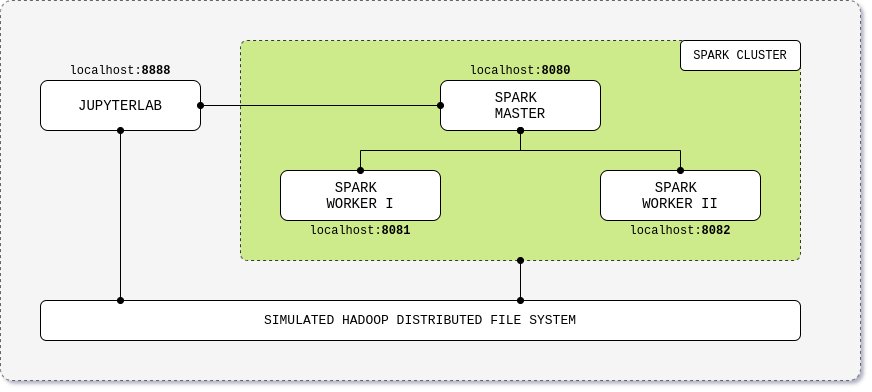

This project gives you an Apache Spark cluster in standalone mode with a JupyterLab interface built on top of Docker. It also includes a second version which mounts an HDFS cluster and allows testing parallel data processing.

Learn Apache Spark through its Scala, Python (PySpark) and R (SparkR) APIs by running the Jupyter notebooks with examples on how to read, process and write data.

```bash
curl -LO https://raw.githubusercontent.com/cluster-apps-on-docker/spark-standalone-cluster-on-docker/master/docker-compose.yml
docker-compose up
```

| Application | URL | Description |
|---|---|---|
| JupyterLab | localhost:8888 | Cluster interface with built-in Jupyter notebooks |
| Spark Driver | localhost:4040 | Spark Driver web UI |
| Spark Master | localhost:8080 | Spark Master node |
| Spark Worker I | localhost:8081 | Spark Worker node with 1 core and 512m of memory (default) |
| Spark Worker II | localhost:8082 | Spark Worker node with 1 core and 512m of memory (default) |
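
As a quick sanity check after `docker-compose up`, the ports in the table above can be probed from the host. The sketch below is an illustration only: the `ENDPOINTS` mapping and the `is_port_open` helper are hypothetical names, not part of this project, and the script assumes the cluster runs on `localhost` with the default ports from the table. The Spark Driver UI on port 4040 is normally only reachable while a Spark application is running, so "down" there is expected on an idle cluster.

```python
import socket

# Default host ports copied from the table above
# (assumption: they were not changed in docker-compose.yml).
ENDPOINTS = {
    "JupyterLab": 8888,
    "Spark Driver": 4040,
    "Spark Master": 8080,
    "Spark Worker I": 8081,
    "Spark Worker II": 8082,
}


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    for name, port in ENDPOINTS.items():
        status = "up" if is_port_open("localhost", port) else "down"
        print(f"{name:<16} http://localhost:{port}  {status}")
```

A plain TCP probe only shows that something is listening on the port; it does not confirm that the service behind it is healthy.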

1. Install Docker and Docker Compose, check the supported infra versions;
2. Download the docker compose file;

   ```bash
   curl -LO https://raw.githubusercontent.com/cluster-apps-on-docker/spark-standalone-cluster-on-docker/master/docker-compose.yml
   ```

3. Edit the docker compose file with your favorite tech stack version, check the supported apps versions;
4. Start the cluster;

   ```bash
   docker-compose up
   ```

5. Run Apache Spark code using the provided Jupyter notebooks with Scala, PySpark and SparkR examples;
6. Stop the cluster by typing `ctrl+c` on the terminal;
7. Run step 4 to restart the cluster.

Note: Local build is currently only supported on Linux OS distributions.

1. Download the source code or clone the repository;
2. Move to the build directory;

   ```bash
   cd build
   ```

3. Edit the build.yml file with your favorite tech stack version;
4. Match those versions in the docker compose file;
5. Build the images;

   ```bash
   chmod +x build.sh ; ./build.sh
   ```

6. Start the cluster;

   ```bash
   docker-compose up
   ```

7. Run Apache Spark code using the provided Jupyter notebooks with Scala, PySpark and SparkR examples;
8. Stop the cluster by typing `ctrl+c` on the terminal;
9. Run step 6 to restart the cluster.

- Infra

| Component | Version |
|---|---|
| Docker Engine | 1.13.0+ |
| Docker Compose | 1.10.0+ |
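
The `+` suffix in the table means "this version or newer". Comparing version strings as plain text gives wrong answers (as strings, `"1.9.0" > "1.13.0"`), so a check has to compare the numeric components. A minimal sketch, assuming dotted numeric versions; `parse_version` and `meets_minimum` are hypothetical helpers, not part of this project, and the installed version is supplied by hand, for instance from the output of `docker --version`.

```python
import re

# Minimum versions copied from the Infra table above.
MINIMUMS = {"Docker Engine": "1.13.0", "Docker Compose": "1.10.0"}


def parse_version(text: str) -> tuple:
    """Extract the leading dotted number as a tuple of ints, e.g. '1.13.0+' -> (1, 13, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", text.strip())
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group().split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """Return True if installed >= minimum, comparing component by component."""
    have, need = parse_version(installed), parse_version(minimum)
    # Pad the shorter tuple with zeros so that '1.13' equals '1.13.0'.
    width = max(len(have), len(need))
    have += (0,) * (width - len(have))
    need += (0,) * (width - len(need))
    return have >= need
```

For example, `meets_minimum("20.10.7", MINIMUMS["Docker Engine"])` evaluates to `True`, while `meets_minimum("1.9.1", MINIMUMS["Docker Compose"])` evaluates to `False`.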

- Languages and Kernels

| Spark | Hadoop | Scala | Scala Kernel | Python | Python Kernel | R | R Kernel |
|---|---|---|---|---|---|---|---|
| 3.x | 3.2 | 2.12.10 | 0.10.9 | 3.7.3 | 7.19.0 | 3.5.2 | 1.1.1 |
| 2.x | 2.7 | 2.11.12 | 0.6.0 | 3.7.3 | 7.19.0 | 3.5.2 | 1.1.1 |

- Apps

| Component | Version | Docker Tag |
|---|---|---|
| Apache Spark | 2.4.0 \| 2.4.4 \| 3.0.0 | `<spark-version>` |
| JupyterLab | 2.1.4 \| 3.0.0 | `<jupyterlab-version>-spark-<spark-version>` |
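
The Docker Tag column describes a naming pattern rather than a literal value. As a sketch of how the placeholders expand, the hypothetical helpers below build tag strings from version numbers. They only format strings; they do not check that a given tag has actually been published.

```python
def spark_tag(spark_version: str) -> str:
    """Tag pattern for the Apache Spark row: <spark-version>."""
    return spark_version


def jupyterlab_tag(jupyterlab_version: str, spark_version: str) -> str:
    """Tag pattern for the JupyterLab row: <jupyterlab-version>-spark-<spark-version>."""
    return f"{jupyterlab_version}-spark-{spark_version}"


if __name__ == "__main__":
    # Versions taken from the Apps table above.
    print(spark_tag("3.0.0"))                # prints 3.0.0
    print(jupyterlab_tag("3.0.0", "3.0.0"))  # prints 3.0.0-spark-3.0.0
```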

| Image | Size | Downloads |
|---|---|---|
| JupyterLab | | |
| Spark Master | | |
| Spark Worker | | |

We'd love some help. To contribute, please read this file.

A list of the amazing people who have contributed to the project can be found in this file. This project is maintained by:

André Perez - dekoperez - andre.marcos.perez@gmail.com

Support us on GitHub by starring this project ⭐

Support us on Patreon. 💖