LLMUnity enables seamless integration of Large Language Models (LLMs) within the Unity engine.

It lets you create intelligent characters that your players can interact with for an immersive experience.

LLMUnity is built on top of the awesome llama.cpp, llamafile and usearch libraries.

- 💬 Craft your own NPC AI that the player interacts with in natural language

- 🖌️ The NPC can respond creatively according to your character definition

- 🗝️ or with predefined answers that stick to your script!

- 🔍 You can even build an intelligent search engine with our search system (RAG)

Features

- 💻 Cross-platform! Windows, Linux and macOS (versions)

- 🏠 Runs locally without internet access. No data ever leave the game!

- ⚡ Blazing fast inference on CPU and GPU (Nvidia and AMD)

- 🤗 Support of the major LLM models (models)

- 🔧 Easy to set up, call with a single line of code

- 💰 Free to use for both personal and commercial purposes

Tested on Unity: 2021 LTS, 2022 LTS, 2023

Upcoming Releases

- Join us at Discord and say hi!

- ⭐ Star the repo and spread the word about the project!

- Submit feature requests or bugs as issues or even submit a PR and become a collaborator!

To install LLMUnity:

- Open the Package Manager in Unity: `Window > Package Manager`
- Click the `+` button and select `Add package from git URL`
- Use the repository URL `https://github.com/undreamai/LLMUnity.git` and click `Add`

On macOS you also need to have the Xcode Command Line Tools installed:

- From inside a terminal run `xcode-select --install`

For a step-by-step tutorial you can have a look at our guide:

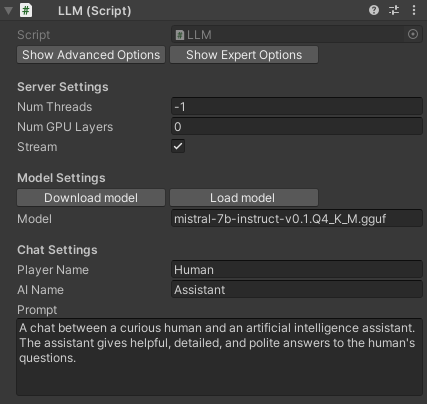

The first step is to create a GameObject for the LLM ♟️:

- Create an empty GameObject. In the GameObject Inspector click `Add Component` and select the LLM script.
- Download the default model with the `Download Model` button (~4GB). Or load your own .gguf model with the `Load model` button (see Use your own model).
- Define the role of your AI in the `Prompt`. You can also define the name of the AI (`AI Name`) and the player (`Player Name`).
- (Optional) By default you receive the reply from the model as it is produced in real-time (recommended). If you want the full reply in one go, deselect the `Stream` option.
- (Optional) Adjust the server or model settings to your preference (see Options).

In your script you can then use it as follows 🦄:

    using UnityEngine;
    using LLMUnity;

    public class MyScript : MonoBehaviour {
      public LLM llm;

      void HandleReply(string reply){
        // do something with the reply from the model
        Debug.Log(reply);
      }

      void Game(){
        // your game function
        ...
        string message = "Hello bot!";
        _ = llm.Chat(message, HandleReply);
        ...
      }
    }

You can also specify a function to call when the model reply is complete.

This is useful if the Stream option is selected (default behaviour) to continue the conversation:

    void ReplyCompleted(){
      // do something when the reply from the model is complete
      Debug.Log("The AI replied");
    }

    void Game(){
      // your game function
      ...
      string message = "Hello bot!";
      _ = llm.Chat(message, HandleReply, ReplyCompleted);
      ...
    }

- Finally, in the Inspector of the GameObject of your script, select the LLM GameObject created above as the llm property.

That's all ✨! You can also:

Build multiple characters

LLMUnity lets you build multiple AI characters efficiently, where each character has its own prompt.

See the ServerClient sample for a server-client example.

To use multiple characters:

- Create a single GameObject for the LLM as above for the first character.
- For every additional character, create a GameObject using the LLMClient script instead of the LLM script. Define the prompt (and other parameters) of the LLMClient for the character.

Then in your script:

    using UnityEngine;
    using LLMUnity;

    public class MyScript : MonoBehaviour {
      public LLM cat;
      public LLMClient dog;
      public LLMClient bird;

      void HandleCatReply(string reply){
        // do something with the reply from the cat character
        Debug.Log(reply);
      }

      void HandleDogReply(string reply){
        // do something with the reply from the dog character
        Debug.Log(reply);
      }

      void HandleBirdReply(string reply){
        // do something with the reply from the bird character
        Debug.Log(reply);
      }

      void Game(){
        // your game function
        ...
        _ = cat.Chat("Hi cat!", HandleCatReply);
        _ = dog.Chat("Hello dog!", HandleDogReply);
        _ = bird.Chat("Hiya bird!", HandleBirdReply);
        ...
      }
    }

Process the prompt at the beginning of your app for faster initial processing time

    void WarmupCompleted(){
      // do something when the warmup is complete
      Debug.Log("The AI is warm");
    }

    void Game(){
      // your game function
      ...
      _ = llm.Warmup(WarmupCompleted);
      ...
    }

Choose whether to add the message to the chat/prompt history

The last argument of the Chat function is a boolean that specifies whether to add the message to the history (default: true):

    void Game(){
      // your game function
      ...
      string message = "Hello bot!";
      _ = llm.Chat(message, HandleReply, ReplyCompleted, false);
      ...
    }

Wait for the reply before proceeding to the next lines of code

For this you can use the async/await functionality:

    async void Game(){
      // your game function
      ...
      string message = "Hello bot!";
      string reply = await llm.Chat(message, HandleReply, ReplyCompleted);
      Debug.Log(reply);
      ...
    }

Add an LLM / LLMClient component dynamically

    using UnityEngine;
    using LLMUnity;

    public class MyScript : MonoBehaviour
    {
        LLM llm;
        LLMClient llmclient;

        async void Start()
        {
            // Add and set up an LLM object
            gameObject.SetActive(false);
            llm = gameObject.AddComponent<LLM>();
            await llm.SetModel("mistral-7b-instruct-v0.2.Q4_K_M.gguf");
            llm.prompt = "A chat between a curious human and an artificial intelligence assistant.";
            gameObject.SetActive(true);

            // or an LLMClient object
            gameObject.SetActive(false);
            llmclient = gameObject.AddComponent<LLMClient>();
            llmclient.prompt = "A chat between a curious human and an artificial intelligence assistant.";
            gameObject.SetActive(true);
        }
    }

Use a remote server

You can also build a remote server that does the processing and have local clients that interact with it. To do that:

- Create a server based on the `LLM` script or a standard llama.cpp server.
- Create characters with the `LLMClient` script. The characters can be configured to connect to the remote instance by providing the IP address and port of the server in the `host`/`port` properties.

LLMUnity implements a super-fast similarity search functionality with a Retrieval-Augmented Generation (RAG) system.

This works as follows.

Building the data: you provide text inputs (a phrase, paragraph or document) to add to the data. Each input is split into sentences (optional) and encoded into embeddings with a deep learning model.

Searching: you can then search for a query text input. The input is again encoded and the most similar text inputs or sentences in the data are retrieved.
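To make the two steps above more concrete, here is a minimal, self-contained sketch of embedding-based retrieval. It is not LLMUnity's implementation: the `ToySearchIndex` class is hypothetical, the vectors are supplied by hand instead of coming from an embedding model, and cosine similarity with a brute-force scan is an assumption chosen for readability.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical toy index: stores (text, vector) pairs and ranks them by cosine similarity.
public class ToySearchIndex
{
    private readonly List<(string Text, float[] Vector)> entries =
        new List<(string Text, float[] Vector)>();

    // "Building the data": in a real system the vector would be produced by an embedding model.
    public void Add(string text, float[] vector) => entries.Add((text, vector));

    // Cosine similarity between two vectors of equal length (0 if either has zero norm).
    public static double Cosine(float[] a, float[] b)
    {
        if (a.Length != b.Length) throw new ArgumentException("Vectors must have the same length");
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) return 0;
        return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }

    // "Searching": return the k stored texts whose vectors are most similar to the query vector.
    public string[] Search(float[] query, int k) =>
        entries.OrderByDescending(e => Cosine(e.Vector, query))
               .Take(k)
               .Select(e => e.Text)
               .ToArray();
}
```

For example, after adding "greeting" with vector (1, 0, 0), "weather" with (0, 1, 0) and "mixed" with (0.7, 0.7, 0), searching with the query vector (0.9, 0.1, 0) and k = 2 returns "greeting" followed by "mixed". A brute-force scan like this is fine for a handful of entries; larger data sets usually call for an approximate nearest-neighbour index.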

To use search:

- Create an empty GameObject for the embedding model 🔍. In the GameObject Inspector click `Add Component` and select the `Embedding` script.
- Select the model you prefer from the drop-down list to download it (bge small, bge base or MiniLM v6).

In your script you can then use it as follows 🦄:

    using UnityEngine;
    using LLMUnity;

    public class MyScript : MonoBehaviour {
      public Embedding embedding;
      SearchEngine search;

      void Game(){
        ...
        string[] inputs = new string[]{
          "Hi! I'm an LLM.", "the weather is nice. I like it.", "I'm a RAG system"
        };
        // build the embedding
        EmbeddingModel model = embedding.GetModel();
        search = new SearchEngine(model);
        foreach (string input in inputs) search.Add(input);
        // get the 2 most similar phrases
        string[] similar = search.Search("hello!", 2);
        // or get the 2 most similar sentences
        string[] similarSentences = search.SearchSentences("hello!", 2);
        ...
      }
    }

- Finally, in the Inspector of the GameObject of your script, select the Embedding GameObject created above as the embedding property.

You can save the data along with the embeddings:

    search.Save("Embeddings.zip");

and load them from disk:

    SearchEngine search = SearchEngine.Load(model, "Embeddings.zip");

If you want to manage dialogues, LLMUnity provides the Dialogue class for ease of use:

    Dialogue dialogue = new Dialogue(model);

    // add a text
    dialogue.Add("the weather is nice");
    // add a text for a character
    dialogue.Add("hi I'm Luke", "Luke");
    // add a text for a character and a dialogue part
    dialogue.Add("Hi again", "Luke", "ACT 2");

    // search for similar texts
    string[] similar = dialogue.Search("how is the weather?", 3);
    // search for similar texts within a character
    string[] similarLuke = dialogue.Search("hi there!", 2, "Luke");
    // search for similar texts within a character and dialogue part
    string[] similarLukeAct2 = dialogue.Search("hello!", 3, "Luke", "ACT 2");

That's all ✨!

The Samples~ folder contains several examples of interaction 🤖:

- SimpleInteraction: Simple interaction between a player and an AI

- ServerClient: Simple interaction between a player and multiple characters using an `LLM` and an `LLMClient`

- ChatBot: Interaction between a player and an AI with a UI similar to a messaging app (see image below)

- HamletSearch: Interaction with predefined answers using the search functionality

- LLMUnityBot: Interaction by searching and providing relevant context to the AI using the search functionality

To install a sample:

- Open the Package Manager: `Window > Package Manager`
- Select the `LLMUnity` Package. From the `Samples` Tab, click `Import` next to the sample you want to install.

The samples can be run with the Scene.unity scene they contain inside their folder.

In the scene, select the LLM GameObject and download the default model (`Download model`) or load an existing .gguf model (`Load model`).

Save the scene, run and enjoy!

LLMUnity uses the Microsoft Phi-2 or Mistral 7B Instruct model by default, quantised with the Q4 method.

Alternative models can be downloaded from HuggingFace.

The required model format is .gguf as defined by llama.cpp.

The easiest way is to download gguf models directly from TheBloke, who has converted an astonishing number of models 🌈!

Otherwise, other model formats can be converted to gguf with the convert.py script of llama.cpp.

❕ Before using any model make sure you check its license ❕
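Since the model must be in .gguf format, a quick sanity check before loading a downloaded file can save some debugging. The sketch below only checks that the file starts with the 4-byte ASCII magic `GGUF` used by the format; the `GgufCheck` helper is hypothetical and not part of LLMUnity, and passing the check does not guarantee that the model is supported.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical helper, not part of LLMUnity.
public static class GgufCheck
{
    // Returns true when the file starts with the 4-byte ASCII magic "GGUF".
    public static bool HasGgufMagic(string path)
    {
        using (FileStream stream = File.OpenRead(path))
        {
            byte[] magic = new byte[4];
            int read = 0;
            // Read may return fewer bytes than requested, so loop until 4 bytes or end of file.
            while (read < magic.Length)
            {
                int n = stream.Read(magic, read, magic.Length - read);
                if (n == 0) break;
                read += n;
            }
            return read == magic.Length && Encoding.ASCII.GetString(magic) == "GGUF";
        }
    }
}
```

A file that fails this check was most likely saved in another format (or the download was an error page), so it needs converting or re-downloading before it can be used.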

- `Show/Hide Advanced Options`: toggle to show/hide the advanced options below
- `Show/Hide Expert Options`: toggle to show/hide the expert options below

- `Num Threads`: number of threads to use (default: -1 = all)
- `Num GPU Layers`: number of model layers to offload to the GPU. If set to 0 the GPU is not used. Use a large number, e.g. >30, to utilise the GPU as much as possible. If the user's GPU is not supported, the LLM will fall back to the CPU
- `Stream`: select to receive the reply from the model as it is produced (recommended!). If it is not selected, the full reply from the model is received in one go
- Advanced options
  - `Parallel Prompts`: number of prompts that can happen in parallel (default: -1 = number of LLM/LLMClient objects)
  - `Debug`: select to log the output of the model in the Unity Editor
  - `Port`: port to run the server

- `Download model`: click to download the default model (Mistral 7B Instruct)
- `Load model`: click to load your own model in .gguf format
- `Model`: the model being used (inside the Assets/StreamingAssets folder)
- Advanced options
  - `Load lora`: click to load a LoRA model in .bin format
  - `Load grammar`: click to load a grammar in .gbnf format
  - `Lora`: the LoRA model being used (inside the Assets/StreamingAssets folder)
  - `Grammar`: the grammar being used (inside the Assets/StreamingAssets folder)
  - `Context Size`: size of the prompt context (0 = context size of the model)
  - `Batch Size`: batch size for prompt processing (default: 512)
  - `Seed`: seed for reproducibility. For random results every time select -1
  - `Cache Prompt`: save the ongoing prompt from the chat (default: true). This saves the prompt as it is being created by the chat to avoid reprocessing the entire prompt every time.
  - `Num Predict`: number of tokens to predict (default: 256, -1 = infinity, -2 = until context filled). This is the maximum number of tokens the model will predict. When Num Predict is reached the model stops generating, so words or sentences might be left unfinished if the value is too low.
  - `Temperature`: LLM temperature, lower values give more deterministic answers. The temperature setting adjusts how random the generated responses are. Turning it up makes the generated choices more varied and unpredictable. Turning it down makes the generated responses more predictable and focused on the most likely options.
  - `Top K`: top-k sampling (default: 40, 0 = disabled). The top k value limits sampling to the k most probable tokens at each step of generation. This value can help fine-tune the output and make it adhere to specific patterns or constraints.
  - `Top P`: top-p sampling (default: 0.9, 1.0 = disabled). The top p value is a threshold on cumulative probability: at each step, sampling is restricted to the most probable tokens whose combined probability reaches this threshold (p). Lowering this value makes the output more focused, raising it allows more diverse output.
  - `Min P`: minimum probability for a token to be used (default: 0.05). The probability is defined relative to the probability of the most likely token.
  - `Repeat Penalty`: control the repetition of token sequences in the generated text (default: 1.1). The penalty is applied to repeated tokens.
  - `Presence Penalty`: repeated token presence penalty (default: 0.0, 0.0 = disabled). Positive values penalize new tokens based on whether they appear in the text so far, increasing the model's likelihood to talk about new topics.
  - `Frequency Penalty`: repeated token frequency penalty (default: 0.0, 0.0 = disabled). Positive values penalize new tokens based on their existing frequency in the text so far, decreasing the model's likelihood to repeat the same line verbatim.
- Expert options
  - `tfs_z`: Enable tail free sampling with parameter z (default: 1.0, 1.0 = disabled).
  - `typical_p`: Enable locally typical sampling with parameter p (default: 1.0, 1.0 = disabled).
  - `repeat_last_n`: Last n tokens to consider for penalizing repetition (default: 64, 0 = disabled, -1 = ctx-size).
  - `penalize_nl`: Penalize newline tokens when applying the repeat penalty (default: true).
  - `penalty_prompt`: Prompt for the purpose of the penalty evaluation. Can be either `null`, a string or an array of numbers representing tokens (default: `null` = use original `prompt`).
  - `mirostat`: Enable Mirostat sampling, controlling perplexity during text generation (default: 0, 0 = disabled, 1 = Mirostat, 2 = Mirostat 2.0).
  - `mirostat_tau`: Set the Mirostat target entropy, parameter tau (default: 5.0).
  - `mirostat_eta`: Set the Mirostat learning rate, parameter eta (default: 0.1).
  - `n_probs`: If greater than 0, the response also contains the probabilities of top N tokens for each generated token (default: 0).
  - `ignore_eos`: Ignore end of stream token and continue generating (default: false).

- `Player Name`: the name of the player
- `AI Name`: the name of the AI
- `Prompt`: a description of the AI role
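To give an intuition for how the Temperature, Top K, Top P and Min P options shape the choice of the next token, here is a small illustrative sketch. It is not the sampler used by llama.cpp or LLMUnity: the `ToySampler` class is hypothetical, the order in which the filters are applied is an assumption, and the temperature is assumed to be greater than 0.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration of the sampling options, not the actual sampler.
public static class ToySampler
{
    // Turn raw scores (logits) into probabilities; temperature must be > 0.
    // Lower temperatures sharpen the distribution, higher ones flatten it.
    public static double[] Softmax(double[] logits, double temperature)
    {
        double max = logits.Max(); // subtract the maximum for numerical stability
        double[] exps = logits.Select(l => Math.Exp((l - max) / temperature)).ToArray();
        double sum = exps.Sum();
        return exps.Select(e => e / sum).ToArray();
    }

    // Return the indices of the tokens that remain candidates, most probable first.
    public static List<int> Filter(double[] probs, int topK, double topP, double minP)
    {
        List<int> ranked = Enumerable.Range(0, probs.Length)
                                     .OrderByDescending(i => probs[i])
                                     .ToList();
        if (topK > 0) ranked = ranked.Take(topK).ToList();  // top-k (0 = disabled)
        double threshold = minP * probs[ranked[0]];         // min-p, relative to the top token
        var kept = new List<int>();
        double cumulative = 0.0;
        foreach (int i in ranked)
        {
            if (probs[i] < threshold) break;                // too unlikely compared to the top token
            kept.Add(i);
            cumulative += probs[i];
            if (cumulative >= topP) break;                  // top-p: enough probability mass collected
        }
        return kept;
    }
}
```

The next token would then be drawn at random from the kept candidates in proportion to their probabilities. With logits (2, 1, 0, -1) and a temperature of 1, for instance, keeping only the top 2 tokens (`topK = 2`) leaves tokens 0 and 1, while a strict `minP` of 0.5 leaves only token 0.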

LLMUnity is licensed under MIT (LICENSE.md) and uses third-party software with MIT and Apache licenses (Third Party Notices.md).