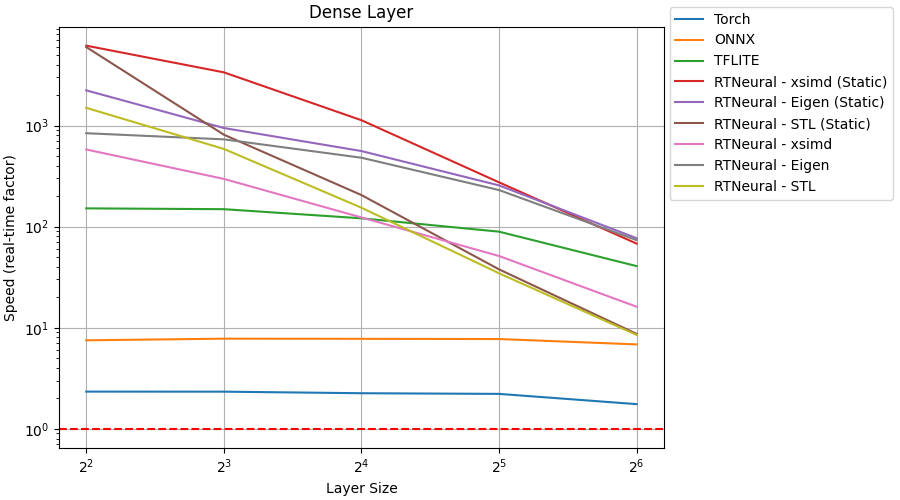

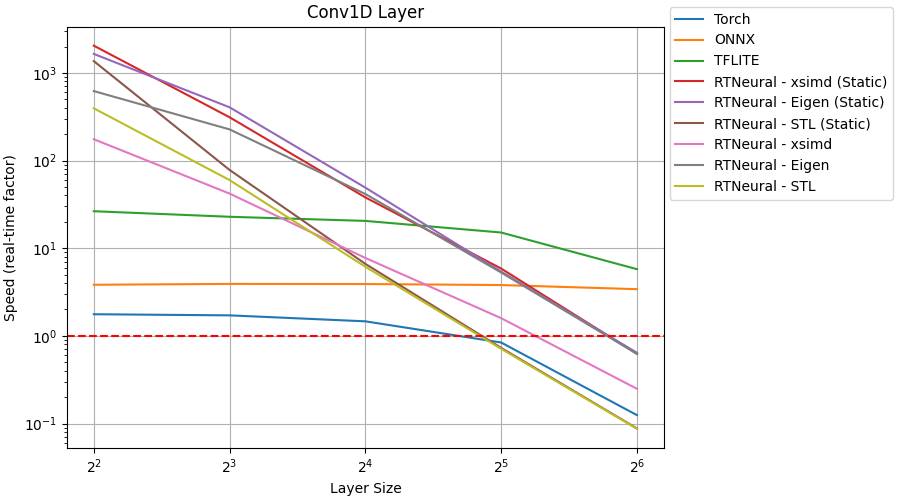

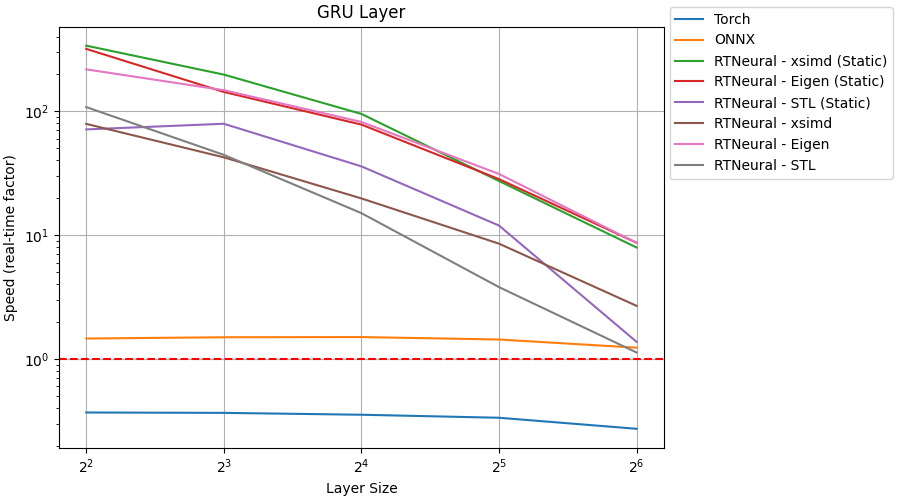

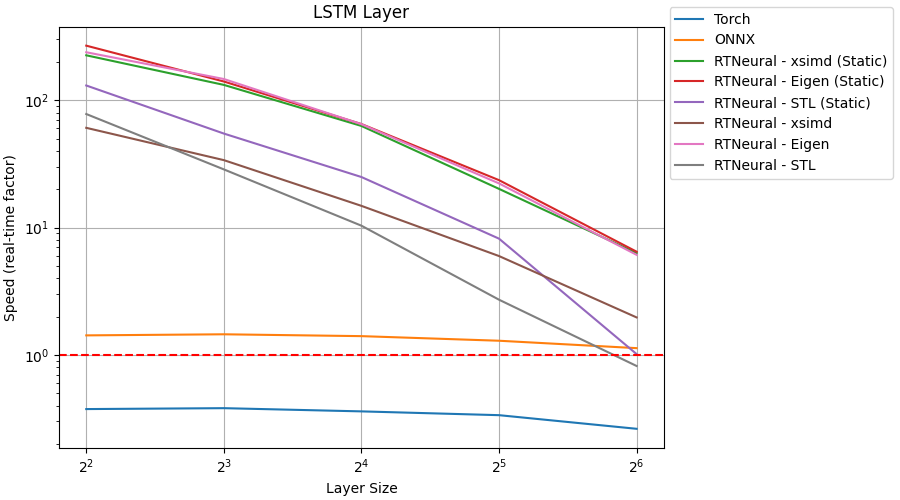

This repository contains an executable for comparing the performance of C++ neural network inferencing engines. Five inferencing engines are currently compared:

- RTNeural (compile-time API)

- RTNeural (run-time API)

- libtorch

- onnxruntime

- TensorFlow Lite
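RTNeural appears twice in the list because it offers a compile-time API, where layer sizes are fixed as template parameters, and a run-time API, where sizes are only known once a model is loaded. The sketch below illustrates that distinction with a single dense layer in plain C++. `DenseFixed` and `DenseDynamic` are hypothetical names for illustration only. They are not RTNeural's classes, and they do not reproduce how RTNeural implements either API.

```cpp
#include <array>
#include <cstddef>
#include <vector>

// Hypothetical compile-time layer: sizes are template parameters, so storage
// is fixed-size and loop trip counts are known to the compiler, which can
// unroll or vectorize them.
template <std::size_t In, std::size_t Out>
struct DenseFixed {
    std::array<std::array<float, In>, Out> weights{};
    std::array<float, Out> bias{};

    std::array<float, Out> forward(const std::array<float, In>& x) const {
        std::array<float, Out> y{};
        for (std::size_t o = 0; o < Out; ++o) {
            float acc = bias[o];
            for (std::size_t i = 0; i < In; ++i)
                acc += weights[o][i] * x[i];
            y[o] = acc;
        }
        return y;
    }
};

// Hypothetical run-time layer: sizes are chosen at construction (e.g. after
// parsing a model file), so weights live in heap-allocated vectors.
struct DenseDynamic {
    std::size_t in, out;
    std::vector<float> weights; // row-major, out x in
    std::vector<float> bias;

    DenseDynamic(std::size_t inSize, std::size_t outSize)
        : in(inSize), out(outSize),
          weights(inSize * outSize, 0.0f), bias(outSize, 0.0f) {}

    // Writes into a caller-provided buffer so no allocation happens per call.
    void forward(const float* x, float* y) const {
        for (std::size_t o = 0; o < out; ++o) {
            float acc = bias[o];
            for (std::size_t i = 0; i < in; ++i)
                acc += weights[o * in + i] * x[i];
            y[o] = acc;
        }
    }
};
```

Both layers compute the same result. The fixed-size version simply gives the compiler more information to optimize with, which is the kind of difference the two RTNeural entries are meant to measure.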

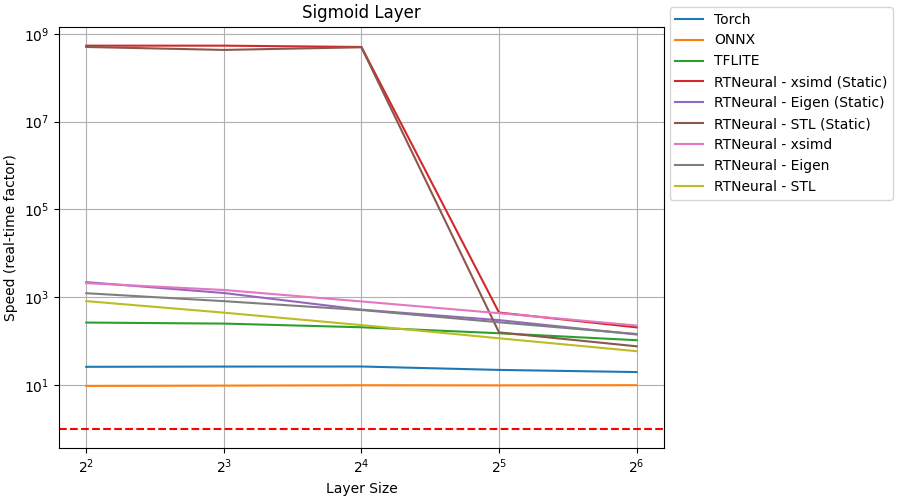

All benchmark results were obtained on a 2018 Mac Mini with an Intel(R) Core(TM) i7-8700B CPU @ 3.20GHz. The "Real-Time Factor" measurement assumes a sample rate of 48 kHz.
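As a rough guide to reading the real-time factor, the sketch below assumes the common convention of seconds of audio processed per second of wall-clock time, so values above 1 mean an engine keeps up with a 48 kHz stream. This definition is an assumption; the benchmark executable may compute it differently (for example, as the inverse ratio). `realTimeFactor` and `measureRealTimeFactor` are hypothetical helpers, and the callable passed in stands in for a model's forward pass.

```cpp
#include <chrono>
#include <cstddef>
#include <vector>

// Assumed definition: audio duration divided by processing time.
double realTimeFactor(std::size_t numSamples, double sampleRate, double elapsedSeconds) {
    const double audioSeconds = static_cast<double>(numSamples) / sampleRate;
    return audioSeconds / elapsedSeconds;
}

// Times a per-sample callable float(float) over a buffer and returns its
// real-time factor at the given sample rate (48 kHz by default).
template <typename Process>
double measureRealTimeFactor(Process&& process, std::size_t numSamples,
                             double sampleRate = 48000.0) {
    std::vector<float> input(numSamples, 0.1f);
    std::vector<float> output(numSamples);

    const auto start = std::chrono::steady_clock::now();
    for (std::size_t n = 0; n < numSamples; ++n)
        output[n] = process(input[n]);
    const auto stop = std::chrono::steady_clock::now();

    // Consume the outputs so the compiler cannot discard the timed loop.
    volatile float sink = 0.0f;
    for (float y : output)
        sink = sink + y;

    const double elapsed = std::chrono::duration<double>(stop - start).count();
    return realTimeFactor(numSamples, sampleRate, elapsed);
}
```

For example, processing 48,000 samples (one second of audio at 48 kHz) in 0.5 s gives a real-time factor of 2 under this convention.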

Tanh:

ReLU:

Sigmoid:
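Tanh, ReLU, and Sigmoid are presumably the activation functions used in the networks behind each group of results. For reference, the sketch below gives their standard element-wise definitions. The function names are hypothetical, and these are textbook formulas, not the implementation used by any of the benchmarked engines.

```cpp
#include <algorithm>
#include <cmath>

// tanh(x): smooth, output in (-1, 1).
inline float tanhActivation(float x) { return std::tanh(x); }

// ReLU(x) = max(x, 0).
inline float reluActivation(float x) { return std::max(x, 0.0f); }

// sigmoid(x) = 1 / (1 + e^-x), output in (0, 1).
inline float sigmoidActivation(float x) { return 1.0f / (1.0f + std::exp(-x)); }
```

ReLU needs only a comparison, while Tanh and Sigmoid involve transcendental functions, which is one reason per-activation timings can differ between engines.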