A library of functions that streamlines two sets of common text-corpus tasks:

- annotated corpus search of grammatical constructions and complex lexical patterns in context, and

- detailed summary and aggregation of corpus search results.

Grammatical constructions and complex lexical patterns are formalized here (in terms of an annotated corpus) as patterns composed of:

- different types of elements (eg, form, lemma, or part-of-speech),

- contiguous and/or non-contiguous elements,

- positionally fixed and/or free (ie, optional) elements, or

- any combination thereof.

Under the hood, corpuslingr search is regex-based and (informally) tuple-based, akin to the RegexpParser class in Python's Natural Language Toolkit (NLTK). Regex character matching is streamlined with a simple "corpus querying language" modeled after the more intuitive and transparent syntax used in the online BYU suite of English corpora. This allows for convenient specification of search patterns composed of form, lemma, and part-of-speech elements, with all of the functionality of regex metacharacters and repetition quantifiers.

At present, part-of-speech search is based on English-specific part-of-speech tags. In theory, search functionality could be made more language-generic by utilizing universal part-of-speech tags when building tuples. However, language-specific search will ultimately be more powerful/insightful.
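As a rough illustration of the regex-over-tuples idea described above, the base R sketch below builds `<token~lemma~pos>` tuples (the tuple format shown later in this walkthrough) for a handful of made-up tokens and searches them with a hand-written regex. It is not corpuslingr's implementation: the `ann` data frame, the `tuple()` helper, and the pattern itself are assumptions for illustration only.

```r
# Toy annotated tokens; values are illustrative, not real annotator output.
ann <- data.frame(
  token = c("They", "drank", "cold", "water", "and", "drinks", "tea"),
  lemma = c("they", "drink", "cold", "water", "and", "drink", "tea"),
  pos   = c("PRP", "VBD", "JJ", "NN", "CC", "VBZ", "NN"),
  stringsAsFactors = FALSE
)

# Build one <token~lemma~pos> tuple per token and join them into one string.
tups <- paste0("<", ann$token, "~", ann$lemma, "~", ann$pos, ">")
text <- paste(tups, collapse = " ")

# Hypothetical helper: assemble a regex for a single tuple from three field regexes.
tuple <- function(form, lemma, pos) paste0("<", form, "~", lemma, "~", pos, ">")
any_field <- "[^~> ]+"

# Pattern: any form of the lemma "drink" tagged as a verb (VB*),
# then zero or more adjectives (JJ*), then one noun (NN*).
pattern <- paste0(
  tuple(any_field, "drink", "VB[A-Z]*"), " ",
  "(?:", tuple(any_field, any_field, "JJ[A-Z]*"), " )*",
  tuple(any_field, any_field, "NN[A-Z]*")
)

hits <- regmatches(text, gregexpr(pattern, text, perl = TRUE))[[1]]

# Strip the lemma and tag fields to recover the surface forms of each match.
forms <- gsub("<([^~]+)~[^>]+>", "\\1", hits)
print(forms)
```

Because every token carries its form, lemma, and tag in one string, a single regex can constrain any mix of the three, which is what makes the mixed form/lemma/part-of-speech patterns listed earlier expressible.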

Summary functions allow users to:

- aggregate search results by text & token frequency,

- view search results in context (kwic),

- create word embeddings/co-occurrence vectors for each search term, and

- specify how search results are aggregated.

Importantly, both search and aggregation functions can be easily applied to multiple (ie, any number of) search queries.

While still in development, the package should be useful to linguists and digital humanists interested in having BYU corpora-like search & summary functionality when working with (moderately-sized) personal corpora, as well as researchers interested in performing finer-grained, more qualitative analyses of language use and variation in context.

A simple shiny demo of search & summary functionality is available here.

Here, we walk through a simple workflow: corpus creation using quicknews, corpus annotation using the cleanNLP package, and annotated corpus search & summary using corpuslingr.

library(tidyverse)

library(cleanNLP)

library(corpuslingr) #devtools::install_github("jaytimm/corpuslingr")

library(quicknews) #devtools::install_github("jaytimm/quicknews")

library(DT)

To demo the search functionality of corpuslingr, we first build a small corpus of current news articles using my quicknews package. We apply the qnews_get_meta/qnews_scrape_web functions across multiple Google News sections to build out the corpus some, and to add a genre-like dimension to the corpus.

topics <- c('nation','world', 'sports', 'science')

corpus <- lapply(topics, function (x) {

quicknews::qnews_get_meta (language="en", country="us", type="topic", search=x)})%>%

bind_rows() %>%

quicknews::qnews_scrape_web ()

The clr_prep_corpus function performs two tasks. It eliminates unnecessary whitespace from the text column of a corpus data frame object. Additionally, it attempts to trick annotators into treating hyphenated words as a single token. With the exception of Stanford's CoreNLP (via cleanNLP), annotators tend to treat hyphenated words as multiple word tokens. For linguists interested in, eg, word formation processes, this is disappointing. There is likely a less hacky way to do this.

corpus <- clr_prep_corpus (corpus, hyphenate = TRUE)

For demo purposes, we use udpipe (via cleanNLP) to annotate the corpus data frame object.

cleanNLP::cnlp_init_udpipe(model_name="english",feature_flag = FALSE, parser = "none")

ann_corpus <- cleanNLP::cnlp_annotate(corpus$text, as_strings = TRUE, doc_ids = corpus$doc_id)

The clr_set_corpus function prepares the annotated corpus for complex search (as defined above) by building <token~lemma~pos> tuples and setting tuple onsets/offsets. Additionally, column names are homogenized using the naming conventions established in the spacyr package. Lastly, the function splits the corpus into a list of data frames by document. This is ultimately a search convenience.

Including text metadata in the meta parameter enables access to text characteristics when aggregating search results.

lingr_corpus <- ann_corpus$token %>%

clr_set_corpus(doc_var='id',

token_var='word',

lemma_var='lemma',

tag_var='pos',

pos_var='upos',

sentence_var='sid',

meta = corpus[,c('doc_id','source','search')])

Some example tuple-ized text:

paste(lingr_corpus$corpus[[1]]$tup[200:204], collapse= " ")

## [1] "<intervene~intervene~VB> <during~during~IN> <a~a~DT> <Presidential~presidential~JJ> <foreign~foreign~JJ>"

clr_desc_corpus is a simple function for describing an annotated corpus, providing some basic aggregate statistics at the corpus, genre, and text levels.

summary <- corpuslingr::clr_desc_corpus(lingr_corpus,doc="doc_id",

sent="sentence_id", tok="token",upos='pos', genre="search")

- Corpus summary:

summary$corpus

## n_docs textLength textType textSent

## 1: 59 61110 8980 2640

- By genre:

summary$genre

## search n_docs textLength textType textSent

## 1: topic_nation 11 12341 2965 563

## 2: topic_sports 18 25469 4105 1207

## 3: topic_world 16 14673 3562 609

## 4: topic_science 14 8627 2426 368

- By text:

head(summary$text)

## doc_id textLength textType textSent

## 1: 1 1124 471 44

## 2: 2 1592 586 83

## 3: 3 755 353 26

## 4: 4 171 110 8

## 5: 5 228 144 15

## 6: 6 317 183 22

The search syntax utilized here is modeled after the syntax implemented in the BYU suite of corpora. A full list of part-of-speech syntax can be viewed here.

library(knitr)

corpuslingr::clr_ref_search_egs %>% kable(escape=FALSE, format = "html")

| type | search\_syntax | example |
|---|---|---|
| Simple form search | lime | lime |
| Simple lemma search | DRINK | drinks, drank, drinking |
| Lemma with POS search | BARK~VERB | barked, barking |
| Simple phrasal search | in the long run | in the long run |
| Phrasal search - POS/form | ADJ and ADJ | happy and healthy, political and economical |
| Phrasal search inc noun phrase | VERB NPHR into VBG | trick someone into believing |
| Phrasal search inc noun phrase | VERB PRP$ way PREP NPHR | make its way through the Senate |
| Suffix search | \*tion | defenestration, nation, retaliation |
| Infix search | \*break\* | breakable, heartbreaking |
| Optional search w/ parens and ? | MD (NEG)? HAVE been | should have been, might not have been |
| Multiple term search w parens and \| | PRON (HOPE\| WISH\| DESIRE) | He hoped, they wish |
| Multiple term search w parens and \| | House (Republicans\| Democrats) | House Republicans, House Democrats |
| Indeterminate wildcard search w brackets and min/max | NPHR BE (\*){1,4} ADJ | He was very, very happy; I'm not sure |
| Multiple optional search | (President)? (Bill)? Clinton | Clinton, President Clinton, Bill Clinton |
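To make the first few rows of the table concrete, here is a minimal base R sketch of how simple query terms could be translated into regexes over `<token~lemma~pos>` tuples. This is a guess at the general idea, not the package's actual translation logic: `pos_classes`, `term_to_regex()`, and `query_to_regex()` are hypothetical names, the tag mappings are simplified assumptions, and parentheses, quantifiers, NPHR, and tags containing regex metacharacters (eg, PRP$) are not handled.

```r
# Hypothetical mapping from coarse query categories to Penn-style tag regexes.
pos_classes <- c(VERB = "VB[DGNPZ]?", ADJ = "JJ[RS]?", NOUN = "NNP?S?")
any_field <- "[^~> ]+"

# "*" in a query term stands for any run of characters within one field.
wild <- function(x) gsub("*", "[^~> ]*", x, fixed = TRUE)

# Translate one query term into a regex over a single <token~lemma~pos> tuple.
term_to_regex <- function(term) {
  parts <- strsplit(term, "~", fixed = TRUE)[[1]]
  word <- parts[1]
  pos <- if (length(parts) == 2) pos_classes[[parts[2]]] else any_field
  if (word %in% names(pos_classes)) {
    # Bare category, eg ADJ: constrain the tag field only.
    return(paste0("<", any_field, "~", any_field, "~", pos_classes[[word]], ">"))
  }
  if (word == toupper(word) && grepl("[A-Z]", word)) {
    # Upper case, eg DRINK or BARK~VERB: match on the lemma field.
    paste0("<", any_field, "~", wild(tolower(word)), "~", pos, ">")
  } else {
    # Anything else, eg lime or *tion: match on the form field.
    paste0("<", wild(word), "~", any_field, "~", pos, ">")
  }
}

# Translate a space-separated query into one regex over the tuple string.
query_to_regex <- function(query) {
  terms <- strsplit(query, " ", fixed = TRUE)[[1]]
  paste(vapply(terms, term_to_regex, character(1)), collapse = " ")
}

# Made-up tuple-ized text to search.
text <- paste(
  "<The~the~DT> <dog~dog~NN> <barked~bark~VBD> <and~and~CC>",
  "<was~be~VBD> <happy~happy~JJ> <and~and~CC> <healthy~healthy~JJ>"
)

find <- function(query) {
  regmatches(text, gregexpr(query_to_regex(query), text, perl = TRUE))[[1]]
}

print(find("BARK~VERB"))
print(find("ADJ and ADJ"))
```

The point of the sketch is only that case and the `~` separator are enough to decide which tuple field a term constrains, so the query stays readable while the generated regex does the matching.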

The clr_search_gramx function searches for all instantiations of a particular lexical pattern/grammatical construction, devoid of context. This enables fairly quick search.

search1 <- "ADJ and (ADV)? ADJ"

lingr_corpus %>%

corpuslingr::clr_search_gramx(search=search1)%>%

select(doc_id, search, token, tag)%>%

slice(1:15)

## # A tibble: 15 x 4

## doc_id search token tag

## <chr> <chr> <chr> <chr>

## 1 1 topic_nation healthy and prepared JJ CC JJ

## 2 1 topic_nation dedicated and outstanding JJ CC JJ

## 3 3 topic_world harsh and brutal JJ CC JJ

## 4 3 topic_world blind and deaf JJ CC JJ

## 5 12 topic_world dangerous and unstable JJ CC JJ

## 6 12 topic_world dangerous and unstable JJ CC JJ

## 7 13 topic_world serious and irregular JJ CC JJ

## 8 16 topic_sports third and fourth JJ CC JJ

## 9 16 topic_sports second and third JJ CC JJ

## 10 16 topic_sports better and worse JJR CC JJR

## 11 16 topic_sports straight and narrow JJ CC JJ

## 12 16 topic_sports frustrating and unavailable JJ CC JJ

## 13 16 topic_sports effective and healthy JJ CC JJ

## 14 17 topic_sports second and third JJ CC JJ

## 15 18 topic_sports contrite and apologetic JJ CC JJ

clr_get_freq is a simple function for calculating text and token frequencies of search term(s). The agg_var parameter allows the user to specify how frequency counts are aggregated.
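For readers unfamiliar with the two counts, the base R sketch below shows one way a total match count and a document count could be computed from a table of search hits. The `hits` data frame is made up, and reading txtf as "total matches" and docf as "documents with at least one match" is an assumption based on the column names in the output; the package may compute them differently.

```r
# Toy search hits: one row per match, with the document it came from.
hits <- data.frame(
  doc_id = c("1", "1", "2", "3", "3", "3"),
  lemma  = c("TURN INTO", "TURN INTO", "TURN INTO",
             "LOOK INTO", "GET INTO", "GET INTO"),
  stringsAsFactors = FALSE
)

# txtf: total number of matches per aggregation key (here, the lemma).
txtf <- tapply(hits$doc_id, hits$lemma, length)

# docf: number of distinct documents containing at least one match.
docf <- tapply(hits$doc_id, hits$lemma, function(x) length(unique(x)))

freq <- data.frame(
  lemma = names(txtf),
  txtf  = as.integer(txtf),
  docf  = as.integer(docf[names(txtf)]),
  stringsAsFactors = FALSE
)

# Most frequent first; ties broken alphabetically.
freq <- freq[order(-freq$txtf, freq$lemma), ]
print(freq)
```

Swapping `hits$lemma` for another column (or an interaction of several) is the toy equivalent of changing the aggregation variable.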

search2 <- "VERB into"

lingr_corpus %>%

corpuslingr::clr_search_gramx(search=search2)%>%

corpuslingr::clr_get_freq(agg_var = c('lemma'), toupper=TRUE)%>%

head()

## lemma txtf docf

## 1: TURN INTO 6 3

## 2: CRASH INTO 3 1

## 3: LOOK INTO 3 3

## 4: FORCE INTO 2 2

## 5: GET INTO 2 2

## 6: GO INTO 2 2

Having included metadata in the call to clr_set_corpus, we can aggregate search results, eg, by Google News topic:

search3 <- "SHOT~NOUN| BALL~NOUN| PLAY~VERB"

lingr_corpus %>%

corpuslingr::clr_search_gramx(search=search3)%>%

corpuslingr::clr_get_freq(agg_var = c('search','token','tag'), toupper=TRUE)%>%

slice(1:15)

## # A tibble: 15 x 5

## search token tag txtf docf

## <chr> <chr> <chr> <int> <int>

## 1 topic_sports SHOT NN 25 2

## 2 topic_sports PLAY VB 21 8

## 3 topic_sports SHOTS NNS 15 2

## 4 topic_sports BALL NN 12 2

## 5 topic_sports PLAYED VBD 10 5

## 6 topic_sports PLAYING VBG 10 4

## 7 topic_sports PLAYED VBN 9 7

## 8 topic_sports PLAY VBP 6 1

## 9 topic_sports PLAYS VBZ 6 4

## 10 topic_world PLAYED VBN 3 1

## 11 topic_nation PLAYING VBG 1 1

## 12 topic_nation PLAYS VBZ 1 1

## 13 topic_science PLAY VB 1 1

## 14 topic_science PLAYING VBG 1 1

## 15 topic_world PLAY VB 1 1

clr_search_context returns search terms with user-specified left and right contexts (LW and RW). Output is a list of two data frames: a BOW (bag-of-words) data frame object and a KWIC (keyword in context) data frame object.
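The left/right window idea can be sketched in a few lines of base R. The `kwic_window()` helper below is hypothetical and works on a plain token vector; it only borrows the LW and RW parameter names from the description above and says nothing about how the package stores or returns its results.

```r
# Made-up token sequence for one document.
tokens <- c("The", "White", "House", "said", "on", "Monday",
            "that", "talks", "would", "resume")

# Hypothetical helper: given a match spanning token positions start..end,
# return up to LW tokens of left context, the match, and up to RW tokens
# of right context.
kwic_window <- function(tokens, start, end, LW = 2, RW = 2) {
  left  <- tokens[seq_len(start - 1)]
  right <- tokens[seq_len(length(tokens) - end) + end]
  data.frame(
    lhs  = paste(tail(left, LW), collapse = " "),
    kwic = paste(tokens[start:end], collapse = " "),
    rhs  = paste(head(right, RW), collapse = " "),
    stringsAsFactors = FALSE
  )
}

# A two-token match near the start of the text: the left window is
# simply truncated at the document boundary.
w <- kwic_window(tokens, start = 2, end = 3, LW = 5, RW = 1)
print(w)
```

A bag-of-words view of the same match would just pool the left and right context tokens and count them, rather than keeping them in order.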

Note that generic noun phrases can be included as a search term (regex below), and can be specified in the query using NPHR.

clr_ref_nounphrase

## [1] "(?:(?:DET )?(?:ADJ )*)?(?:((NOUNX )+|PRON ))"

search4 <- 'NPHR BE (NEG)? VBN'

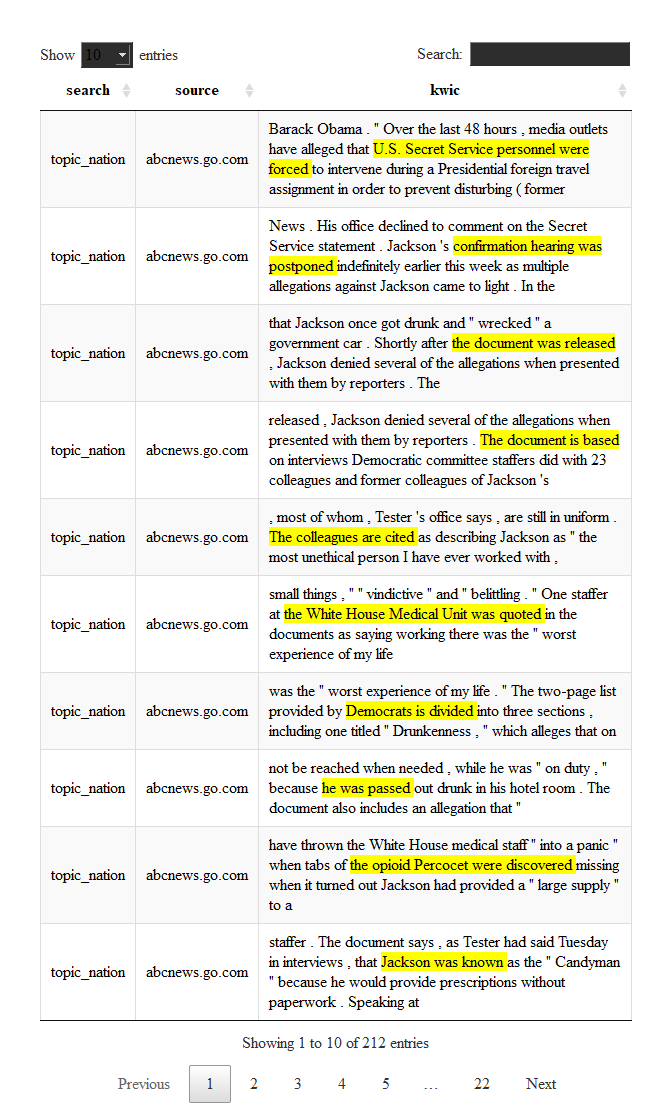

found_egs <- corpuslingr::clr_search_context(search=search4,corp=lingr_corpus,LW=15, RW = 15)

Access KWIC object:

found_egs %>%

corpuslingr::clr_context_kwic(include=c('search', 'source'))%>%

DT::datatable(selection="none",class = 'cell-border stripe', rownames = FALSE,width="100%", escape=FALSE)

clr_context_bow is a function for accessing/aggregating the BOW object. The parameters agg_var and content_only can be used to specify how collocates are aggregated and whether only content words are included, respectively.

search5 <- "White House"

corpuslingr::clr_search_context(search=search5,corp=lingr_corpus, LW=20, RW=20)%>%

corpuslingr::clr_context_bow(content_only = TRUE, agg_var = c('searchLemma', 'lemma'))%>%

head()

## searchLemma lemma cofreq

## 1: WHITE HOUSE JACKSON 10

## 2: WHITE HOUSE SAY 7

## 3: WHITE HOUSE TRUMP 7

## 4: WHITE HOUSE DOCUMENT 3

## 5: WHITE HOUSE JUST 3

## 6: WHITE HOUSE KIM 3

clr_search_keyphrases extracts key phrases from each text in a corpus based on tf-idf weights. The methods and logic underlying this function are described in more detail here.

The regex for key phrase search:

clr_ref_keyphrase

## [1] "(ADJ )*(NOUNX )+((PREP )(ADJ )*(NOUNX )+)?"

The user can specify the number of key phrases to extract, how to aggregate key phrases, how to output key phrases, and whether or not to use jitter to break ties among top n key phrases.

library(knitr)

lingr_corpus %>%

corpuslingr::clr_search_keyphrases(n=5,

key_var ='lemma',

flatten=TRUE,

jitter=TRUE,

include = c('doc_id','search','source')) %>%

slice(1:10) %>%

kable(escape=FALSE, format = "html")

| doc\_id | search | source | keyphrases |
|---|---|---|---|
| 1 | topic\_nation | abcnews.go.com | Jackson \| Secret Service \| allegation \| document \| Tester |
| 2 | topic\_sports | mmajunkie.com | Bellator \| MMA \| BMMA \| fight \| Danis |
| 3 | topic\_world | people.com | Otto \| complaint \| North Korea \| son \| family |
| 4 | topic\_sports | profootballtalk.nbcsports.com | Patriots \| Brown \| no. \| contribution \| pm EDT Getty |
| 5 | topic\_sports | profootballtalk.nbcsports.com | Bradford \| Rosen \| league \| pm \| injury history |
| 6 | topic\_sports | profootballtalk.nbcsports.com | Petty \| jet \| pm \| talent evaluator \| Namath |
| 7 | topic\_sports | profootballtalk.nbcsports.com | nuke \| only medium of expression \| Seahawks article \| existence \| football field |
| 8 | topic\_world | time.com | India \| Modi \| China \| Beijing \| world |
| 9 | topic\_world | bbc.com | chemical \| ban \| restriction \| be \| environment |
| 10 | topic\_world | bbc.com | Ms Rudd \| target \| Guardian \| home secretary \| memo |
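For intuition about the tf-idf weighting mentioned above, here is a minimal base R sketch that ranks candidate phrases within each document. It uses one common textbook formulation (relative term frequency times the log of inverse document frequency), which may differ from what the package does; the `phrases` list and the `top_phrases()` helper are made up for illustration, and the phrase extraction step is assumed to have already happened.

```r
# Toy candidate phrases per document (assumed already extracted by a phrase regex).
phrases <- list(
  d1 = c("secret service", "document", "document", "allegation"),
  d2 = c("fight", "fight", "document"),
  d3 = c("north korea", "family", "document")
)

n_docs <- length(phrases)

# Document frequency: in how many documents each phrase occurs at least once.
docf <- table(unlist(lapply(phrases, unique)))

# Hypothetical helper: top n phrases of one document by tf-idf weight.
top_phrases <- function(x, n = 2) {
  tf <- table(x) / length(x)                       # relative term frequency
  terms <- names(tf)
  idf <- log(n_docs / as.numeric(docf[terms]))     # inverse document frequency
  w <- sort(setNames(as.numeric(tf) * idf, terms), decreasing = TRUE)
  names(w)[seq_len(min(n, length(w)))]
}

print(lapply(phrases, top_phrases))
```

Note that "document" occurs in every toy text, so its weight is zero and it never ranks as a key phrase, while phrases with equal weights tie. Ties like these are presumably what the jitter option described above is meant to break.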