TinyHNSW is a tiny, simple vector database. It weighs in at a measly few hundred lines of code. It's built on a straightforward (but not fast) implementation of HNSW in Python with minimal dependencies. It has an associated set of tutorials that build up to understanding how HNSW works, and how you can build your own TinyHNSW.

tinyhnsw is NOT production-quality.

Compared to literally any standard implementation, it's slow and probably buggy.

If you want to use an Approximate Nearest Neighbor library in a real application, consider something like FAISS.

- Introduction

- Nearest Neighbor Search

- An Overview of HNSW

- Skip Lists

- Navigable Small Worlds

- HNSW

- Limitations

- Filtering and Hybrid Search

- Multimodal Retrieval with CLIP

- Searching Text with Sentence Transformers

- Nearest Neighbor Search

- Skip Lists

- Navigable Small Worlds

- HNSW

- Filtering and Hybrid Search

- Multimodal Retrieval with CLIP

- Searching Text with Sentence Transformers

With the disclaimers out of the way, here is how you use it/set it up.

To install tinyhnsw, run the following command:

pip install tinyhnswThis will install the library and all its dependencies (numpy, networkx, scipy, tqdm).

from tinyhnsw import HNSWIndex

import numpy

vectors = numpy.random.randn(100, 10)

index = HNSWIndex(d=10)

index.add(vectors)

print(index.ntotal)

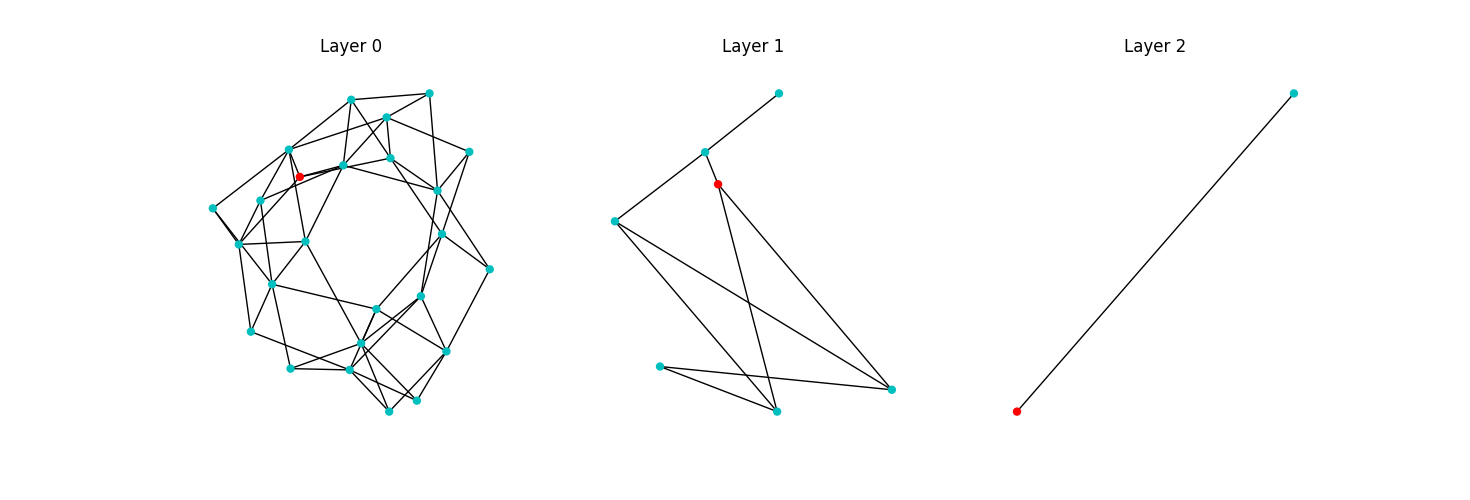

# => 100You can also visualize each layer of the HNSW graph using the following code:

from tinyhnsw.visualization import visualize_hnsw_index

# ... set up index here

visualize_hnsw_index(index)Which will generate a representation like the following:

You can evaluate the full nearest neighbors index with the following command:

from tinyhnsw.utils import load_sift, evaluate

from tinyhnsw import FullNNIndex

data, queries, labels = load_sift()

index = FullNNIndex(128)

index.add(data)

D, I = index.search(queries, k=10)

print(f"Recall@1: {evaluate(labels, I[:, 0])}")On my M2 MacBook Air with numpy=1.26.2, that runs in 0.25s and results in a recall@1 of 98%.

📝 As part of understanding how HNSW works, the tutorial walks you through how skip lists work and how to implement one. However, this implementation is not particularly robust and only works with integer keys. It's there for teaching purposes, as understanding skip lists will really help understand how HNSW works.

You can use the skip lists as follows:

from tinyhnsw.skip_list import SkipList

list = [3, 2, 1, 7, 14, 9, 6]

s = SkipList(list)

print(s)Which will return something like the following (but not exactly, it's a random data structure after all):

2 | 2 3 9

1 | 2 3 6 9 14

0 | 1 2 3 6 7 9 14

You have a few basic operations:

s.find(3)

# => Node(value=3, pointers=[...])

s.delete(3)

# => None ; removes item from skiplist

s.insert(5)

# => None ; inserts the element 5

s.tolist()

# => [1, 2, 5, 6, 7, 9, 14]📝 The second part of understanding how HNSW works is understanding how NSWs work. Again, we provide a teaching implementation in this repo, but it's not meant for much more than teaching.

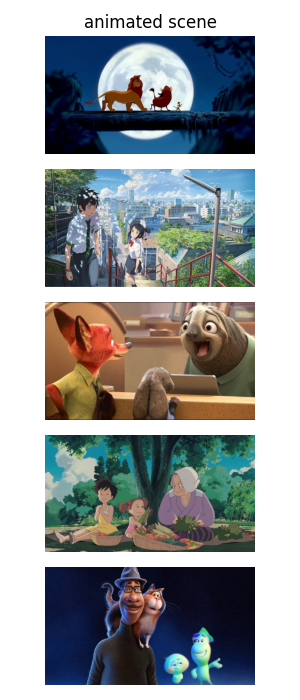

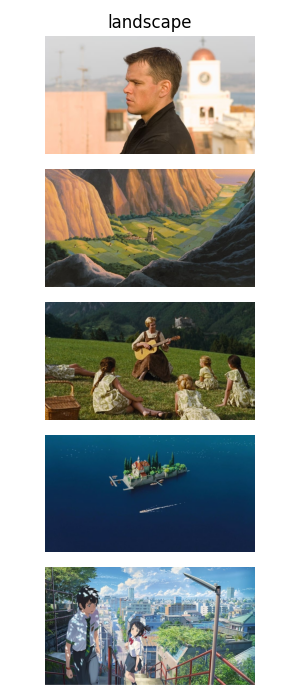

python examples/multimodal_retrieval/multimodal_retrieval.py "animated scene"There are a few different kinds of tests in this repo:

- correctness tests

- timing tests

- accuracy tests

To run the correctness tests, simply run:

poetry run pytestMake sure you've downloaded the data:

python tinyhnsw/utils.pyWhich will download the SIFT10K dataset to the data/ folder.

On my machine, index creation for 10k vectors to get to 100% recall takes 22 seconds.

We use SIFT10k to evaluate the different indexes, scoring them with Recall@1 (on my machine, with a random seed):

| Index | Recall@1 |

|---|---|

FullNNIndex |

1.00 |

HNSWIndex (simple) |

1.00 |

HNSWIndex (heuristic) |

1.00 |