
This project is part of the Data Science Nanodegree Program by Udacity, in collaboration with Figure Eight. The dataset contains pre-labelled tweets and messages from real-life disaster events. The project builds a Natural Language Processing (NLP) model that categorizes these messages.


The project is divided into the following key sections:

- ETL pipeline - Extracts and processes data from the source, and saves it to a SQLite database

- ML pipeline - Builds a machine learning pipeline that trains on the data to classify text messages into various categories

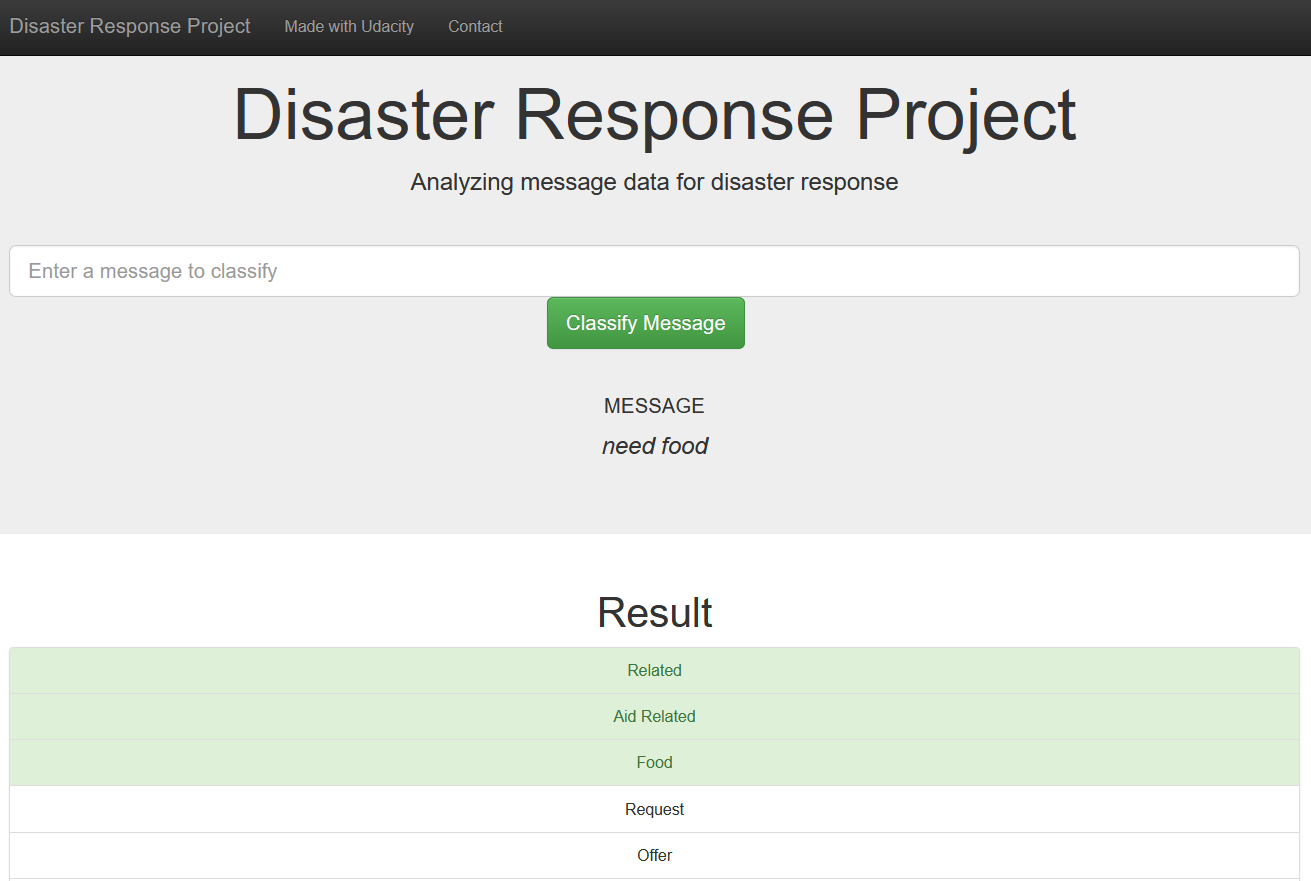

- Web App - Generates and displays model predictions for a user-entered message in real time
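The ETL step can be sketched with the Python standard library alone (`csv`, `sqlite3`) instead of the pandas/SQLAlchemy stack the project uses. This is a minimal sketch, not the code in `process_data.py`. It assumes the Figure Eight CSV layout: a messages file with `id`, `message` and `genre` columns, and a categories file in which each row's labels are one semicolon-separated string such as `related-1;request-0`. The function names and the `messages` table name are hypothetical.

```python
import csv
import io
import sqlite3


def parse_categories(raw):
    """Split an assumed 'name-value;name-value' string into {name: int value}."""
    labels = {}
    for item in raw.split(";"):
        name, _, value = item.rpartition("-")
        labels[name] = int(value)
    return labels


def run_etl(messages_file, categories_file, conn, table="messages"):
    """Merge messages with their categories on `id`, drop duplicates, write to SQLite.

    Column names (`id`, `message`, `genre`, `categories`) and the table name are
    assumptions. Returns the number of rows written.
    """
    # Extract: index messages by id so that categories can be joined onto them.
    messages = {row["id"]: row for row in csv.DictReader(messages_file)}

    # Transform: parse each category string and keep the first row seen per id.
    merged, seen = [], set()
    for row in csv.DictReader(categories_file):
        msg = messages.get(row["id"])
        if msg is None or row["id"] in seen:
            continue  # skip ids without a message, and duplicate rows
        seen.add(row["id"])
        merged.append((row["id"], msg["message"], msg.get("genre", ""),
                       parse_categories(row["categories"])))
    if not merged:
        return 0

    # Load: one INTEGER column per category, named after the first row's labels.
    names = list(merged[0][3])
    columns = ", ".join(f'"{name}" INTEGER' for name in names)
    conn.execute(f'CREATE TABLE IF NOT EXISTS "{table}" '
                 f"(id TEXT PRIMARY KEY, message TEXT, genre TEXT, {columns})")
    placeholders = ", ".join("?" * (3 + len(names)))
    conn.executemany(
        f'INSERT OR REPLACE INTO "{table}" VALUES ({placeholders})',
        [(i, m, g, *(labels.get(n, 0) for n in names)) for i, m, g, labels in merged],
    )
    conn.commit()
    return len(merged)


if __name__ == "__main__":
    # Tiny in-memory demo; real runs would pass open(path, newline="") file objects.
    msgs = io.StringIO("id,message,original,genre\n1,We need water,,direct\n")
    cats = io.StringIO("id,categories\n1,related-1;water-1\n")
    with sqlite3.connect(":memory:") as conn:
        print(run_etl(msgs, cats, conn))
```

Keeping the first row per `id` is one simple de-duplication policy; the actual script may drop duplicates differently.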

Requirements:

- Python 3.9+

- ML libraries: NumPy, pandas, scikit-learn, NLTK

- Other libraries: SQLAlchemy, joblib, Flask, Plotly

To clone the git repository:

`git clone https://github.com/jeena72/disaster-response-pipeline.git`


Run the following commands in the project's root directory to set up the database, train the model, and save it:

- To run the ETL pipeline, which cleans the data and stores the processed data in the database:

`python data/process_data.py data/disaster_messages.csv data/disaster_categories.csv data/DisasterResponse.db`

- To run the ML pipeline, which loads the data from the database, trains the classifier, and saves it as a pickle file:

`python models/train_classifier.py data/DisasterResponse.db models/classifier.pkl`

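The kind of multi-label classifier that `train_classifier.py` trains can be sketched with scikit-learn: TF-IDF features feed a `MultiOutputClassifier`, which fits one random forest per category so that a message can receive several labels at once. This is a minimal sketch under assumptions, not the project's tuned model. The regex `tokenize` function is a hypothetical stand-in for NLTK-based tokenization (it avoids downloading NLTK corpora), and the estimator and its parameters are illustrative choices.

```python
import re

from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.multioutput import MultiOutputClassifier
from sklearn.pipeline import Pipeline


def tokenize(text):
    """Lowercase, replace anything but letters and digits with spaces, and split.

    Hypothetical stand-in for an NLTK tokenizer/lemmatizer.
    """
    return re.sub(r"[^a-z0-9]", " ", text.lower()).split()


def build_model(n_estimators=50, random_state=42):
    """TF-IDF features followed by one RandomForest per category (assumed setup)."""
    return Pipeline([
        # token_pattern=None avoids the warning newer scikit-learn versions emit
        # when a custom tokenizer is supplied.
        ("tfidf", TfidfVectorizer(tokenizer=tokenize, token_pattern=None)),
        ("clf", MultiOutputClassifier(
            RandomForestClassifier(n_estimators=n_estimators, random_state=random_state))),
    ])


if __name__ == "__main__":
    messages = ["We need water", "Please send water",
                "Storm damaged roads", "Roads blocked after storm"]
    # One row per message, one 0/1 column per hypothetical category:
    # related, water, infrastructure.
    labels = [[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1]]
    model = build_model().fit(messages, labels)
    print(model.predict(["water needed urgently"]))
```

Wrapping the vectorizer and classifier in one `Pipeline` means the whole object can be saved and later reloaded by the web app as a single pickle.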


Run the following command from inside the `app/` directory to start the web app:

`python run.py`

Then go to http://127.0.0.1:3001/ in a browser.
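The prediction flow behind the web app can be sketched as a small Flask service on port 3001. This is a minimal sketch, not the project's `run.py`. The `/go` route, the `query` parameter, the category names, and the `KeywordModel` stand-in are all hypothetical; the real app renders the HTML templates in `app/templates/` and uses the trained classifier from `models/classifier.pkl` rather than a keyword rule.

```python
from flask import Flask, jsonify, request

# Hypothetical category names; the real app would read them from the database columns.
CATEGORIES = ["related", "request", "water"]


class KeywordModel:
    """Hypothetical stand-in for the pickled classifier, so the sketch runs on its own."""

    def predict(self, texts):
        rows = []
        for text in texts:
            lowered = text.lower()
            rows.append([1,
                         int("need" in lowered or "help" in lowered),
                         int("water" in lowered)])
        return rows


app = Flask(__name__)
# The real app would load the trained pipeline instead,
# e.g. joblib.load("../models/classifier.pkl") (path assumed).
model = KeywordModel()


@app.route("/go")
def go():
    """Classify the user's message and return one 0/1 flag per category."""
    query = request.args.get("query", "")
    flags = model.predict([query])[0]
    return jsonify(query=query, results=dict(zip(CATEGORIES, (int(f) for f in flags))))


if __name__ == "__main__":
    app.run(host="127.0.0.1", port=3001)
```

Because the model only needs a `predict` method that takes a list of strings, swapping the stand-in for the loaded scikit-learn pipeline does not change the route.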

Key files:

- `app/templates/*`: HTML templates for the web app

- `data/process_data.py`: Extract, Transform, Load (ETL) script for data cleaning, feature extraction, and storing data in a SQLite database

- `models/train_classifier.py`: model-fitting script that loads the data, trains a model, and saves it as a `.pkl` file

- `app/run.py`: script for launching the Flask web app

Acknowledgements:

- Udacity for the Data Science Nanodegree Program

- Figure Eight for providing the relevant dataset to train the model

Screenshots:

- An example of message categorization (predicted categories highlighted in green)

- Web app home page with visualizations of the distributions in the dataset

- Sample run of `train_classifier.py` showing precision, recall, etc. for each category
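The per-category precision and recall in that report treat each category as its own binary problem. A minimal standard-library sketch, assuming 0/1 label matrices with one row per message and one column per category; these are the positive-class figures that scikit-learn's `precision_score` and `recall_score` return by default for binary labels. The function name and toy data are hypothetical.

```python
def per_category_scores(y_true, y_pred, categories):
    """Return {category: (precision, recall, f1)} for 0/1 label matrices (rows = messages)."""
    scores = {}
    for j, name in enumerate(categories):
        pairs = [(t[j], p[j]) for t, p in zip(y_true, y_pred)]
        tp = sum(1 for t, p in pairs if t == 1 and p == 1)
        fp = sum(1 for t, p in pairs if t == 0 and p == 1)
        fn = sum(1 for t, p in pairs if t == 1 and p == 0)
        # Report 0.0 when a ratio is undefined (no predicted or no actual positives).
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[name] = (precision, recall, f1)
    return scores


if __name__ == "__main__":
    truth = [[1, 0], [1, 1], [0, 1]]
    preds = [[1, 0], [0, 1], [1, 1]]
    for name, (p, r, f) in per_category_scores(truth, preds, ["related", "water"]).items():
        print(f"{name:10s} precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```

Scoring each column separately matters here because many disaster categories are rare, so a single overall accuracy can look high even when rare categories are never predicted.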