Parameter server is distributed machine learning framework. It targets cloud-computing situations where machines are possibly unreliable, jobs may get preempted, data may be lost, and where network latency and temporary workloads lead to a much more diverse performance profile. In other words, we target real cloud computing scenarios applicable to Google, Baidu, Amazon, Microsoft, etc. rather than low utilization-rate, exclusive use, high performance supercomputer clusters.

- Ease of use. The globally shared parameters are represented as (potentially sparse) vectors and matrices, which are more convenient data structures for machine learning applications than the widely used (key,value) store or tables. High-performance and convenient multi-threaded linear algebra operations, such as vector-matrix multiplication between parameters and local training data, are provided to facilitate developing applications.

- Efficiency. Communication between nodes is asynchronous. Importantly, synchronization does not block computation. This framework allows the algorithm designer to balance algorithmic convergence rate and system efficiency, where the best trade-off depends on data, algorithm, and hardware.

- Elastic Scalability. New nodes can be added without restarting the running framework. This property is desirable, e.g. for streaming sketches or when deploying a parameter server as an online service that must remain available for a long time.

- Fault Tolerance and Durability. Conversely, node failure is inevitable, particularly at large scale using commodity servers. We use an optimized data replication architecture that efficiently stores data on multiple server nodes to enable fast (in less than 1 second) recovery from node failure.

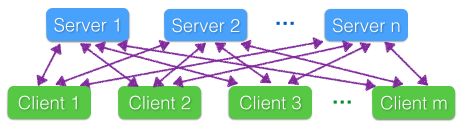

The parameter server architecture has two classes of nodes: Each server node maintains a partition of the globally shared parameters. They communicate with each other to replicate and/or to migrate parameters for reliability and scaling. The client nodes perform the bulk of the computation. Each client typically stores locally a portion of the training data, computing local statistics such as gradients. Clients communicate only with the server nodes, updating and retrieving the shared parameters. Clients may be added or removed.

Parameter server provides several globally shared data structures, for example,

(sparse) vectors and matrices. The class Container is the base class of all

these structures. It provides two simple functions to do communication. Push

sends out local modifications to other nodes, while Pull retrieves

modifications from the others. These two functions are non-blocking, if the

user specified consistency model is satisfied. All control information is set in

Header.

Status Push(const Header& h);

Status Pull(const Header& h);A particular data structure may provides more convenient functions to avoid

explicitly construct Header. For example, Vectors could store several

sparse vectors (namely a thin sparse matrix).

Status PushPull(KeyRange key_range = KeyRange::All(),

VecList push_vec_list = {0},

bool push_delta = kDelta,

VecList pull_vec_list = {0},

bool pull_delta = kValue,

uid_t dest = kServer);A typical usage is that, we first construct 2 vectors, the first one stores the gradient, while the second one has the weight. Then each client write local computed gradient into the first one, push these values into all server nodes, and then retrieve the modification of weight from the server. Then we can write it as

Vectors<double> W("my_vec", p, 2);

// write local gradient into W.vec(0)

W.PushPull(KeyRange::All(), {0}, kValue, {1}, kDelta, kServer);TODO

We are now on developing a total new version, which is quite different and contains much more features than the previous versions the authors developed at Yahoo, Google, Baidu and CMU. However, the current codes are not be easily deployed yet, we hope to release a beta version after the winter break. If you would like to run it for your projects, please wait for our beta release.

TODO

Binary format data is often more efficient than plain text data, because of more compact representation, no tokenization is required, easy to seek to a particular row/instance.

A row-majored sparse matrix stores the nonzero entries in each row sequentially. For example, consider the following the matrix

[ 1 2 0 0 ]

[ 0 3 9 0 ]

[ 0 1 4 0 ]Then it is stored by three binary files

name.rowcnt = [ 0 2 4 6 ] // array of offsets of first nonzero element of a row

name.colidx = [ 0 1 1 2 1 2 ] // array of column index of each element

name.value = [ 1 2 3 9 1 4 ] // array of non-zero element valueTODO

- zeromq A socket library.

- gflags Google’s flag processing library, used for parsing commandline options

- glogs Google’s log library

- gtest Google’s code test library

- protobuf Google’s serialization library, used for serializing push/pull flags

All these library could be installed by make third_party_all

compiler required gcc >= 4.7 or LLVM 3.3, tested under Ubuntu 12.04, 12.10

Mu Li, Li Zhou, Zichao Yang, Aaron Li, Fei Xia, David Andersen and Alexander Smola. Parameter Server for Distributed Machine Learning, Big Learning Workshop, NIPS 2013