This repo contains the code of our paper:

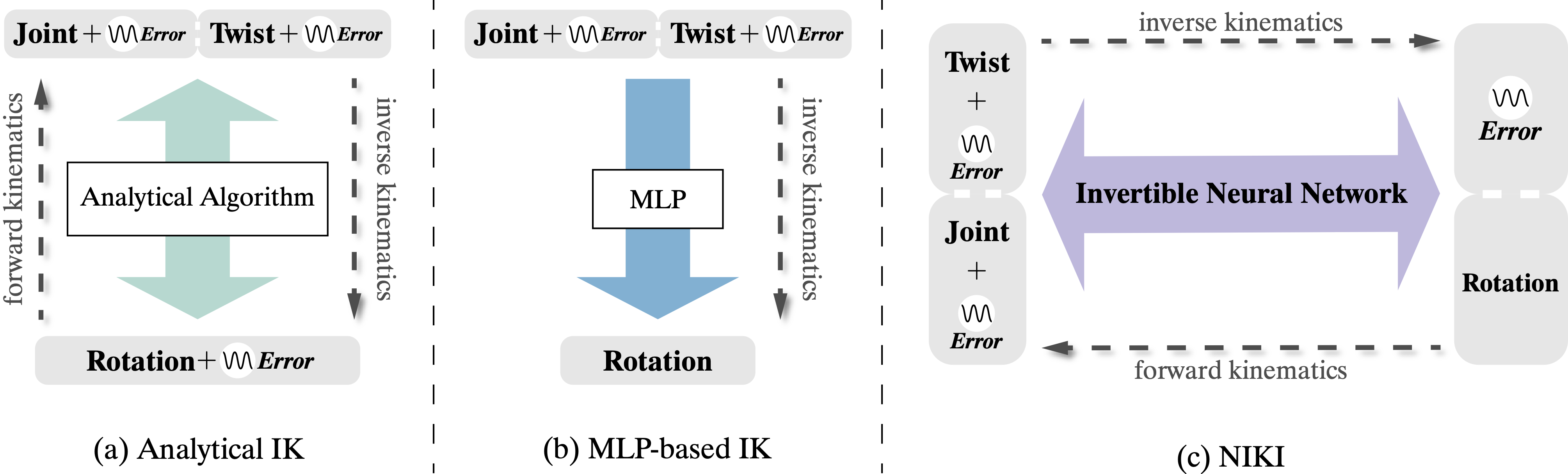

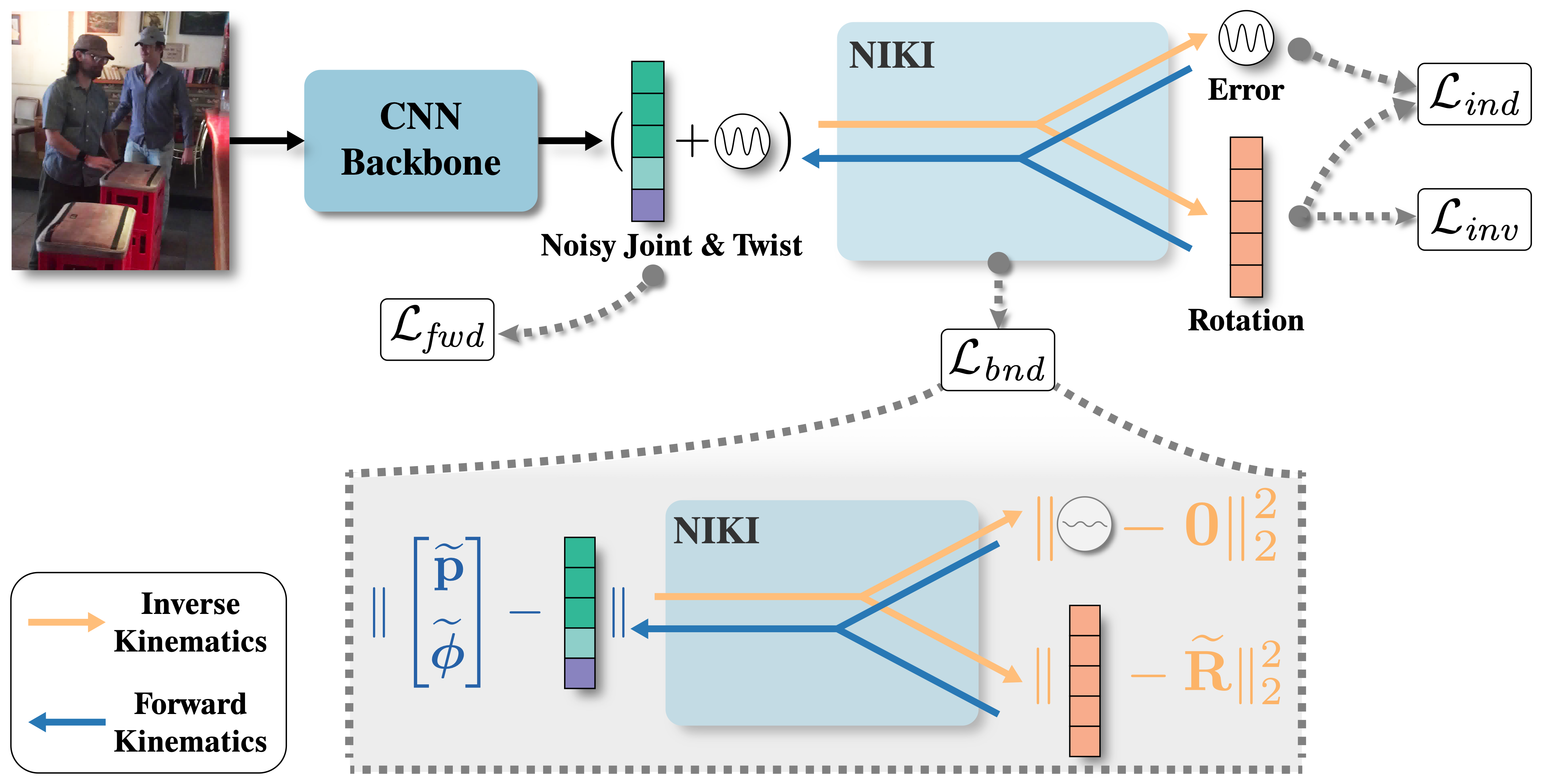

NIKI: Neural Inverse Kinematics with Invertible Neural Networks for 3D Human Pose and Shape Estimation

Jiefeng Li*, Siyuan Bian*, Qi Liu, Jiasheng Tang, Fan Wang, Cewu Lu

In CVPR 2023

# 1. Create a conda virtual environment.

```bash
conda create -n niki python=3.8 -y
conda activate niki
```

# 2. Install PyTorch

```bash
conda install pytorch==1.13.0 torchvision==0.14.0 torchaudio==0.13.0 pytorch-cuda=11.6 -c pytorch -c nvidia
```
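The steps above pin Python 3.8 and PyTorch 1.13.0 built against CUDA 11.6. A quick way to confirm that the active `niki` environment picked up these versions is a small Python check like the one below. This is a minimal sketch, not part of this repo; `version_matches` and `check_environment` are hypothetical helper names, and the script only reports what it finds.

```python
# Hypothetical environment check, not part of the NIKI codebase.
import sys
from importlib.util import find_spec


def version_matches(installed: str, expected: str) -> bool:
    """Compare versions while ignoring local build tags such as "+cu116"."""
    return installed.split("+", 1)[0] == expected


def check_environment(expected_python=(3, 8), expected_torch="1.13.0") -> dict:
    """Report whether the active environment matches steps 1 and 2."""
    report = {
        "python": ".".join(map(str, sys.version_info[:3])),
        "python_ok": tuple(sys.version_info[:2]) == tuple(expected_python),
        "torch": None,
        "torch_ok": False,
        "cuda_available": False,
    }
    if find_spec("torch") is None:
        return report  # PyTorch is not installed in this environment
    import torch

    report["torch"] = str(torch.__version__)
    report["torch_ok"] = version_matches(str(torch.__version__), expected_torch)
    report["cuda_available"] = torch.cuda.is_available()
    report["cuda_build"] = torch.version.cuda  # CUDA version PyTorch was built with; None for CPU-only builds
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Run it with the `niki` environment active. `torch_ok: False` suggests revisiting step 2, while `cuda_available: False` can also mean no GPU or driver is visible.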

# 3. Install PyTorch3D (Optional, only for visualization)

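```bash
conda install -c fvcore -c iopath -c conda-forge fvcore iopath
conda install -c bottler nvidiacub
# Without an SSH key on GitHub, use git+https://github.com/facebookresearch/pytorch3d.git instead
pip install git+ssh://git@github.com/facebookresearch/pytorch3d.git
```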
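Since PyTorch3D is only needed for visualization, code that renders results can check for it and fall back gracefully when this step was skipped. A minimal sketch with hypothetical helper names (`pytorch3d_available`, `should_visualize`); whether the NIKI scripts themselves guard the import this way is not stated here.

```python
# Hypothetical helpers, not part of the NIKI codebase.
from importlib.util import find_spec


def pytorch3d_available() -> bool:
    """Return True if PyTorch3D can be imported in the current environment."""
    return find_spec("pytorch3d") is not None


def should_visualize(requested: bool) -> bool:
    """Enable rendering only when it was requested and PyTorch3D is installed.

    Falls back to no visualization instead of failing with an ImportError.
    """
    if requested and not pytorch3d_available():
        print("PyTorch3D not found; skipping visualization.")
        return False
    return requested
```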

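# 4. Pull our code

```bash
# Without an SSH key on GitHub, clone https://github.com/Jeff-sjtu/NIKI.git instead
git clone git@github.com:Jeff-sjtu/NIKI.git
cd NIKI
# Install the remaining Python dependencies from inside the repo
pip install -r requirements.txt
```

- Download our pretrained model from [Google Drive | Baidu (code: `z4iv`)].
- Download parsed annotations from [Google Drive].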

Train from scratch:

```bash
python scripts/train.py --cfg configs/NIKI-ts.yaml --exp-id niki-ts
```

Evaluate a checkpoint:

```bash
python scripts/validate.py --cfg configs/NIKI-ts.yaml --ckpt niki-ts.pth
```

To run the demo, download the pretrained HybrIK and single-stage NIKI models from the OneDrive link, and put them in the `exp/` folder.
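Before running the demo command, it can help to confirm that the downloaded models actually landed in `exp/`. The sketch below assumes the checkpoints are `.pth` files (as `niki-ts.pth` is) somewhere under `exp/` and that the demo needs at least two of them (HybrIK and NIKI). The exact filenames the demo expects are not stated here, and `list_checkpoints` and `check_demo_models` are hypothetical helpers.

```python
# Hypothetical pre-demo check, not part of the NIKI codebase.
from pathlib import Path
from typing import List


def list_checkpoints(exp_dir: str = "exp") -> List[str]:
    """Return sorted paths (relative to exp_dir) of every *.pth file under exp_dir."""
    root = Path(exp_dir)
    if not root.is_dir():
        return []  # folder missing: nothing downloaded yet
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*.pth"))


def check_demo_models(exp_dir: str = "exp", min_count: int = 2) -> bool:
    """Assumption: the demo needs at least two checkpoints (HybrIK + single-stage NIKI)."""
    found = list_checkpoints(exp_dir)
    if len(found) < min_count:
        print(f"Expected at least {min_count} .pth files under {exp_dir}/, found: {found}")
        return False
    return True


if __name__ == "__main__":
    print(list_checkpoints())
    print("ready" if check_demo_models() else "missing models")
```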

```bash
python scripts/demo.py --video-name {VIDEO-PATH} --out-dir {OUTPUT-DIR}
```

If our code helps your research, please consider citing the following paper:

```bibtex
@inproceedings{li2023niki,
  title     = {{NIKI}: Neural Inverse Kinematics with Invertible Neural Networks for 3D Human Pose and Shape Estimation},
  author    = {Li, Jiefeng and Bian, Siyuan and Liu, Qi and Tang, Jiasheng and Wang, Fan and Lu, Cewu},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month     = {June},
  year      = {2023},
}
```