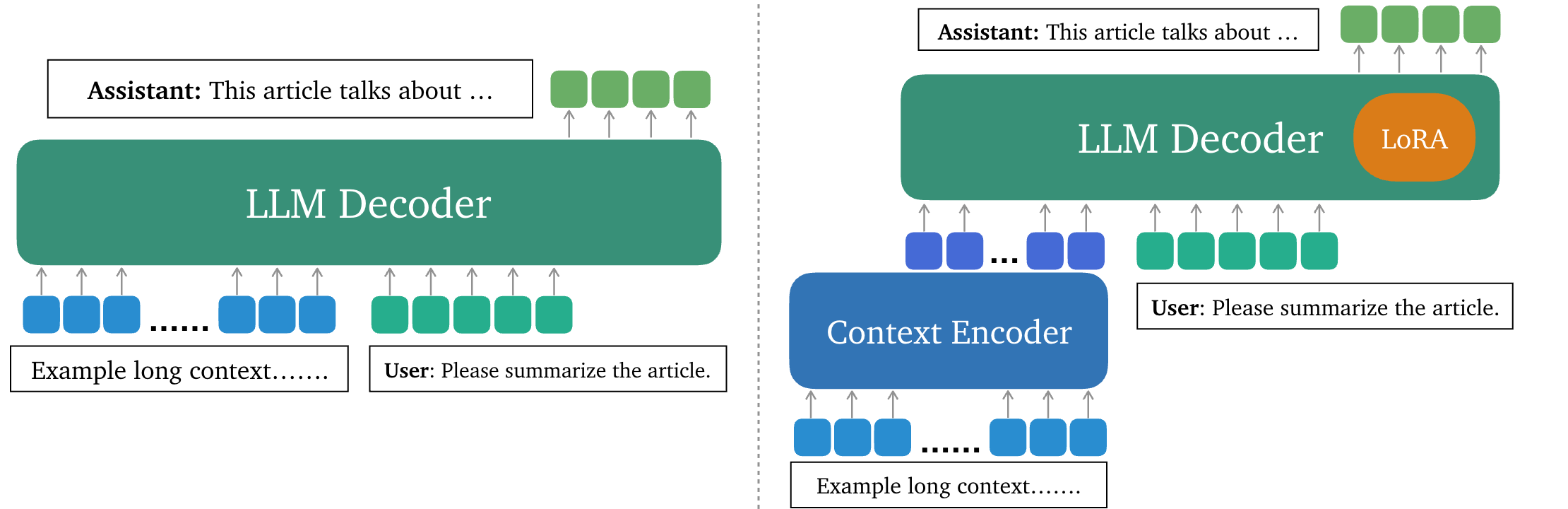

LLoCO is a technique that learns documents offline through context compression and in-domain parameter-efficient finetuning with LoRA, enabling LLMs to handle long contexts efficiently.
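LoRA keeps the base model's weights frozen and trains only a low-rank update to them. The sketch below shows the standard LoRA forward pass, y = Wx + (alpha / r) * B(Ax), in plain Python. It is a conceptual illustration of the technique and not LLoCO's finetuning code. The helper names `matvec` and `lora_forward` are hypothetical.

```python
# Minimal LoRA sketch (illustrative, not LLoCO's implementation):
# the frozen weight W is adapted by a low-rank product B @ A scaled by alpha / r.
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float) -> Vector:
    """Compute y = W x + (alpha / r) * B (A x).

    W: frozen base weight, shape (d_out, d_in)
    A: trainable down-projection, shape (r, d_in)
    B: trainable up-projection, shape (d_out, r)
    """
    r = len(A)                       # LoRA rank
    base = matvec(W, x)              # output of the frozen layer
    delta = matvec(B, matvec(A, x))  # low-rank update; B @ A is never materialized
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


if __name__ == "__main__":
    W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # d_out = 2, d_in = 3
    A = [[1.0, 1.0, 1.0]]                  # rank r = 1
    B = [[0.0], [0.0]]                     # LoRA initializes B to zero
    x = [1.0, 2.0, 3.0]
    print(lora_forward(W, A, B, x, alpha=16.0))  # [1.0, 2.0]: a zero B leaves W's output unchanged
```

Only A and B are trained, so a layer with a d_out x d_in weight gains r * (d_in + d_out) trainable parameters instead of d_in * d_out, which is what makes per-domain finetuning cheap.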

Set up a new environment and run:

```bash
pip install -r requirements.txt
```

Use the following commands to download the QuALITY dataset. Other datasets are loaded from HuggingFace and are downloaded automatically during data loading.

```bash
cd data
wget https://raw.githubusercontent.com/nyu-mll/quality/main/data/v1.0.1/QuALITY.v1.0.1.htmlstripped.train
wget https://raw.githubusercontent.com/nyu-mll/quality/main/data/v1.0.1/QuALITY.v1.0.1.htmlstripped.dev
cd ..  # return to the repository root; later commands use ./data/... paths
```

First, generate summary embeddings for the datasets. An example bash script is stored in scripts/preproc_emb.sh, which preprocesses the training set of QuALITY:

```bash
python3 preproc_embs.py \
    --emb_model_name "autocomp" \
    --dataset quality \
    --split train \
    --data_path ./data/QuALITY.v1.0.1.htmlstripped.train \
    --out_path ./embeddings/quality_train_embs.pth \
    --truncation False
```

This script generates summary embeddings for the QuALITY training set and stores them in the ./embeddings folder. Embedding generation for other datasets works similarly.
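To make the compression step concrete, here is a minimal sketch of the bookkeeping involved, assuming the context encoder follows the AutoCompressors design of splitting a document into segments and producing a fixed number of summary embeddings per segment. The helper names and the values of `segment_len` and `summary_per_segment` are hypothetical; they are not read from `preproc_embs.py`.

```python
# Hypothetical bookkeeping for segment-based context compression, assuming an
# AutoCompressor-style encoder that emits a fixed number of summary embeddings
# per segment. segment_len and summary_per_segment are illustrative values only.
import math
from typing import List, Sequence


def split_into_segments(token_ids: Sequence[int], segment_len: int) -> List[List[int]]:
    """Split a token sequence into consecutive segments of at most segment_len tokens."""
    if segment_len <= 0:
        raise ValueError("segment_len must be positive")
    return [list(token_ids[i:i + segment_len]) for i in range(0, len(token_ids), segment_len)]


def num_summary_embeddings(num_tokens: int, segment_len: int, summary_per_segment: int) -> int:
    """Every segment, including a shorter final one, yields summary_per_segment embeddings."""
    return math.ceil(num_tokens / segment_len) * summary_per_segment


def compression_ratio(num_tokens: int, segment_len: int, summary_per_segment: int) -> float:
    """Original token count divided by the number of summary embeddings."""
    n = num_summary_embeddings(num_tokens, segment_len, summary_per_segment)
    if n == 0:
        raise ValueError("cannot compute a ratio for an empty document")
    return num_tokens / n


if __name__ == "__main__":
    doc = list(range(10_000))  # stand-in for 10,000 token ids
    print(len(split_into_segments(doc, segment_len=2_000)))  # 5
    print(num_summary_embeddings(10_000, 2_000, 50))          # 250
    print(compression_ratio(10_000, 2_000, 50))               # 40.0
```

In this design the LLM attends to the stored summary embeddings rather than to the raw tokens, which is where the reduction in context length comes from; a partially filled final segment still costs a full set of summary embeddings.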

Here is an example bash script to finetune on the QuALITY dataset. This script is in scripts/finetune_quality.sh.

```bash
torchrun --nproc_per_node=4 finetune_quality.py \
    --output_dir output/lloco_quality \
    --run_name lloco_quality \
    --data_path ./data/QuALITY.v1.0.1.htmlstripped.train \
    --embedding_path ./embeddings/quality_train_embs.pth \
    ...
```

Below is an example bash script for running inference on the validation sets; it is contained in script/inference.sh. Evaluation results are stored in `--out_path`, and the finetuned model is specified by `--peft_model`.

```bash
python3 inference.py \
    --model_name_or_path meta-llama/Llama-2-7b-chat-hf \
    --dataset_name qmsum \
    --eval_mode autocomp \
    --out_path ./eval/qmsum_lloco.json \
    --peft_model output/lloco_qmsum \
    --embedding_path ./embeddings/qmsum_val_embs.pth \
    ...
```

After obtaining the prediction files, use the following evaluation scripts in the /eval folder to get the scores for each dataset.

Evaluate QuALITY:

```bash
python3 quality_evaluator.py --quality_path {quality_path} --pred_path {prediction_file}
```

Evaluate QMSum, Qasper, and NarrativeQA:

```bash
python3 scroll_evaluator.py --split validation --dataset_name {dataset_name} --predictions {prediction_file} --metrics_output_dir .
```

Evaluate HotpotQA:

```bash
python3 hotpot_evaluator.py --pred_path {prediction_file}
```

TODO:

- Release finetuning and inference code.

- Release pre-trained LoRA weights on HuggingFace.

- Integrate with vLLM.

If you find LLoCO useful or relevant to your project and research, please kindly cite our paper:

```bibtex
@article{tan2024lloco,
  title   = {LLoCO: Learning Long Contexts Offline},
  author  = {Sijun Tan and Xiuyu Li and Shishir Patil and Ziyang Wu and Tianjun Zhang and Kurt Keutzer and Joseph E. Gonzalez and Raluca Ada Popa},
  year    = {2024},
  journal = {arXiv preprint arXiv:2404.07979}
}
```

We referred to AutoCompressors for the context encoder implementation.