'DLR (Deep Learning Routines)', a part of the DPU (Deep Learning Processing Unit), is a collection of high-level synthesizable C/C++ routines for deep learning inference networks.

All contents are provided as is, WITHOUT ANY WARRANTY, and NO TECHNICAL SUPPORT will be provided for problems that might arise.


- Overview

1.1 Note

1.2 Prerequisites

1.3 Conventions

1.4 How to build

1.5 How to use

- Deep-Learning Routines

2.1 Activation

2.1.1. Hyperbolic tangent

2.1.2. Leaky ReLU

2.1.3. ReLU

2.1.4. Sigmoid

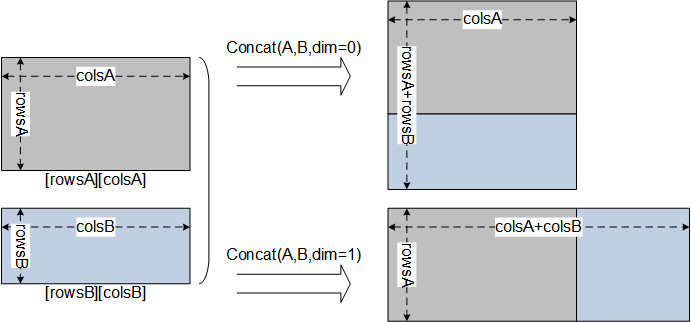

2.2 Concatenation

2.2.1 Concat2d

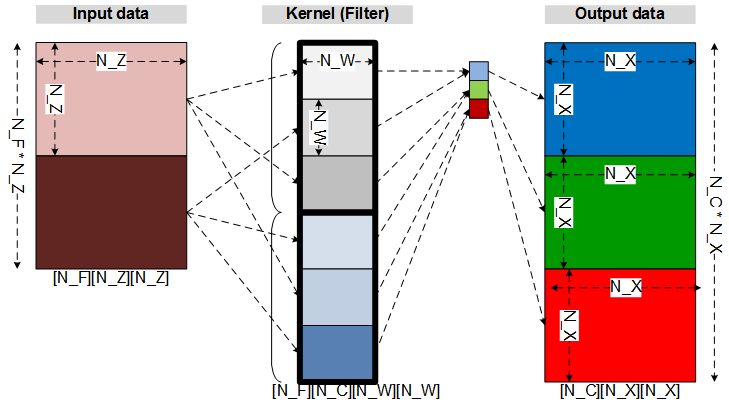

2.3 Convolution

2.3.1 Convolution2d

2.4 Deconvolution

2.4.1 Deconvolution2d

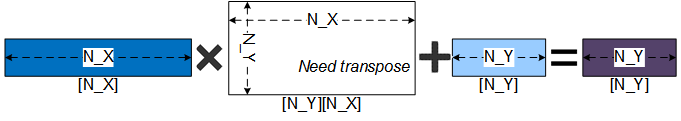

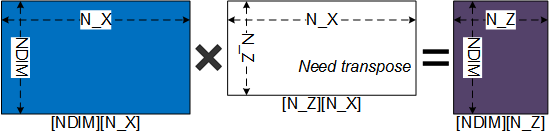

2.5 Linear (Fully connected)

2.6 Normalization

2.6.1 Batch normalization

2.7 Pooling

2.7.1 Average pooling

2.7.2 Max pooling

- Projects

3.1 Project: LeNet-5

3.2 Project: Tiny YOLO-V2 for VOC

- Other things

4.1 Acknowledgment

4.2 Authors and contributors

4.3 License

4.4 Revision history


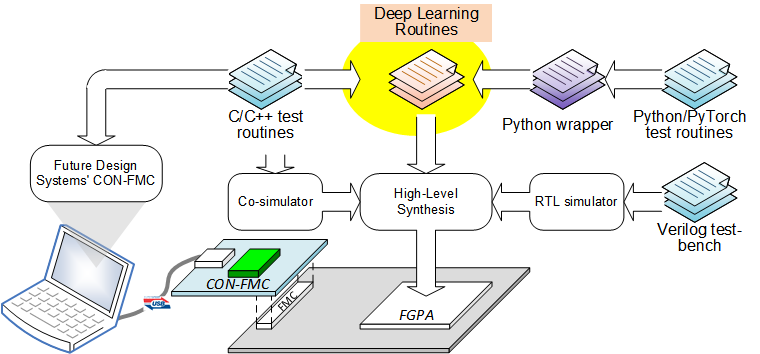

As shown in the picture, DLR routines are verified in C/C++, Python, and PyTorch, and on FPGA.

| Overall framework |
|---|

DLR provides the following routines (some are under development, and more will be added):

- Convolution layer (strict convolution)

- Activation layer (ReLU, Leaky ReLU, hyperbolic tangent, sigmoid)

- Pooling layer (max, average)

- Fully connected layer (linear layer)

- De-convolution layer (transposed convolution layer)

- Batch normalization layer

- Concatenation layer
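For reference, the four activation functions named in the list above have simple element-wise definitions. The sketch below is plain Python written for illustration, not DLR code: the function names and the default 'negative_slope' of 0.01 are assumptions, and the DLR routines may use different names and defaults.

```python
import math


def relu(x):
    """ReLU: pass positive values, clamp the rest to zero."""
    return x if x > 0.0 else 0.0


def leaky_relu(x, negative_slope=0.01):
    """Leaky ReLU: like ReLU, but negative inputs are scaled, not zeroed.

    The default slope of 0.01 is an assumption for this sketch.
    """
    return x if x > 0.0 else negative_slope * x


def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + exp(-x)), output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def hyperbolic_tangent(x):
    """Hyperbolic tangent, output in (-1, 1)."""
    return math.tanh(x)


values = [-2.0, 0.0, 3.0]
print([relu(v) for v in values])        # [0.0, 0.0, 3.0]
print([leaky_relu(v) for v in values])  # [-0.02, 0.0, 3.0]
print(sigmoid(0.0))                     # 0.5
```

Each function works on a single value; applying it to every element of a feature map gives the activation layer.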

The routines have the following highlights:

- Fully synthesizable C code

- Highly parameterized to suit a wide range of uses

- C, Python, PyTorch, Verilog test-benches

- FPGA verified using Future Design Systems’ CON-FMC

Note that the routines are not optimized for computational performance, since they are intended for hardware implementation rather than for software execution. In addition, the routines support inference only, not training.

Version 1.4 uses the 'dlr' namespace.

This program requires the following.

- GNU GCC: C compiler

- PyTorch / Conda / Python

- (Optional, for hardware verification and implementation)

  - HDL simulator: Xilinx Xsim

  - High level synthesis: Xilinx Vivado HLS

This program uses the following conventions.

- DLR routines are defined within the 'dlr' namespace for C++.

- Each DLR routine has a C++ version and a C version; the C++ version uses templates.

- Python wrappers follow the C++ names.

- PyTorch wrappers follow the PyTorch 'torch.nn.functional' names.

Simply do as follows, and the 'include' and 'lib' directories will be ready to use under the 'v1.4' directory.

    $ cd v1.4/src
    $ make clean
    $ make
    $ make install

Specify where the DLR header files reside using the "-I" option of the g++ compiler, where $(DLR_HOME) is the path to the deep-learning routines, such as /home/user/Deep_Learning_Routines/v1.4.

    $ g++ .... -I$(DLR_HOME)/include ....

Specify where the DLR library resides using the "-L" and "-l" options of the g++ linker.

    $ g++ .... -L$(DLR_HOME)/lib -ldlr ....

Refer to the DLR Projects for more details.


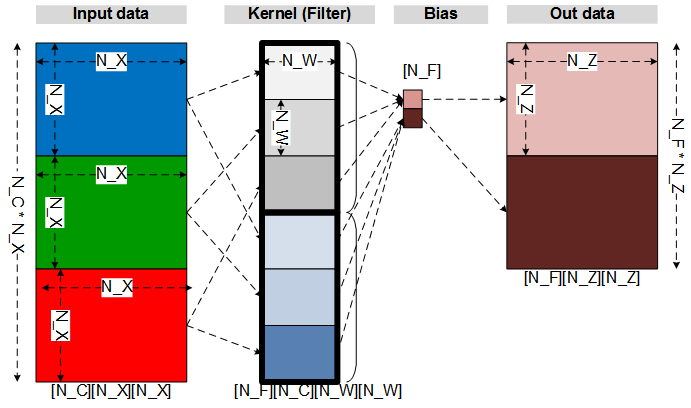

'Convolution2d()' applies a 2D convolution over input data composed of multiple planes (i.e., channels).

| Convolution |
|---|
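The size arguments of a convolution routine ('in_size', 'kernel_size', 'stride', 'padding', 'out_size') depend on each other. The plain-Python sketch below assumes the usual relation out_size = (in_size + 2*padding - kernel_size) / stride + 1 with integer division, and assumes flat row-major arrays in the layouts documented for 'Convolution2d()' (for example out_channel x out_size x out_size). It also assumes the routine computes a cross-correlation without flipping the kernel, as PyTorch's 'conv2d' does. The names 'conv_out_size' and 'convolution2d_ref' are hypothetical and are not part of DLR.

```python
def conv_out_size(in_size, kernel_size, stride=1, padding=0):
    """Output width/height of a square convolution (assumed relation)."""
    return (in_size + 2 * padding - kernel_size) // stride + 1


def convolution2d_ref(in_data, kernel, bias, in_size, kernel_size,
                      in_channel, out_channel, stride=1, padding=0):
    """Reference 2D convolution over flat row-major lists.

    in_data: in_channel x in_size x in_size
    kernel : out_channel x in_channel x kernel_size x kernel_size
    bias   : out_channel, or None
    Returns (out_data, out_size); out_data is
    out_channel x out_size x out_size.
    """
    out_size = conv_out_size(in_size, kernel_size, stride, padding)
    out_data = [0.0] * (out_channel * out_size * out_size)
    for oc in range(out_channel):
        for oy in range(out_size):
            for ox in range(out_size):
                acc = bias[oc] if bias is not None else 0.0
                for ic in range(in_channel):
                    for ky in range(kernel_size):
                        for kx in range(kernel_size):
                            iy = oy * stride + ky - padding
                            ix = ox * stride + kx - padding
                            # Positions outside the input act as zero padding.
                            if 0 <= iy < in_size and 0 <= ix < in_size:
                                x = in_data[(ic * in_size + iy) * in_size + ix]
                                w = kernel[((oc * in_channel + ic) * kernel_size
                                            + ky) * kernel_size + kx]
                                acc += x * w
                out_data[(oc * out_size + oy) * out_size + ox] = acc
    return out_data, out_size


# One 3x3 input channel, one 2x2 kernel of ones, no padding, stride 1.
in_data = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
kernel = [1.0, 1.0, 1.0, 1.0]
out_data, out_size = convolution2d_ref(
    in_data, kernel, [0.0], in_size=3, kernel_size=2,
    in_channel=1, out_channel=1)
print(out_size, out_data)  # 2 [12.0, 16.0, 24.0, 28.0]
```

A reference like this is slow, but it is handy for checking the 'out_size' value passed to a routine and for comparing results on small inputs.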

C++ routines use templates to support user-specific data types.

    template<class TYPE=float>
    void Convolution2d
    (       TYPE     *out_data    // out_channel x out_size x out_size
    , const TYPE     *in_data     // in_channel x in_size x in_size
    , const TYPE     *kernel      // out_channel x in_channel x kernel_size x kernel_size
    , const TYPE     *bias        // out_channel
    , const uint16_t  out_size    // only for square matrix
    , const uint16_t  in_size     // only for square matrix
    , const uint8_t   kernel_size // only for square matrix
    , const uint16_t  bias_size   // out_channel
    , const uint16_t  in_channel  // number of input channels
    , const uint16_t  out_channel // number of filters (kernels)
    , const uint8_t   stride
    , const uint8_t   padding=0
    #if !defined(__SYNTHESIS__)
    , const int       rigor=0     // check rigorously when 1
    , const int       verbose=0   // verbose level
    #endif
    );

C routines are built from the template of the corresponding C++ routine.

    extern void Convolution2dInt
    (       int      *out_data    // out_channel x out_size x out_size
    , const int      *in_data     // in_channel x in_size x in_size
    , const int      *kernel      // out_channel x in_channel x kernel_size x kernel_size
    , const int      *bias        // bias per kernel
    , const uint16_t  out_size    // only for square matrix
    , const uint16_t  in_size     // only for square matrix
    , const uint8_t   kernel_size // only for square matrix
    , const uint16_t  bias_size   // number of biases, it should be the same as out_channel
    , const uint16_t  in_channel  // number of input channels
    , const uint16_t  out_channel // number of filters (kernels)
    , const uint8_t   stride      // stride default 1
    , const uint8_t   padding     // padding default 0
    #if !defined(__SYNTHESIS__)
    , const int       rigor       // check rigorously when 1
    , const int       verbose     // verbose level
    #endif
    );

    extern void Convolution2dDouble( ); // arguments are the same as Convolution2dInt, but data type is 'double' instead of 'int'
    extern void Convolution2dFloat( ); // arguments are the same as Convolution2dInt, but data type is 'float' instead of 'int'

The 'Convolution2d()' Python wrapper takes NumPy arrays as arguments, and 'out_data' carries the calculated result. It returns 'True' on success or 'False' on failure.

    Convolution2d( out_data # out_channel x out_size x out_size
                 , in_data # in_channel x in_size x in_size
                 , kernel # out_channel x in_channel x kernel_size x kernel_size
                 , bias=None # out_channel
                 , stride=1
                 , padding=0
                 , rigor=False
                 , verbose=False)

The 'conv2d()' PyTorch wrapper takes PyTorch tensors as arguments and returns the calculated result. It calls the Python wrapper after converting the PyTorch tensors to NumPy arrays.

    conv2d( input # in_minibatch x in_channel x in_size x in_size
          , weight # out_channel x in_channel x kernel_size x kernel_size
          , bias=None # out_channel
          , stride=1
          , padding=0
          , dilation=1
          , groups=1
          , rigor=False
          , verbose=False)

A linear (fully connected) layer applies a linear transformation to the input data:

$$y = x W^{T} + b$$
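The linear transformation above can be checked by hand with a few lines of plain Python. This is a sketch for illustration, not DLR code: 'linear_ref' is a hypothetical name, and the weight is assumed to be stored as out_features rows of in_features values, which is what the transpose in the formula implies.

```python
def linear_ref(x, weight, bias=None):
    """Compute y = x * W^T + b for one input vector.

    x     : list of in_features values
    weight: list of out_features rows, each with in_features values
    bias  : list of out_features values, or None
    """
    y = []
    for o, row in enumerate(weight):
        acc = bias[o] if bias is not None else 0.0
        # Dot product of the input with one weight row (one column of W^T).
        for xi, wi in zip(x, row):
            acc += xi * wi
        y.append(acc)
    return y


x = [1.0, 2.0, 3.0]
W = [[1.0, 0.0, -1.0],
     [0.5, 0.5, 0.5]]
b = [0.0, 1.0]
print(linear_ref(x, W, b))  # [-2.0, 4.0]
```

Each output element is one dot product, so a layer with in_features inputs and out_features outputs needs in_features x out_features multiply-accumulate operations.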

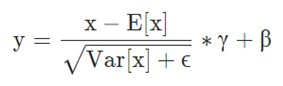

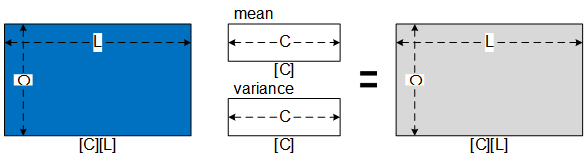

| Batch normalization equation |
|---|
| E[x]: mean, Var[x]: variance, γ: scaling factor, β: bias, ε: small constant for numerical stability |

| Batch normalization |
| :---: |
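Assuming the pictured equation is the usual inference-time form y = (x - E[x]) / sqrt(Var[x] + ε) * γ + β, applied per channel with stored statistics, the computation can be sketched in plain Python as follows. The name 'batch_norm_ref', the list-of-channels layout, and the default ε of 1e-5 are assumptions made for this example and are not taken from DLR.

```python
import math


def batch_norm_ref(x, mean, var, gamma, beta, eps=1e-5):
    """Inference-time batch normalization, one statistic set per channel.

    x    : list of channels, each a flat list of values
    mean : E[x] per channel
    var  : Var[x] per channel
    gamma: scaling factor per channel
    beta : bias per channel
    eps  : small constant added to the variance (assumed default)
    """
    out = []
    for c, channel in enumerate(x):
        # The scale is constant per channel, so compute it once.
        scale = gamma[c] / math.sqrt(var[c] + eps)
        out.append([(v - mean[c]) * scale + beta[c] for v in channel])
    return out


x = [[1.0, 3.0]]
y = batch_norm_ref(x, mean=[2.0], var=[1.0], gamma=[2.0], beta=[0.5], eps=0.0)
print(y)  # [[-1.5, 2.5]]
```

Because the mean, variance, γ, and β are fixed at inference time, the whole operation reduces to one multiplication and one addition per element.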

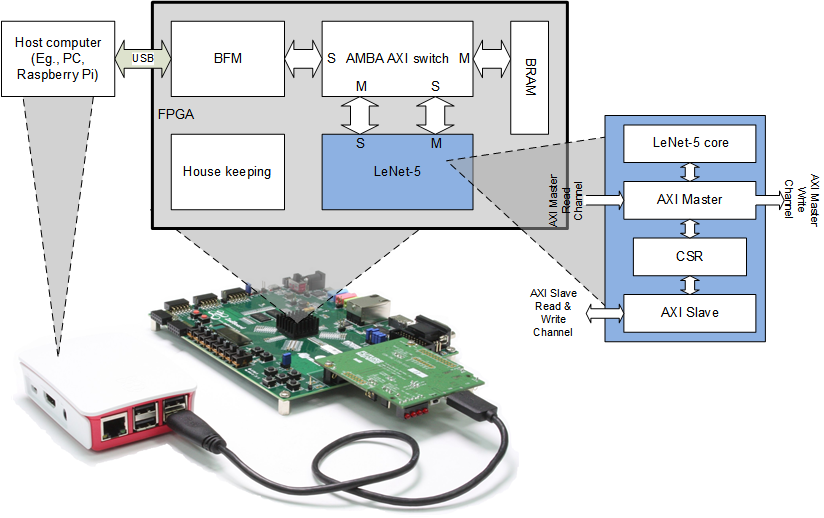

LeNet-5 is a popular convolutional neural network architecture for handwritten and machine-printed character recognition.

Details of this project can be found in DLR Projects: LeNet-5.

| LeNet-5 project on FPGA |
|---|

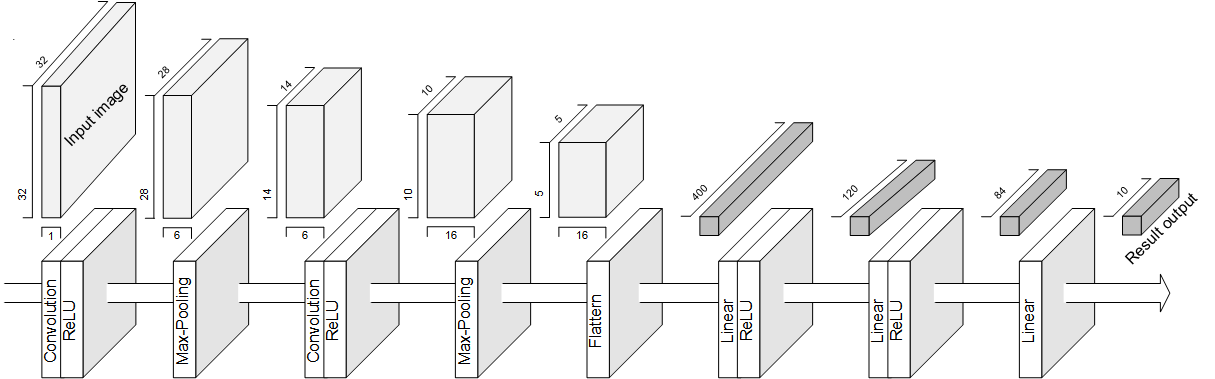

The following picture shows the network structure and its data sizes.

| LeNet-5 network |
|---|

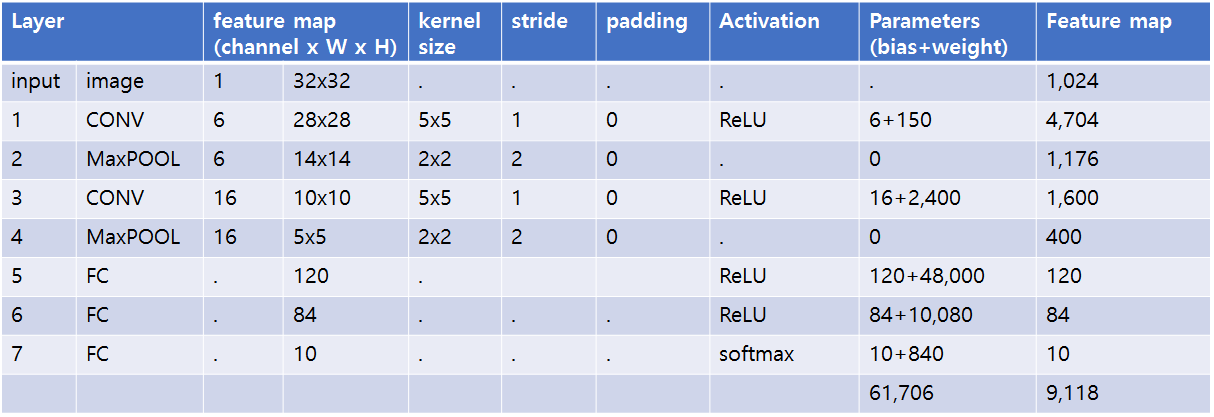

The following table shows a summary of the network and its parameters.

| LeNet-5 network details |
|---|

The picture below shows an overall design flow from model training to FPGA implementation.

| LeNet-5 design flow |
|---|

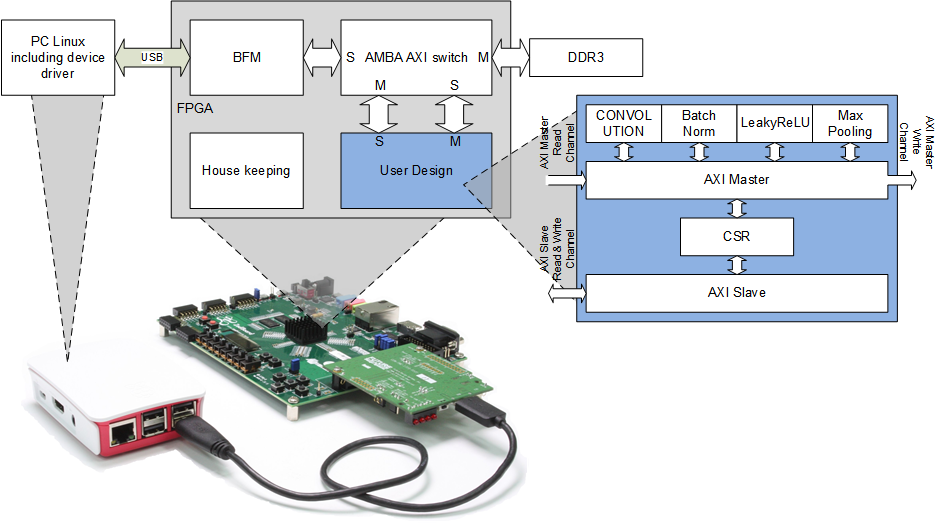

YOLO is a popular convolutional neural network architecture for object detection.

Details of this project can be found in DLR Projects: Tiny YOLO-V2.

| Tiny YOLO-V2 project on FPGA |
|---|

This work was supported in part by KARI (Korea Aerospace Research Institute) under the “Development of deep learning hardware accelerator for microsatellite” (Contract 2019110A35C-00).

Part of this work was carried out through the Handong Global University 2020 Summer Internship program.

Part of this work was supported by ETRI (Electronics and Telecommunications Research Institute) under the “Development and training of FPGA-based AI semiconductor design environment” (Contract EA20202206).

- Ando Ki - Initial work - Future Design Systems

- Seongwon Seo - Future Design Systems

- Kwangsub Jeon - Future Design Systems

- Chae Eon Lim - Future Design Systems

DLR (Deep Learning Routines) and its associated materials are licensed under the 2-clause BSD license to make the program and library useful in open and closed source projects independent of their licensing scheme. Each contributor holds copyright over their respective contribution.

The 2-Clause BSD License

Copyright 2020-2021 Future Design Systems (http://www.future-ds.com)

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

1. Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

- 2021.09.04: v1.4 uses namespace 'dlr'

- 2020.11.12: Released v1.3

- 2020.03.10: Started by Ando Ki (adki at future-ds.com)