YOLOv9 TensorRT deployment (see the video: https://www.youtube.com/watch?v=aWDFtBPN2HM)

This repository provides an API for accelerating YOLOv9 inference deployment with TensorRT, with two interface implementations: C++ and Python. The C++ implementation also uses CUDA programming to accelerate YOLOv9 preprocessing and post-processing for faster end-to-end inference 🔥🔥🔥
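In YOLO-style detectors, the post-processing stage that the C++ API offloads to CUDA is typically a confidence filter followed by non-maximum suppression (NMS), controlled by the `confTreshold` and `nmsTreshold` config values. The CPU sketch below illustrates only that logic. It assumes detections are already decoded into `(box, score, class_id)` tuples with `(x1, y1, x2, y2)` pixel boxes. The helper names `iou` and `postprocess` are hypothetical and are not part of this repository's API.

```python
"""CPU reference sketch of YOLO-style post-processing (hypothetical helpers,
not the repository's CUDA implementation)."""
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Detection = Tuple[Box, float, int]       # (box, score, class_id)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def postprocess(dets: List[Detection], conf_threshold: float = 0.25,
                nms_threshold: float = 0.45) -> List[Detection]:
    """Drop low-confidence detections, then suppress overlapping boxes of the same class."""
    # 1. Confidence filter (plays the role of confTreshold).
    candidates = [d for d in dets if d[1] >= conf_threshold]
    # 2. Visit the highest scores first so each kept box is the best in its neighbourhood.
    candidates.sort(key=lambda d: d[1], reverse=True)
    kept: List[Detection] = []
    for det in candidates:
        # 3. Keep det unless it overlaps an already-kept box of the SAME class
        #    by more than nms_threshold (plays the role of nmsTreshold).
        if all(k[2] != det[2] or iou(k[0], det[0]) <= nms_threshold for k in kept):
            kept.append(det)
    return kept


if __name__ == "__main__":
    raw = [
        ((10, 10, 110, 110), 0.90, 0),    # strong box, class 0
        ((12, 12, 112, 112), 0.80, 0),    # near-duplicate of the first, suppressed
        ((200, 200, 260, 260), 0.60, 0),  # separate object, kept
        ((10, 10, 110, 110), 0.70, 1),    # same region but class 1, kept
        ((300, 300, 320, 320), 0.10, 0),  # below the confidence threshold, dropped
    ]
    for box, score, cls in postprocess(raw):
        print(cls, score, box)
```

The NMS here is class-aware (boxes of different classes never suppress each other). This is a common choice, but it is an assumption of the sketch.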

Clone the YOLOv9 code repository, then download an original model provided by that repository (such as yolov9-c.pt) or train your own model.

```shell
# export onnx
python export.py --weights yolov9-c.pt --simplify --include "onnx"
```

Place the exported ONNX file in the "yolov9-tensorrt/configs" folder and configure the relevant parameters in the "yolov9-tensorrt/configs/yolov9.yaml" file.
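Without `--dynamic`, the exported ONNX model has a fixed input size, so each image must be resized to that size before inference. YOLO pipelines commonly use an aspect-preserving "letterbox" resize with padding. The sketch below computes only the letterbox geometry and the inverse box mapping. It assumes the export.py default input size of 640x640 and centred padding. The names `letterbox_params` and `box_to_original` are hypothetical, and the repository's own (CUDA) preprocessing may differ in details such as rounding or padding colour.

```python
"""Letterbox geometry sketch for a fixed-size YOLO input (hypothetical helpers)."""
from typing import Tuple

# Assumed model input size (export.py default); change it if you exported with another --imgsz.
INPUT_W, INPUT_H = 640, 640

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def letterbox_params(img_w: int, img_h: int,
                     dst_w: int = INPUT_W, dst_h: int = INPUT_H) -> Tuple[float, int, int, int, int]:
    """Return (scale, new_w, new_h, pad_x, pad_y) for an aspect-preserving resize plus centred padding."""
    scale = min(dst_w / img_w, dst_h / img_h)  # largest scale that fits both dimensions
    new_w, new_h = round(img_w * scale), round(img_h * scale)
    pad_x, pad_y = (dst_w - new_w) // 2, (dst_h - new_h) // 2  # left and top padding
    return scale, new_w, new_h, pad_x, pad_y


def _clamp(v: float, hi: float) -> float:
    """Clamp v to the range [0, hi]."""
    return max(0.0, min(float(hi), v))


def box_to_original(box: Box, scale: float, pad_x: int, pad_y: int,
                    img_w: int, img_h: int) -> Box:
    """Map a box from model-input pixels back to original-image pixels (undo padding, then scale)."""
    x1, y1, x2, y2 = box
    return (_clamp((x1 - pad_x) / scale, img_w), _clamp((y1 - pad_y) / scale, img_h),
            _clamp((x2 - pad_x) / scale, img_w), _clamp((y2 - pad_y) / scale, img_h))


if __name__ == "__main__":
    # A 1280x720 frame is scaled by 0.5 to 640x360 and padded by 140 px at the top and bottom.
    scale, new_w, new_h, pad_x, pad_y = letterbox_params(1280, 720)
    print(scale, new_w, new_h, pad_x, pad_y)
    print(box_to_original((0, 140, 640, 500), scale, pad_x, pad_y, 1280, 720))
```

The actual pixel resize and pad would be done with an image library, or on the GPU in the C++ API. The same `scale`, `pad_x` and `pad_y` values must be used in post-processing to map detections back.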

```shell
# move onnx
cd yolov9-Tensorrt
mv yolov9-c.onnx ./configs
```

Modify the parameter configuration in configs/yolov9.yaml:

```yaml
# modify configuration in configs/yolov9.yaml
confTreshold: 0.25 # detection confidence threshold
nmsTreshold: 0.45 # NMS IoU threshold
maxSupportBatchSize: 1 # maximum supported input batch size
quantizationInfer: "FP16" # inference precision: FP32 or FP16
onnxFile: "yolov9-c.onnx" # the ONNX model file currently in use
engineFile: "yolov9-c.engine" # file name of the automatically generated TensorRT inference engine
```

Build the C++ project:

```shell
mkdir build
cd build
cmake ..
make -j4
```

For the Python API, modify the configuration in configs/yolov9py.yaml:

```yaml
# modify configuration in configs/yolov9py.yaml
confTreshold: 0.3 # detection confidence threshold
nmsThreshold: 0.45 # NMS IoU threshold
quantizationInfer: "FP16" # inference precision: FP32 or FP16
onnxFile: "yolov9-c.onnx" # the ONNX model file currently in use
engineFile: "yolov9-c.engine" # file name of the automatically generated TensorRT inference engine
```

The first run generates the TensorRT inference engine (".engine" file) in the configs folder. If the engine has already been generated, it is reused and not generated again.
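The engine-caching behaviour described above can be sketched as follows. This is a hypothetical illustration, not the repository's code. `load_flat_config` is a tiny stdlib-only reader for flat `key: value  # comment` files like configs/yolov9py.yaml; PyYAML's `yaml.safe_load` would be the usual choice. `build_engine` is a placeholder for the real TensorRT ONNX-parse-and-build step, which is not shown.

```python
"""Sketch of 'build the engine once, then reuse it' (hypothetical helpers)."""
import os
from typing import Callable, Dict


def load_flat_config(text: str) -> Dict[str, str]:
    """Parse flat `key: value  # comment` lines; surrounding quotes are stripped.

    Simplification: anything after '#' is treated as a comment, so values
    must not contain '#'.
    """
    cfg: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop the comment and surrounding whitespace
        if not line or ":" not in line:
            continue
        key, value = line.split(":", 1)
        cfg[key.strip()] = value.strip().strip('"').strip("'")
    return cfg


def get_or_build_engine(config_dir: str, cfg: Dict[str, str],
                        build_engine: Callable[[str, str], bytes]) -> bytes:
    """Return serialized engine bytes, building and caching them only when no engine file exists."""
    engine_path = os.path.join(config_dir, cfg["engineFile"])
    if os.path.exists(engine_path):
        # Engine already generated: reuse it instead of rebuilding.
        with open(engine_path, "rb") as f:
            return f.read()
    onnx_path = os.path.join(config_dir, cfg["onnxFile"])
    data = build_engine(onnx_path, cfg.get("quantizationInfer", "FP32"))
    with open(engine_path, "wb") as f:
        f.write(data)  # cache the engine for subsequent runs
    return data


if __name__ == "__main__":
    import tempfile

    sample = ('onnxFile: "yolov9-c.onnx" # onnx model\n'
              'engineFile: "yolov9-c.engine" # cached engine\n'
              'quantizationInfer: "FP16" # FP32 or FP16\n')
    calls = []

    def fake_build(onnx_path: str, precision: str) -> bytes:
        """Stand-in for a real TensorRT build; records each call."""
        calls.append((onnx_path, precision))
        return b"serialized-engine"

    with tempfile.TemporaryDirectory() as d:
        cfg = load_flat_config(sample)
        get_or_build_engine(d, cfg, fake_build)  # first run: builds and writes the file
        get_or_build_engine(d, cfg, fake_build)  # second run: loads the cached file
    print("builds:", len(calls))
```

In this sketch, changing `quantizationInfer` or `onnxFile` does not trigger a rebuild while the cached file exists. Deleting the .engine file (or changing `engineFile`) forces a rebuild.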

Run the C++ demo API:

```shell
# run on an images folder
./demo ../data
```

Run the Python demo API:

```shell
# run on an images folder
python yolov9_trt.py --configs configs --yaml_file yolov9py.yaml --data data
```

This project is based on the following awesome projects:

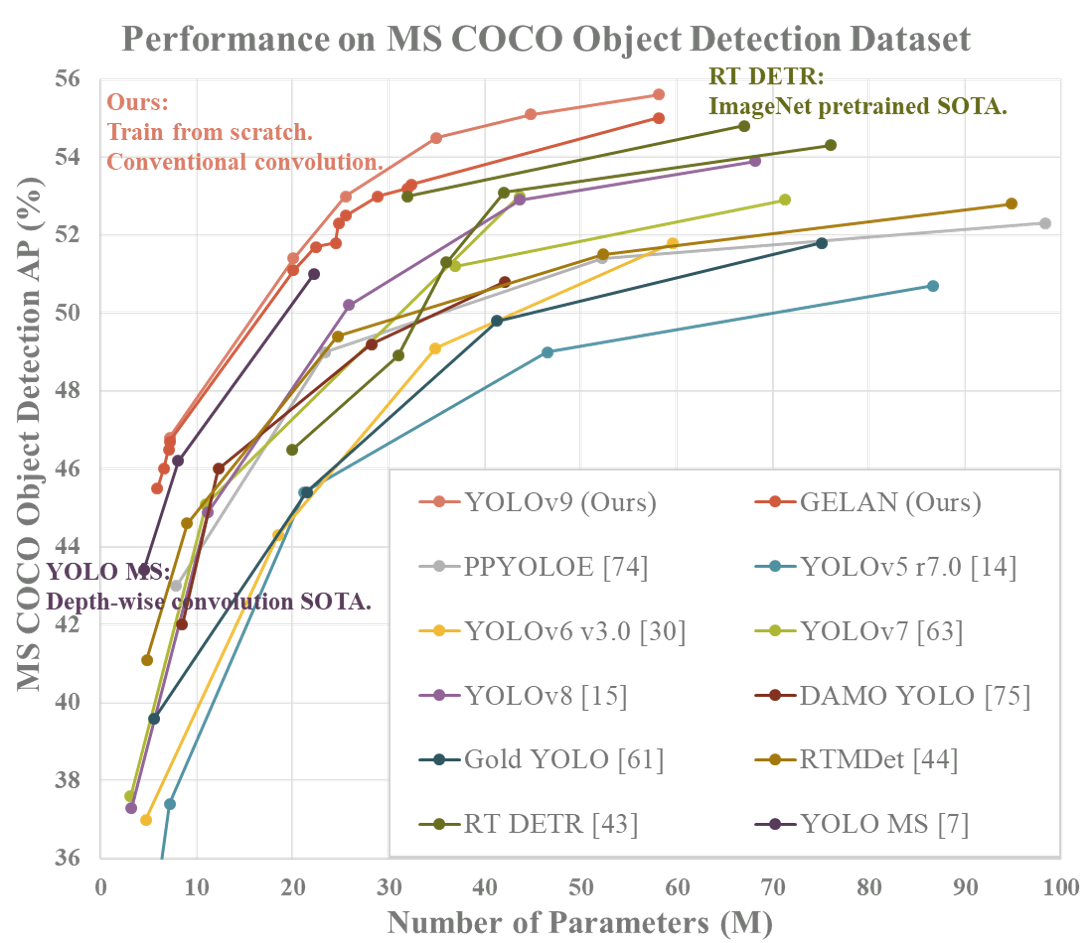

- YOLOv9 - YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information.

- TensorRT - TensorRT samples and API documentation.

```bibtex
@article{wang2024yolov9,
  title={{YOLOv9}: Learning What You Want to Learn Using Programmable Gradient Information},
  author={Wang, Chien-Yao and Liao, Hong-Yuan Mark},
  journal={arXiv preprint arXiv:2402.13616},
  year={2024}
}
```