A Construct-Optimize Approach to Sparse View Synthesis without Camera Pose

Official PyTorch implementation of the ACM SIGGRAPH 2024 paper "A Construct-Optimize Approach to Sparse View Synthesis without Camera Pose".

Kaiwen Jiang, Yang Fu, Mukund Varma T, Yash Belhe, Xiaolong Wang, Hao Su, Ravi Ramamoorthi

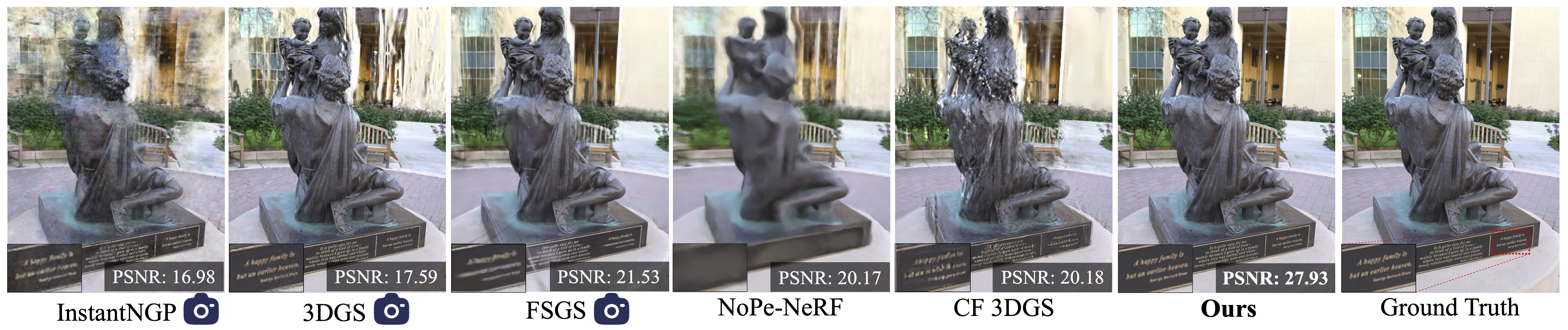

Abstract: Novel view synthesis from a sparse set of input images is a challenging problem of great practical interest, especially when camera poses are absent or inaccurate. Direct optimization of camera poses and usage of estimated depths in neural radiance field algorithms usually do not produce good results because of the coupling between poses and depths, and inaccuracies in monocular depth estimation. In this paper, we leverage the recent 3D Gaussian splatting method to develop a novel construct-and-optimize method for sparse view synthesis without camera poses. Specifically, we construct a solution progressively by using monocular depth and projecting pixels back into the 3D world. During construction, we optimize the solution by detecting 2D correspondences between training views and the corresponding rendered images. We develop a unified differentiable pipeline for camera registration and adjustment of both camera poses and depths, followed by back-projection. We also introduce a novel notion of an expected surface in Gaussian splatting, which is critical to our optimization. These steps enable a coarse solution, which can then be low-pass filtered and refined using standard optimization methods. We demonstrate results on the Tanks and Temples and Static Hikes datasets with as few as three widely-spaced views, showing significantly better quality than competing methods, including those with approximate camera pose information. Moreover, our results improve with more views and outperform previous InstantNGP and Gaussian Splatting algorithms even when using half the dataset.

- Please refer to 3DGS for hardware requirements.

- We ran all experiments on Linux with NVIDIA 3080 GPUs.

- Dependencies:

  - `conda create -n cogs python=3.11`

  - `conda activate cogs`

  - `./install.sh`

- Please refer to fcclip for downloading `fcclip_cocopan.pth`, and put it under `submodules/fcclip`.

- Please refer to QuadTreeAttention for downloading `indoor.ckpt` and `outdoor.ckpt`, and put them under `submodules/QuadTreeAttention`.

We provide a quick demo for you to play with.

- For the Tanks & Temples dataset, please refer to NoPe-NeRF for downloading. You will then need to run `convert.py` to estimate the intrinsics and ground-truth extrinsics for evaluation.

- For the Hiking dataset, please refer to LocalRF for downloading. We truncate each scene to keep the first 50 frames so that 3 training views can cover the whole scene. You will then need to run `convert.py` to estimate the intrinsics and ground-truth extrinsics for evaluation.

- For your own dataset, you need to provide the intrinsics. Note that our model assumes all views share the same intrinsics. You need to prepare the data under the following structure:

- images/ -- Directory containing your images

- sparse/0

  - cameras.bin/cameras.txt -- Intrinsics information in COLMAP format.
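Before preprocessing, it can help to confirm that a dataset folder matches the structure above. The following is a minimal sketch, not part of this repository: `check_dataset_layout` and the list of accepted image extensions are assumptions made for illustration.

```python
from pathlib import Path

# Assumption: common image extensions; adjust to whatever your images use.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def check_dataset_layout(root):
    """Return a list of problems found under `root`; an empty list means the layout looks right."""
    root = Path(root)
    problems = []

    # images/ must exist and hold at least one image file.
    images_dir = root / "images"
    if not images_dir.is_dir():
        problems.append("missing directory: images/")
    elif not any(p.suffix.lower() in IMAGE_SUFFIXES for p in images_dir.iterdir()):
        problems.append("images/ contains no .jpg/.jpeg/.png files")

    # sparse/0 must hold the intrinsics as cameras.bin or cameras.txt.
    sparse_dir = root / "sparse" / "0"
    if not any((sparse_dir / name).is_file() for name in ("cameras.bin", "cameras.txt")):
        problems.append("missing sparse/0/cameras.bin or sparse/0/cameras.txt")

    return problems
```

Calling `check_dataset_layout("<path to the dataset>")` returns an empty list when both parts of the structure are present. It does not validate the contents of the intrinsics file.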

Afterwards, you need to run the following commands to estimate monocular depths and semantic masks.

python preprocess_1_estimate_monocular_depth.py -s <path to the dataset>

python preprocess_2_estimate_semantic_mask.py -s <path to the dataset>

To train a scene, after preprocessing, please use

python train.py -s <path to the dataset> --eval --num_images <number of training views>

After a scene is trained, please first use

python eval.py -m <path to the saved model> --load_iteration <load iteration>

to estimate the extrinsics of testing views. If ground-truth extrinsics are provided, it will calculate the metrics of estimated extrinsics of training views as well.

After registering the testing views, please use render.py and metrics.py to evaluate the novel view synthesis performance.
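The preprocessing, training, and pose-estimation commands above can be assembled in order from a small script. This is a sketch under stated assumptions: `build_pipeline_commands` is a hypothetical helper, the paths and values in the usage section are placeholders, and only the flags shown in this README are used. The arguments of `render.py` and `metrics.py` are not documented here, so they are left out.

```python
import shlex


def build_pipeline_commands(dataset_path, model_path, num_images, load_iteration):
    """Return the documented commands, in execution order, as argument lists."""
    dataset_path, model_path = str(dataset_path), str(model_path)
    return [
        # Step 1: monocular depth estimation.
        ["python", "preprocess_1_estimate_monocular_depth.py", "-s", dataset_path],
        # Step 2: semantic mask estimation.
        ["python", "preprocess_2_estimate_semantic_mask.py", "-s", dataset_path],
        # Step 3: training with a chosen number of training views.
        ["python", "train.py", "-s", dataset_path, "--eval", "--num_images", str(num_images)],
        # Step 4: registration of the testing views.
        ["python", "eval.py", "-m", model_path, "--load_iteration", str(load_iteration)],
    ]


if __name__ == "__main__":
    # Placeholder paths and values; replace them with your own.
    for cmd in build_pipeline_commands("data/my_scene", "output/my_scene", 3, 3000):
        print(shlex.join(cmd))
        # To execute instead of printing: subprocess.run(cmd, check=True)
```

Returning argument lists rather than one shell string keeps paths with spaces intact when the commands are passed to `subprocess.run`.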

As to training, you may need to tweak the hyper-parameters to adapt to different scenes for best performance. For example,

- If the distance between consecutive training frames is relatively large, it is recommended to increase `register_steps` and `align_steps`.

- If the number of training views is relatively large (e.g., 30 or 60 frames), it is recommended to set `add_frame_interval` to be larger than 1, and decrease `register_steps` and `align_steps` to avoid alignment and back-projection for unnecessary frames and speed up the process. It is also recommended to increase the number of iterations.

  - E.g., we use `add_frame_interval = 10`, `register_steps = 50`, `align_steps = 100`, number of iterations `= 30000` for the Horse scene with `60/120` training views.

- If the training frame contains too many primitives, which makes the number of correspondence points located on each primitive relatively low, setting `scale_and_shift_mode` to `whole`, and `depth_diff_tolerance` to `1e9` may produce better results.

- Our method focuses on scene-level sparse view synthesis. If you want to apply this algorithm to object-level sparse view synthesis, you may want to adjust the `BASE_SCALING` and `BASE_SHFIT` in `preprocess_1_estimate_monocular_depth.py` and `scene/cameras.py`. Besides, you may want to adjust the `depth_diff_tolerance` as well. For example, dividing their initial values by 10 should be helpful.
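The settings above can be kept next to your experiment scripts as plain data. The sketch below is only an illustration: how these values are passed to `train.py` is not specified in this README, the `iterations` key name is an assumption, and the numbers in the usage section are placeholders rather than the real initial values.

```python
# Settings reported above for the Horse scene with 60/120 training views.
HORSE_60_VIEWS = {
    "add_frame_interval": 10,
    "register_steps": 50,
    "align_steps": 100,
    "iterations": 30000,  # assumption: key name chosen for illustration
}


def scale_for_object_level(values, factor=10.0):
    """Divide each scene-level value by `factor`, as suggested above for object-level data."""
    return {name: value / factor for name, value in values.items()}


if __name__ == "__main__":
    # Placeholder numbers only; read the real initial values from the source files named above.
    scene_level = {"BASE_SCALING": 100.0, "BASE_SHFIT": 10.0, "depth_diff_tolerance": 5.0}
    print(scale_for_object_level(scene_level))
```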

As to testing, we evaluate at both 3000 and 9000 iterations' checkpoints, and use the better one.

- I don't have intrinsics, and fail to estimate the intrinsics as well. Usually, the intrinsics come with your camera. You may use your camera to carefully and densely capture a scene, and use its estimated intrinsics. Meanwhile, our rasterizer supports differentiation through the intrinsic parameters. You may try to optimize the intrinsic parameters as well if you can manage to get a coarse estimation in the first place (Caution: We haven't tested it yet). Note that, as inherited from 3DGS, only perspective cameras are supported, the principal point must lie at the center, and there is no skew.

- I want to use the SIBR viewer provided by 3DGS to inspect the scene. Since we do not export registered camera parameters into a format that can be read by the SIBR viewer (this may be added later), a work-around is to first apply COLMAP, which sees all the views, to estimate the ground-truth extrinsics, and then ensure the extrinsics of the first frame in our pipeline align with those in COLMAP by commenting out L55-56 and uncommenting L58-59 in `./utils/camera_utils.py`. If you want to evaluate the pose metrics, it is also necessary to align the pose of the first frame.

- I want to speed up the refinement process. Currently, our refinement process utilizes the same rasterizer as that in the construction phase. Since we just use its differentiability of camera parameters, it is possible to remove unnecessary parts (i.e., ray-sphere intersection) in the rasterizer during the refinement to alleviate control divergence and speed up the training.
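For the first question above, a coarse intrinsics file can be written by hand once you know the image size and an approximate field of view. The sketch below emits a COLMAP text-format `cameras.txt` with one `PINHOLE` camera (parameters `fx fy cx cy`), placing the principal point at the image center as required. The helper names, the resolution, and the field of view are assumptions for illustration. Whether this repository's loader accepts a hand-written file has not been verified here.

```python
import math


def focal_from_fov(size_px, fov_deg):
    """Focal length in pixels for a pinhole camera with the given field of view along one axis."""
    return 0.5 * size_px / math.tan(math.radians(fov_deg) / 2.0)


def pinhole_cameras_txt(width, height, fx, fy, camera_id=1):
    """Return COLMAP cameras.txt content for one PINHOLE camera with a centered principal point."""
    # Principal point at the image center; the PINHOLE model has no skew term.
    cx, cy = width / 2.0, height / 2.0
    return (
        "# Camera list with one line of data per camera:\n"
        "#   CAMERA_ID, MODEL, WIDTH, HEIGHT, PARAMS[]\n"
        "# Number of cameras: 1\n"
        f"{camera_id} PINHOLE {width} {height} {fx} {fy} {cx} {cy}\n"
    )


if __name__ == "__main__":
    # Placeholder resolution and field of view; substitute your camera's values.
    width, height = 1920, 1080
    fx = focal_from_fov(width, fov_deg=60.0)
    print(pinhole_cameras_txt(width, height, fx, fx), end="")
```

Saving the printed text as `sparse/0/cameras.txt` gives a starting point that matches the data structure described earlier. Because all views are assumed to share the same intrinsics, a single camera entry is enough.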

This project is built upon 3DGS. We also utilize FC-CLIP, MariGold, and QuadTreeAttention. We thank the authors for their great repos.

@article{COGS2024,
    title={A Construct-Optimize Approach to Sparse View Synthesis without Camera Pose},
    author={Jiang, Kaiwen and Fu, Yang and Varma T, Mukund and Belhe, Yash and Wang, Xiaolong and Su, Hao and Ramamoorthi, Ravi},
    journal={SIGGRAPH},
    year={2024}
}