By Kan Yip Keng, Lin Mei An, Yang Zi Yun, Yew Kai Zhe

The SemEval-2021 Shared Task NLP CONTRIBUTION GRAPH (a.k.a. ‘the NCG task’) asks participants to develop automated systems that structure the contributions of English-language NLP scholarly articles.

Subtask 1

Input: a research article in plaintext format

Output: a set of contributing sentences

Subtask 2

Input: a contributing sentence

Output: a set of scientific knowledge terms and predicate phrases

Additionally, install the other dependencies listed in `requirements.txt` using conda or pip.

- Make sure you have installed all the dependencies mentioned above

- Clone or download this repository, then open a terminal and navigate to this folder

- Train, test & evaluate the model by running `python3 main.py {config}`, which will:
  - Select the `config`-th configuration from `config.py`. Enter `python3 main.py 0` to see all available configurations.
  - Load all data from the `data/` folder
  - Randomly split the dataset into a training set and a testing set
  - Train the model using the training set and store the model in the `model` file
  - Test the model against the testing set
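The random split step in the list above can be sketched as follows. This is a minimal illustration, not the code in `main.py`: the function name `split_dataset`, the 80/20 ratio and the fixed seed are all assumptions made for the example.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    items: Sequence[T], train_ratio: float = 0.8, seed: int = 0
) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of `items` and split it into (train, test) lists.

    The name, the default ratio and the seed are illustrative assumptions,
    not values taken from this repository.
    """
    if not 0.0 < train_ratio < 1.0:
        raise ValueError("train_ratio must be strictly between 0 and 1")
    shuffled = list(items)
    # Use a local RNG so the global random state is left untouched.
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    train, test = split_dataset(list(range(10)))
    print(len(train), len(test))
```

Fixing the seed makes the split reproducible across runs, which helps when comparing configurations against each other.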

These optional flags can be combined into one single command line:

- Use `-d [data_dir/]` to specify which dataset folder to use, default: `data/`
- Use `-m [model_name]` to specify the model filename to generate, default: `model/`
- Use `-s [summary_name]` to specify the summary name, default will be auto-generated by `wandb`
- Use `--summary` to enable summary mode
- Use `--train` to train model only
- Use `--test` to test model only
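For illustration, a command-line interface with the flags listed above could be declared with `argparse` roughly as below. This is a sketch under assumptions, not the parser used in `main.py`: the function name `build_parser` and the attribute names (`data_dir`, `model_name`, `summary_name`) are hypothetical.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser mirroring the documented flags (attribute names are assumed)."""
    parser = argparse.ArgumentParser(prog="main.py")
    # Positional index of the configuration; 0 is documented as "list all".
    parser.add_argument("config", type=int, help="index of the configuration in config.py")
    parser.add_argument("-d", dest="data_dir", default="data/", help="dataset folder to use")
    parser.add_argument("-m", dest="model_name", default="model/", help="model filename to generate")
    # None stands in for "let wandb generate the name".
    parser.add_argument("-s", dest="summary_name", default=None, help="summary name")
    parser.add_argument("--summary", action="store_true", help="enable summary mode")
    parser.add_argument("--train", action="store_true", help="train the model only")
    parser.add_argument("--test", action="store_true", help="test the model only")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["1", "-d", "data-small/", "--summary", "-s", "scibert"])
    print(args)
```

Because every flag is optional and independent, any combination can be passed in one command.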

python3 main.py 1

python3 main.py 1 -d data-small/ --summary -s scibert

python3 main.py 2 --train

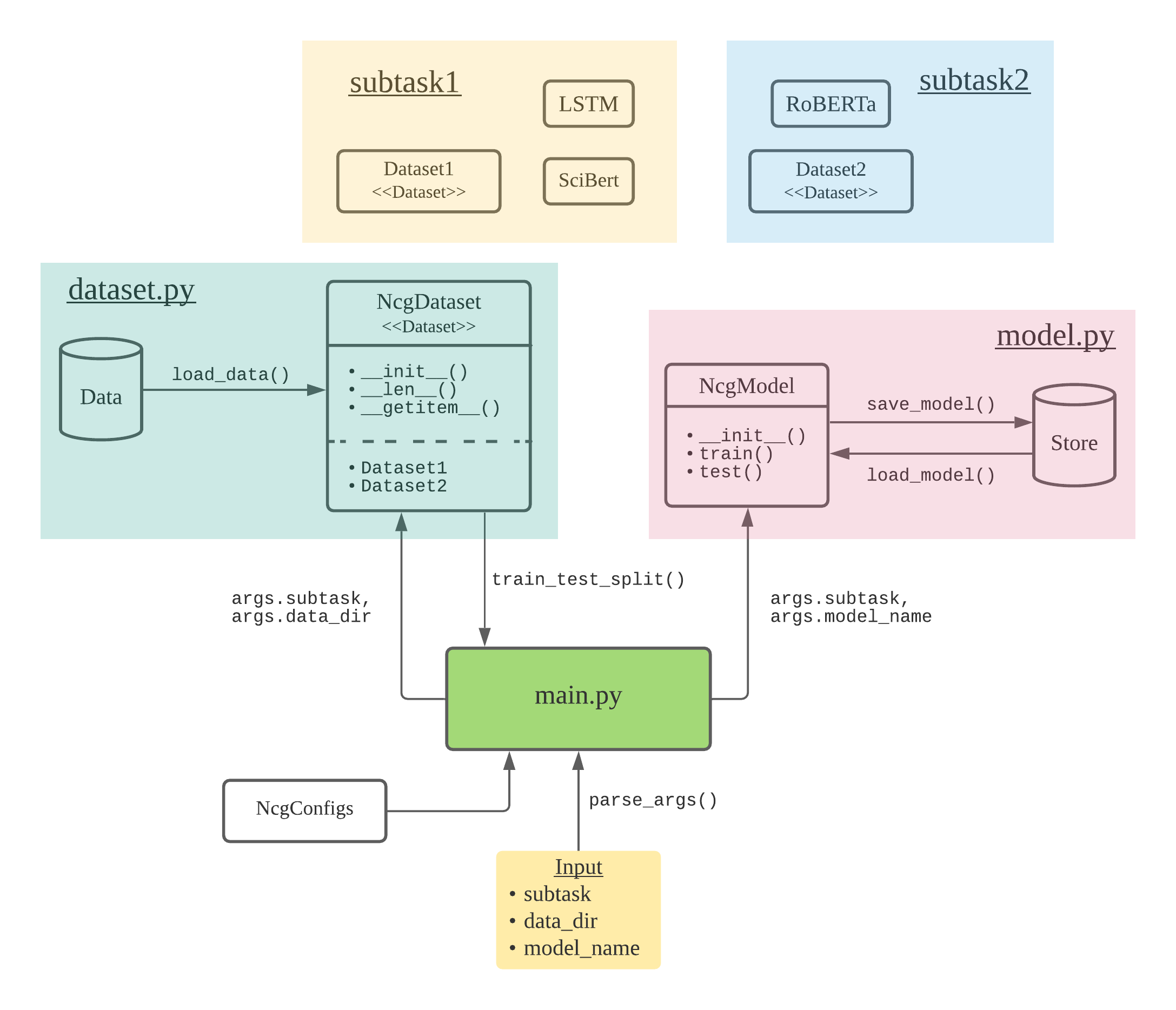

- `main.py` - main runner file of the project
- `dataset.py` - loads, pre-processes data and implements the `NcgDataset` class
- `model.py` - loads, saves model and implements the `NcgModel` class
- `config.py` - defines all hyperparameters
- `subtask1/` - implements the dataset, models and helper functions for subtask 1
- `subtask2/` - implements the dataset, models and helper functions for subtask 2
- `documentation/` - written reports

- `data/` - contains 38 task folders
- `data-small/` - a subset of `data/`, contains 5 task folders
- `data-one/` - a subset of `data/`, contains 1 task folder

The data folders are organized as follows:

[task-name-folder]/ # natural_language_inference, paraphrase_generation, question_answering, relation_extraction, etc

├── [article-counter-folder]/ # ranges from 0 to 100 since we annotated varying numbers of articles per task

│ ├── [article-name].pdf # scholarly article pdf

│ ├── [article-name]-Grobid-out.txt # plaintext output from the [Grobid parser](https://github.com/kermitt2/grobid)

│ ├── [article-name]-Stanza-out.txt # plaintext preprocessed output from [Stanza](https://github.com/stanfordnlp/stanza)

│ ├── sentences.txt # annotated Contribution sentences in the file

│ ├── entities.txt # annotated entities in the Contribution sentences

│ └── info-units/ # the folder containing information units in JSON format

│ │ └── research-problem.json # `research problem` mandatory information unit in json format

│ │ └── model.json # `model` information unit in json format; in some articles it is called `approach`

│ │ └── ... # there are 12 information units in all and each article may be annotated by 3 or 6

│ └── triples/ # the folder containing information unit triples one per line

│ │ └── research-problem.txt # `research problem` triples (one research problem statement per line)

│ │ └── model.txt # `model` triples (one statement per line)

│ │ └── ... # there are 12 information units in all and each article may be annotated by 3 or 6

│ └── ... # there are K articles annotated for each task, so this repeats for the remaining K-1 annotated articles

└── ... # if there are N task folders overall, then this repeats N-1 more times
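Given the layout above, the article folders can be enumerated with `pathlib`. The sketch below only locates files and does not parse their contents; the function name `iter_articles` and the keys of the returned dictionaries are assumptions for this example, and it is not the loader implemented in `dataset.py`.

```python
from pathlib import Path
from typing import Dict, Iterator


def iter_articles(data_dir: Path) -> Iterator[Dict[str, object]]:
    """Yield one record per [task-name-folder]/[article-counter-folder]/ pair."""
    for task_dir in sorted(p for p in data_dir.iterdir() if p.is_dir()):
        for article_dir in sorted(p for p in task_dir.iterdir() if p.is_dir()):
            # The article name is not known in advance, so match on the suffix.
            stanza = next(iter(sorted(article_dir.glob("*-Stanza-out.txt"))), None)
            triples_dir = article_dir / "triples"
            triples = sorted(triples_dir.glob("*.txt")) if triples_dir.is_dir() else []
            yield {
                "task": task_dir.name,
                "article": article_dir.name,
                "stanza": stanza,  # None if the file is missing
                "sentences": article_dir / "sentences.txt",
                "entities": article_dir / "entities.txt",
                "triples": triples,
            }


if __name__ == "__main__":
    for record in iter_articles(Path("data-one")):
        print(record["task"], record["article"], record["stanza"])
```

Sorting the directory listings keeps the iteration order stable across platforms.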