Additional code, analyses and results to extend the supplementary information of:

Fellows Yates, J. A. et al. (2021) ‘The evolution and changing ecology of the African hominid oral microbiome’, Proceedings of the National Academy of Sciences of the United States of America, 118(20), p. e2021655118. doi: 10.1073/pnas.2021655118.

Please read section R1 before proceeding.

Evolution of the Hominid Calculus Microbiome

Table of Contents

- R1 Introduction
- R2 Resources
- R3 Software Paths
- R4 Database and Genome Indexing
- R5 Data Acquisition
- R6 Data Preprocessing
- R7 Metagenomic Screening
- R8 Preservation Screening
- R9 Compositional Analysis
- R10 Core Microbiome Analysis
- R11 Genome Reconstruction and Phylogenetics
  - R11.1 Production dataset sequencing depth calculations
  - R11.2 Super-reference construction
  - R11.3 Super-reference alignment and species selection
  - R11.4 Comparative single reference mapping
  - R11.5 Performance of super-reference vs. single genome mapping
  - R11.6 Variant calling and single-allelic position assessment
  - R11.7 Phylogenies
  - R11.8 Pre- and Post-14k BP Observation Verification
- R12 Functional Analysis

This README is a walkthrough of the order of analyses for Fellows Yates, J.A. et al. (2021) PNAS. It serves as a practical methods supplement and displays additional figures. Justification and discussion of figures and results are only briefly described; please refer to the main publication for scientific interpretation and context.

The repository contains additional data files, R notebooks, scripts and commands where a program was initiated directly from the command line, as well as some additional results.

The following objects that are referred to in the supplementary information of the paper can be found here:

- External Data Repository Section RXX (in this README)
- External Data Repository Figure RXX (in this README and under 05-images/)
- External Data Repository Table RXX (in this README)
- External Data Repository File RXX (in this README and under 06-additional_data_files/)

Code can be found under the directory 02-scripts.backup, 'raw' analysis results under 04-analysis, and 'cleaned-up'/summary analysis results and metadata files under 00-documentation. Figures displayed within this README are stored under 05-images. Additional data files for fast access/referral in the main publication can be found under 06-additional_data_files.

01-data and 03-preprocessing only contain empty directories, as these would hold very large sequencing files that cannot be uploaded to this repository. They are kept here so that, if reproducing the analysis, paths in scripts do not need to be modified. Raw data can be found on the ENA under project accession ID: PRJEB34569.

In more detail, the general structure of this project is typically as follows (although variants will occur):

```
README.md                       ## This walkthrough
00-documentation/               ## Contains main metadata files and summary result files
    00-document_1.txt
    01-document_2.txt
01-data/                        ## Only contains directories where raw data FASTQ files
    raw_data/                   ## are stored (must be downloaded yourself, not included
        screening/              ## due to large file size)
        deep/
    databases/
        <database_1>/
02-scripts.backup/              ## Contains all scripts and R notebooks used in this
    000-ANALYSIS_CONFIG         ## project and referred to throughout this README
    001-script.sh
    002-notebook.Rmd
03-preprocessing/               ## Only contains directories where pre-processed FASTQ
    screening/                  ## files are stored (must be processed yourself, not
        human_filtering/        ## included due to large file size)
            input/
            output/
        library_merging/
            input/
            output/
    deep/
        human_filtering/
            input/
            output/
        library_merging/
            input/
            output/
04-analysis/                    ## Contains all small(ish) results files from analyses,
    screening/                  ## such as tables, .txt files etc. Large files (BAM, RMA6
        analysis_1/             ## etc.) are not included here due to large size and must be
            input/              ## generated yourself
            output/
        analysis_2/
            input/
            output/
    deep/
        analysis_1/
            input/
            output/
        analysis_2/
            input/
            output/
05-images/                      ## Stores images displayed in this README
    Figure_R01/
    Figure_R02/
06-additional_data_files/       ## A fast look-up location for certain important files
                                ## referred to in the supplementary text of the
                                ## associated manuscript. Typically copies of files
                                ## stored in 04-analysis.
```

Important: The code in this repository was written over multiple 'learning' years by non-bioinformaticians. Quality will vary and it may not be immediately re-runnable or readable. If you encounter any issues, please open an issue and we will endeavour to clarify.

We have tried to auto-replace all file paths to make them relative to this repository. This may not have been perfect, so please check that each path begins with ../0{1,2,4}. If it does not, let us know and we will fix it accordingly.

Here is a list of programs and databases used in this analysis that you should have installed/downloaded prior to carrying out the analysis. The download and set-up of the databases are described below.

This analysis was performed on a server running Ubuntu 14.04 LTS with a SLURM submission scheduler. Some scripts or commands may refer heavily to SLURM; these references are specific to our system. As far as possible, we have removed SLURM commands and/or MPI-SHH-specific paths or parameters, but you should always check this for each command and script.

| Name | Version | Citation |
|---|---|---|
| GNU bash | 4.3.11 | NA |
| sratoolkit | 2.8.0 | https://www.ncbi.nlm.nih.gov/books/NBK158900/ |
| EAGER | 1.92.55 | Peltzer et al. 2016 Genome Biol. |
| AdapterRemoval | 2.3.0 | Schubert et al. 2016 BMC Res. Notes |
| bwa | 0.7.12 | Li and Durbin 2009 Bioinformatics |
| samtools | 1.3 | Li et al. 2009 Bioinformatics |
| PicardTools | 1.140 | https://github.com/broadinstitute/picard |
| GATK | 3.5 | DePristo et al. 2011 Nat. Genet. |
| mapDamage | 2.0.6 | Jónsson et al. 2013 Bioinformatics |
| DeDup | 0.12.1 | Peltzer et al. 2016 Genome Biol. |
| MALT | 0.4.0 | Herbig et al. 2016 bioRxiv, Vagene et al. 2018 Nat. Ecol. Evol. |
| MEGAN CE | 6.12.0 | Huson et al. 2016 PLoS Comput. Biol. |
| FastP | 0.19.3 | Chen et al. 2018 Bioinformatics |
| Entrez Direct | Jun 2016 | http://www.ncbi.nlm.nih.gov/books/NBK179288 |
| QIIME | 1.9.1 | Caporaso et al. 2010 Nat. Methods |
| Sourcetracker | 1.0.1 | Knights et al. 2011 Nat. Methods |
| conda | 4.7.0 | https://conda.io/projects/conda/en/latest/ |
| pigz | 2.3 | https://zlib.net/pigz/ |
| R | >=3.5 | https://www.R-project.org/ |
| MaltExtract | 1.5 | Hübler et al. 2019 Genome Biol. |
| MultiVCFAnalyzer | 0.87 | Bos et al. 2014 Nature |
| bedtools | 2.25.0 | Quinlan et al. 2010 Bioinformatics |
| panX | 1.5.1 | Ding et al. 2018 Nucleic Acids Res. |
| MetaPhlAn2 | 2.7.1 | Truong et al. 2015 Nat. Methods |
| HUMAnN2 | 0.11.2 | Franzosa et al. 2018 Nat. Methods |
| blastn | 2.7.1+ | Package: blast 2.7.1, build Oct 18 2017 19:57:24 |
| seqtk | 1.2-r95-dirty | https://github.com/lh3/seqtk |
| Geneious | R8 | https://www.geneious.com/ |
| IGV | 2.4 | https://software.broadinstitute.org/software/igv/ |
| Inkscape | 0.92 | www.inkscape.org |

Here we used R version 3.6.1.

For databases that did not come with the software package itself, we downloaded the following:

| Name | Version | Date | Download Location |
|---|---|---|---|
| NCBI Nucleotide | nt.gz | Dec. 2016 | ftp://ftp.ncbi.nlm.nih.gov/blast/db/FASTA/ |
| NCBI RefSeq | Custom | Nov. 2018 | ftp://ftp.ncbi.nlm.nih.gov/genomes/ |
| SILVA | 128_SSURef_Nr99 | Mar. 2017 | http://ftp.arb-silva.de/release_128/Exports/ |
| UniRef | uniref90_ec_filtered_diamond | Oct. 2018 | https://bitbucket.org/biobakery/humann2 |

⚠️ The SILVA FASTA was modified after downloading, replacing Us with Ts.

| Species | Strain | Date | Completeness | Type | Source |
|---|---|---|---|---|---|
| Homo sapiens | HG19 | 2016-01-14 | Complete | Reference | http://hgdownload.cse.ucsc.edu/downloads.html#human |
| Actinomyces dentalis | DSM 19115 | 2019-02-25 | Scaffold | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/429/225/GCF_000429225.1_ASM42922v1/ |
| Aggregatibacter aphrophilus | W10433 | 2017-06-14 | Complete | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/022/985/GCF_000022985.1_ASM2298v1/ |
| Campylobacter gracilis | - | 2019-05-22 | Complete | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/001/190/745/GCF_001190745.1_ASM119074v1/ |
| Capnocytophaga gingivalis | ATCC 33624 | 2019-02-25 | Contigs | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/174/755/GCF_000174755.1_ASM17475v1/ |
| Corynebacterium matruchotii | ATCC 14266 | 2019-05-22 | Contigs | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/175/375/GCF_000175375.1_ASM17537v1/ |
| Desulfobulbus sp. oral taxon 041 | Dsb1-5 | 2018-06-06 | Contigs | Assembly | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCA/000/403/865/GCA_000403865.1_Dsb1-5/ |
| Fretibacterium fastidiosum | - | 2018-12-11 | Chromosome | Unknown | https://www.ncbi.nlm.nih.gov/nuccore/FP929056.1 |
| Fusobacterium hwasookii | ChDC F206 | 2019-10-27 | Complete | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/001/455/105/GCF_001455105.1_ASM145510v1 |
| Fusobacterium nucleatum subsp. nucleatum | ATCC 25586 | 2017-06-14 | Complete | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/007/325/GCF_000007325.1_ASM732v1/ |
| Olsenella sp. oral taxon 807 | 807 | 2019-01-10 | Complete | Unknown | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/001/189/515/GCF_001189515.2_ASM118951v2/ |
| Ottowia sp. oral taxon 894 | 894 | 2019-05-22 | Complete | Unknown | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/001/262/075/GCF_001262075.1_ASM126207v1/ |
| Porphyromonas gingivalis | ATCC 33277 | 2018-12-11 | Complete | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/010/505/GCF_000010505.1_ASM1050v1/ |
| Prevotella loescheii | DSM 19665 | 2019-05-22 | Scaffolds | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/378/085/GCF_000378085.1_ASM37808v1/ |
| Pseudopropionibacterium propionicum | F0230a | 2018-12-11 | Complete | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/277/715/GCF_000277715.1_ASM27771v1 |
| Rothia dentocariosa | ATCC 17831 | 2017-06-14 | Complete | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/164/695/GCF_000164695.2_ASM16469v2/ |
| Selenomonas sp. F0473 | F0473 | 2019-05-22 | Scaffolds | Unknown | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/315/545/GCF_000315545.1_Seleno_sp_F0473_V1/ |
| Streptococcus gordonii | CH1 | 2018-06-14 | Complete | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/017/005/GCF_000017005.1_ASM1700v1 |
| Streptococcus sanguinis | SK36 | 2018-12-11 | Complete | Reference | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/014/205/GCF_000014205.1_ASM1420v1/ |
| Tannerella forsythia | 92A2 | 2018-12-18 | Complete | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/238/215/GCF_000238215.1_ASM23821v1/ |
| Treponema socranskii subsp. paredies | ATCC 35535 | 2018-05-31 | Scaffolds | Representative | ftp://ftp.ncbi.nlm.nih.gov/genomes/all/GCF/000/413/015/GCF_000413015.1_Trep_socr_subsp_paredis_ATCC_35535_V1/ |

⚠️ The reference files are not provided here due to their large size.

Some scripts used in this project use variables stored in a central profile called 02-scripts.backup/000-analysis_profile. These variables indicate the location of certain programs on your server's file system, and the scripts loading the profile will look for and use the program stored in each variable. You will need to replace the stored paths with the location of each tool on your own server. I have replaced our central storage paths with <YOUR_PATH>, but you will need to check that each path is correct.

Note: not all scripts use the 'profile', so please check each one before running it.

For direct commands (i.e. not used in a script), the path will either be defined in the command block or assumed to already be in your $PATH.
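Because a wrong path in the profile usually only surfaces deep into a long job, it can help to fail early. The following is a minimal sketch, assuming the profile is a plain Bash file of VAR=path assignments (like the MALTDB, SILVADB and HG19REF entries shown in the database section). The helper name check_profile_paths is hypothetical and is not part of this repository.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify that each named variable is set and points to an
# existing file or directory. Prints every problem found and returns non-zero
# if at least one variable is unset or points to a missing path.
check_profile_paths() {
    local status=0 var value
    for var in "$@"; do
        value="${!var}"   # indirect expansion: the value of the variable named $var
        if [ -z "$value" ]; then
            echo "ERROR: $var is not set" >&2
            status=1
        elif [ ! -e "$value" ]; then
            echo "ERROR: $var points to a missing path: $value" >&2
            status=1
        fi
    done
    return "$status"
}

# Example usage at the top of a script (variable names as in the profile excerpt):
# source 02-scripts.backup/000-analysis_profile
# check_profile_paths MALTDB SILVADB HG19REF || exit 1
```

Checking existence only (rather than, say, executability) keeps the sketch usable for both tool paths and database directories.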

The MALT nt database was downloaded and indexed as follows.

```bash
## MALT indexed nt database
mkdir -p 01-data/databases/malt/raw 01-data/databases/malt/indexed 01-data/databases/malt/acc2bin
DBDIR=$(readlink -f 01-data/databases)

cd "$DBDIR"/malt/raw

## Download nucleotide database FASTA and md5sum file into the database directory
wget ftp://ftp-trace.ncbi.nih.gov/blast/db/FASTA/nt.gz
wget ftp://ftp-trace.ncbi.nih.gov/blast/db/FASTA/nt.gz.md5

## Generate the md5sum of the downloaded file, and compare with the contents of
## the NCBI .md5 version
md5sum nt.gz
cat nt.gz.md5

## Download into a different directory the accession-to-taxonomy mapping file
## as provided on the MEGAN6 website, and unzip
cd "$DBDIR"/malt/acc2bin
wget http://ab.inf.uni-tuebingen.de/data/software/megan6/download/nucl_acc2tax-May2017.abin.zip
unzip nucl_acc2tax-May2017.abin.zip

## Index the nt database with MALT
malt-build \
  --step 2 \
  -i "$DBDIR"/malt/raw/nt.gz \
  -s DNA \
  -d "$DBDIR"/malt/indexed \
  -t 112 -a2taxonomy "$DBDIR"/malt/acc2bin/nucl_acc2tax-May2017.abin
```

⚠️ The database files are not provided here due to their large size.
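The md5 check above is done by eye (md5sum nt.gz versus cat nt.gz.md5). A minimal sketch that performs the same comparison automatically is shown below. It assumes the first whitespace-separated field of the .md5 file is the hash, which is the layout md5sum itself writes; the function name verify_md5 is hypothetical. When the file name recorded inside the .md5 file matches the local file, md5sum -c nt.gz.md5 does the same job.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare the MD5 hash of a file against the first field
# of an accompanying .md5 file. Returns non-zero on mismatch.
verify_md5() {
    local file="$1" md5file="$2" expected actual
    expected=$(awk '{ print $1; exit }' "$md5file")   # hash as published
    actual=$(md5sum "$file" | awk '{ print $1 }')     # hash of the local download
    if [ "$expected" = "$actual" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file (expected $expected, got $actual)" >&2
        return 1
    fi
}

# Example usage, inside 01-data/databases/malt/raw:
# verify_md5 nt.gz nt.gz.md5 || exit 1
```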

For the custom NCBI Genome RefSeq database containing bacterial and archaeal assemblies at scaffold, chromosome and complete levels, we followed the R notebook 02-scripts.backup/099-refseq_genomes_bacteria_archaea_homo_complete_chromosome_scaffold_walkthrough_20181029.Rmd.

A corresponding list of genomes selected for use in this database can be seen in 06-additional_data_files under Data R10.

To build the aadder database for functional analysis, based on the custom RefSeq MALT database above, we ran the script 01-data/027-aadder_build_refseqCGS_bacarch_sbatch_script_20181104.sh. This calls the aadder-build command provided in the tools folder of the MEGAN install directory. Note that we had to change MEGAN.vmoptions in the MEGAN install directory to allocate enough memory.
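MEGAN.vmoptions holds Java VM options, one per line, and the -Xmx line sets the maximum heap size. A minimal sketch of raising it from the command line follows; it assumes GNU sed (for sed -i, as on the Ubuntu server used here), and the function name and the 500G value are placeholders rather than the settings we actually used.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set the maximum Java heap (-Xmx) in a MEGAN.vmoptions file.
# Replaces an existing -Xmx line, or appends one if none is present.
set_vmoptions_xmx() {
    local vmoptions="$1" size="$2"
    if grep -q '^-Xmx' "$vmoptions"; then
        sed -i "s/^-Xmx.*/-Xmx${size}/" "$vmoptions"   # GNU sed in-place edit
    else
        echo "-Xmx${size}" >> "$vmoptions"
    fi
}

# Example usage (path and size are placeholders):
# set_vmoptions_xmx <PATH_TO>/megan/MEGAN.vmoptions 500G
```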

The SILVA reference database and all single genomes (HG19, bacterial etc.) were indexed as follows, with the SILVA database FASTA file as an example.

```bash
BWA=<PATH_TO>/bwa
SAMTOOLS=<PATH_TO>/samtools
PICARDTOOLS=<PATH_TO>/picard

mkdir -p "$DBDIR"/silva
cd "$DBDIR"/silva

wget http://ftp.arb-silva.de/release_128/Exports/SILVA_128_SSURef_Nr99_tax_silva_trunc.fasta.gz
## Decompress: the indexing commands below run on the uncompressed FASTA
gunzip SILVA_128_SSURef_Nr99_tax_silva_trunc.fasta.gz

"$BWA" index SILVA_128_SSURef_Nr99_tax_silva_trunc.fasta
"$SAMTOOLS" faidx SILVA_128_SSURef_Nr99_tax_silva_trunc.fasta
"$PICARDTOOLS" CreateSequenceDictionary \
  REFERENCE=SILVA_128_SSURef_Nr99_tax_silva_trunc.fasta \
  O=SILVA_128_SSURef_Nr99_tax_silva_trunc.fasta.dict
```

⚠️ The indexing files are not provided here due to their large size.
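The SILVA release is distributed as RNA sequence (containing U), whereas the DNA reads here contain T; the SILVADB entry of the analysis profile accordingly refers to a "converted U to T FASTA file". The exact command used for the conversion is not recorded here. A minimal sketch, assuming an uncompressed FASTA with '>' header lines, transliterates only the sequence lines so that header text (taxonomy strings can contain the letter U) is left untouched. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert an RNA FASTA (U/u) to DNA (T/t).
# Lines starting with '>' (headers) are skipped by the '!' address negation;
# on all other lines sed's y command transliterates U->T and u->t.
silva_rna_to_dna() {
    local in_fasta="$1" out_fasta="$2"
    sed '/^>/!y/Uu/Tt/' "$in_fasta" > "$out_fasta"
}

# Example usage, before running bwa index / samtools faidx on the output:
# silva_rna_to_dna SILVA_128_SSURef_Nr99_tax_silva_trunc.fasta \
#                  SILVA_128_SSURef_Nr99_tax_silva_trunc.DNA.fasta
```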

To acquire the UniRef database for HUMAnN2, we used the script that comes with HUMAnN2, run as follows:

```bash
humann2_databases --download uniref uniref90_ec_filtered_diamond 01-data/databases/uniref90
```

⚠️ The database files are not provided here due to their large size.

For the scripts using the analysis profile file, make sure to update the paths in 02-scripts.backup/000-analysis_profile to your corresponding locations.

```bash
## MALT DB directory containing all database files
MALTDB=<PATH_TO>/malt/databases/indexed/index038/full-nt_2017-10

## SILVA DB directory containing the converted U to T FASTA file and associated bwa indexed files
SILVADB=<PATH_TO>01/databases/silva/release_128_DNA/

## GreenGenes DB directory, as provided in QIIME
GREENGENESDB=<PATH_TO>/tools/qiime-environment/1.9.1/lib/python2.7/site-packages/qiime_default_reference/gg_13_8_otus

HG19REF=<PATH_TO>/Reference_Genomes/Human/HG19/hg19_complete.fasta
```

All raw FASTQ files should be downloaded to sample-specific directories in 01-data/public_data/raw.

General laboratory, sequencing and metadata information about all newly generated libraries for this study can be seen in 06-additional_data_files/ under Data R01 and Data R02.

The same information for controls can be seen in Data R03.

⚠️ FASTQ files are not provided here due to their large size. Please see ENA under accession ID: PRJEB34569

In addition to the samples sequenced in this study (or generated by our group, but previously published in the case of JAE), we downloaded the shotgun sequenced individuals from Weyrich et al. 2017 (Nature).

For this, I downloaded the 'processed' files from the OAGR database, as the original data still contained barcodes, and the processed files had already been run through AdapterRemoval, as done here.

The list of files and direct download links can be seen in the documentation under 00-documentation.backup/99-public_data-Weyrich_Neanderthals.txt.

I downloaded each file with wget, renamed it to the file name listed on the OAGR website, concatenated multi-file samples where required (i.e. ElSidron1) and then renamed the result to the EAGER standard.

An example:

```bash
cd 01-data/public_data/
mkdir ElSidron1
cd ElSidron1

wget https://www.oagr.org.au/api/v1/dataset_file/3086/download
rename s/download/2NoAdapt_ELSIDRON1L7_lTACTG_rCTCGA_R1R2_Collapsed.fastq.gz/ download

wget https://www.oagr.org.au/api/v1/dataset_file/3109/download
rename s/download/2NoAdapt_ELSIDRON1L7_lTACTG_rCTCGA_R1R2_Collapsed_Truncated.fastq.gz/ download

cat *fastq.gz > ElSidron1_S0_L000_R1_000.fastq.merged.fq.gz

## If no concatenation required
# mv 2NoAdapt_CHIMP_150519_lACGTG_rATTGA_R1R2_Collapsed.fastq.gz Chimp.150519_S0_L000_R1_000.fastq.merged.fq.gz
```

The final merged individual FASTQ files were moved to individual directories in 01-data/public_data/prepped.

The renamed files were then symlinked into the folder above.

⚠️ FASTQ files are not provided here due to their large size. Please see corresponding locations described above

In addition to ancient and calculus samples, we also require comparative data from different sources of microbiomes (e.g. soil, skin, gut etc.).

Note that the bone comparative ('environmental') samples were previously published, but were re-sequenced for this study. The new sequencing files used in this study can also be found in the ENA repository PRJEB34569 alongside all new calculus data.

Comparative source files were selected based on the following criteria:

- Must be shotgun metagenomes
- Must have had no modification or treatment of the DNA selection or of the host (e.g. no pesticide, as far as could be determined)
- Must have been generated on an Illumina platform
- Must have more than 10 million reads
- Must have yielded more than 1000 16S rRNA reads in the shotgun data (detected during analysis, see QIIME section)
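The metadata-based criteria above (shotgun, Illumina, more than 10 million reads) can be pre-screened on a run table before downloading. A minimal sketch follows, assuming a tab-separated table with columns named library_strategy, instrument_platform and read_count (the field names used in ENA run reports); the column names and the function are assumptions, not the procedure we used. The treatment and 16S criteria cannot be checked from such a table.

```bash
#!/usr/bin/env bash
# Hypothetical helper: keep the header plus rows that are shotgun (WGS),
# Illumina and have more than 10 million reads. Columns are located by name.
filter_source_candidates() {
    awk -F'\t' '
        NR == 1 {
            for (i = 1; i <= NF; i++) col[$i] = i   # map column name -> index
            print
            next
        }
        $(col["library_strategy"]) == "WGS" &&
        $(col["instrument_platform"]) == "ILLUMINA" &&
        $(col["read_count"]) + 0 > 10000000
    ' "$1"
}

# Example usage (file name is a placeholder):
# filter_source_candidates candidate_runs.tsv > candidate_runs.filtered.tsv
```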

In addition, the Human Microbiome Project gut and plaque samples had the additional criteria of:

- Each must be from unique individuals

- Aim for approximately 50/50 male and female where possible

The files were downloaded from the NCBI SRA and EBI ENA databases. A list of these libraries can be seen either in 06-additional_data_files under R04, or in the 'mapping' file 00-documentation.backup/02-microbiome_calculus-deep_evolution-individualscontrolssources_metadata.tsv.

Metadata on the HMP samples can be seen in 00-documentation/99-sourcemetadata-hmp_SraRunTable_allRuns.tsv, with samples selected for each source type coming from unique individuals, as inferred from the hmp_subject_id column. Metadata for the other datasets can be seen in the 00-documentation/99-sourcemetadata-* files.
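Restricting HMP runs to unique individuals via hmp_subject_id can be illustrated with awk. This minimal sketch, assuming a tab-separated table with a header row, keeps only the first run listed for each subject; it is not a record of which run we actually picked per individual, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the header plus the first row seen for each value
# of the given ID column (default: hmp_subject_id). Fails if the column is absent.
first_run_per_subject() {
    local tsv="$1" id_col="${2:-hmp_subject_id}"
    awk -F'\t' -v id_col="$id_col" '
        NR == 1 {
            for (i = 1; i <= NF; i++) if ($i == id_col) c = i
            if (!c) { print "column not found: " id_col > "/dev/stderr"; exit 1 }
            print
            next
        }
        !seen[$c]++   # true only the first time a subject ID appears
    ' "$tsv"
}

# Example usage:
# first_run_per_subject 00-documentation/99-sourcemetadata-hmp_SraRunTable_allRuns.tsv
```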

A file containing one ERR or SRR number per line was given to the scripts 001-SRA_download_script.sh and 002-ERR_download_script.sh.

Some HMP project samples were not available directly from the SRA FTP server, in which case they were pulled directly using prefetch -v, followed by commands similar to those in 001-SRA_download_script.sh but without the wget step:

```bash
SRATOOLKIT=<PATH_TO>/sratoolkit/2.8.0/sratoolkit.2.8.0-ubuntu64/bin

"$SRATOOLKIT"/prefetch -v SRR514306
"$SRATOOLKIT"/fastq-dump -F --split-files --readids <PATH_TO>/ncbi/public/sra/SRR514306.sra --gzip --outdir .
```

These were then renamed using:

```bash
cd 01-data/public_data/raw/
rename s/_1.fastq.gz/_S0_L001_R1_000.fastq.gz/ */*.fastq.gz
rename s/_2.fastq.gz/_S0_L001_R2_000.fastq.gz/ */*.fastq.gz
```

The final FASTQ files were then symlinked into individual directories in 01-data/public_data/prepped.
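The rename s/old/new/ syntax used above is that of the Perl rename utility; on systems where rename is the util-linux version, those commands will not work as written. A dependency-free alternative, sketched here under that assumption, uses Bash parameter expansion. Unlike the Perl expression, it only rewrites the _1/_2 suffix at the end of the name. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map an SRA-style *_1.fastq.gz / *_2.fastq.gz name to the
# EAGER-style *_S0_L001_R{1,2}_000.fastq.gz name; other names are returned unchanged.
to_eager_name() {
    local f="$1"
    case "$f" in
        *_1.fastq.gz) echo "${f%_1.fastq.gz}_S0_L001_R1_000.fastq.gz" ;;
        *_2.fastq.gz) echo "${f%_2.fastq.gz}_S0_L001_R2_000.fastq.gz" ;;
        *)            echo "$f" ;;
    esac
}

# Example usage, from 01-data/public_data/raw/:
# for f in */*.fastq.gz; do
#     new=$(to_eager_name "$f")
#     if [ "$new" != "$f" ]; then mv -n "$f" "$new"; fi
# done
```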

For the sediment data from Slon et al. 2017, I also resorted to downloading the FASTQ files directly. Unfortunately, the uploaded data were not 'raw' but the already merged data from the Slon 2017 paper. We downloaded the FASTQ data anyway and applied a modified pre-processing.

I did this with the following command, using the file generated by the script that parses the two metadata files, which is stored here: 02-scripts.backup/99-Slon2017_DataFinderScript.R.

```bash
## Each line holds up to three ';'-separated download URLs for one library
while IFS=';' read -r URL1 URL2 URL3; do
    ## Library name = second-to-last path component of the first URL
    LIBNAME=$(echo "$URL1" | rev | cut -d/ -f2 | rev)
    OUT=01-data/raw_data/screening/"$LIBNAME"
    mkdir "$OUT" && \
    wget "$URL1" -P "$OUT"/ && \
    wget "$URL2" -P "$OUT"/ && \
    wget "$URL3" -P "$OUT"/ && \
    rename s/.fastq.gz/_S0_L001_R1_000.merged.fastq.gz/ "$OUT"/"$LIBNAME".fastq.gz && \
    rename s/_1.fastq.gz/_S0_L001_R1_000.fastq.gz/ "$OUT"/*.fastq.gz && \
    rename s/_2.fastq.gz/_S0_L001_R2_000.fastq.gz/ "$OUT"/*.fastq.gz
done < 00-documentation.backup/99-Slon2017_AccessionsToDownload_2.tsv
```

To complete standardisation of this data, we combined singleton files.

```bash
while IFS=';' read -r URL1 _; do
    LIBNAME=$(echo "$URL1" | rev | cut -d/ -f2 | rev)
    OUT=03-preprocessing/screening/human_filtering/input/"$LIBNAME"
    ## Concatenate all FASTQ files of the library into a single file
    mkdir "$OUT"/ && \
    cat 01-data/raw_data/screening/"$LIBNAME"/*.fastq.gz >> "$OUT"/"$LIBNAME"_S0_L001_R1_000.merged.fq.gz
done < 00-documentation.backup/99-Slon2017_AccessionsToDownload_2.tsv
```

The final merged individual FASTQ files were moved to individual directories in 01-data/public_data/prepped.

⚠️ FASTQ files are not provided here due to their large size. Please see corresponding locations described above
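The loops above derive each library name with rev | cut -d/ -f2 | rev, i.e. the second-to-last component of the download URL (the run directory in ENA-style FASTQ paths). The same can be done with Bash parameter expansion and no subprocesses, as sketched below; the example URL in the test is made up and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: return the second-to-last '/'-separated component of a URL,
# equivalent to: echo "$url" | rev | cut -d/ -f2 | rev
libname_from_url() {
    local url="$1"
    local dir="${url%/*}"   # strip the last component (the file name)
    echo "${dir##*/}"       # keep only what is now the last component
}

# Example usage:
# LIBNAME=$(libname_from_url "$URL1")
```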

Once the data were downloaded, we started preprocessing by performing a sequencing quality check, merging any paired-end data (as well as trimming low-quality bases), removing any DNA that maps to the human genome (while preserving the statistics) and extracting all non-human DNA for downstream processing.

We performed this in two different ways. When this project first started, our local build of EAGER was broken, so we wrote our own script versions (02-scripts.backup/003-preprocessing_human_filtering.sh and 02-scripts.backup/004-preprocessing_human_filtering_premerged.sh) using the same commands and tool versions as run by EAGER. Once EAGER was fixed, we returned to using it, as it was more robust. The only addition in the script version was samtools fastq to convert unmapped reads (i.e. non-human reads, used for metagenomic screening) to FASTQ.

The output of both methods of preprocessing was stored in 03-preprocessing/screening/human_filtering.

⚠️ Output FASTQ files are not provided here due to their large size.

The procedure is described in more detail in the next sections.

Below I provide a loop that ensured the script(s) were only run on libraries we had not already processed, based on what was already present in the output directory. All we needed to change were the paths in the variables INDIR and OUTDIR.

If you need to increase the number of cores or memory, you can modify the CPU and MEM variables in the script itself.

You will need to check manually whether these 'manual' scripts are working correctly, as I did not have time to build in checks. We verified this by checking that all fields in the output of the statistics script (see below) were filled with numbers.

An example of the loop using the 'premerged' script is as follows (remove the _premerged part of the script name to run on 'raw' FASTQs):

```bash
## Cycle through INDIR corresponding to each type of data (paired/single, hiseq/nextseq, pre-merged etc.)
INDIR=03-preprocessing/screening/human_filtering/input/hiseq_single
OUTDIR=03-preprocessing/screening/human_filtering/output
SCRIPTS=02-scripts.backup

for LIBDIR in "$INDIR"/*/; do
    ## Library name = last directory component of the input path
    LIBNAME=$(echo "$LIBDIR" | rev | cut -d/ -f2 | rev)
    ## Skip libraries that already have an output directory
    if [ -d "$OUTDIR"/"$LIBNAME" ]; then
        printf "\n %s already processed \n\n" "$LIBNAME"
    else
        mkdir "$OUTDIR"/"$LIBNAME"
        "$SCRIPTS"/03-preprocessing_human_filtering_premerged.sh "$OUTDIR"/"$LIBNAME" "$LIBDIR" "$LIBNAME"
        sleep 1
    fi
done
```

For the Slon et al. 2017 and Weyrich et al. 2017 datasets, we ran EAGER without AdapterRemoval, as the ENA/OAGR uploaded data was already trimmed and merged.

For the VLC/ARS/DLV/PLV/RIG/OFN samples, and some of the blanks and deep sequenced samples that came later, I used the same settings as below, but with FastQC and AdapterRemoval turned on with default settings.

- Organism: Human
- Age: Ancient
- Treated Data: non-UDG [Deep: UDG]
- Pairment: Single End (VLC/EXB/LIB - Paired End)
- Input is already concatenated (skip merging): Y (VLC/EXB/LIB - N)
- Concatenate Lanewise together: N (VLC - Y, EXB/LIB - N)
- Reference: HG19
- Name of mitochondrial chromosome: chrMT
- FastQC: Off (VLC/EXB/LIB/ARS - On)
- AdapterRemoval: Off (VLC/EXB/LIB - On)
- Mapping: BWA
- Seedlength: 32
- Max # diff: 0.01
- Qualityfilter: 0
- Filter unmapped: Off
- Extract Mapped/Unmapped: On
- Remove Duplicates: DeDup
- Treat all reads as merged: On
- Damage Calculation: DamageProfiler
- CleanUp: On
- Create Report: On

These settings can be seen in table format in 06-additional_data_files under Data R05.

The EAGER runs were submitted with:

```bash
EAGER=<PATH_TO>/1.92.55/bin/eager
EAGERCLI=<PATH_TO>/1.92.55/bin/eagercli

## Run eagercli on every EAGER configuration XML in the output directories
for FILE in $(find 03-preprocessing/screening/human_filtering/output/* -name '*.xml'); do
    unset DISPLAY && "$EAGERCLI" "$(readlink -f "$FILE")"
done
```

For the screening data, we ran the following commands to clean up the EAGER result directories so that they are in the same format as those produced by the script version.

All production dataset files were run with EAGER, so clean-up was not required and the default ReportTable output was used for statistics reporting.

To run the clean-up on a single library:

```bash
cd 03-preprocessing/screening/human_filtering/output

## Clean up
SAMPLE=LIB007.A0124
SAMTOOLS=<PATH_TO>/samtools_1.3/bin/samtools

## Move the files to keep to the top level of the library directory
for DIR in $(readlink -f "$SAMPLE"); do
    cd "$DIR"
    mkdir fastqc
    mv 0-FastQC/*zip fastqc
    mv 1-AdapClip/*settings .
    mv 4-Samtools/*mapped.bam.stats .
    mv 4-Samtools/*extractunmapped.bam .
    mv 5-DeDup/*.mapped.sorted.cleaned.hist .
    mv 5-DeDup/*.log .
    mv 6-QualiMap/*/ qualimap
    mv 7-DnaDamage/*/ damageprofiler
done

cd ..

## Generate idxstats, then remove the remaining EAGER working directories
for DIR in $(readlink -f "$SAMPLE"); do
    cd "$DIR"
    "$SAMTOOLS" idxstats $(readlink -f 4-Samtools/*.mapped.sorted.bam) >> $(readlink -f 4-Samtools/*.mapped.sorted.bam).idxstats
    mv 4-Samtools/*idxstats .
    rm -r 0-FastQC
    rm -r 1-AdapClip
    rm -r 3-Mapper
    rm -r 4-Samtools
    rm -r 5-DeDup
    rm -r 6-QualiMap
    rm -r 7-DnaDamage
    rm DONE.CleanUpRedundantData
    rm EAGER.log
done
```

And for multiple samples:

```bash
## Find the EAGER configuration XML of each library to clean up
SAMPLES=($(find -name '2019*.xml' -type f -exec readlink -f {} \;))
SAMTOOLS=<PATH_TO>/samtools_1.3/bin/samtools

## Move the files to keep to the top level of each library directory
for DIR in "${SAMPLES[@]}"; do
    cd "$(dirname "$DIR")"
    mkdir fastqc
    mv 0-FastQC/*zip fastqc
    mv 1-AdapClip/*settings .
    mv 4-Samtools/*mapped.bam.stats .
    mv 4-Samtools/*extractunmapped.bam .
    mv 5-DeDup/*.mapped.sorted.cleaned.hist .
    mv 5-DeDup/*.log .
    mv 6-QualiMap/*/ qualimap
    mv 7-DnaDamage/*/ damageprofiler
done

cd ..

## Generate idxstats, then remove the remaining EAGER working directories
for DIR in "${SAMPLES[@]}"; do
    cd "$(dirname "$DIR")"
    "$SAMTOOLS" idxstats $(readlink -f 4-Samtools/*.mapped.sorted.bam) >> $(readlink -f 4-Samtools/*.mapped.sorted.bam).idxstats
    mv 4-Samtools/*idxstats .
    rm -r 0-FastQC
    rm -r 1-AdapClip
    rm -r 3-Mapper
    rm -r 4-Samtools
    rm -r 5-DeDup
    rm -r 6-QualiMap
    rm -r 7-DnaDamage
    rm DONE.CleanUpRedundantData
    rm EAGER.log
done
```

⚠️ The per-sample EAGER output files are not provided here due to their large size.
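Because the second loop of the clean-up deletes EAGER's working directories with rm -r, it may be worth confirming first that the files moved by the first loop actually arrived. A minimal sketch of such a guard follows; the list of expected items is taken from the mv and idxstats commands above, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that a cleaned-up library directory contains the
# items the clean-up is expected to keep. Returns non-zero if any are missing.
cleanup_ready() {
    local dir="$1" status=0 pattern
    for pattern in '*mapped.bam.stats' '*extractunmapped.bam' '*.idxstats' 'qualimap' 'damageprofiler'; do
        # compgen -G succeeds only if the glob matches at least one path
        if ! compgen -G "$dir/$pattern" > /dev/null; then
            echo "MISSING in $dir: $pattern" >&2
            status=1
        fi
    done
    return "$status"
}

# Example usage inside the second loop, after the idxstats step and before any rm -r:
# cleanup_ready . || { echo "Skipping $DIR" >&2; continue; }
```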

As EAGER itself does not have the ability to convert BAMs of unmapped reads to FASTQ, we ran this manually for EAGER-preprocessed files with:

```bash
FILES=($(find -L 03-preprocessing/{screening,deep}/human_filtering/output/{FUM,GOY,PES,GDN}*.2/ -name '*.extractunmapped.bam' -type f))

## Write each BAM's reads to a gzipped FASTQ next to the original file
for FILE in "${FILES[@]}"; do
    samtools fastq "$FILE" | gzip > "$FILE".fq.gz
done
```

⚠️ The unmapped-read FASTQ files are not provided here due to their large size.
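A quick sanity check on the conversion is to compare the number of reads in each new FASTQ with the record count of its BAM (samtools view -c). For a BAM holding only primary, unmapped reads the two would be expected to match. The sketch below, assuming standard four-line FASTQ records as written by samtools fastq, counts the FASTQ side; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: number of reads in a gzipped FASTQ, assuming 4 lines per record.
fastq_gz_read_count() {
    local lines
    lines=$(gzip -dc "$1" | wc -l)
    echo $(( lines / 4 ))
}

# Example usage (compare with the BAM it came from):
# fastq_gz_read_count LIB.extractunmapped.bam.fq.gz
# samtools view -c LIB.extractunmapped.bam
```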

We then extracted the statistics of this pre-processing with the script 02-scripts.backup/005-statistics_human_filtering.sh. Once checked, we moved the resulting human_filtering_statistics.csv output file to our 00-documentation folder and renamed it 03-human_filtering_statistics.csv.

```bash
## Absolute path, so the script is still found after the cd below
SCRIPTS=$(readlink -f 02-scripts.backup)
cd 03-preprocessing/screening/human_filtering
"$SCRIPTS"/005-statistics_human_filtering.sh output/

## Copy to documentation folder
DATE=$(date +"%Y%m%d")
mv human_filtering_statistics_"$DATE".csv ..
cp ../human_filtering_statistics_"$DATE".csv ../../../00-documentation.backup/03-human_filtering_statistics_"$DATE".csv
```

Note: if you get a "Runtime error (func=(main), adr=6): Divide by zero" error, don't worry: this is related to the cluster factor calculation when there are no human reads after de-duplication. I manually filled these in with NA.
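The NA values were filled in by hand. If the affected cells are simply left empty in the statistics CSV (an assumption; check your own output first), the same could be done with awk, as in this minimal sketch. It assumes plain comma-separated fields with no quoted commas, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: replace empty comma-separated fields with NA and print the result.
fill_empty_with_na() {
    awk 'BEGIN { FS = OFS = "," }
         { for (i = 1; i <= NF; i++) if ($i == "") $i = "NA"; print }' "$1"
}

# Example usage:
# fill_empty_with_na human_filtering_statistics_<DATE>.csv > human_filtering_statistics_<DATE>.NA.csv
```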

The summarised results from this preprocessing can be seen in 06-additional_data_files under Data R06.

For the deep sequenced data, the EAGER report table was used for statistics reporting (see below for further information), and can be seen in 06-additional_data_files under Data R08.

Next, we merged together libraries that were sequenced multiple times, or individuals with multiple calculus samples.

A list of libraries that have been merged together can be seen for the screening data in 06-additional_data_files under Data R07 or in 00-documentation/04-library_merging_information.csv. For the production dataset, the same is either under Data R09 or at 00-documentation/17-samples_deep_library_merging_information_20190708.csv.

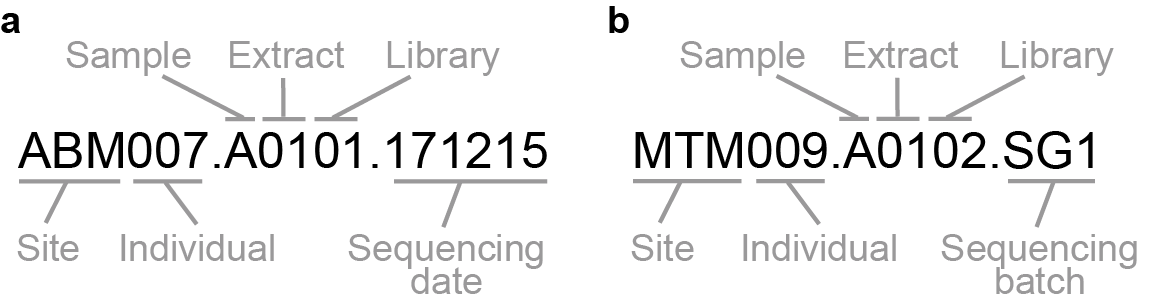

The structure of the MPI-SHH sample naming system can be seen here:

Library merging in this case can therefore be worked out by merging together any libraries that share the first six-character section of their names (the individual identifier). For example:

```
CDC005.A0101
CDC005.A0101.170817
```

or

```
ECO002.B0101
ECO002.C0101
```

Each example represents a single individual. The first shows the same library sequenced multiple times (indicated by the date string .170817); the second shows libraries from different samples of the same individual (indicated by the different letters after the period, .B and .C). In this study we did not consider possible differences in the tooth from which the calculus was sampled, and therefore pooled accordingly, either at sampling or in silico here.

First, we quickly symlinked all files for all individuals into our final output preprocessing directory (03-preprocessing/*/library_merging/output):

```bash
cd 03-preprocessing/screening/library_merging

## Make a directory for each library
for DIR in ../../screening/human_filtering/output/*/; do
    mkdir "$(echo "$DIR" | rev | cut -d/ -f2 | rev)/"
done

## Symlink the FASTQ files into the new directories above
for DIR in ../../screening/human_filtering/output/*/; do
    ln -s "$(readlink -f "$DIR")"/*fq.gz "$(echo "$DIR" | rev | cut -d/ -f2 | rev)/"
done
```

Then we removed the imported symlinks of the 'extra' samples/libraries that needed to be merged.

```bash
rm \
    ABM007.A0101/*.fq.gz \
    ABM008.A0101/*.fq.gz \
    BIT001.A0101/*.fq.gz \
    CDC005.A0101/*.fq.gz \
    CDC011.A0101/*.fq.gz \
    DJA006.A0101/*.fq.gz \
    DJA007.A0101/*.fq.gz \
    EBO008.A0101/*.fq.gz \
    EBO009.A0101/*.fq.gz \
    ECO002.B0101/*.fq.gz \
    ECO004.B0101/*.fq.gz \
    FDM001.A0101/*.fq.gz \
    GDN001.A0101/*.fq.gz \
    IBA001.A0101/*.fq.gz \
    IBA002.A0101/*.fq.gz \
    LOB001.A0101/*.fq.gz \
    MOA001.A0101/*.fq.gz \
    MTK001.A0101/*.fq.gz \
    MTM003.A0101/*.fq.gz \
    MTM010.A0101/*.fq.gz \
    MTM011.A0101/*.fq.gz \
    MTM012.A0101/*.fq.gz \
    MTM013.A0101/*.fq.gz \
    MTS001.A0101/*.fq.gz \
    MTS002.A0101/*.fq.gz \
    MTS003.A0101/*.fq.gz \
    TAF017.A0101/*.fq.gz \
    TAF018.A0101/*.fq.gz \
    WAL001.A0101/*.fq.gz
```

These extras were then merged into a single FASTQ file for each individual using cat, and placed in a new file in the output directories with the following commands:

```bash
INDIR=03-preprocessing/screening/human_filtering/output
OUTDIR=03-preprocessing/screening/library_merging

cat "$INDIR"/ABM007*/*.fq.gz >> "$OUTDIR"/ABM007.A0101/ABM007_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/ABM008*/*.fq.gz >> "$OUTDIR"/ABM008.A0101/ABM008_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/BIT001*/*.fq.gz >> "$OUTDIR"/BIT001.A0101/BIT001_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/CDC005*/*.fq.gz >> "$OUTDIR"/CDC005.A0101/CDC005_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/CDC011*/*.fq.gz >> "$OUTDIR"/CDC011.A0101/CDC011_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/DJA006*/*.fq.gz >> "$OUTDIR"/DJA006.A0101/DJA006_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/DJA007*/*.fq.gz >> "$OUTDIR"/DJA007.A0101/DJA007_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/EBO008*/*.fq.gz >> "$OUTDIR"/EBO008.A0101/EBO008_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/EBO009*/*.fq.gz >> "$OUTDIR"/EBO009.A0101/EBO009_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/ECO002*/*.fq.gz >> "$OUTDIR"/ECO002.B0101/ECO002_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/ECO004*/*.fq.gz >> "$OUTDIR"/ECO004.B0101/ECO004_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/FDM001*/*.fq.gz >> "$OUTDIR"/FDM001.A0101/FDM001_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/GDN001*/*.fq.gz >> "$OUTDIR"/GDN001.A0101/GDN001_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/IBA001*/*.fq.gz >> "$OUTDIR"/IBA001.A0101/IBA001_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/IBA002*/*.fq.gz >> "$OUTDIR"/IBA002.A0101/IBA002_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/LOB001*/*.fq.gz >> "$OUTDIR"/LOB001.A0101/LOB001_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/MOA001*/*.fq.gz >> "$OUTDIR"/MOA001.A0101/MOA001_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/MTK001*/*.fq.gz >> "$OUTDIR"/MTK001.A0101/MTK001_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/MTM003*/*.fq.gz >> "$OUTDIR"/MTM003.A0101/MTM003_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/MTM010*/*.fq.gz >> "$OUTDIR"/MTM010.A0101/MTM010_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/MTM011*/*.fq.gz >> "$OUTDIR"/MTM011.A0101/MTM011_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/MTM012*/*.fq.gz >> "$OUTDIR"/MTM012.A0101/MTM012_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/MTM013*/*.fq.gz >> "$OUTDIR"/MTM013.A0101/MTM013_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/MTS001*/*.fq.gz >> "$OUTDIR"/MTS001.A0101/MTS001_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/MTS002*/*.fq.gz >> "$OUTDIR"/MTS002.A0101/MTS002_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/MTS003*/*.fq.gz >> "$OUTDIR"/MTS003.A0101/MTS003_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/TAF017*/*.fq.gz >> "$OUTDIR"/TAF017.A0101/TAF017_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/TAF018*/*.fq.gz >> "$OUTDIR"/TAF018.A0101/TAF018_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
cat "$INDIR"/WAL001*/*.fq.gz >> "$OUTDIR"/WAL001.A0101/WAL001_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz
```

⚠️ The merged FASTQ files are not provided here due to their large size.
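The per-individual `rm` and `cat` commands above follow one pattern, so they could also be expressed as a function applied to a list of target directories. This is a hypothetical sketch (the name `merge_individual` is made up), not the commands that were actually run. One deliberate difference: it writes with `>` instead of `>>`, so re-running it does not duplicate reads.

```bash
#!/usr/bin/env bash
# Hypothetical helper: pool every library of one individual into a single FASTQ.
# Arguments: <input dir> <output dir> <target library directory name, e.g. ECO002.B0101>
merge_individual() {
  local indir=$1 outdir=$2 target=$3
  local id=${target:0:6}   # individual ID = first six characters of the name
  local out="$outdir/$target/${id}_S0_L000_R1_000.fastq.merged.prefixed.hg19unmapped.fq.gz"

  mkdir -p "$outdir/$target"
  # Remove previously symlinked (or merged) files so that no reads are counted twice
  rm -f "$outdir/$target"/*.fq.gz
  # Concatenated gzip streams are themselves valid gzip, so no decompression is needed.
  # '>' rather than '>>' makes re-running the function safe.
  cat "$indir/$id"*/*.fq.gz > "$out"
}
```

Usage would be a loop such as `for T in ABM007.A0101 ECO002.B0101; do merge_individual "$INDIR" "$OUTDIR" "$T"; done`, with the target directory name (not just the individual ID) passed in so that cases like ECO002.B0101 keep their original folder.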

For the screening data, the final number of reads going into downstream analysis is also recorded in the file 04-samples_library_merging_information.csv. For libraries sequenced twice (i.e. with a .SG.2 or a date suffix), I manually added the two values together.
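The manual summing of read counts per individual could be scripted along these lines. This is a minimal sketch assuming a hypothetical CSV with a header row and the columns `library,reads`; the real 04-samples_library_merging_information.csv has a different layout, and `sum_reads_per_individual` is a made-up name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: sum read counts of all libraries/runs per individual.
# Input: CSV with a header row and columns library,reads (an assumed layout).
sum_reads_per_individual() {
  awk -F',' 'NR > 1 { total[substr($1, 1, 6)] += $2 }
             END { for (id in total) print id "," total[id] }' "$1" |
    LC_ALL=C sort
}
```

Grouping on the first six characters applies the same rule as the library merging, so a re-sequenced library (date suffix) is summed into its individual automatically.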

For the deep sequencing data, the same concatenation of multiple samples and/or lanes was performed. However, the statistics were summarised across multiple libraries using the R notebook 02-scripts.backup/099-eager_table_individual_summarised.Rmd.

Visualisation of summary statistics can also be seen below.

The estimated human DNA content (and its GC content) could be somewhat off in some of the new libraries generated in this study, as we mostly sequenced on Illumina NextSeqs. These use a two-colour chemistry in which the absence of light emission is called as a 'G'. Thus, empty or finished clusters can be read as long poly-G reads, which can still map to the human genome in regions with long repetitive sequences. Long modern human contaminant reads on a failing flow cell cluster may also contain poly-G stretches if the fluorescence failed before the read was complete and before the adapter had been sequenced.

The poly-G issue is unlikely to affect our ancient microbial data, because these molecules should be so short that both ends of each read are entirely sequenced (the whole molecule with both adapters, or most of both adapters, would fit within 75 cycles). Adapter removal therefore removes everything after the adapter, including any poly-G tail. Poly-G tails would only affect single-index reads where the read itself is too short to reach the adapter, so that nothing indicates where the molecule ended.
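To get a rough idea of how many reads in a library carry such a tail before any trimming, a quick count of reads ending in a run of G's can help. This is a hypothetical diagnostic, not part of the workflow (the actual trimming used AdapterRemoval and FastP via the scripts listed below); `count_polyg_tails` and its default minimum run length of 10 are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical diagnostic: count reads whose sequence ends in a run of >= N 'G's,
# the signature of signal-less cycles on two-colour Illumina chemistry.
# Arguments: <FASTQ, gzipped or plain> [minimum poly-G length, default 10]
count_polyg_tails() {
  local fastq=$1 min_len=${2:-10}
  # gzip -dcf decompresses gzip input and passes plain text through unchanged
  gzip -dcf "$fastq" |
    awk 'NR % 4 == 2' |                   # keep only the sequence lines
    grep -cE "G{${min_len},}\$" || true   # grep -c exits 1 (but still prints 0) when nothing matches
}
```

Comparing this count before and after the FastP step gives a simple check that the poly-G trimming did what was intended.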

To get improved human DNA content calculations, we performed the following steps, the output of which was stored in 04-analysis/screening/eager under the polyGremoval* directories.

- AdapterRemoval with no quality trimming or merging, just adapter removal:

  ```bash
  sbatch 02-scripts.backup/099-polyGcomplexity_filter_preFastPprep.sh
  ```

- Clean up the output in 04-analysis/screening/eager/polyGremoval_input/output/ with:

  ```bash
  ## Delete everything except the FASTQ files; the subshell ensures find only runs inside this directory
  (cd 04-analysis/screening/eager/polyGremoval_input/output/ && find . ! -name '*fastq.gz' -type f -delete)
  ```

- Run FastP on the output of AdapterRemoval with:

  ```bash
  sbatch 02-scripts.backup/099-polyGcomplexity_filter.sh
  ```

- Finally, run the output of FastP through EAGER again. Note that we still needed to run AdapterRemoval (AR) to merge reads; re-clipping is not important as there should not be any adapters left, so we set the minimum adapter overlap to 11 (EAGER does not allow merging only). The EAGER settings were:

  - Organism: Human
  - Age: Ancient
  - Treated Data: non-UDG
  - Pairment: Single End (R1) or Paired End (R1/R2)
  - Input is already concatenated (skip merging): N
  - Concatenate Lanewise together: N (as lane concatenation already done)
  - Reference: HG19
  - Name of mitochondrial chromosome: chrMT
  - AdapterRemoval: N
  - Minimum adapter overlap: 11
  - Mapping: BWA
  - Seedlength: 32
  - Max # diff: 0.01
  - Qualityfilter: 0
  - Filter unmapped: On
  - Extract Mapped/Unmapped: Off
  - Remove Duplicates: DeDup
  - Treat all reads as merged: Y
  - Damage Calculation: Y
  - CleanUp: On
  - Create Report: On

To run the EAGER runs in parallel, we again used 02-scripts.backup/02-scripts.backup/021-eager_microbiota_slurm_array.sh, after updating the path in the script's find command to point to the corresponding EAGER XML directory.

The resulting human mapping data after poly-G removal can be seen in 00-documentation.backup/99-PolyGRemoved_HumanMapping_EAGERReport_output.csv.

Visualisation of summary poly-G trimming statistics can also be seen below.

⚠️ The EAGER run output files are not provided here due to their large size.

Sequencing quality control results for both screening and production datasets were generated with the notebook 02-scripts.backup/099-SequencingQCMetrics_v2.Rmd.

The following figures also describe a subset of these preprocessing summary statistics.

Figure R1 | Sequencing metric distributions of the screening dataset of ancient and modern calculus samples newly sequenced during this study. Each point represents a single individual (i.e. all samples, libraries and re-sequencing runs combined). a Raw sequencing read counts (prior to adapter removal and merging). b Pre-processed read counts after adapter removal and read merging. c Proportion of human DNA. d Count of non-human reads used for downstream analysis (pre-processed reads with human sequences removed).

Figure R2 | Sequencing read count distributions of the production dataset of ancient calculus samples newly sequenced during this study. Each point represents a single individual (i.e. all samples, libraries and re-sequencing runs combined). a Raw sequencing reads (prior to adapter removal and merging), b Number of reads used for downstream analysis (processed reads with human sequences removed).

The general metadata file for all main individual-level pre-processing statistics can be seen in 06-additional_data_files under Data R04. This file is typically used as input for all downstream analyses, when required.

The first step in analysing the microbiome content of dental calculus, controls and comparative samples is to perform taxonomic binning and classification. This allows us to rapidly identify which species are present in each library.

For taxonomic binning of reads of the screening dataset, we used MALT with a wrapper script for efficient submission. The settings set in 02-scripts/007-malt-genbank-nt_2017_2step_85pc_supp_0.01 are as follows:

- a read requires a minimum of 85% sequence identity (-id),
- it will only retain a second hit if the alignment is within 1% of the bit-score of the top-scoring hit (-top),
- it will only keep a leaf node on the tree if it has more than 0.01% of the hits over all the hits in the sample (-supp),
- it will only retain 100 possible hits per node.

Note that you may have to change the INSTALL4J_JAVA_HOME path within the script.
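To make the -top setting above concrete, here is a toy awk filter that keeps only the hits of one read whose bit-score lies within a given percentage of the best hit. It is an illustration of the rule as described above, not MALT's code (MALT applies this internally as part of its taxonomic assignment); the function name and the two-column input format are hypothetical.

```bash
#!/usr/bin/env bash
# Toy illustration of a "top percent" filter (not MALT's implementation):
# keep only hits whose bit-score is within <pct>% of the best hit of a read.
# Input on stdin: one hit per line, "<hit name><TAB><bit-score>", all for the same read.
top_percent_filter() {
  local pct=${1:-1}
  awk -F'\t' -v pct="$pct" '
    { name[NR] = $1; score[NR] = $2 + 0
      if (NR == 1 || score[NR] > best) best = score[NR] }
    END {
      cutoff = best * (1 - pct / 100)   # e.g. 200 * 0.99 = 198 for pct = 1
      for (i = 1; i <= NR; i++) if (score[i] >= cutoff) print name[i]
    }'
}
```

With bit-scores of 200, 199 and 197 and a 1% window, the cutoff is 198, so only the first two hits are retained.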

We then ran our taxonomic binning job with the following command, providing the input files (with a wildcard picking up all files merged to individual level) and an output directory.

```bash
02-scripts.backup/007-malt-genbank-nt_2017_2step_85pc_supp_0.01 \
  03-preprocessing/screening/library_merging/*/*.fq.gz \
  04-analysis/screening/malt/nt
```

This script aligns to the NCBI Nucleotide database (nt). For the custom RefSeq database, see below.

⚠️ The RMA6 files are not provided here due to their large size.

To extract the summary statistics for all the MALT runs, we ran the following command on the MALT logs produced by the script described above.

```bash
grep -e "Loading MEGAN File:" \
  -e "Total reads:" \
  -e "With hits:" \
  -e "Alignments:" \
  -e "Assig. Taxonomy" \
  -e "Min-supp. changes" \
  -e "Numb. Tax. classes:" \
  -e "Class. Taxonomy:" \
  -e "Num. of queries:" \
  -e "Aligned queries:" \
  -e "Num. alignments:" \
  -e "MinSupport set to:" \
  04-analysis/screening/malt/nt/*log | cut -d":" -f 2-99 > 00-documentation.backup/99-maltAlignedReadsSummary_raw_nt_$(date "+%Y%m%d").txt
```

Note: if some runs failed, or if you re-ran single samples and repeated the command, remove the entries of the failed runs from the output after running the grep command.
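To spot such failed runs before collecting the statistics, one option is to list log files that lack one of the expected summary lines. This is a hypothetical check: it assumes that a failed or incomplete MALT run does not write a "Total reads:" line, and `find_incomplete_malt_logs` is a made-up name.

```bash
#!/usr/bin/env bash
# Hypothetical check: list MALT log files that lack a "Total reads:" line,
# assuming such logs come from failed or incomplete runs.
find_incomplete_malt_logs() {
  local logdir=$1
  # -L prints the names of files WITHOUT a match; '|| true' ignores grep's exit status
  grep -L "Total reads:" "$logdir"/*log || true
}
```

Running it on 04-analysis/screening/malt/nt before the grep command above shows which samples need to be re-run or removed from the summary.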

To clean up the output of the grep command and perform additional calculations, we ran the R script 02-scripts.backup/099-MALT_Summary_statistics.R, the output of which is recorded in 00-documentation.backup/05-MALT_taxonomic_binning_summary_statistics_nt.tsv for nt, and in 00-documentation.backup/05-MALT_taxonomic_binning_summary_statistics_refseq_bacharchhomo_gcs_20181122.tsv for RefSeq.

The MinSupport value(s) were then manually added as column(s) for each individual in our final main screening metadata file 00-documentation/02-calculus_microbiome-deep_evolution-individualscontrolssources_metadata_20190523.tsv.

Summary statistics on the number of reads assigned per individual by MALT against the nt and RefSeq databases can be seen below.

Once MALT had completed, we needed to generate an OTU table of all the alignments.

For this we used MEGAN6, the GUI tool on which MALT is based, on our local machine. To generate the OTU table, we opened the MALT RMA6 files in MEGAN with absolute counts, and ignored all unassigned reads.

To un-collapse the tree, we selected the following in the toolbar: Tree > Rank > <Taxonomic level>, and then selected the nodes at that level with Select > Rank > <Taxonomic level>.

Then, using File > Export > Text (CSV) format, we selected taxonName_to-count, summarised, and tab as the separator.

For a 'microbial-only' OTU table (excluding eukaryotes and synthetic DNA sequences), we un-collapsed the tree by first selecting 'collapse non-prokaryotes' under the 'Tree' menu, and then Select > Rank > <Taxonomic level> to select only Bacteria and Archaea. We also exported a tree based on the same data with File > Export > Tree, and saved it in Newick format. This was also done for each prokaryote taxonomic level.

These were saved in 04-analysis/screening/megan.backup; additionally, the corresponding .megan, .nwk and OTU tables at various taxonomic levels (as exported by MEGAN) for both the nt and RefSeq databases can be seen in 06-additional_data_files under Data R11.

For faster access to OTU tables at different stages of downstream analysis, raw MALT OTU tables with and without badly-preserved individuals (see below) and at different minimum support values (see below) were generated with the notebook 02-scripts.backup/016-MALT_otutable_generation.Rmd. These tables are stored as .tsv files in the 04-analysis/screening/megan.backup directory, as well as in 06-additional_data_files under Data R11.

We were also interested in whether there were any overall patterns between the samples in terms of broad taxonomic content.

Visualisation and statistical testing of whether the ratio of prokaryotic to eukaryotic reads differs between well-preserved and badly-preserved samples can be seen in 02-scripts.backup/099-cumulativedecay_vs_sourcetracker.Rmd.

Visualisation of this analysis can be seen below.
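The ratio itself is simple arithmetic; a minimal sketch of computing it (and its log10, matching the log-scaled axes of the figures) per sample is shown below. The input layout is an assumption: a header-less TSV of sample, Bacterial/Archaeal/Viral read count and Eukaryotic read count. `prok_euk_ratio` is a hypothetical name, and the notebook above remains the actual implementation.

```bash
#!/usr/bin/env bash
# Hypothetical helper: ratio of Bacterial/Archaeal/Viral to Eukaryotic reads, and its log10.
# Input: TSV without header, columns sample<TAB>bac_arch_vir_reads<TAB>eukaryotic_reads
prok_euk_ratio() {
  awk -F'\t' '
    $2 > 0 && $3 > 0 { r = $2 / $3; printf "%s\t%.2f\t%.2f\n", $1, r, log(r) / log(10) }
    !($2 > 0 && $3 > 0) { printf "%s\tNA\tNA\n", $1 }   # ratio or its log undefined
  ' "$1"
}
```

A sample with 1000 Bacterial/Archaeal/Viral reads and 10 Eukaryotic reads, for example, gets a ratio of 100 and a log10 ratio of 2.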

To get an overview of the 'mapability' of the (merged) samples used in this study, we generated summary statistics of the number of taxonomically assigned reads, and compared the two MALT databases. This was performed with the R notebook 02-scripts.backup/099-MALTAssignmentResults.Rmd.

Summary figures are below. Please see the main publication for further discussion.

Figure R3 | Comparison of the mean number of taxonomically assigned non-human reads across all sources, laboratory controls and comparative sample groups. Taxonomic assignment is from aligning to the NCBI nt (2017) and a custom NCBI RefSeq (2018) database using MALT. Colours correspond to calculus host genus. Blue: Alouatta; Purple: Gorilla; Green: Pan; Orange: Homo; Grey: non-calculus.

Figure R4 | Gardner-Altman plot showing differences in the mean percent of taxonomically assigned reads between the NCBI nt (2017) and a custom NCBI RefSeq (2018) database using MALT. Right-hand plot: the dot represents the mean difference between the two groups with its resampling distribution (5000 bootstraps); the vertical dark line on the dot represents the 95% confidence interval around the mean. Left-hand plot: slope graph showing the relationship between the mean percent of taxonomically assigned reads of the two databases per group. Y axis represents the mean percentage of taxonomically assigned reads within each group. a Comparison of the overall mean between all calculus samples, laboratory controls and comparative sources in this study. b Comparison of just dental calculus samples.

Figure R5 | Comparison of the mean percent of taxonomically assigned reads across different groups of humans, when aligning to the NCBI nt (2017) and a custom NCBI RefSeq (2018) database using MALT. Ancient sample groups are 'pre-agricultural' and 'pre-antibiotic' humans and are taken from skeletal remains, whereas Modern Day Human calculus and HMP plaque samples come from living individuals. Colours correspond to sample type. Orange: Homo calculus; Grey: non-calculus.

We also visualised the ratio of prokaryotic to eukaryotic content. See the main publication and below for further discussion of these observations.

Figure R6 | Comparison of ratios of alignments to Bacterial/Archaeal/Viral reference sequences vs. Eukaryotic reference sequences between all calculus, laboratory controls and comparative sources. Ratios are based on the number of reads aligned to the NCBI nt (2017) database using MALT. Y-axis is log10 scaled. Colours correspond to calculus host genus. Blue: Alouatta; Purple: Gorilla; Green: Pan; Orange: Homo; Grey: non-calculus.

Figure R7 | Comparison of ratios of alignments to Bacterial/Archaeal/Viral reference sequences vs. Eukaryotic reference sequences between different groups of humans. Ratios are based on the number of reads aligned to the NCBI nt (2017) database using MALT. Y-axis is log10 scaled. Ancient sample groups are 'pre-agricultural' and 'pre-antibiotic' humans and are taken from skeletal remains, whereas Modern Day Human calculus and HMP plaque samples come from living individuals. Colours correspond to calculus host genus. Orange: Homo; Grey: non-calculus.

A crucial part of any ancient DNA study is to control for the preservation of the DNA content of all analysed samples. Due to taphonomic processes, the original endogenous DNA can become highly degraded or even entirely lost. This can lead to samples containing only contaminant DNA, causing major skews and complications in downstream analysis. Identifying well-preserved samples containing a sufficient fraction of the original microbiome is critical, but it is equally important to confirm that their DNA is not derived from modern contamination.

The MALT OTU table(s) alone do not give us much information about the genetic preservation of the original oral signature within each individual.

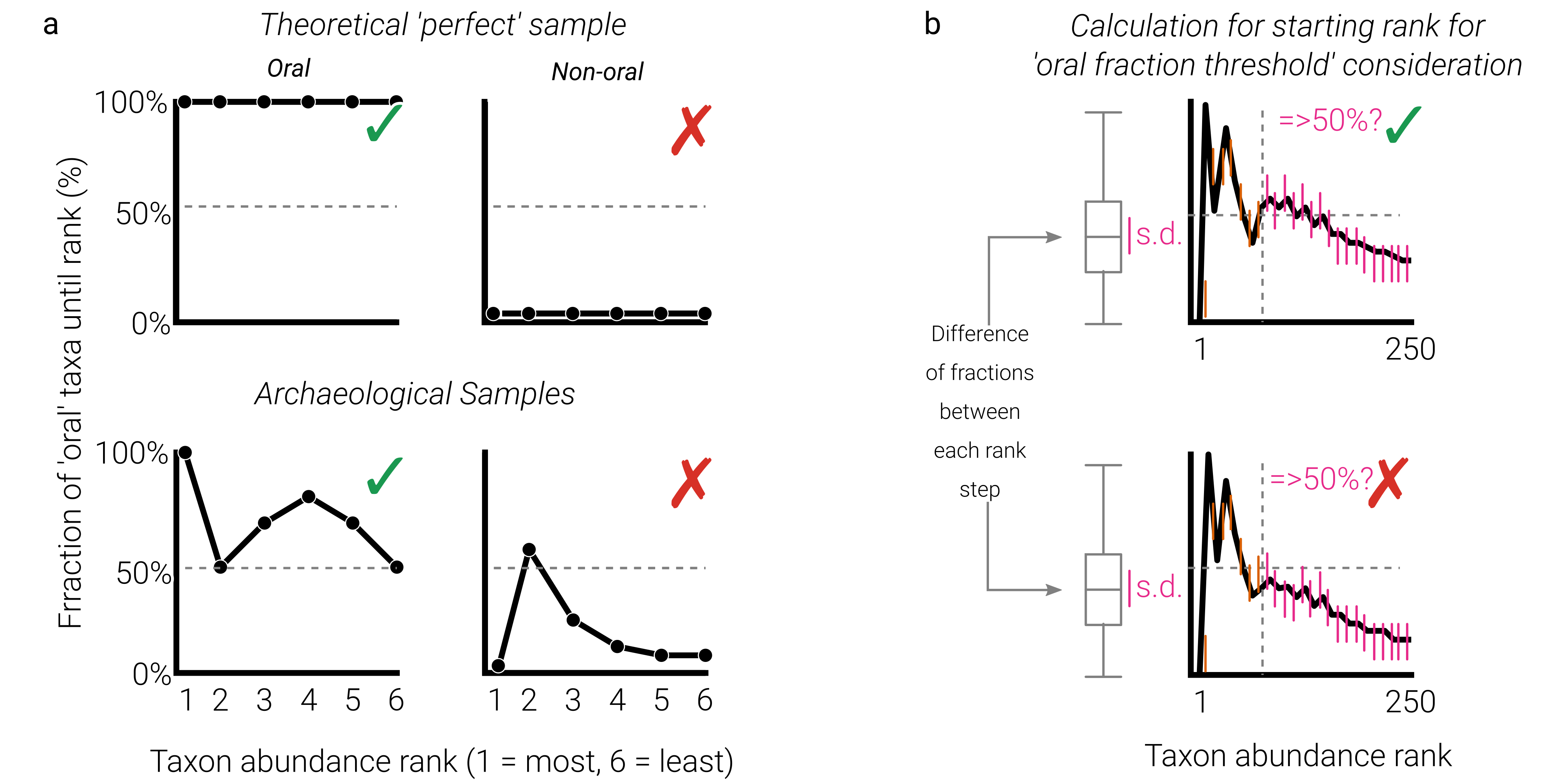

To get a rapid idea of the level of identifiable oral taxa in each individual, I came up with a simple visualisation to show how abundant the (hpoefully endogenous) oral signal in the samples are. It is based on the decay of the fraction of oral taxa identified when looking from most to least abundant taxa within a sample. The concept is further described in the main publication supplement, but a schematic on how to interpret them can be seen here:

Figure R8 | Schematic diagram of the cumulative percent decay method of preservation assessment. a Example curves of a theoretical sample consisting purely of oral taxa (top left), and a theoretical sample containing no oral taxa (top right). For the archaeological samples, a well-preserved sample (bottom left) will consist mostly of oral taxa but may include uncharacterised or contaminant taxa, leading to a non-linear relationship while remaining above an oral taxon fraction of 50% (as identified in modern plaque samples). An archaeological sample (bottom right) with no endogenous oral content will have few oral taxa, and may have occasional modern contaminants resulting in a non-linear relationship. b A representation of the method for calculating the rank from which to begin assessing whether a sample decay curve goes above the 'well-preserved' fraction threshold, accounting for high variation at higher ranks in samples of mixed preservation with both oral and non-oral/uncharacterised taxa. Given the large differences between the initial ranks due to small denominators, a 'burn-in'-like procedure is applied. The rank at which the change between subsequent ranks no longer exceeds the standard deviation of all rank differences is set as the rank from which it is assessed whether the sample curve exceeds the preservation threshold (here 50% for the NCBI nt OTU table). A curve that does not exceed this threshold at any point from this rank onwards is considered not to have sufficient preservation for downstream analysis.

This visualisation requires two objects: an OTU table from MEGAN at species level (generated above) and a database of taxa with their 'sources'.

To generate the database of oral taxa, I used the steps outlined in the notebook 02-scripts.backup/013-Organism_Isolation_Source_Database_Generation.Rmd.

Note that the database also requires manual curation over time, as more taxa are identified and their isolation sources reported.

The database used in this study can be seen in 06-additional_data_files under Data R16, or under 00-documentation as 07-master_oralgenome_isolationsource_database.tsv.
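Combining the two inputs amounts to flagging each taxon of a sample as oral or not and ordering taxa by abundance. The sketch below assumes hypothetical two-column TSVs (database: species and isolation source, with oral taxa labelled `oral`; sample: species and read count); the real database and the wide MEGAN species table have different layouts, and `annotate_oral` is a made-up name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: flag each taxon of one sample as oral (1) or not (0),
# ordered from most to least abundant.
# $1: database TSV, columns species<TAB>isolation_source (assumed layout)
# $2: sample TSV, columns species<TAB>read_count (assumed layout)
annotate_oral() {
  awk -F'\t' -v OFS='\t' '
    NR == FNR { if ($2 == "oral") oral[$1] = 1; next }   # first file: the database
    { print $1, (($1 in oral) ? 1 : 0), $2 }             # second file: the sample
  ' "$1" "$2" |
    sort -t "$(printf '\t')" -k3,3nr |   # most abundant taxon first
    cut -f1,2
}
```

Taxa absent from the database are treated as non-oral, which is why curating the database matters for this method.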

To actually generate the visualisations, the R notebook 02-scripts.backup/014-cumulative_proportion_decay_curves.Rmd describes how to calculate the percent decay curves, including burn-in calculations, and how to display the curves.
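The core calculation can be sketched for a single sample in bash and awk. This is a simplified illustration under stated assumptions, not the notebook's code: the input is a hypothetical header-less TSV of `taxon<TAB>is_oral` (1 or 0) already sorted by abundance; the burn-in rank is taken as the last rank whose change in oral percentage still exceeds the sample standard deviation of all consecutive changes (one reading of the description in Figure R8); and a sample passes if its curve rises above the threshold at or after that rank.

```bash
#!/usr/bin/env bash
# Simplified sketch of the cumulative percent decay check for ONE sample.
# Input (assumed format): "taxon<TAB>is_oral" lines, sorted from most to least abundant.
# Usage: decay_preservation <file> <threshold_percent>
decay_preservation() {
  awk -F'\t' -v thr="$2" '
    { oral += $2; pct[NR] = 100 * oral / NR }   # % oral taxa among the top NR taxa
    END {
      n = NR
      if (n < 3) { print "burnin=NA passed=NA"; exit }
      # absolute change in the oral percentage between consecutive ranks
      for (k = 2; k <= n; k++) {
        d[k] = pct[k] - pct[k - 1]
        if (d[k] < 0) d[k] = -d[k]
        sum += d[k]
      }
      mean = sum / (n - 1)
      for (k = 2; k <= n; k++) ss += (d[k] - mean) ^ 2
      sd = sqrt(ss / (n - 2))   # sample standard deviation of the n - 1 changes
      # burn-in rank: last rank whose change still exceeds sd (rank 1 if none does)
      burnin = 1
      for (k = n; k >= 2; k--) if (d[k] > sd) { burnin = k; break }
      passed = "FALSE"
      for (k = burnin; k <= n; k++) if (pct[k] > thr) { passed = "TRUE"; break }
      printf "burnin=%d passed=%s\n", burnin, passed
    }' "$1"
}
```

For a sample whose second most abundant taxon is non-oral and all others are oral, the large early jumps are skipped by the burn-in and the curve (which ends at 90% for ten taxa) passes a 50% threshold but not a 95% one.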

The resulting list(s) of individuals passing or failing this threshold can be seen in 06-additional_data_files under Data R17 or 04-analysis/screening/cumulative_decay.backup, and summaries of how many samples passed the calculated threshold are shown in the tables below.

Figure R9 | Cumulative percent decay plots of the fraction of oral taxa across taxa ordered by abundance rank. Taxonomic assignment against: a the NCBI nt database, and b a custom NCBI RefSeq database, showing that a large number of calculus samples display greater levels of preservation (blue), although a smaller number do not pass the estimated preservation threshold (red). Plots are limited to 250 rank positions (x-axis) for visualisation purposes. Thresholds are selected based on observations that no sources or controls rise above 50% (nt) and 65% (RefSeq) oral taxon fraction. The rank from which the per-sample threshold is assessed is based on when the fluctuation of the fraction of oral taxa (i.e. the fraction difference between a taxon and the next most abundant taxon) no longer exceeds the standard deviation of all rank differences.

Table R1 | Summary of sample counts passing preservation thresholds as implemented in the cumulative percent decay method. Preservation was assessed using the cumulative percent decay method with the fluctuation burn-in threshold based on the MALT OTU tables.

| Database | Host Group | Passed | Failed | Total Samples (n) | Passed (%) |
|---|---|---|---|---|---|
| nt | Howler | 5 | 0 | 5 | 100 |
| nt | Gorilla | 14 | 15 | 29 | 48.28 |
| nt | Chimp | 16 | 5 | 21 | 76.19 |
| nt | Neanderthal | 7 | 10 | 17 | 41.18 |
| nt | PreagriculturalHuman | 16 | 4 | 20 | 80 |
| nt | PreantibioticHuman | 13 | 1 | 14 | 92.86 |
| nt | ModernDayHuman | 18 | 0 | 18 | 100 |
| refseq | Howler | 5 | 0 | 5 | 100 |
| refseq | Gorilla | 12 | 17 | 29 | 41.38 |
| refseq | Chimp | 11 | 10 | 21 | 52.38 |
| refseq | Neanderthal | 7 | 10 | 17 | 41.18 |
| refseq | PreagriculturalHuman | 11 | 9 | 20 | 55 |
| refseq | PreantibioticHuman | 13 | 1 | 14 | 92.86 |
| refseq | ModernDayHuman | 18 | 0 | 18 | 100 |
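Counts of this kind can be derived from a per-sample pass/fail list. The sketch below assumes a hypothetical header-less TSV of sample, host group and status (`passed` or `failed`); the actual lists in Data R17 may be laid out differently, and `summarise_preservation` is a made-up name. Percentages are always printed with two decimals (e.g. 100.00 rather than 100).

```bash
#!/usr/bin/env bash
# Hypothetical helper: per-group Passed / Failed / Total / Passed (%) summary.
# Input: TSV without header, columns sample<TAB>host_group<TAB>status (passed or failed).
summarise_preservation() {
  awk -F'\t' '
    { total[$2]++; if ($3 == "passed") passed[$2]++ }
    END {
      for (g in total) {
        p = passed[g] + 0   # groups with no passing sample count as 0
        printf "%s\t%d\t%d\t%d\t%.2f\n", g, p, total[g] - p, total[g], 100 * p / total[g]
      }
    }' "$1" | LC_ALL=C sort
}
```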

Table R2 | Comparison of the number of individuals with matching good- or low-preservation assignments between the nt and custom RefSeq databases. Preservation was assessed using the cumulative percent decay method with the fluctuation burn-in threshold based on the MALT OTU tables.

| Population | Total Individuals in Population | Individuals with Matching Preservation Assignment | Individuals not Matching Preservation Assignment |
|---|---|---|---|
| Chimp_2 | 7 | 6 | 1 |
| Chimp_3 | 6 | 4 | 2 |
| Chimp_4 | 1 | 1 | 0 |
| Gorilla_1 | 15 | 13 | 2 |
| Gorilla_2 | 8 | 7 | 1 |
| Gorilla_3 | 6 | 5 | 1 |
| Howler_Monkey | 5 | 5 | 0 |
| ModernDayHuman_1 | 10 | 10 | 0 |
| ModernDayHuman_2 | 8 | 8 | 0 |
| Neanderthal | 17 | 15 | 2 |
| PreagriculturalHuman_1 | 10 | 8 | 2 |
| PreagriculturalHuman_2 | 10 | 7 | 3 |
| PreantibioticHuman_1 | 10 | 10 | 0 |
| PreantibioticHuman_2 | 4 | 4 | 0 |

We next wanted to compare our screening method to a less suitable but more established approach: Sourcetracker analysis. However, this typically uses an OTU table of 16S rRNA reads to estimate the proportion of the OTUs in a sample that derive from each of the sources.

As we have shotgun data, we instead extracted reads with sequence similarity to 16S rRNA sequences and ran Sourcetracker on those.

The script 02-scripts.backup/009-preprocessing-16s_mapping works very much the same way as the human DNA preprocessing, but maps the unmapped-only reads (i.e. the non-human reads from the preprocessing) to the SILVA database and converts the mapped reads to FASTA format, with a particular header format (>sample.name_1) that is compatible with QIIME.
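The header convention itself (sample name, underscore, running read number) can be illustrated with a short FASTQ-to-FASTA conversion. This is a hypothetical sketch of the format only; it does not reproduce the mapping performed by 009-preprocessing_16s_mapping.sh, and `fastq_to_qiime_fasta` is a made-up name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert FASTQ to FASTA with QIIME-style headers (>sample_1, >sample_2, ...).
# Arguments: <sample name> <FASTQ, gzipped or plain>
fastq_to_qiime_fasta() {
  local sample=$1 fastq=$2
  gzip -dcf "$fastq" |
    awk -v s="$sample" '
      NR % 4 == 1 { n++ }                      # header line of each FASTQ record
      NR % 4 == 2 { print ">" s "_" n; print }  # sequence line -> FASTA record
    '
}
```

The sample name before the final underscore is what QIIME later uses to assign each read to its sample in the OTU table.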

```bash
INDIR=02-scripts.backup/03-preprocessing/screening/library_merging
OUTDIR=02-scripts.backup/03-preprocessing/screening/silva_16s_reads
SCRIPTS=02-scripts.backup/02-scripts.backup

for LIBDIR in "$INDIR"/*/; do
  LIBNAME=$(basename "$LIBDIR")
  ## Skip libraries that already have an output directory
  if [ -d "$OUTDIR"/"$LIBNAME" ]; then
    printf "\n %s already processed \n\n" "$LIBNAME"
  else
    mkdir "$OUTDIR"/"$LIBNAME"
    "$SCRIPTS"/009-preprocessing_16s_mapping.sh "$OUTDIR"/"$LIBNAME" "$LIBDIR" "$LIBNAME"
    sleep 1
  fi
done
```

To get the number of 16S rRNA reads that mapped, we ran the script 02-scripts.backup/06-16s_extraction_statistics.sh, and copied the resulting file into our documentation directory with:

```bash
## Absolute path, so that the script can still be found after changing directory
SCRIPTS="$(pwd)"/02-scripts.backup/02-scripts.backup

cd 02-scripts.backup/03-preprocessing/screening

## Generate stats
"$SCRIPTS"/011-16s_extraction_statistics.sh silva_16s_reads/

## Copy into documentation folder
mv silva_16s_reads/16s_extraction_statistics_"$(date +"%Y%m%d")".csv .
cp 16s_extraction_statistics_"$(date +"%Y%m%d")".csv ../../00-documentation.backup/05-16s_extraction_statistics_"$(date +"%Y%m%d")".csv
```

⚠️ The mapping result files are not provided here due to their large size.

The summary statistics for the number of 16S reads identified can be seen in 06-additional_data_files under Data R12 or 00-documentation.backup/05-16s_extraction_statistics.csv, as well as in the figures below.

Figure R10 | Distributions of percentages of 16S rRNA mapping reads extracted out of all non-human reads across all samples in the dataset, when mapping to the SILVA database. Colours correspond to calculus host genus. Blue: Alouatta; Purple: Gorilla; Green: Pan; Orange: Homo; Grey: non-calculus.

Figure R11 | Distributions of percentages of 16S rRNA mapping reads extracted out of all non-human reads across human calculus and plaque libraries, by mapping to the SILVA database. Ancient sample groups are 'pre-agricultural' and 'pre-antibiotic' humans and are taken from skeletal remains, whereas Modern Day Human calculus and HMP plaque samples come from living individuals. Colours correspond to sample type. Orange: Homo calculus; Grey: non-calculus.

Summary statistic visualisation of mapping can be seen in 02-scripts.backup/099-16sResults.Rmd.

To assign taxonomic classifications to the identified 16S reads, we then used QIIME (v1.9) to cluster the reads by similarity and assign a taxonomic 'name'. We chose OTU clustering over the more recent (and more powerful/statistically valid) ASV (amplicon sequence variant) methods, because we are not dealing directly with amplicon data, and our reads carry damage that would affect the reliability of assignment. Finally, the more recent version of QIIME, QIIME2, has been made much less flexible for non-amplicon data (at the time of writing), and I could not work out how to adapt our data to it. For our rough preservation screening purposes, QIIME 1.9 was deemed sufficient.

Firstly, we made a combined FASTA file containing all the 16S reads from all the samples.

```bash
INDIR=02-scripts.backup/03-preprocessing/screening/silva_16s_reads
OUTDIR=04-analysis/screening/qiime/input

## Start from an empty file ('-f' avoids an error if it does not exist yet)
rm -f "$OUTDIR"/silva_16s_reads_concatenated.fna.gz
touch "$OUTDIR"/silva_16s_reads_concatenated.fna.gz

## Append the renamed 16S FASTA of every sample (concatenated gzip files remain valid gzip)
for SAMPLE in "$INDIR"/*; do
  find "$SAMPLE" -maxdepth 1 -name '*_renamed.fa.gz' -exec cat {} \; >> \
    "$OUTDIR"/silva_16s_reads_concatenated.fna.gz
done
```

⚠️ The concatenated FASTA file is not provided here due to its large size.
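A quick sanity check on the concatenated file is to count the reads per sample from the QIIME-style headers (>sample.name_N), and to compare these numbers against the 16S extraction statistics. The helper below is a hypothetical sketch, not part of the original workflow.

```bash
#!/usr/bin/env bash
# Hypothetical check: count reads per sample in a (gzipped) QIIME-style FASTA.
# Headers are assumed to look like >sample.name_N, where N is a running read number.
count_reads_per_sample() {
  gzip -dcf "$1" |
    awk '/^>/ { sub(/^>/, ""); sub(/_[0-9]+$/, ""); n[$0]++ }   # strip ">" and the trailing _N
         END { for (s in n) print s "\t" n[s] }' |
    LC_ALL=C sort
}
```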

For the OTU clustering itself, we additionally defined custom parameters that have been adapted for shotgun data by LMAMR in Oklahoma. These are stored in the file 02-scripts.backup/010-params_CrefOTUpick.txt, as well as in the table below.

Table R3 | Non-default parameters used for QIIME closed-reference clustering.

| Parameter | Value |
|---|---|
| pick_otus:max_accepts | 100 |
| pick_otus:max_rejects | 500 |
| pick_otus:word_length | 12 |
| pick_otus:stepwords | 20 |
| pick_otus:enable_rev_strand_match | TRUE |

To actually run the clustering analysis and generate our OTU table we ran the following.

```bash
INDIR=04-analysis/screening/qiime/input
OUTDIR=04-analysis/screening/qiime/output
GREENGENESDB=<PATH_TO>/qiime_default_reference/gg_13_8_otus/
SCRIPTS=02-scripts.backup/02-scripts.backup

gunzip "$INDIR/silva_16s_reads_concatenated.fna.gz"

## -a: run in parallel, starting 16 jobs (-O 16)
pick_closed_reference_otus.py \
  -i "$INDIR"/silva_16s_reads_concatenated.fna \
  -o "$OUTDIR"/otu_picking \
  -a \
  -O 16 \
  -r "$GREENGENESDB"/rep_set/97_otus.fasta \
  -t "$GREENGENESDB"/taxonomy/97_otu_taxonomy.txt \
  -p "$SCRIPTS"/010-qiime_1_9_1_params_CrefOTUpick.txt
```

Once finished, we re-gzipped the input FASTA file to save disk space with:

```bash
INDIR=04-analysis/screening/qiime/input
pigz -p 4 "$INDIR/silva_16s_reads_concatenated.fna"
```

To get some basic statistics about the OTU picking, we used the BIOM package that came with the QIIME environment.

```bash
OUTDIR=04-analysis/screening/qiime/output

biom summarize-table -i "$OUTDIR"/otu_picking/otu_table.biom > \
  "$OUTDIR"/otu_picking/otu_table_summary.txt

## Check with:
head -n 20 "$OUTDIR"/otu_picking/otu_table_summary.txt
```

This summary can also be seen in 06-additional_data_files under Data R13.

Sourcetracker (I realised later) does not perform rarefaction properly, as it allows sampling of the OTUs with replacement. Therefore, we needed to manually remove samples with fewer than 1000 OTU-assigned reads (1000 being the default rarefaction depth in Sourcetracker; see below). The table summary in Data R13 shows that, fortunately, this removes very few samples, and mostly blanks.
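Before running the QIIME filtering step, the samples that would be kept can be previewed from a TSV version of the OTU table (for example one produced with `biom convert -i otu_table.biom -o otu_table.txt --to-tsv`). This is a hypothetical sketch: it assumes samples are in columns after the "#OTU ID" column, with no taxonomy column, and `samples_meeting_depth` is a made-up name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the sample IDs whose total OTU count is >= a minimum depth.
# Input: TSV OTU table with a "#OTU ID" header row and one column per sample (assumed).
samples_meeting_depth() {
  local table=$1 min=${2:-1000}
  awk -F'\t' -v min="$min" '
    /^# Constructed from biom file/ { next }   # comment line written by biom convert, if present
    /^#OTU ID/ { for (i = 2; i <= NF; i++) name[i] = $i; ncol = NF; next }
    { for (i = 2; i <= ncol; i++) sum[i] += $i }
    END { for (i = 2; i <= ncol; i++) if (sum[i] >= min) print name[i] }
  ' "$table"
}
```

The total per sample column is the same quantity used as the rarefaction depth, which is why the cut-off is set to 1000.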

To filter the table, we ran:

```bash
INDIR=04-analysis/screening/qiime/output/otu_picking

## Remove samples with a total count below 1000
filter_samples_from_otu_table.py \
  -i "$INDIR"/otu_table.biom \
  -o "$INDIR"/otu_table_1000OTUsfiltered.biom \
  -n 1000

biom summarize-table -i "$INDIR"/otu_table_1000OTUsfiltered.biom > \
  "$INDIR"/otu_table_1000OTUsfiltered_summary.txt

## Check with:
head -n 20 "$INDIR"/otu_table_1000OTUsfiltered_summary.txt
```

We also only wanted to look at genus-level assignments, given that species-level assignments could be unreliable due to damage and differing mixtures of strains across individuals.

To collapse the table to genus (L6) level, we then ran:

```bash
INDIR=04-analysis/screening/qiime/output/otu_picking
cd "$INDIR"

## Collapse to genus (L6) level; -a outputs absolute counts rather than relative abundances
summarize_taxa.py \
  -i otu_table_1000OTUsfiltered.biom \
  -o . \
  -a \
  -L 6

## Remove the stray leading quote of one taxon name ('Solanum), which breaks loading into R when running Sourcetracker
sed "s#'Solanum#Solanum#g" otu_table_1000OTUsfiltered_L6.txt > otu_table_1000OTUsfiltered_L6_cleaned.tsv
```

With this final OTU table (available in both BIOM and TSV format in 06-additional_data_files under Data R14, or in 04-analysis/screening/qiime/output/otu_picking), we could now run Sourcetracker.

Figure R12 | Distributions of the number of OTUs identified after closed-reference clustering of 16S rRNA reads across all calculus, laboratory controls and comparative sources in this study. Clustering was performed in QIIME at 97% identity. Colours correspond to calculus host genus. Blue: Alouatta; Purple: Gorilla; Green: Pan; Orange: Homo; Grey: non-calculus.

Figure R13 | Distributions of the number of OTUs identified after closed-reference clustering of 16S rRNA read sequences at 97% sequence similarity in QIIME across human calculus and plaque samples. Ancient sample groups are 'pre-agricultural' and 'pre-antibiotic' humans and are taken from skeletal remains, whereas Modern Day Human calculus and plaque samples come from living individuals. Colours correspond to sample type. Orange: Homo calculus; Grey: non-calculus.

Summary statistic visualisation of the clustering was generated with the R Markdown notebook 02-scripts.backup/099-16sResults.Rmd.

With the OTU tables, we could now compare the environmental sources (plaque, gut, skin, sediment, etc.) to each of the calculus samples and estimate the proportion of each sample that resembles each source. This can help indicate the level of preservation of (hopefully) endogenous oral content in each sample.

Sourcetracker requires an OTU table (generated above) and a metadata file that tells the program which libraries in the OTU table are a 'sink' or a 'source'. The metadata file used in this case is recorded in 02-scripts.backup/02-microbiome_calculus-deep_evolution-individualscontrolssources_metadata.tsv, which I then tried to use for all downstream analyses. In particular, here we needed to ensure there was an 'Env' and a 'SourceSink' column.
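For illustration, a minimal mapping file with the two required columns might look like the toy file below, together with a quick sanity check. The sample IDs and Env labels are hypothetical placeholders, not the study's metadata, and the check assumes the lower-case `source`/`sink` values used by Sourcetracker's example files; `check_st_mapping` is an illustrative helper, not part of Sourcetracker.

```bash
# Hypothetical toy mapping file: sample IDs and Env labels are placeholders.
{
  printf '#SampleID\tEnv\tSourceSink\n'
  printf 'plaque_src01\tplaque\tsource\n'
  printf 'gut_src01\tgut\tsource\n'
  printf 'skin_src01\tskin\tsource\n'
  printf 'sediment_src01\tsediment\tsource\n'
  printf 'calculus_smp01\tcalculus\tsink\n'
} > toy_sourcetracker_map.tsv

# Check that Env and SourceSink columns exist and that every SourceSink value
# is "source" or "sink". Returns a non-zero exit status on failure.
check_st_mapping() {
  awk -F'\t' '
    NR == 1 {
      for (i = 1; i <= NF; i++) col[$i] = i
      if (!("Env" in col) || !("SourceSink" in col)) { print "missing Env/SourceSink column"; bad = 1; exit }
      ss = col["SourceSink"]
      next
    }
    $ss != "source" && $ss != "sink" { print "unexpected SourceSink value on line " NR; bad = 1 }
    END { exit bad }
  ' "$1"
}

check_st_mapping toy_sourcetracker_map.tsv && echo "mapping file OK"
```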

To run Sourcetracker, we then used the following command:

```bash
## Set input, output and metadata paths (run from the repository root)
INDIR=04-analysis/screening/qiime/output/otu_picking
OUTDIR=04-analysis/screening/sourcetracker.backup
MAPPINGFILE=00-documentation.backup/02-calculus_microbiome-deep_evolution-individualscontrolssources_metadata_20190429.tsv

## -r 1000: rarefaction depth (see above)
Rscript \
  sourcetracker_for_qiime.r \
  -i "$INDIR"/otu_table_1000OTUsfiltered_L6_cleaned.tsv \
  -m "$MAPPINGFILE" \
  -o "$OUTDIR"/otu_table_L6_1000_"$(date +"%Y%m%d")" \
  -r 1000 \
  -v
```

To plot these results and compare them to the cumulative percent decay plots, I used the R notebook 02-scripts.backup/099-cumulativedecay_vs_sourcetracker.Rmd. Discussion of the comparison can be seen in the main publication; however, there was generally good concordance between the two approaches.

The final proportions (with standard deviations) can be seen in 06-additional_data_files under Data R18.

Figure R14 | Stacked bar plots representing the estimated proportion of each sample resembling a given source, as estimated by Sourcetracker, across all calculus samples. Visual inspection shows general concordance between the cumulative percent decay method and the Sourcetracker estimates. Coloured label text indicates whether that sample passed (grey) or failed (black) the cumulative percent decay threshold (see above) based on alignments to the NCBI nt (2017) database.

Returning to the MALT tables and cumulative percent decay plots, we had also observed that older samples, and those with weaker indication of oral content, appeared to have a lower ratio of prokaryotic to eukaryotic alignments (i.e. relatively more eukaryotic alignments).

We also explored whether this ratio could be used as an additional validation of oral microbiome preservation in ancient samples. Visualisation and statistical testing of these ratios can be seen in the figure below, and were generated as described in the R notebook 02-scripts.backup/099-cumulativedecay_vs_sourcetracker.Rmd. For discussion of the results, please refer to the main publication.
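The ratio itself is simple arithmetic: the summed bacterial, archaeal and viral read counts divided by the eukaryotic read count per sample. A minimal sketch, assuming a hypothetical per-sample TSV of domain-level MALT read counts (the column layout, sample names and `prok_euk_ratio` are illustrative, not files from this repository):

```bash
# Hypothetical toy input: per-sample read counts per domain (header first).
printf 'sample\tBacteria\tArchaea\tViruses\tEukaryota\n' > toy_domain_counts.tsv
printf 'wellpreserved\t9000\t500\t500\t1000\npoorlypreserved\t2000\t0\t0\t4000\n' >> toy_domain_counts.tsv

# Ratio of bacterial + archaeal + viral reads to eukaryotic reads per sample.
prok_euk_ratio() {
  awk -F'\t' -v OFS='\t' '
    NR == 1 { print "sample", "ratio"; next }   # replace the input header
    {
      nonEuk = $2 + $3 + $4                      # bacteria + archaea + viruses
      ratio = ($5 > 0) ? nonEuk / $5 : "NA"      # avoid division by zero
      print $1, ratio
    }
  ' "$1"
}

# wellpreserved -> 10, poorlypreserved -> 0.5
prok_euk_ratio toy_domain_counts.tsv
```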

Figure R15 | Comparison of ratios of bacterial/archaeal/viral to eukaryotic alignments between ancient calculus samples that passed or failed the cumulative percent decay cut-off for preservation. Samples not passing the preservation threshold, as estimated with the cumulative percent decay plots, tend to have smaller ratios and therefore greater proportions of reads assigned to eukaryotes. Ratios are based on the number of reads aligned to the NCBI nt (2017) database using MALT.

To rapidly screen for damage patterns indicative of ancient DNA in the observed core microbiome (see below), we ran MaltExtract on all MALT output, using the core microbiome taxa as the input list.

We then developed MEx-IPA to rapidly visualise ancient DNA characteristics across all samples and core taxa.

The results files for this analysis can be seen in 04-analysis/screening/maltExtract/output/AnthropoidsHominidaeHoiminini_core_20190509.

⚠️ the text files in this directory are gzipped and must be decompressed before loading into MEx-IPA!

If you do not wish to clone this whole repository (which is very large), you can use the following suggestions from Stack Overflow. However, in case that link doesn't work, the most stable method is to use Subversion (svn):

```bash
svn checkout https://github.com/jfy133/Hominid_Calculus_Microbiome_Evolution/trunk/04-analysis/screening/maltExtract/output/AnthropoidsHominidaeHoiminini_core_20190509

## Decompress all gzipped result files inside the checked-out directory
cd AnthropoidsHominidaeHoiminini_core_20190509
find . -name '*.gz' -type f -exec gunzip {} \;
```

Then copy and paste `<path>/<to>/AnthropoidsHominidaeHoiminini_core_20190509` into MEx-IPA to load the results.

Example reports for the two oldest Neanderthals (PES001 and GDN001) can be seen below in Figure R16; for multiple known oral species, both individuals display characteristics indicative of true endogenous DNA.

Figure R16 | Example MEx-IPA reports from the MaltExtract tool of the HOPS pipeline for two Neanderthal individuals, across four known oral microbiome taxa. Each individual-taxon combination shows: C to T misincorporation lesions indicative of DNA deamination; a read length distribution with a typical peak <50 bp, indicative of fragmented DNA; and edit distance and percent identity to the given reference, which in most cases both show close similarity to the oral reference genome (edit distance peak at 1-2; percent identity peak at 95%). Note that Fretibacterium fastidiosum shows a higher edit distance and lower percent identity, suggesting the reads are likely derived from a relative of that taxon that does not have a genome represented in the database used (NCBI nt 2017).

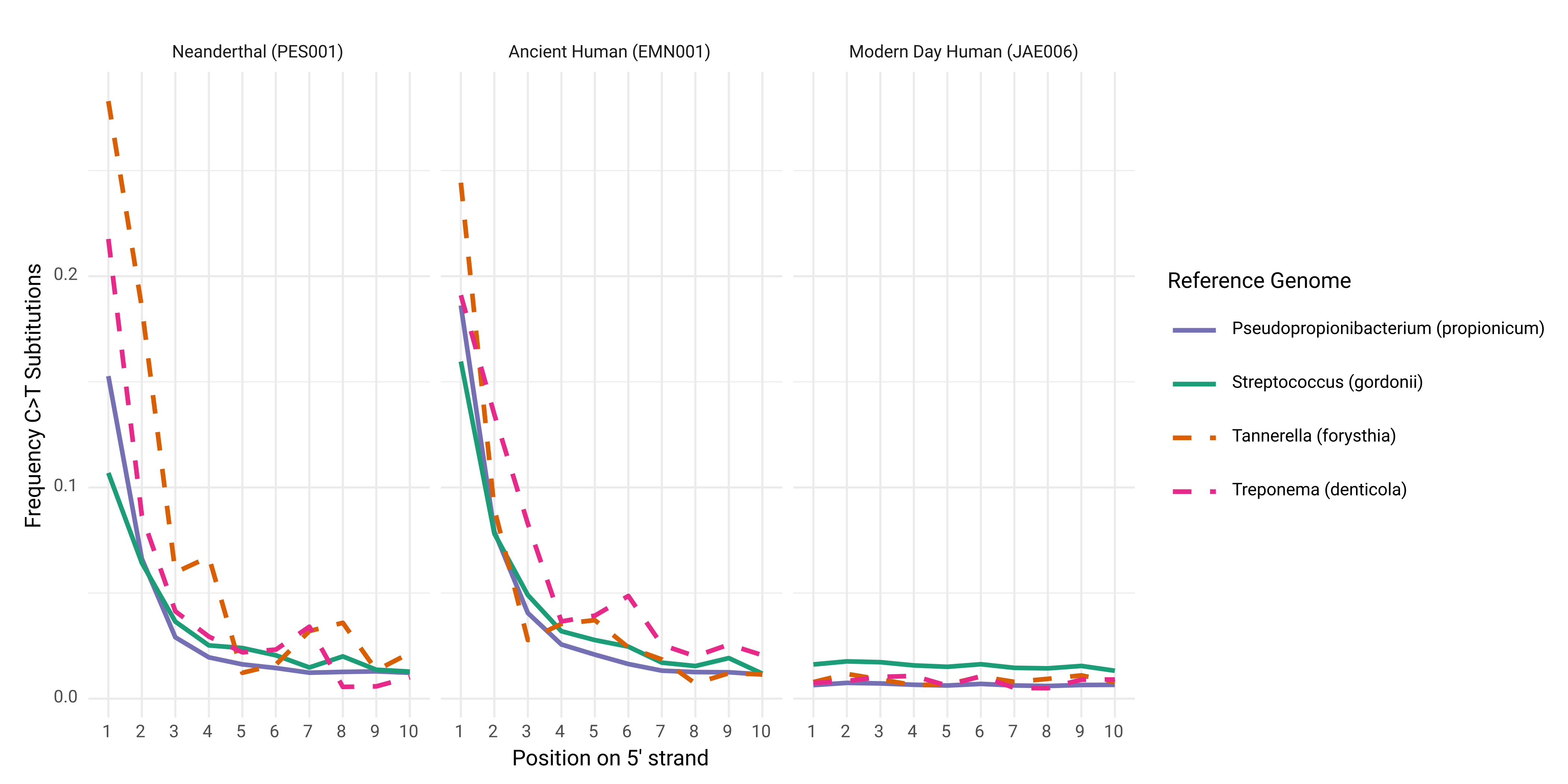

To further confirm damage patterns in oral taxa, the screening data were also mapped with EAGER to the reference genomes of a subset of the observed core microbiome (see below).

DamageProfiler results were collated and visualised with the R script 02-scripts.backup/099-Coretaxa_SubSet_DamageProfiler_Summary.R. An example of the range of damage signals in ancient human remains can be seen below in Figure R17, again showing the presence of well-preserved endogenous DNA from multiple oral taxa.

Figure R17 | Frequency of C to T misincorporations at the 5' ends of DNA reads aligned to references of four representative human oral-specific species, as calculated by DamageProfiler. Neanderthal and Upper Palaeolithic individuals show damage patterns indicative of authentic aDNA, whereas a modern-day individual does not.

The collated results for the whole screening dataset are stored in the file 00-documentation.backup/14-damageprofiler_screening_3p_5p_summaries_20191113.csv.
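A common summary when collating damage profiles is the C to T frequency at the first 5' base of each sample. The sketch below pulls that value out of several per-sample tables. It assumes a hypothetical two-column tab-separated layout (position, frequency; 1-based positions; optional `#` comment lines), which should be checked against the actual DamageProfiler output before use; the file names and `first_position_ct` are illustrative.

```bash
# Hypothetical toy inputs: two-column TSV (position, C>T frequency) with a
# comment line and a header. The real DamageProfiler layout may differ.
printf '# toy DamageProfiler-like table\npos\tCtoT\n1\t0.25\n2\t0.12\n' > toy_sampleA_5pCtoT.tsv
printf '# toy DamageProfiler-like table\npos\tCtoT\n1\t0.01\n2\t0.01\n' > toy_modern_5pCtoT.tsv

# Print "<file><TAB><frequency at position 1>" for each input file.
first_position_ct() {
  for f in "$@"; do
    awk -F'\t' -v OFS='\t' -v name="$f" '
      /^#/ { next }                        # skip comment lines
      $1 == "1" { print name, $2; exit }   # assumed 1-based: first base at the 5 prime end
    ' "$f"
  done
}

first_position_ct toy_sampleA_5pCtoT.tsv toy_modern_5pCtoT.tsv
```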

In addition to identifying well-preserved samples, we can also use the R package decontam to remove OTUs that are likely derived from the laboratory environment from our samples' OTU tables. The aim here is to reduce the number of noisy taxa in the downstream compositional analysis, e.g. to avoid false-positive clustering due to laboratory batch effects.

The method in the decontam package uses library quantification information to identify OTUs whose relative abundance is inversely correlated with DNA quantity, as laboratory-derived taxa appear more abundant in (low-input) controls than in true samples.
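The intuition behind this frequency-based approach can be sketched as a comparison of two simple models on log scale for a single OTU: a contaminant model in which its relative frequency is inversely proportional to DNA concentration (log f = a - log C), and a non-contaminant model in which frequency is independent of concentration (log f = b). The sketch below only reports the ratio of residual sums of squares (values well below 1 favour the contaminant model); decontam itself turns such a comparison into a score and applies a threshold, so treat this as a simplified illustration rather than the package's implementation. The input layout and `decontam_like_ratio` are hypothetical, and frequencies and concentrations must be positive.

```bash
# Toy inputs (no header): DNA concentration<TAB>relative frequency of one OTU.
printf '1\t0.08\n2\t0.04\n4\t0.02\n8\t0.01\n' > toy_contaminant_otu.tsv
printf '1\t0.05\n2\t0.051\n4\t0.049\n8\t0.05\n' > toy_noncontaminant_otu.tsv

# Ratio of residual sums of squares: contaminant model / non-contaminant model.
decontam_like_ratio() {
  awk -F'\t' '
    {
      lc[NR] = log($1); lf[NR] = log($2)
      sum_c += lf[NR] + lc[NR]   # contaminant model intercept: log f = a - log C
      sum_n += lf[NR]            # non-contaminant model intercept: log f = b
    }
    END {
      a = sum_c / NR; b = sum_n / NR
      for (i = 1; i <= NR; i++) {
        rss_c += (lf[i] + lc[i] - a) ^ 2
        rss_n += (lf[i] - b) ^ 2
      }
      if (rss_n == 0) { print "NA"; exit }
      printf "%.4f\n", rss_c / rss_n
    }
  ' "$1"
}

decontam_like_ratio toy_contaminant_otu.tsv      # near 0: contaminant-like
decontam_like_ratio toy_noncontaminant_otu.tsv   # well above 1: not contaminant-like
```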

We manually added the library quantification values (qPCR) to our main metadata file 02-scripts.backup/02-microbiome_calculus-deep_evolution-individualscontrolssources_metadata.tsv.

We then ran decontam following the decontam tutorial vignette on Bioconductor, as described in 02-scripts.backup/015-decontam_contamination_detection_analysis.Rmd.

The final list of contaminants for all methods and databases can be seen in 06-additional_data_files under Data R19 and in 04-analysis/screening/decontam.backup, with a summary below in Table R4.

These OTUs were subsequently removed from the OTU tables in all downstream analyses where indicated.

Table R4 | Summary of OTUs detected as potential contaminants by the R package decontam, across each shotgun taxonomic binner/classifier and database. MetaPhlAn2 was run for the functional analysis below; its contaminants are listed for reference only and were not removed downstream, due to the unknown effects of removing them on HUMAnN2 results.

| Binning Method | Database | Taxonomic Level | Total OTUs Detected (n) | Contaminants Detected (n) | Contaminants Detected (%) |
|---|---|---|---|---|---|
| megan | nt | genus | 1392 | 651 | 46.77 |
| megan | nt | species | 3401 | 1557 | 45.78 |
| megan | refseq | genus | 1241 | 630 | 50.77 |
| megan | refseq | species | 5195 | 2183 | 42.02 |
| metaphlan2 | metaphlan2 | genus | 675 | 121 | 17.93 |
| metaphlan2 | metaphlan2 | species | 1626 | 310 | 19.07 |

We observed a high number of putative contaminant OTUs when using our strict decontam parameters. We wanted to see how much this would potentially impact our downstream analyses by investigating what fraction of each sample's reads these OTUs account for. The R notebook 02-scripts.backup/099-decontam_OTU_impact_check.Rmd describes how we did this.

We see that although decontam flags a large fraction of all OTUs as contaminants, these make up only a minority of the alignments in the MALT OTU table for well-preserved samples, suggesting that the majority of contaminants are low-abundance taxa. This is shown in Figure R18.
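The check described above boils down to, per sample, the percentage of assigned reads that fall in OTUs on the contaminant list. A minimal sketch, assuming a hypothetical tab-separated OTU count table (header row, OTU IDs in column 1) and a plain-text contaminant list with one OTU ID per line; the file names and `contaminant_read_fraction` are illustrative, not files from this repository.

```bash
# Hypothetical toy inputs: an OTU count table and a contaminant OTU list.
printf 'OTU_ID\tcalculus1\tblank1\nOTU_1\t900\t10\nOTU_2\t95\t5\nOTU_3\t5\t85\n' > toy_otu_counts.tsv
printf 'OTU_3\n' > toy_contaminants.txt

# Per sample: percentage of reads assigned to contaminant OTUs.
# Note: the contaminant list must be non-empty for the NR == FNR idiom to work.
contaminant_read_fraction() {
  local otu_table="$1" contaminants="$2"
  awk -F'\t' '
    NR == FNR { contam[$1] = 1; next }                           # first file: contaminant IDs
    FNR == 1 { for (i = 2; i <= NF; i++) name[i] = $i; n = NF; next }  # table header
    {
      for (i = 2; i <= NF; i++) {
        total[i] += $i
        if ($1 in contam) bad[i] += $i
      }
    }
    END {
      for (i = 2; i <= n; i++) {
        pct = (total[i] > 0) ? 100 * bad[i] / total[i] : 0
        printf "%s\t%.2f\n", name[i], pct
      }
    }
  ' "$contaminants" "$otu_table"
}

# calculus1 -> 0.50, blank1 -> 85.00
contaminant_read_fraction toy_otu_counts.tsv toy_contaminants.txt
```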

Figure R18 | The fraction of MALT alignments derived from putative contaminant OTUs shows only a small effect on well-preserved samples. Individuals are ordered by the percentage of alignments derived from OTUs considered putative contaminants by decontam and removed from downstream analysis. Colour indicates whether the individual was considered to be well-preserved or not, based on the cumulative percent decay curves with the within standard variation burn-in method.

After filtering to retain only well-preserved samples and removing possible contaminants, we could then begin comparing the calculus microbiomes of our different host groups. We wanted to identify taxonomic similarities and differences between the groups to help reconstruct the evolutionary (co-)history of the microbiomes and their hosts.

To explore whether our data contain structure that describes differences between the groups of interest, we performed a Principal Coordinates Analysis (PCoA) to reduce the variation between samples to a small number of interpretable dimensions. An important aspect of this analysis was the use of Compositional Data Analysis (CoDA) principles, here implemented with PhILR, which allow us to apply 'traditional' statistics to taxonomic group comparisons.
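For orientation, the core of PhILR is an isometric log-ratio (ILR) transform defined on a phylogeny: each internal node i of the tree becomes one coordinate (a "balance") contrasting the taxa in its two child clades. The unweighted balance is sketched below, assuming no taxon or branch-length weighting (both of which PhILR can apply). Here R_i and S_i are the sets of tips in the two clades below node i, r_i and s_i their sizes, and g is the geometric mean of the corresponding abundances; zero counts must be replaced (e.g. with a pseudocount) before taking logarithms.

```math
b_i = \sqrt{\frac{r_i \, s_i}{r_i + s_i}} \; \ln \frac{g(\mathbf{x}_{R_i})}{g(\mathbf{x}_{S_i})},
\qquad
g(\mathbf{x}_A) = \Big( \prod_{j \in A} x_j \Big)^{1/|A|}
```

Because the balances are orthonormal coordinates, Euclidean distances between samples in balance space can be used directly for the PCoA.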

The steps for the generation of the PCoA are described in the R notebook 02-scripts.backup/017-PhILR_PCoA_20190527.Rmd. However, the notebook ending