This is a repository for a take-home interview question I had in 2021. I have removed screenshots with the company name, plus resources containing the company name, from this repository. History has also been removed to preserve the company's anonymity. I was given around four hours to complete this assignment. I worked on it all weekend (oops!). That said, they have stopped using this form of take-home test since I started working for them :)

This repository contains a very simple automated AWS service deployed with CodePipeline and Terraform. It contains two parts: a deployment automation and a serverless, RESTful web service.

(screenshot removed)

Structurally, the repository defines the following primary folders:

- /

- config

- terraform

- scripts

- lambda

- `config` contains the build definitions plus all other app configurations.
- `terraform` contains all of the terraform definitions.
- `scripts` contains all standalone logic required for deployment.
- `lambda` contains the logic of the function app.

There are several assumptions within this repository:

- Though cloned on GitHub, this repository exists within my personal AWS environment in CodeCommit to simplify automation for the sake of this activity.

- My AWS account already includes a CodeBuild policy used by automation. This policy includes a combination of other policies which are outlined below.

- I've removed my account numbers from the checked in code and this README.

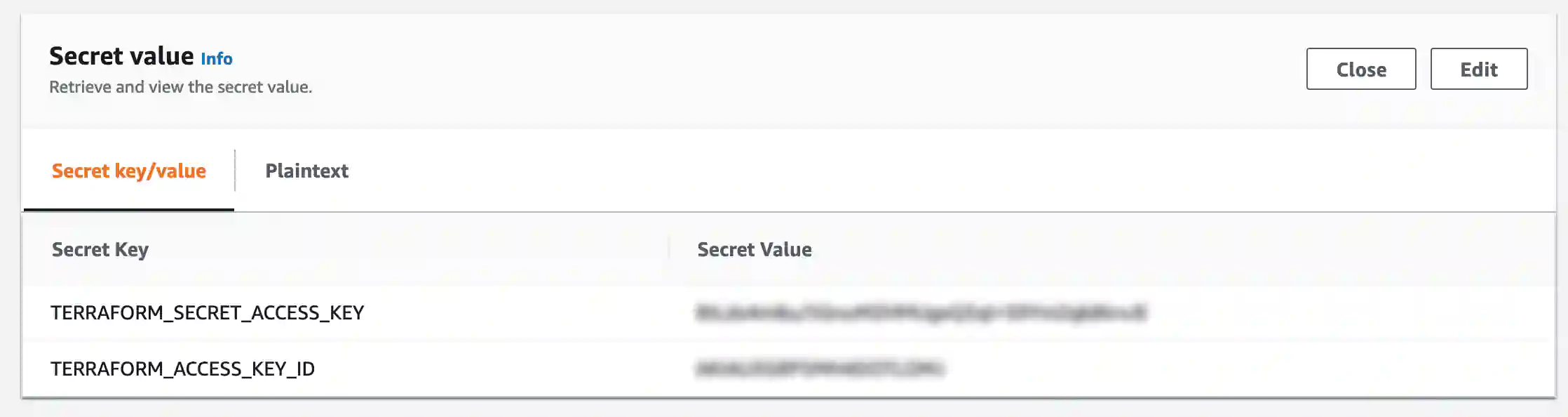

- All secrets are managed with AWS Secrets Manager; these secrets are configured in the `buildspec.yaml` and passed to terraform in the `scripts/terraform` script.
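One way a deployment script could hand secrets to Terraform is through `TF_VAR_`-prefixed environment variables, which Terraform reads as input variables. The sketch below only illustrates that idea. The `terraform_env` helper and the `auth_token` variable name are hypothetical and are not taken from `scripts/terraform`.

```python
import os
from typing import Dict, Mapping, Optional


def terraform_env(
    secrets: Mapping[str, str],
    base: Optional[Mapping[str, str]] = None,
) -> Dict[str, str]:
    """Return a copy of the environment with each secret exposed as TF_VAR_<name>.

    `base` defaults to the current process environment; pass a dict to control it.
    """
    env = dict(os.environ if base is None else base)
    for name, value in secrets.items():
        # Terraform maps TF_VAR_<name> onto the input variable called <name>.
        env[f"TF_VAR_{name}"] = value
    return env
```

The returned dictionary could then be passed as the `env` argument of `subprocess.run` when invoking `terraform`, which keeps the secret values out of the command line.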

I tried to create commits in logical order so that the commit history illustrates my general thought process. This was relevant when I submitted for the job, but the repository has now been flattened.

Here are the steps I followed to set up a code pipeline for automated deployments based on commits to the master branch of a new repository.

- Init an empty git repository and add this README.md.

- Create AWS git repository in CodeCommit: (screenshot removed)

- Add S3 bucket for CodeBuild.

- Create CodeBuild project: (screenshot removed)

- Bootstrap deployment.

At this point in the bootstrap process, there now exists a CodeBuild project with a `buildspec.yaml` that can be triggered manually. It would be nice, though, not to manually trigger the build. To that end, I finalized the automation through the following steps:

- Add Terraform state lock table to terraform resources and configure Terraform with S3 plus state lock table options.

- Add CodePipeline resource to auto-build commits.

At this stage, the pipeline is now 100% automated; infrastructure will deploy on each successful git push.
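As a rough illustration of the state configuration step, the sketch below builds a `terraform init` command for an S3 backend with a DynamoDB lock table, then selects a workspace or creates it if it does not exist yet. It assumes the Terraform code declares a partial `backend "s3"` block. The bucket, key, and table names in the usage note are placeholders, and `dynamodb_table` is the lock setting of the S3 backend as of 2021, so check the backend documentation for the Terraform version in use.

```python
import subprocess
from typing import Callable, List, Sequence

Runner = Callable[[Sequence[str]], int]


def init_command(bucket: str, key: str, region: str, lock_table: str) -> List[str]:
    """Build `terraform init` arguments for an S3 backend with a lock table."""
    settings = {
        "bucket": bucket,
        "key": key,
        "region": region,
        "dynamodb_table": lock_table,
    }
    args = ["terraform", "init", "-input=false"]
    args += [f"-backend-config={name}={value}" for name, value in settings.items()]
    return args


def run(args: Sequence[str]) -> int:
    """Run a command and return its exit code without raising on failure."""
    return subprocess.run(list(args), check=False).returncode


def ensure_workspace(name: str, runner: Runner = run) -> str:
    """Select the workspace if it exists; otherwise create it."""
    if runner(["terraform", "workspace", "select", name]) == 0:
        return "selected"
    if runner(["terraform", "workspace", "new", name]) == 0:
        return "created"
    raise RuntimeError(f"could not select or create workspace {name!r}")
```

A build script might call `run(init_command("my-state-bucket", "fakecompany/terraform.tfstate", "us-east-2", "my-lock-table"))` followed by `ensure_workspace("default")`. Trying `select` before `new` keeps repeated builds from failing once the workspace already exists.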

The basic premise (time permitting) is to have an API endpoint that accepts a JSON payload, places it on a queue, and returns a 202 response. Once on the queue, a separate lambda will pick up the payload and save it to persistent storage, in this case, DynamoDB.
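The accept-and-enqueue half of that premise can be sketched as follows. This is not the repository's lambda: it assumes Python, a Lambda proxy integration with API Gateway, and a hypothetical `QUEUE_URL`. The 400 response for invalid JSON is also an addition for illustration. The SQS call is injected so the handler can be exercised without AWS access.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical queue URL; a real deployment would read this from configuration.
QUEUE_URL = "https://sqs.us-east-2.amazonaws.com/111111111111/fakecompany-data"


def _sqs_send(body: str) -> Dict[str, Any]:
    """Send one message with boto3 (imported lazily so the sketch loads without it)."""
    import boto3

    return boto3.client("sqs").send_message(QueueUrl=QUEUE_URL, MessageBody=body)


def handler(
    event: Dict[str, Any],
    context: Any = None,
    send: Callable[[str], Dict[str, Any]] = _sqs_send,
) -> Dict[str, Any]:
    """Accept a JSON body, enqueue it, and answer 202 in proxy-response format."""
    body = event.get("body") or ""
    try:
        json.loads(body)  # validate only; the raw string is what gets queued
    except ValueError:
        return {"statusCode": 400, "body": json.dumps({"error": "body must be valid JSON"})}

    result = send(body)
    payload = {"MessageId": result.get("MessageId"), "body": body}
    return {
        "statusCode": 202,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }
```

Returning 202 rather than 200 signals that the payload was accepted for processing but has not been persisted yet, which matches the queue-then-save design.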

Here are the steps, in order, I followed to establish the endpoints and functional aspects of this service:

- Create the

fakecompany-queue-dataandfakecompany-persist-datalambda stubs. (screenshot removed) - Create the queue to be fed from

queue-dataand read bypersist-dataplus a deadletter queue. (screenshot removed) - Set SQS permissions for both labmdas with policies. (screenshot removed)

- Finalize the

fakecompany-queue-datalambda, which will post an event to thefakecompany-dataqueue, returning a payload with the event and posted queue properties. - Add an API Gateway integration to the

fakecompany-queue-datalambda. - Adjust the

fakecompany-queue-datalambda to account for the format required by the API Gateway. - Finalize the

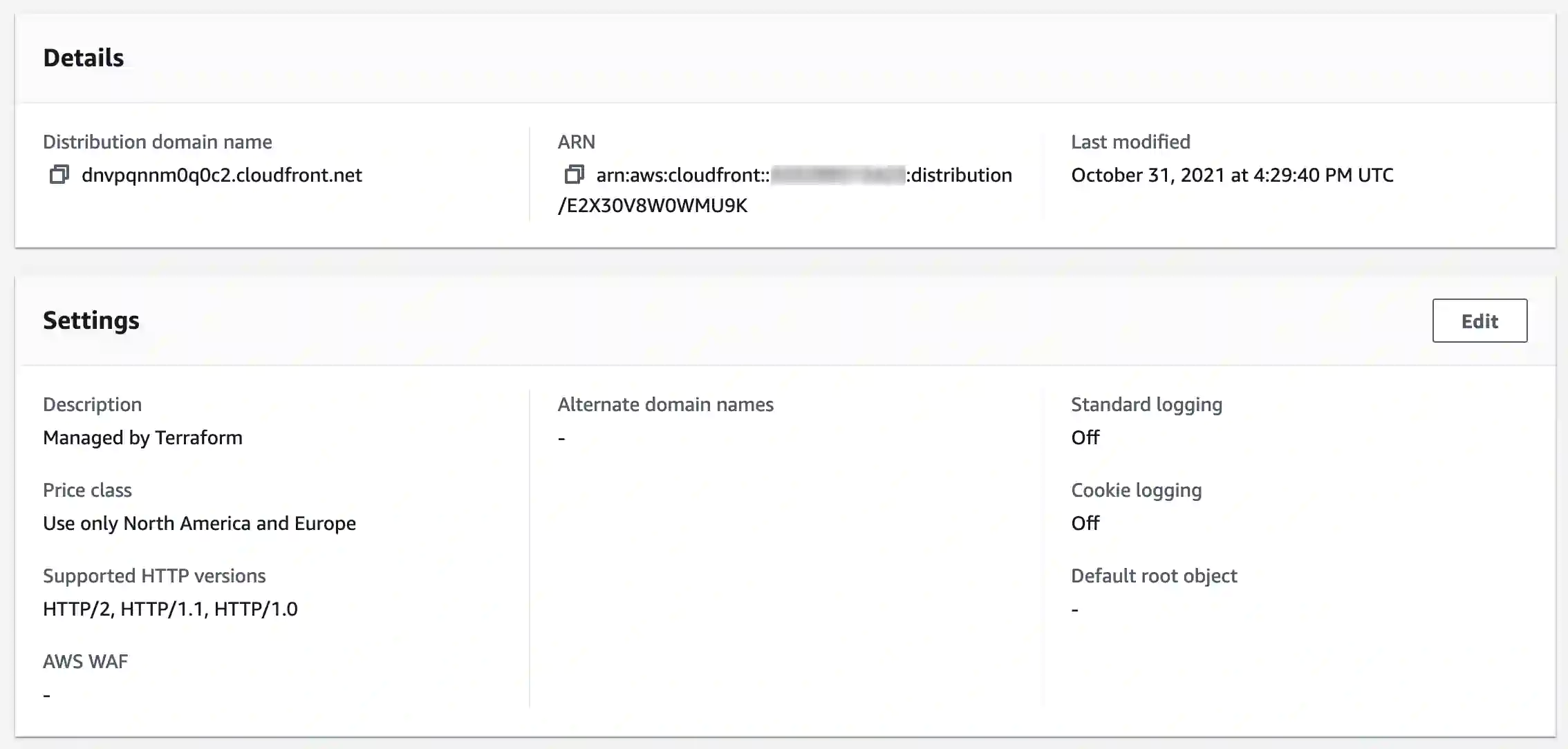

fakecompany-persist-datalambda, which will listen to thefakecompany-dataqueue and save it to persistent storage. - Setup a CloudFront distribution in front of the API Gateway.

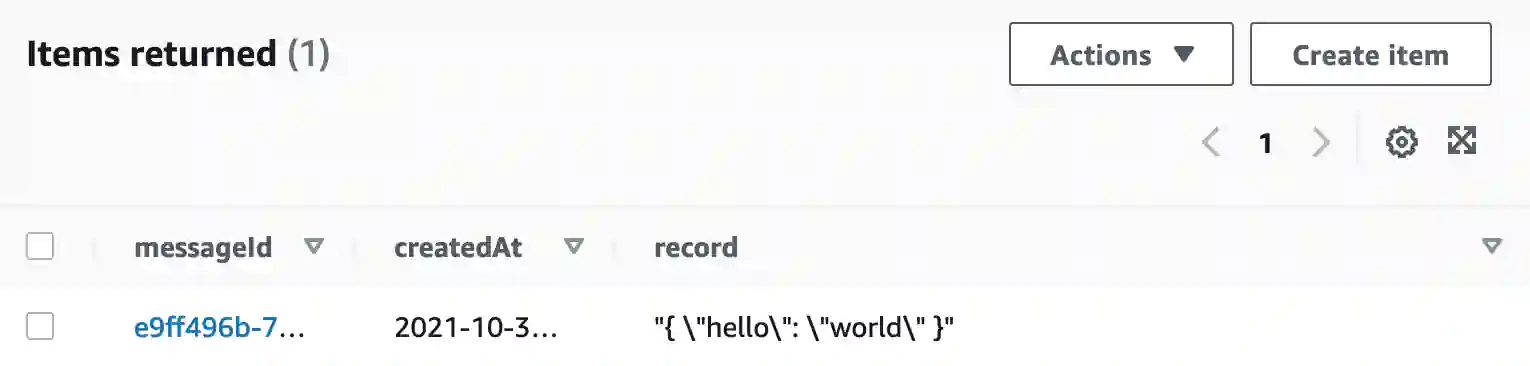
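The persist step from the list above can be sketched like this. It assumes Python and the standard SQS-to-Lambda event shape (`Records` entries carrying `messageId` and `body`). The `id` and `payload` attribute names are hypothetical, since the table's key schema is not shown in this README.

```python
from typing import Any, Callable, Dict

TABLE_NAME = "fakecompany"  # matches the table ARN in the DynamoDB policy in this README


def _put_item(item: Dict[str, Any]) -> None:
    """Write one item with boto3 (imported lazily so the sketch loads without it)."""
    import boto3

    boto3.resource("dynamodb").Table(TABLE_NAME).put_item(Item=item)


def handler(
    event: Dict[str, Any],
    context: Any = None,
    put: Callable[[Dict[str, Any]], None] = _put_item,
) -> int:
    """Persist every SQS record in the batch and return how many were saved."""
    saved = 0
    for record in event.get("Records", []):
        item = {
            "id": record["messageId"],  # hypothetical partition key
            "payload": record["body"],  # stored as the raw JSON string
        }
        put(item)
        saved += 1
    return saved
```

If `put` raises, the invocation fails and the message stays on the queue to be retried. Depending on the queue's redrive settings, repeated failures would move it to the dead-letter queue.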

At this stage, everything is established. We can now post to the CloudFront-fronted API:

    $ curl -X POST \
        -H "Content-Type: application/json" \
        -H "X-Auth-Token: my-very-secret-token" \
        -d '{ "hello": "world" }' \
        https://dnvpqnnm0q0c2.cloudfront.net/api/save | jq

This will save the `{ hello: "world" }` object to Dynamo:

Sample 202 response:

    {
      "ResponseMetadata": {
        "RequestId": "acdeb16f-4557-5fc6-935d-e897a387221e"
      },
      "MD5OfMessageBody": "07d031ca09ecdd2db304fe072e9bafb5",
      "MessageId": "8e017ab2-804d-4aee-9308-335ae97b8360",
      "body": "{ \"hello\": \"world\" }"
    }

Sample 401 response:

    $ curl -X POST \
        -H "Content-Type: application/json" \
        -H "X-Auth-Token: my-very-broken-token" \
        -d '{ "hello": "world" }' \
        https://dnvpqnnm0q0c2.cloudfront.net/api/save | jq
    {
      "errorType": "Unauthorized",
      "httpStatus": 401,
      "requestId": "a20ddca7-7636-437a-9622-4ae7e314939e"
    }

(This section has been removed from this repository as it contained interview-specific questions about the company in question).
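For the 401 case shown in the sample responses, a token check could look roughly like the sketch below. Where the real check runs (the lambda, an authorizer, or elsewhere) is not stated in this README, so the `token_is_valid` and `unauthorized` helpers are hypothetical. The error shape copies the fields of the sample 401 response.

```python
import hmac
from typing import Any, Dict, Mapping, Optional


def token_is_valid(headers: Optional[Mapping[str, str]], expected: str) -> bool:
    """Compare the X-Auth-Token header against the expected secret."""
    supplied = ""
    for name, value in (headers or {}).items():
        # HTTP header names are case-insensitive.
        if name.lower() == "x-auth-token":
            supplied = value or ""
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(supplied.encode(), expected.encode())


def unauthorized(request_id: str) -> Dict[str, Any]:
    """Build an error payload with the same fields as the sample 401 response."""
    return {"errorType": "Unauthorized", "httpStatus": 401, "requestId": request_id}
```

A handler would call `token_is_valid(event.get("headers"), secret)` before doing any work and return the `unauthorized(...)` payload with a 401 status when the check fails.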

The persist lambda has access to the `fakecompany-data` queue (and its DLQ) to send, receive, and delete messages, while the queue lambda only has access to send messages.

For the persist lambda:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "sqs:SendMessage",
            "sqs:ReceiveMessage",
            "sqs:GetQueueAttributes",
            "sqs:DeleteMessage"
          ],
          "Resource": [
            "arn:aws:sqs:us-east-2:111111111111:fakecompany-data-dlq",
            "arn:aws:sqs:us-east-2:111111111111:fakecompany-data"
          ]
        }
      ]
    }

For the queue lambda:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "sqs:SendMessage",
          "Resource": "arn:aws:sqs:us-east-2:111111111111:fakecompany-data"
        }
      ]
    }

Both lambdas have access to CloudWatch:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "logs:PutLogEvents",
            "logs:CreateLogStream"
          ],
          "Resource": "arn:aws:logs:*:*:*"
        }
      ]
    }

Only the persist lambda has access to write to Dynamo:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "dynamodb:PutItem",
          "Resource": "arn:aws:dynamodb:us-east-2:111111111111:table/fakecompany"
        }
      ]
    }

The following policies allow CodeBuild to access CloudWatch and S3 for logging and artifacts:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Resource": [
            "arn:aws:logs:us-east-2:111111111111:log-group:/aws/codebuild/fakecompany",
            "arn:aws:logs:us-east-2:111111111111:log-group:/aws/codebuild/fakecompany:*"
          ],
          "Action": [
            "logs:CreateLogGroup",
            "logs:CreateLogStream",
            "logs:PutLogEvents"
          ]
        },
        {
          "Effect": "Allow",
          "Resource": [
            "arn:aws:s3:::codepipeline-us-east-2-*"
          ],
          "Action": [
            "s3:PutObject",
            "s3:GetObject",
            "s3:GetObjectVersion",
            "s3:GetBucketAcl",
            "s3:GetBucketLocation"
          ]
        },
        {
          "Effect": "Allow",
          "Resource": [
            "arn:aws:codecommit:us-east-2:111111111111:fakecompany"
          ],
          "Action": [
            "codecommit:GitPull"
          ]
        },
        {
          "Effect": "Allow",
          "Resource": [
            "arn:aws:s3:::fakecompany-test-project",
            "arn:aws:s3:::fakecompany-test-project/*"
          ],
          "Action": [
            "s3:PutObject",
            "s3:GetBucketAcl",
            "s3:GetBucketLocation"
          ]
        },
        {
          "Effect": "Allow",
          "Action": [
            "codebuild:CreateReportGroup",
            "codebuild:CreateReport",
            "codebuild:UpdateReport",
            "codebuild:BatchPutTestCases",
            "codebuild:BatchPutCodeCoverages"
          ],
          "Resource": [
            "arn:aws:codebuild:us-east-2:111111111111:report-group/fakecompany-*"
          ]
        }
      ]
    }

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Resource": [
            "arn:aws:logs:us-east-2:111111111111:log-group:/aws/CodeBuild/fakecompany",
            "arn:aws:logs:us-east-2:111111111111:log-group:/aws/CodeBuild/fakecompany:*"
          ],
          "Action": [
            "logs:CreateLogGroup",
            "logs:CreateLogStream",
            "logs:PutLogEvents"
          ]
        }
      ]
    }

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "FakeCompanyCodePipelinePolicy",
          "Effect": "Allow",
          "Principal": {
            "Service": "events.amazonaws.com"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }

- Chose the wrong CodeBuild environment initially, selecting `ARM` instead of `x86`; this broke the terraform installation via `yum`.
- Made the mistake of not properly bootstrapping the terraform state lock table and had to import it for a single build.
- Did not gracefully handle workspace existence in the `terraform` script once state management was enabled.
- Early on, for the sake of time, I didn't add tests for the lambdas. As time went on and the project grew, I ended up regretting this decision.
- A typo on a policy (`dynamo:PutItem` vs `dynamodb:PutItem`) caused me more grief than it should have.
- I returned the wrong error status code (403 vs 401) on the POST endpoint and didn't notice until early 2021/11/03. Fixed.

- Spent more time than I should have on this project, but it was fun :)
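A policy typo such as `dynamo:PutItem` is the kind of mistake a small pre-deploy check could catch. The sketch below is a hypothetical helper, not part of this repository. It only compares each action's service prefix against a hand-maintained set and does not validate the action names themselves.

```python
from typing import Any, Dict, Iterable, List

# Service prefixes used by the policies in this README; extend as needed.
KNOWN_PREFIXES = frozenset(
    {"sqs", "logs", "dynamodb", "s3", "codecommit", "codebuild", "sts"}
)


def _as_list(value: Any) -> List[Any]:
    """IAM allows a single value or a list; normalize both to a list."""
    if value is None:
        return []
    if isinstance(value, (str, dict)):
        return [value]
    return list(value)


def unknown_actions(
    policy: Dict[str, Any],
    known: Iterable[str] = KNOWN_PREFIXES,
) -> List[str]:
    """Return every action whose service prefix is not in `known`."""
    known_set = {prefix.lower() for prefix in known}
    flagged = []
    for statement in _as_list(policy.get("Statement")):
        for action in _as_list(statement.get("Action")):
            prefix = action.split(":", 1)[0].lower()
            if action != "*" and prefix not in known_set:
                flagged.append(action)
    return flagged
```

Running `unknown_actions` over each policy document before `terraform apply` and failing the build on a non-empty result would surface a misspelled service prefix before it reaches IAM.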

- Establish proper authentication/authorization at the API level. It would be interesting to create a Cognito (or other) service to validate requests and assert an authorization token.

- Play around with different AWS storage options, such as Aurora or RDS.

- Add tests for the Lambdas.

- Really finalize the network aspect, with everything properly isolated across VPCs. Additionally, I included everything in the same AZ; in a real production environment, there'd be geographic replication of critical infrastructure which isn't present in this solution. I'm disappointed I didn't get to this.

Thank you so much for allowing me to participate. I sincerely appreciate it!

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.