# Reconstructing images/movies from their features in arbitrary CNN models written in PyTorch

These images are reconstructed from the activations (features) of layers in VGG16 trained on the ImageNet dataset. You can also reconstruct arbitrary images/videos from the features of other architectures written in PyTorch, such as AlexNet, ResNet, and DenseNet.
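Because the target network is trained on ImageNet, the reconstruction is optimized in the network's normalized input space and must be mapped back to pixels before saving. The sketch below assumes torchvision's standard ImageNet mean/std normalization; the repository may use a different convention (for example, the Caffe-style mean subtraction of the original iCNN), so treat the constants as an assumption.

```python
import numpy as np

# Assumed: torchvision's ImageNet channel statistics (RGB order).
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def image_to_input(img):
    """Convert an HxWx3 uint8 RGB image to a 3xHxW float32 array in normalized space."""
    x = img.astype(np.float32) / 255.0
    x = (x - IMAGENET_MEAN) / IMAGENET_STD
    return x.transpose(2, 0, 1)  # channels first, as PyTorch models expect


def input_to_image(x):
    """Map a 3xHxW normalized array (e.g. a reconstruction) back to an HxWx3 uint8 image."""
    img = x.transpose(1, 2, 0) * IMAGENET_STD + IMAGENET_MEAN
    img = np.clip(img * 255.0, 0, 255)  # optimized images can leave the valid pixel range
    return np.round(img).astype(np.uint8)
```

Clipping only happens on the way out, so the optimizer is free to explore values outside the pixel range while it runs.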

The basic idea of the algorithm (Mahendran A and Vedaldi A (2015). Understanding deep image representations by inverting them. https://arxiv.org/abs/1412.0035) is to reconstruct an image whose CNN features are close to those of the target image. The reconstruction is solved with a gradient-based optimization algorithm: the optimization starts from a random initial image, feeds it into the CNN model, computes the error in the CNN's feature space, back-propagates the error to the input image, and then updates the image.
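The loop described above can be sketched in a few lines of PyTorch. This is a minimal illustration, not the repository's implementation: a tiny randomly initialized CNN stands in for a pretrained network (so nothing is downloaded), the error is a plain sum of squared differences, and Adam is used as one possible gradient-based optimizer; the repository may use a different optimizer, loss, or image prior.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for a pretrained CNN such as VGG16 (no weights are downloaded).
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 16, kernel_size=3, padding=1),
).eval()
for p in model.parameters():
    p.requires_grad_(False)  # only the image is optimized, never the network weights


def reconstruct(model, target_features, image_shape, n_iter=200, lr=0.05):
    """Optimize an image so that model(image) approaches target_features."""
    x = torch.randn(image_shape, requires_grad=True)  # random initial image
    optimizer = torch.optim.Adam([x], lr=lr)
    losses = []
    for _ in range(n_iter):
        optimizer.zero_grad()
        features = model(x)  # input the current image to the CNN
        loss = ((features - target_features) ** 2).sum()  # error in feature space
        loss.backward()  # back-propagate the error to the image
        optimizer.step()  # update the image
        losses.append(loss.item())
    return x.detach(), losses


target_image = torch.rand(1, 3, 16, 16)
with torch.no_grad():
    target_features = model(target_image)
recon, losses = reconstruct(model, target_features, target_image.shape)
print(f"feature loss: {losses[0]:.1f} -> {losses[-1]:.3f}")
```

Freezing the weights keeps the network fixed, so every gradient step changes only the pixels of `x`.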

Requirements:

- Python 3.6 or later

- NumPy 1.19.0

- SciPy 1.5.1

- PIL (Pillow) 7.2.0

- PyTorch 1.5.1

- Torchvision 0.6.1

- OpenCV 4.3.0 (not needed if you only want to generate images)

An example of reconstructing an image is provided in `example/icnn_shortest_demo.ipynb`.

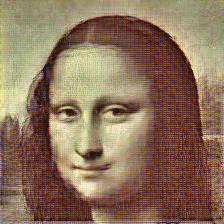

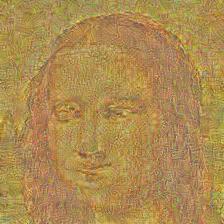

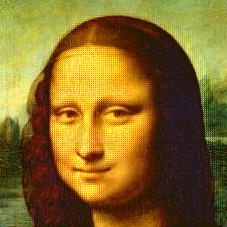

Here are example results from various networks.
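Each result is labelled with the layer it was reconstructed from, written as an attribute path such as `layer1[0].conv2` or `features.denseblock1.denselayer1.conv1`. The sketch below shows one way such a name could be resolved to a submodule and its activation captured with a forward hook. `get_layer`, `extract_features`, and `ToyNet` are hypothetical names for illustration; the repository's own mechanism may differ.

```python
import re

import torch
import torch.nn as nn


def get_layer(model, name):
    """Resolve a name like 'layer1[0].conv2' or 'features[12]' to a submodule."""
    module = model
    for part in name.split("."):
        # Split e.g. 'layer1[0]' into the attribute 'layer1' and the index list '[0]'.
        m = re.fullmatch(r"(\w+)((?:\[\d+\])*)", part)
        if m is None:
            raise ValueError(f"cannot parse layer name part: {part!r}")
        module = getattr(module, m.group(1))
        for idx in re.findall(r"\[(\d+)\]", m.group(2)):
            module = module[int(idx)]
    return module


def extract_features(model, x, layer_names):
    """Run model on x and return {layer_name: activation} captured with forward hooks."""
    features = {}
    handles = []
    for name in layer_names:
        def hook(module, inputs, output, name=name):
            features[name] = output
        handles.append(get_layer(model, name).register_forward_hook(hook))
    try:
        model(x)
    finally:
        for h in handles:
            h.remove()  # leave the model unchanged after extraction
    return features


# Hypothetical toy model whose attribute names mimic ResNet's naming scheme.
class Block(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(4, 4, 3, padding=1)
        self.conv2 = nn.Conv2d(4, 4, 3, padding=1)

    def forward(self, x):
        return self.conv2(torch.relu(self.conv1(x)))


class ToyNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 4, 3, padding=1)
        self.layer1 = nn.Sequential(Block(), Block())

    def forward(self, x):
        return self.layer1(self.conv1(x))


net = ToyNet().eval()
feats = extract_features(net, torch.rand(1, 3, 8, 8), ["conv1", "layer1[0].conv2"])
print({k: tuple(v.shape) for k, v in feats.items()})
```

The captured activations keep their autograd graph, so the same hooks can feed a feature-space loss during reconstruction.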

##### ResNet

| Original image |  |  |
|---|---|---|
| Reconstructed from conv1 |  |  |
| Reconstructed from layer1[0].conv2 | ![recon-image_1-layer1[0].conv2](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/result_resnet/image_1/recon-image_1-layer1%5B0%5D.conv2.jpg) | ![recon-image_2-layer1[0].conv2](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/result_resnet/image_2/recon-image_2-layer1%5B0%5D.conv2.jpg) |
| Reconstructed from layer3[1].conv1 | ![recon-image_1-layer3[1].conv1](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/result_resnet/image_1/recon-image_1-layer3%5B1%5D.conv1.jpg) | ![recon-image_2-layer3[1].conv1](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/result_resnet/image_2/recon-image_2-layer3%5B1%5D.conv1.jpg) |

##### DenseNet

| Original image |  |  |
|---|---|---|
| Reconstructed from features.denseblock1.denselayer1.conv1 |  |  |
| Reconstructed from features.denseblock1.denselayer4.conv1 |  |  |
| Reconstructed from features.denseblock3.denselayer24.conv1 |  |  |

The code can also optimize the image against the outputs of multiple layers at once.
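Matching several layers simply means summing a feature-space error over all of them. The sketch below illustrates this with a hypothetical three-stage toy network and matches its first two stages, analogous to "first 2 layers" in the table. Normalizing each layer's error by the energy of its target features and weighting the layers equally are assumptions made here so that layers with large activations do not dominate; the repository's weighting may differ.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical three-stage network; each stage output is one "layer" that can be matched.
stages = nn.ModuleList([
    nn.Sequential(nn.Conv2d(3, 8, 3, padding=1), nn.ReLU()),
    nn.Sequential(nn.Conv2d(8, 8, 3, padding=1), nn.ReLU()),
    nn.Sequential(nn.Conv2d(8, 8, 3, padding=1), nn.ReLU()),
])
for p in stages.parameters():
    p.requires_grad_(False)  # the network is fixed; only the image is optimized


def all_features(x):
    """Return the output of every stage, from shallow to deep."""
    outs = []
    for stage in stages:
        x = stage(x)
        outs.append(x)
    return outs


def multi_layer_loss(feats, targets, weights, eps=1e-8):
    """Weighted sum of per-layer squared errors, each normalized by its target's energy."""
    loss = 0.0
    for f, t, w in zip(feats, targets, weights):
        loss = loss + w * ((f - t) ** 2).sum() / ((t ** 2).sum() + eps)
    return loss


n_layers = 2  # match the first two layers
target_image = torch.rand(1, 3, 16, 16)
with torch.no_grad():
    targets = all_features(target_image)[:n_layers]

x = torch.randn(1, 3, 16, 16, requires_grad=True)  # random initial image
optimizer = torch.optim.Adam([x], lr=0.05)
losses = []
for _ in range(200):
    optimizer.zero_grad()
    loss = multi_layer_loss(all_features(x)[:n_layers], targets, weights=[1.0] * n_layers)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())
```

Changing `n_layers` (or the `weights` list) selects which layers constrain the reconstruction.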

| Original image |  |  |
|---|---|---|
| Reconstructed from first 2 layers |  |  |
| Reconstructed from first 3 layers |  |  |
| Reconstructed from 5 layers |  |  |

- Version 0.5: released on 2020/07/13

- Version 0.8: updated to show various DNN models on 2020/07/14

The code in this repository is based on Inverting CNN (iCNN): image reconstruction from CNN features (https://github.com/KamitaniLab/icnn), which is written for Caffe and Python 2. These scripts are released under the MIT license.

Ken Shirakawa

Ph.D. student at Kamitani lab, Kyoto University (http://kamitani-lab.ist.i.kyoto-u.ac.jp)

##### VGG16

| Layer | image_1 | image_2 |
|---|---|---|
| Reconstructed from features[0] | ![recon-image_1-features[0]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_1/recon-image_1-features%5B0%5D.jpg) | ![recon-image_2-features[0]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_2/recon-image_2-features%5B0%5D.jpg) |
| Reconstructed from features[2] | ![recon-image_1-features[2]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_1/recon-image_1-features%5B2%5D.jpg) | ![recon-image_2-features[2]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_2/recon-image_2-features%5B2%5D.jpg) |
| Reconstructed from features[10] | ![recon-image_1-features[10]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_1/recon-image_1-features%5B10%5D.jpg) | ![recon-image_2-features[10]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_2/recon-image_2-features%5B10%5D.jpg) |
| Reconstructed from features[12] | ![recon-image_1-features[12]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_1/recon-image_1-features%5B12%5D.jpg) | ![recon-image_2-features[12]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_2/recon-image_2-features%5B12%5D.jpg) |
| Reconstructed from features[21] | ![recon-image_1-features[21]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_1/recon-image_1-features%5B21%5D.jpg) | ![recon-image_2-features[21]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_2/recon-image_2-features%5B21%5D.jpg) |
| Reconstructed from classifier[0] | ![recon-image_1-classifier[0]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_1/recon-image_1-classifier%5B0%5D.jpg) | ![recon-image_2-classifier[0]](https://raw.githubusercontent.com/jhkim0911/pytorch_iCNN/master/image_gallery/image_2/recon-image_2-classifier%5B0%5D.jpg) |