A flexible, high-performance 3D simulator with configurable agents, multiple sensors, and generic 3D dataset handling (with built-in support for Matterport3D, Gibson, Replica, and other datasets). When rendering a scene from the Matterport3D dataset, Habitat-Sim achieves several thousand frames per second (FPS) running single-threaded, and reaches over 10,000 FPS multi-process on a single GPU!

- Updates

- Motivation

- Citing Habitat

- Details

- Performance

- Quick installation

- Testing

- Developer installation and getting started

- Datasets

- Examples

- Common issues

- Acknowledgments

- License

- References

- Urgent Update (4/2/19): There was a bug in the code used to generate the semantic meshes in the Habitat version of MP3D. If your download of this dataset does not include a README stating that this was fixed, please redownload it using the `download_mp.py` script.

AI Habitat enables training of embodied AI agents (virtual robots) in a highly photorealistic & efficient 3D simulator, before transferring the learned skills to reality. This empowers a paradigm shift from 'internet AI' based on static datasets (e.g. ImageNet, COCO, VQA) to embodied AI where agents act within realistic environments, bringing to the fore active perception, long-term planning, learning from interaction, and holding a dialog grounded in an environment.

If you use the Habitat platform in your research, please cite the following technical report:

@article{habitat19arxiv,

title = {Habitat: A Platform for Embodied AI Research},

author = {Manolis Savva and Abhishek Kadian and Oleksandr Maksymets and Yili Zhao and Erik Wijmans and Bhavana Jain and Julian Straub and Jia Liu and Vladlen Koltun and Jitendra Malik and Devi Parikh and Dhruv Batra},

journal = {arXiv preprint arXiv:1904.01201},

year = {2019}

}

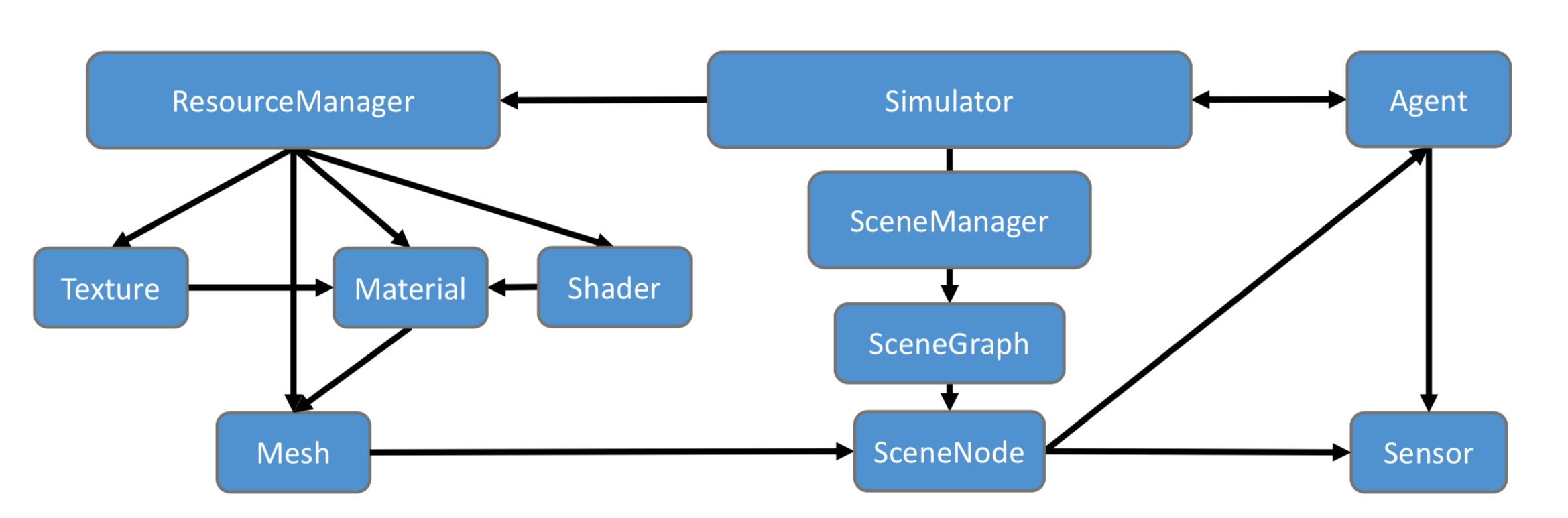

The Habitat-Sim backend module is implemented in C++ and leverages the Magnum graphics middleware library to support cross-platform deployment on a broad variety of hardware configurations. The architecture of the main abstraction classes is shown below. The design of this module ensures a few key properties:

- Memory-efficient management of 3D environment resources (triangle mesh geometry, textures, shaders) ensuring shared resources are cached and re-used

- Flexible, structured representation of 3D environments using SceneGraphs, allowing for programmatic manipulation of object state, and combination of objects from different environments

- High-efficiency rendering engine with multi-attachment render passes for reduced overhead when multiple sensors are active

- Arbitrary numbers of Agents and corresponding Sensors that can be linked to a 3D environment by attachment to a SceneGraph.

Architecture of Habitat-Sim main classes

The Simulator delegates management of all resources related to 3D environments to a ResourceManager that is responsible for loading and caching 3D environment data from a variety of on-disk formats. These resources are used within SceneGraphs at the level of individual SceneNodes that represent distinct objects or regions in a particular Scene. Agents and their Sensors are instantiated by being attached to SceneNodes in a particular SceneGraph.
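The relationship described above can be illustrated with a toy Python sketch. Every class here is a hypothetical stand-in written for this illustration; it is not the C++ implementation and not the Habitat-Sim Python API. It only shows the two ideas from the text: a resource manager that loads each asset once and re-uses it, and agents or sensors that become part of a scene by attachment to a scene node.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SceneNode:
    """One node of a toy scene graph; may hold a mesh and attached sensors."""
    name: str
    mesh: Optional[object] = None
    children: List["SceneNode"] = field(default_factory=list)
    attached: List[str] = field(default_factory=list)

    def add_child(self, name: str, mesh: Optional[object] = None) -> "SceneNode":
        child = SceneNode(name, mesh)
        self.children.append(child)
        return child


class ResourceManager:
    """Loads each asset at most once and hands out the cached object."""

    def __init__(self) -> None:
        self._cache: Dict[str, object] = {}
        self.load_count = 0

    def load_mesh(self, path: str) -> object:
        if path not in self._cache:
            # Placeholder for parsing a real on-disk format.
            self._cache[path] = {"source": path}
            self.load_count += 1
        return self._cache[path]


class Simulator:
    """Delegates asset loading to the ResourceManager and owns the scene graph."""

    def __init__(self) -> None:
        self.resources = ResourceManager()
        self.scene_graph = SceneNode("root")

    def add_object(self, name: str, path: str) -> SceneNode:
        return self.scene_graph.add_child(name, self.resources.load_mesh(path))


sim = Simulator()
chair_a = sim.add_object("chair_a", "chair.glb")  # hypothetical asset path
chair_b = sim.add_object("chair_b", "chair.glb")  # second instance re-uses the cache
agent_node = sim.scene_graph.add_child("agent")
agent_node.attached.append("rgb_sensor")          # sensor linked by attachment
print("meshes loaded from disk:", sim.resources.load_count)
```

Both chair nodes share one cached mesh object, which is the memory-saving behavior the design aims for.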

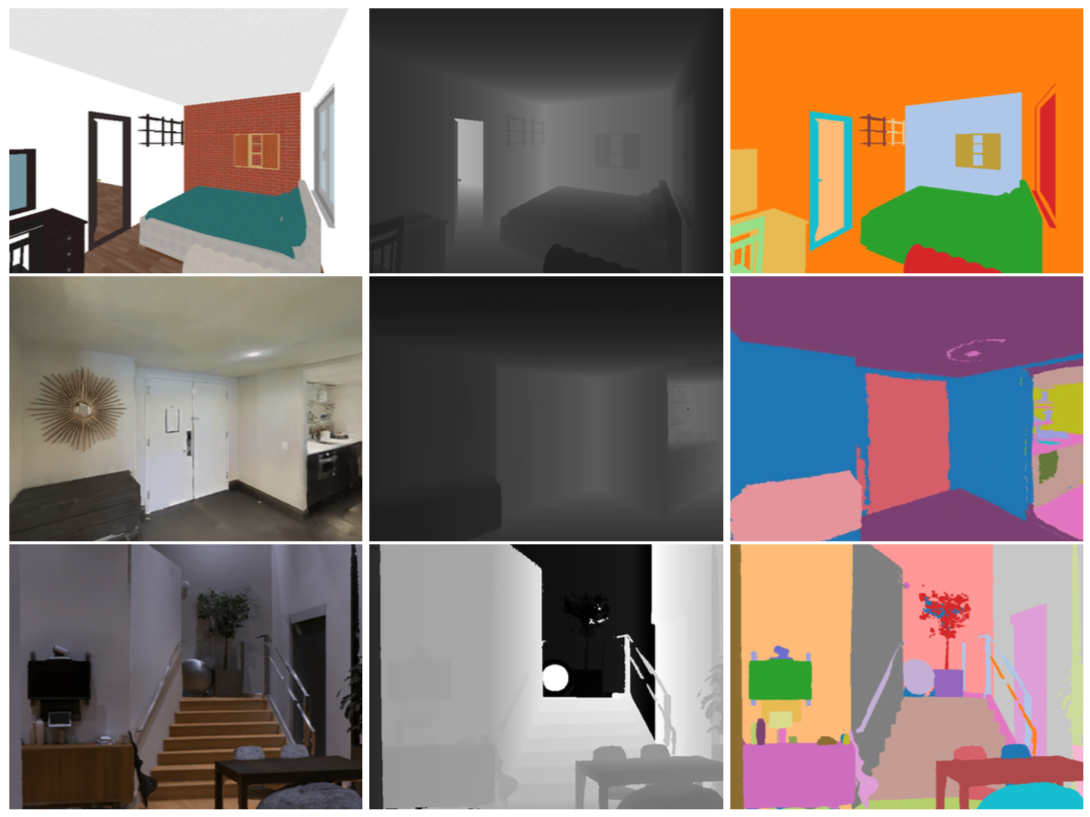

Example rendered sensor observations

The table below reports performance statistics for a test scene from the Matterport3D dataset (id 17DRP5sb8fy) on a Xeon E5-2690 v4 CPU and Nvidia Titan Xp. Single-thread performance reaches several thousand frames per second, while multi-process operation with several independent simulation backends can reach more than 10,000 frames per second on a single GPU!

| Sensors / Resolution | 1 proc, 128 | 1 proc, 256 | 1 proc, 512 | 3 procs, 128 | 3 procs, 256 | 3 procs, 512 | 5 procs, 128 | 5 procs, 256 | 5 procs, 512 |
|---|---|---|---|---|---|---|---|---|---|
| RGB | 4093 | 1987 | 848 | 10638 | 3428 | 2068 | 10592 | 3574 | 2629 |
| RGB + depth | 2050 | 1042 | 423 | 5024 | 1715 | 1042 | 5223 | 1774 | 1348 |
| RGB + depth + semantics* | 439 | 346 | 185 | 502 | 385 | 336 | 500 | 390 | 367 |

Previous simulation platforms that have operated on similar datasets typically produce on the order of a couple hundred frames per second. For example, Gibson reports up to about 150 FPS with 8 processes, and MINOS reports up to about 167 FPS with 4 threads.

*Note: The semantic sensor in MP3D houses currently requires the use of additional house 3D meshes with orders of magnitude more geometric complexity, which reduces performance. We expect this to be addressed in future versions, leading to speeds comparable to RGB + depth; stay tuned.

To run the above benchmarks on your machine, see instructions in the examples section.
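As a quick way to read the table, the following sketch computes the multi-process speedup over a single process for the RGB row. The FPS values are copied from the table above; the dictionary layout and the `speedup` helper are only illustrative.

```python
# FPS values copied from the RGB row of the table (resolutions 128, 256, 512).
rgb_fps = {
    1: {128: 4093, 256: 1987, 512: 848},
    3: {128: 10638, 256: 3428, 512: 2068},
    5: {128: 10592, 256: 3574, 512: 2629},
}


def speedup(procs, resolution):
    """Ratio of multi-process FPS to single-process FPS at one resolution."""
    return rgb_fps[procs][resolution] / rgb_fps[1][resolution]


for procs in (3, 5):
    for resolution in (128, 256, 512):
        print(f"{procs} procs @ {resolution}: {speedup(procs, resolution):.2f}x")
```

In these numbers, going from 3 to 5 processes gives no further gain at resolution 128 (10638 vs. 10592 FPS) but still helps at 512 (2068 vs. 2629 FPS).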

- Clone the repo.

- Install numpy in your python env of choice (e.g., `pip install numpy` or `conda install numpy`).

- Install Habitat-Sim via `python setup.py install` in your python env of choice (note: python 3 is required). Use `python setup.py install --headless` for headless systems (i.e. without an attached display) or if you need multi-gpu support.

Note: the build requires `cmake` version 3.10 or newer. You can install cmake through `conda install cmake` or download it directly from https://cmake.org/download/
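The prerequisites above (python 3 and cmake 3.10 or newer) can be checked before starting a build. The following is a minimal sketch, not part of Habitat-Sim; the helper names are hypothetical, and it assumes `cmake --version` prints a line of the form `cmake version X.Y.Z`.

```python
import re
import shutil
import subprocess
import sys

MIN_CMAKE = (3, 10)  # minimum version stated in the note above


def parse_cmake_version(text):
    """Extract (major, minor) from the output of `cmake --version`."""
    match = re.search(r"cmake version (\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no cmake version found in: {text!r}")
    return int(match.group(1)), int(match.group(2))


def check_prerequisites():
    """Return a list of human-readable problems; empty means all checks passed."""
    problems = []
    if sys.version_info[0] < 3:
        problems.append("python 3 is required")
    cmake = shutil.which("cmake")
    if cmake is None:
        problems.append("cmake not found on PATH")
        return problems
    try:
        result = subprocess.run([cmake, "--version"], capture_output=True, text=True)
        version = parse_cmake_version(result.stdout)
    except (OSError, ValueError) as error:
        problems.append(f"could not determine cmake version: {error}")
        return problems
    if version < MIN_CMAKE:
        problems.append(f"cmake {version[0]}.{version[1]} is older than 3.10")
    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print("problem:", problem)
```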

- Download the test scenes from this link and extract locally.

- Interactive testing: Use the interactive viewer included with Habitat-Sim:

  `build/viewer /path/to/data/scene_datasets/habitat-test-scenes/skokloster-castle.glb`

  You should be able to control an agent in this test scene. Use W/A/S/D keys to move forward/left/backward/right and arrow keys to control gaze direction (look up/down/left/right). Try to find the picture of a woman surrounded by a wreath. Have fun!

- Non-interactive testing: Run the example script:

  `python examples/example.py --scene /path/to/data/scene_datasets/habitat-test-scenes/skokloster-castle.glb`

  The agent will traverse a particular path and you should see the performance stats at the very end, something like this: `640 x 480, total time: 3.208 sec. FPS: 311.7`. Note that the test scenes do not provide semantic meshes. If you would like to test the semantic sensors via `example.py`, please use the data from the Matterport3D dataset (see Datasets).
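If you want to collect the reported stats across several runs, the summary line can be parsed with a regular expression. This sketch assumes the line has exactly the shape of the sample shown above; the pattern and the `parse_stats` helper are illustrative and may need adjusting if the script's output differs.

```python
import re

# Pattern modeled on the sample line "640 x 480, total time: 3.208 sec. FPS: 311.7".
STATS_PATTERN = re.compile(
    r"(?P<width>\d+) x (?P<height>\d+), "
    r"total time: (?P<seconds>\d+(?:\.\d+)?) sec\. "
    r"FPS: (?P<fps>\d+(?:\.\d+)?)"
)


def parse_stats(line):
    """Return resolution, total time, and FPS from one summary line."""
    match = STATS_PATTERN.search(line)
    if match is None:
        raise ValueError(f"unrecognized stats line: {line!r}")
    return {
        "width": int(match.group("width")),
        "height": int(match.group("height")),
        "seconds": float(match.group("seconds")),
        "fps": float(match.group("fps")),
    }


stats = parse_stats("640 x 480, total time: 3.208 sec. FPS: 311.7")
# FPS times elapsed time recovers the approximate number of rendered frames.
frames = round(stats["seconds"] * stats["fps"])
print(stats, "frames:", frames)
```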

We also provide a docker setup for habitat-stack, refer to habitat-docker-setup.

- Clone the repo.

- Install numpy in your python env of choice (e.g., `pip install numpy` or `conda install numpy`).

- Install dependencies in your python env of choice (e.g., `pip install -r requirements.txt`).

- If you are using a virtual/conda environment, make sure to use the same environment throughout the rest of the build.

- Build using `./build.sh`, or `./build.sh --headless` for headless systems (i.e. without an attached display) or if you need multi-gpu support.

- Test the build as described above (except that the interactive viewer is at `build/utils/viewer/viewer`).

- For use with Habitat-API and your own python code, add habitat-sim to your `PYTHONPATH` (not necessary if you used `python setup.py install` instead of `build.sh`). For example, modify your `.bashrc` (or `.bash_profile` on macOS) file by adding the line: `export PYTHONPATH=$PYTHONPATH:/path/to/habitat-sim/`
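For a one-off script, the same effect as the `PYTHONPATH` export can be had by extending `sys.path` at runtime. This is a minimal sketch; the helper names are hypothetical, the path is the placeholder from the text above, and the module name `habitat_sim` is an assumption about what the build exposes.

```python
import importlib.util
import sys


def add_to_sys_path(repo_root):
    """Append repo_root to sys.path once; return True if it was added."""
    if repo_root in sys.path:
        return False
    sys.path.append(repo_root)
    return True


def module_available(name):
    """Check whether a top-level module can be found without importing it."""
    return importlib.util.find_spec(name) is not None


add_to_sys_path("/path/to/habitat-sim/")  # placeholder path, replace with your checkout
# "habitat_sim" is assumed to be the importable module name.
print("habitat_sim importable:", module_available("habitat_sim"))
```

Editing `.bashrc` remains the better choice when several scripts or Habitat-API need the module, since it applies to every new shell.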

- The full Matterport3D (MP3D) dataset for use with Habitat can be downloaded using the official Matterport3D download script as follows: `python download_mp.py --task habitat -o path/to/download/`. You only need the habitat zip archive and not the entire Matterport3D dataset. Note that this download script requires python 2.7 to run.

- The Gibson dataset for use with Habitat can be downloaded by agreeing to the terms of use in the Gibson repository.

Load a specific MP3D or Gibson house: `examples/example.py --scene path/to/mp3d/house_id.glb`.

Additional arguments to `example.py` are provided to change the sensor configuration, print statistics of the semantic annotations in a scene, compute action-space shortest path trajectories, and enable other useful functionality. Refer to the `example.py` and `demo_runner.py` source files for an overview.

To reproduce the benchmark table from above, run `examples/benchmark.py --scene /path/to/mp3d/17DRP5sb8fy/17DRP5sb8fy.glb`.
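To run the example script over many houses, you can collect the `.glb` files under a dataset directory and build one command per scene. The sketch below assumes scenes are stored as `.glb` files somewhere under the dataset root, as in the benchmark path above; the helper names are hypothetical, and only the `--scene` flag from the text is used. The demo uses a temporary directory so it runs without the real dataset.

```python
import tempfile
from pathlib import Path


def find_scenes(dataset_root):
    """Return all .glb files below dataset_root, sorted for reproducibility."""
    return sorted(Path(dataset_root).rglob("*.glb"))


def example_command(scene):
    """Build the argument list for running the example script on one scene."""
    return ["python", "examples/example.py", "--scene", str(scene)]


# Demo on a throwaway directory that mimics one house folder.
with tempfile.TemporaryDirectory() as root:
    house = Path(root) / "17DRP5sb8fy"
    house.mkdir()
    (house / "17DRP5sb8fy.glb").touch()
    (house / "notes.txt").touch()  # non-scene files are ignored
    scenes = find_scenes(root)
    found = [scene.name for scene in scenes]
    command = example_command(scenes[0])

print(found)
print(command)
```

Each command list can be passed to `subprocess.run` from the repository root.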

- Build is tested on Ubuntu 16.04 with gcc 7.1.0, Fedora 28 with gcc 7.3.1, and MacOS 10.13.6 with Xcode 10 and clang-1000.10.25.5. If you experience compilation issues, please open an issue with the details of your OS and compiler versions.

- If you are running on a remote machine and experience display errors when initializing the simulator, ensure you do not have `DISPLAY` defined in your environment (run `unset DISPLAY` to undefine the variable).

- If your machine has a custom installation location for the nvidia OpenGL and EGL drivers, you may need to manually provide the `EGL_LIBRARY` path to cmake as follows. Add `-DEGL_LIBRARY=/usr/lib/x86_64-linux-gnu/nvidia-opengl/libEGL.so` to the `build.sh` command line invoking cmake. When running any executable, adjust the environment as follows: `LD_LIBRARY_PATH=/usr/lib/x86_64-linux-gnu/nvidia-opengl:${LD_LIBRARY_PATH} examples/example.py`.
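When launching the simulator from another Python process, both environment adjustments above can be applied to a copy of the environment rather than to your shell. This is a sketch only; `headless_env` is a hypothetical helper, and the driver directory is the example path from the text.

```python
import os


def headless_env(base_env, egl_dir=None):
    """Copy base_env, drop DISPLAY, and optionally prepend egl_dir to LD_LIBRARY_PATH."""
    env = dict(base_env)
    env.pop("DISPLAY", None)  # same effect as `unset DISPLAY`
    if egl_dir:
        current = env.get("LD_LIBRARY_PATH", "")
        env["LD_LIBRARY_PATH"] = egl_dir + (os.pathsep + current if current else "")
    return env


env = headless_env(
    {"DISPLAY": ":0", "LD_LIBRARY_PATH": "/opt/lib"},
    egl_dir="/usr/lib/x86_64-linux-gnu/nvidia-opengl",
)
print(env)
# In practice, start from os.environ and pass the result to a child process, e.g.:
# subprocess.run(["python", "examples/example.py", "--scene", scene_path],
#                env=headless_env(os.environ, egl_dir))
```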

We use clang-format for linting and code style enforcement of c++ code.

Code style follows the Google C++ guidelines.

Install clang-format through `brew install clang-format` on MacOS, or by downloading binaries or sources from http://releases.llvm.org/download.html for Ubuntu etc.

For vim integration, add `map <C-K> :%!clang-format<cr>` to your `.vimrc` file and use Ctrl+K to format the entire file.

An integration plugin is also available for vscode.

We use black for linting python code.

Install black through `pip install black`.

We also use pre-commit hooks to ensure linting and style enforcement.

Install the pre-commit hooks with `pip install pre-commit && pre-commit install`.

- Install `ninja` (`sudo apt install ninja-build` on Linux, or `brew install ninja` on MacOS) for significantly faster incremental builds.

- Install `ccache` (`sudo apt install ccache` on Linux, or `brew install ccache` on MacOS) for significantly faster clean re-builds and builds with slightly different settings.
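To see whether these optional tools are already installed, you can look them up on `PATH`. A minimal sketch with a hypothetical helper name; it only reports what it finds and does not change the build.

```python
import shutil


def find_build_tools(names=("ninja", "ccache")):
    """Map each tool name to its executable path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


for tool, path in find_build_tools().items():
    print(f"{tool}: {path or 'not found'}")
```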

The Habitat project would not have been possible without the support and contributions of many individuals. We would like to thank Xinlei Chen, Georgia Gkioxari, Daniel Gordon, Leonidas Guibas, Saurabh Gupta, Or Litany, Marcus Rohrbach, Amanpreet Singh, Devendra Singh Chaplot, Yuandong Tian, and Yuxin Wu for many helpful conversations and guidance on the design and development of the Habitat platform.

Habitat-Sim is MIT licensed. See the LICENSE file for details.

- Habitat: A Platform for Embodied AI Research. Manolis Savva, Abhishek Kadian, Oleksandr Maksymets, Yili Zhao, Erik Wijmans, Bhavana Jain, Julian Straub, Jia Liu, Vladlen Koltun, Jitendra Malik, Devi Parikh, Dhruv Batra. Tech report, arXiv:1904.01201, 2019.