- This repo is forked from https://github.com/wenet-e2e/wekws, which hosts the original documentation.

- As a forked repo, why create a new repo rather than a "fork"?

Because GitHub does not support uploading LFS objects to a forked repo, and I need LFS for my development. In addition, I made some incompatible modifications and have no plans to open a pull request against the original wekws.

- Option 1: download the AISHELL2, musan, and dns_challenge datasets with a script

`python tools/download.py <download_dir>`: this option downloads from an Aliyun mirror, extracts the archives, and generates a file list, `all.txt`.

- Option 2: download manually

- Hi-MIA (你好米雅, "Hello Mia"): A far-field text-dependent speaker verification database for AISHELL Speaker Verification Challenge 2019

- LibriSpeech: Large-scale (1000 hours) corpus of read English speech

- THCHS30: A Free Chinese Speech Corpus Released by CSLT@Tsinghua University

- AIShell-1: An Open-Source Mandarin Speech Corpus and A Speech Recognition Baseline

- MUSAN: a corpus of music, speech, and noise recordings.

- AISHELL-2: the largest free speech corpus available for Mandarin ASR research

- dns_challenge: ICASSP 2023 Deep Noise Suppression Challenge

- "hi小问、你好问问" (Hi Xiaowen, Nihao Wenwen): Chinese hotword detection dataset, provided by Mobvoi Co., Ltd.
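Option 1 is described as producing a file list, `all.txt`. If you download manually instead, a comparable list can be built by walking the extracted audio. This is a minimal sketch, assuming `all.txt` holds one absolute audio path per line and that only `.wav` and `.flac` files matter. `build_file_list` is a hypothetical helper that is not part of the repo, and the real script's output format may differ.

```python
from pathlib import Path

# Audio extensions to collect (assumption: adjust to the datasets you actually downloaded).
AUDIO_EXTS = {".wav", ".flac"}


def build_file_list(root: str, out_path: str) -> int:
    """Hypothetical helper: write one absolute audio path per line to out_path.

    Walks root recursively, keeps files whose extension (case-insensitive) is in
    AUDIO_EXTS, sorts them for a stable order, and returns how many were written.
    """
    # sorted() consumes the whole walk before out_path is opened for writing.
    paths = sorted(
        str(p.resolve())
        for p in Path(root).rglob("*")
        if p.is_file() and p.suffix.lower() in AUDIO_EXTS
    )
    with open(out_path, "w", encoding="utf-8") as f:
        for p in paths:
            f.write(p + "\n")
    return len(paths)
```

For example, `build_file_list("/data/kws", "/data/kws/all.txt")` lists every WAV and FLAC file under `/data/kws`. Sorting keeps the list identical across runs, which makes later random sampling reproducible when the sampler uses a fixed seed.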

- Data notes

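- Of these datasets, only Hi-MIA and hi小问 are suitable as positive samples for training and researching keyword spotting (KWS) models. The others are only suitable as unrelated or negative samples. The Hi-MIA recordings differ mainly in their distance from the microphone. The hi小问 data is more diverse and includes synthesized speech, noise-augmented data, and a variety of intonations. The AISHELL-2 dataset requires an application, and the provider offers no download link. Once the application is approved, you receive an email and must mail in a hard drive to obtain the data.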


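- Training uses the himia dataset as the source of wake-word (positive) data and the AISHELL2 dataset as the source of non-wake-word (negative) data. During development, 1000 positive and 1000 negative clips are randomly selected for the training set, and 100 of each for the validation set. All clips are resampled to 16 kHz. The procedure is as follows: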

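  - Run `python tools/create_dataset.py` to sample and convert the training and validation sets. This also creates the file list `wav.scp` and the label file `label.txt`.

  - Run `bash tools/wav_to_duration.sh /path/to/wav.scp /path/to/wav.dur` to create the duration file. This file must be generated for both the training set and the validation set.

  - Run `python tools/make_list.py /path/to/wav.scp /path/to/label_text /path/to/wav.dur /path/to/data.list` to generate the dataset index. This file must also be generated for both the training set and the validation set.

  - To increase the sample count, modify `tools/create_dataset.py` to draw a different number of samples.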
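The duration and index steps above join three Kaldi-style `key value` files on the utterance key. The following is a minimal Python sketch of that join. It assumes `wav.dur` holds `key seconds` lines and `data.list` holds one JSON object per line with `key`, `txt`, `duration`, and `wav` fields. That layout is assumed to match upstream wekws, so check `tools/make_list.py` for the exact fields. `write_wav_dur` and `make_data_list` are hypothetical stand-ins for `tools/wav_to_duration.sh` and `tools/make_list.py`, not their actual code. Durations are read from the WAV header with the standard `wave` module, so only PCM WAV files are supported.

```python
import json
import wave


def read_kaldi_map(path):
    """Read a Kaldi-style 'key value' file into a dict (the value may contain spaces)."""
    table = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            parts = line.strip().split(maxsplit=1)
            if not parts:
                continue  # skip blank lines
            table[parts[0]] = parts[1] if len(parts) > 1 else ""
    return table


def wav_duration(path):
    """Duration in seconds of a PCM WAV file, computed from its header."""
    with wave.open(path, "rb") as w:
        return w.getnframes() / w.getframerate()


def write_wav_dur(wav_scp, dur_path):
    """Hypothetical stand-in for wav_to_duration.sh: write 'key seconds' per line."""
    wavs = read_kaldi_map(wav_scp)
    with open(dur_path, "w", encoding="utf-8") as f:
        for key, path in wavs.items():
            f.write(f"{key} {wav_duration(path):.3f}\n")


def make_data_list(wav_scp, label_file, dur_file, out_list):
    """Hypothetical stand-in for make_list.py: join on key, write JSON lines.

    Returns the number of utterances written.
    """
    wavs = read_kaldi_map(wav_scp)
    labels = read_kaldi_map(label_file)
    durs = read_kaldi_map(dur_file)
    n = 0
    with open(out_list, "w", encoding="utf-8") as f:
        for key, path in wavs.items():
            if key not in labels or key not in durs:
                continue  # drop utterances that lack a label or a duration
            obj = {"key": key, "txt": labels[key],
                   "duration": float(durs[key]), "wav": path}
            f.write(json.dumps(obj, ensure_ascii=False) + "\n")
            n += 1
    return n
```

For example, call `write_wav_dur("train/wav.scp", "train/wav.dur")` and then `make_data_list("train/wav.scp", "train/label.txt", "train/wav.dur", "train/data.list")`, and repeat both for the validation set. In this sketch, utterances missing a label or duration are skipped rather than raising an error. That choice makes mismatched files easy to miss, so compare the returned count against the number of lines in `wav.scp`.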

# Install CUDA 12.1 and make sure /usr/local/cuda/bin is on PATH

# Check the version with nvcc -V; check the GPU model, driver version, and CUDA version with nvidia-smi

# Make sure /usr/local/cuda/lib64 is on LD_LIBRARY_PATH

sudo apt-get install portaudio19-dev

cd examples/hi_xiaowen/s0

conda create -n annakws python=3.9

conda activate annakws

pip install -r requirements.txt

bash run.sh -1 4 # data download -> data processing -> training -> model merging and export
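The CUDA notes at the start of these setup steps require `/usr/local/cuda/bin` on `PATH` and `/usr/local/cuda/lib64` on `LD_LIBRARY_PATH`. You can run a small check like the one below before `run.sh` to catch a missing entry. This is a sketch that assumes the default CUDA install prefix. It only inspects environment variables and does not verify the CUDA version or driver; `nvcc -V` and `nvidia-smi` remain the tools for that. `missing_cuda_paths` is a hypothetical helper, not part of the repo.

```python
import os

# Default CUDA locations from the setup notes (assumption: adjust if CUDA lives elsewhere).
CUDA_BIN = "/usr/local/cuda/bin"
CUDA_LIB = "/usr/local/cuda/lib64"


def _entries(value):
    """Split a PATH-like variable and drop trailing slashes so '/a/b/' matches '/a/b'."""
    return {p.rstrip("/") for p in value.split(os.pathsep) if p}


def missing_cuda_paths(env=None):
    """Return (variable, directory) pairs for CUDA directories missing from the environment."""
    env = os.environ if env is None else env
    missing = []
    if CUDA_BIN not in _entries(env.get("PATH", "")):
        missing.append(("PATH", CUDA_BIN))
    if CUDA_LIB not in _entries(env.get("LD_LIBRARY_PATH", "")):
        missing.append(("LD_LIBRARY_PATH", CUDA_LIB))
    return missing


if __name__ == "__main__":
    for var, directory in missing_cuda_paths():
        print(f"{directory} is not on {var}")
```

Calling `missing_cuda_paths()` with no arguments checks the current process environment. An empty list means both directories are present.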

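# Run a quick test with gradio_example. Note that gradio_example ships with a model file uploaded via LFS and several audio files; you can replace the model with one you trained yourself.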

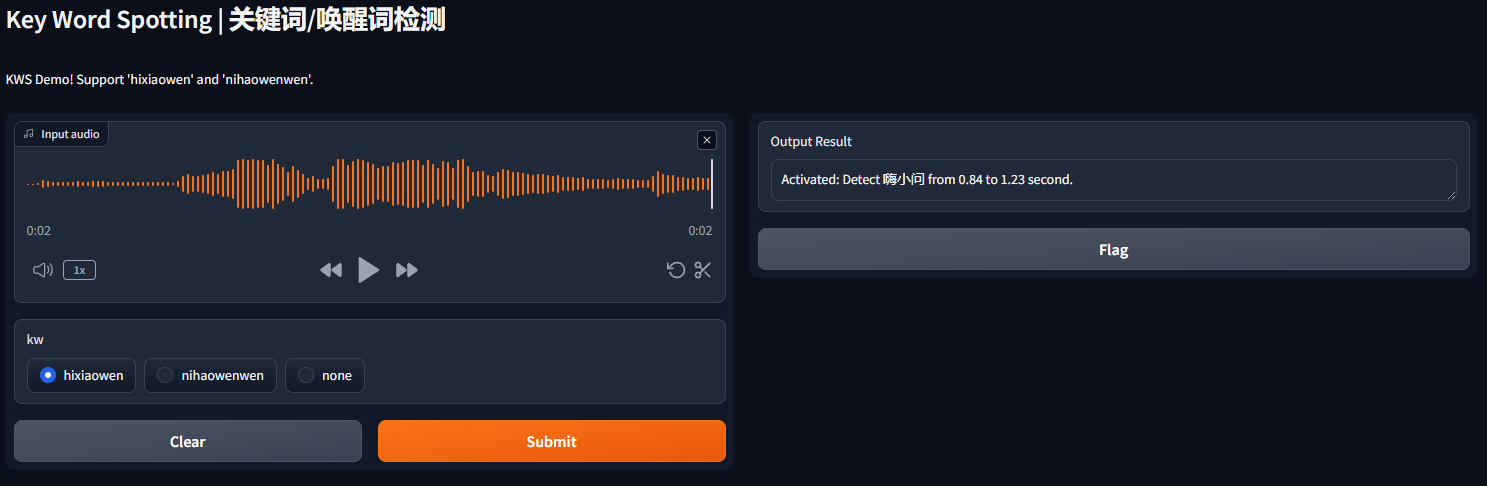

cd examples/hi_xiaowen/s0/gradio_example

python app.py

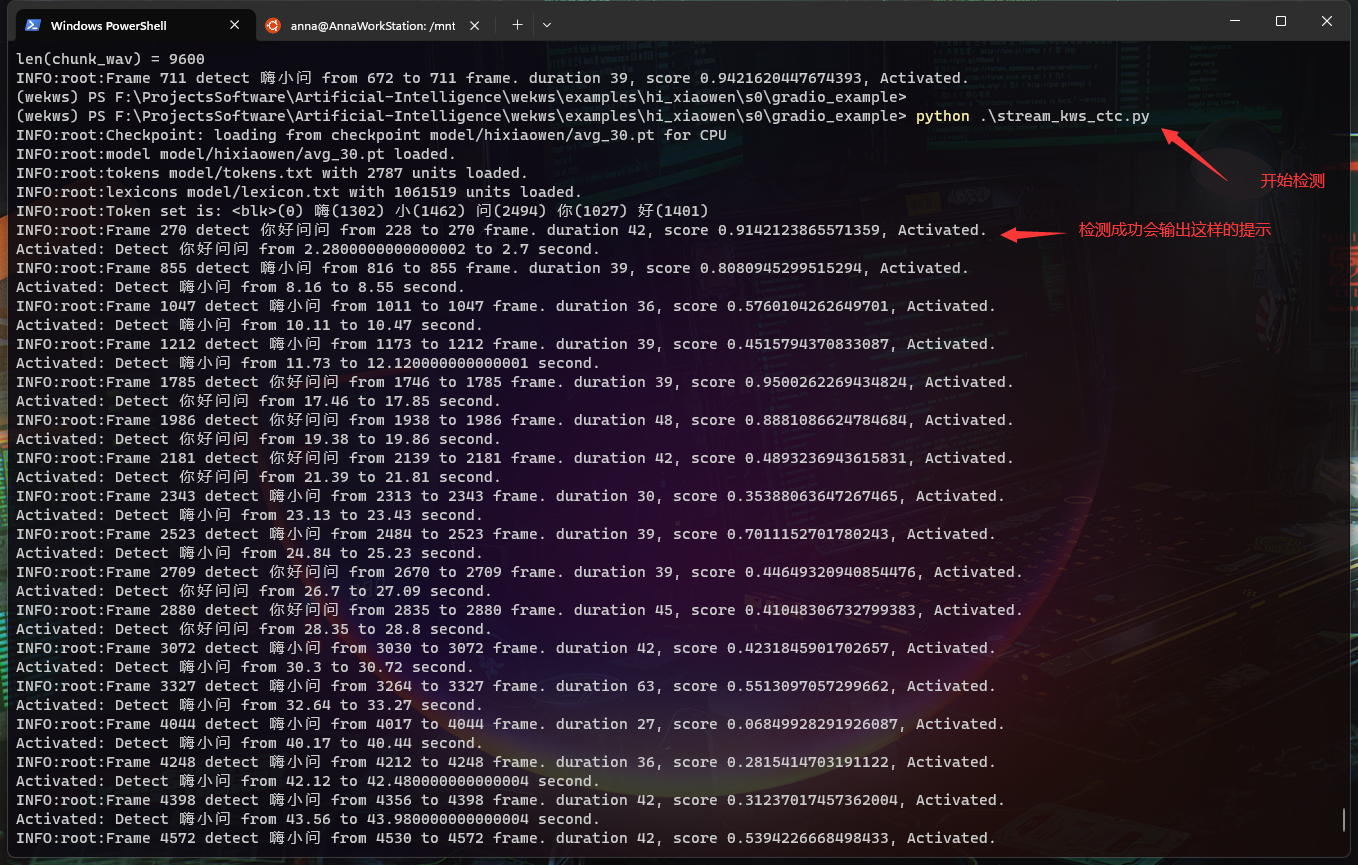

# Streaming test: keep the audio stream open and run wake-word detection continuously

cd examples/hi_xiaowen/s0/gradio_example

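python stream_kws_ctc.py

1. [mycroft-precise](https://fancyerii.github.io/books/mycroft-precise/), tf+keras. Revived through refactoring and made compatible with newer frameworks. Model accuracy could not be pushed any higher, but its audio processing is worth borrowing from. [forked by jiafeng5513](https://github.com/jiafeng5513/precise)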

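2. [snowboy](https://github.com/Kitt-AI/snowboy), partially open source with very strong performance. However, its model design and audio-processing details are not available, and repeated attempts to port it to Android x86 failed.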

3. [snowman](https://github.com/Thalhammer/snowman), a reverse-engineered [snowboy](https://github.com/Kitt-AI/snowboy). It is not well written and not worth spending time on.

4. [SWIG](https://luoxiangyong.gitbooks.io/swig-documentation-chinese/content/chapter02/02-04-supported_ccpp-language-features.html), used to build [snowboy](https://github.com/Kitt-AI/snowboy). It quickly generates bindings that expose C++ code to other languages.

5. [Apple ML Research: Voice Trigger System for Siri](https://machinelearning.apple.com/research/voice-trigger)

6. [paddlespeech 语音唤醒初探](https://www.cnblogs.com/talkaudiodev/p/17176554.html) (A first look at wake-word detection with PaddleSpeech), a second-hand academic source; read with caution.

7. [lenovo-voice: PVTC2020](https://github.com/lenovo-voice/THE-2020-PERSONALIZED-VOICE-TRIGGER-CHALLENGE-BASELINE-SYSTEM), the baseline for a competition. Its main value was leading me to the datasets.

8. [paddlespeech 关键词识别](https://github.com/PaddlePaddle/PaddleSpeech/blob/develop/demos/keyword_spotting/README_cn.md) (PaddleSpeech keyword spotting)

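9. speechcommand_v1: requires rebuilding torchaudio or switching to CUDA.

10. hi_snips: requires a dataset that can only be downloaded after registration; registration has been submitted via [Sonos Voice Experience Open Datasets](https://docs.google.com/forms/d/e/1FAIpQLSdluk5umSFUJhzcmrcbQJoVNPyMoiQgLoMEXS11Bju0_fF_kw/viewform).

11. hi_xiaowen: requires rebuilding torchaudio or switching to CUDA.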

- DCCRN-KWS: An Audio Bias Based Model for Noise Robust Small-Footprint Keyword Spotting. The model looks promising, but no code is available. Judging from the paper, the compute required for training may be prohibitive.

- ML-KWS-for-MCU

- paperswithcode: Keyword Spotting

- Learning Efficient Representations for Keyword Spotting with Triplet Loss

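- RapidASR: an ASR project with unclear open-source status. The code here appears to be for wake-word detection.

- Maix-Speech: partially open source, and focused mainly on ASR and TTS.

- Android offline speech recognition (Android离线语音识别): the project is too old. Its release APK cannot be installed or run on the IVI, and rebuilding it on a newer toolchain would take considerable debugging time. Not recommended.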

- Training your own far-field wake-word model with ModelScope (使用ModelScope训练自有的远场语音唤醒模型)