This repository contains pre-trained models and testing code for MarrNet, presented at NIPS 2017.


We use Torch 7 (http://torch.ch) for our implementation with these additional packages:

- cutorch, cudnn: we use cutorch and cudnn for our model.

- fb.mattorch: we use .mat files for saving voxelized shapes.

- Basic visualization: MATLAB (tested on R2016b)

- Advanced visualization: Blender with bundled Python and the packages numpy, scipy, mathutils, itertools, bpy, and bmesh.
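To check that Blender's bundled Python can see the packages listed above, a quick check like the one below may help. This is a minimal sketch and not part of the repository: the script name check_packages.py and the helper missing_packages are hypothetical, and the check only tests whether each module can be located, not its version.

```python
import importlib.util

# Packages the Blender visualization relies on, as listed above.
REQUIRED = ["numpy", "scipy", "mathutils", "itertools", "bpy", "bmesh"]


def missing_packages(names=REQUIRED):
    """Return the subset of top-level module `names` this interpreter cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages found.")
```

Run it with Blender's interpreter, e.g. `blender --background --python check_packages.py`; under a regular system Python, bpy, bmesh, and mathutils will typically be reported as missing.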

Our current release has been tested on Ubuntu 14.04.

git clone git@github.com:jiajunwu/marrnet.git

cd marrnet

./download_models.sh

We show how to reconstruct shapes from 2D images by using our pre-trained models. The file main.lua has the following options.

- `-imgname`: the name of the test image, which should be stored in the image folder.

Usage

th main.lua -imgname chair_1.png

The output is saved under the folder ./output and named %imgname.mat, where %imgname is the name of the image without the filename extension.
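The output naming rule can be written as a small Python helper, for example when scripting over many test images. This is an illustrative sketch that assumes main.lua is run from the repository root; output_path_for is a hypothetical name, not part of the repository.

```python
import os


def output_path_for(imgname, output_dir="./output"):
    """Map a test image name to the .mat file main.lua writes for it.

    The filename extension is dropped, so 'chair_1.png' maps to
    './output/chair_1.mat'.
    """
    stem, _ext = os.path.splitext(os.path.basename(imgname))
    return os.path.join(output_dir, stem + ".mat")
```

For instance, `output_path_for("chair_1.png")` gives `./output/chair_1.mat` on Linux.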

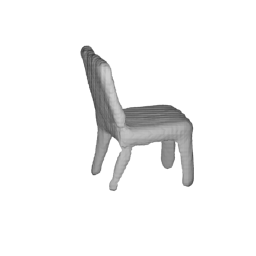

Note that the input image is automatically resized to 256 x 256. The model works best if the chair is centered and fills up about half of the whole image (as in image/chair_1.png).
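If a test image does not look like image/chair_1.png, cropping it around the chair before running main.lua may help. Below is a minimal sketch, not part of the repository, assuming you already have a bounding box for the chair and reading "fills up about half of the whole image" as the box's longer side spanning about half of the crop's side; centered_square_crop and its fill parameter are hypothetical.

```python
def centered_square_crop(bbox, fill=0.5):
    """Return a square crop box (left, top, right, bottom) centered on `bbox`.

    `bbox` is (left, top, right, bottom) in pixels. The crop side is chosen so
    that the longer side of the box covers `fill` of the crop side. The crop may
    extend past the image borders, in which case the image must be padded.
    """
    left, top, right, bottom = bbox
    if right <= left or bottom <= top:
        raise ValueError("bbox must have positive width and height")
    if not 0 < fill <= 1:
        raise ValueError("fill must be in (0, 1]")
    cx = (left + right) / 2.0
    cy = (top + bottom) / 2.0
    half = max(right - left, bottom - top) / fill / 2.0
    return (cx - half, cy - half, cx + half, cy + half)
```

A square crop keeps the aspect ratio intact when the image is later resized to 256 x 256.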

We offer two ways of visualizing results: one in MATLAB and the other in Blender. We used the Blender visualization in our paper. The MATLAB visualization is easier to install and run, but it is slower and produces lower-quality output than Blender.

MATLAB:

Please use the function visualization/matlab/visualize.m for visualization. The MATLAB code can either display rendered objects or save them as images. It also supports downsampling and thresholding for faster rendering. The color of each voxel represents its confidence value.

Options include:

- inputfile: the .mat file that stores the voxel matrices.
- indices: the indices of objects in the inputfile that should be rendered. The default value is 0, which stands for rendering all objects.
- step (s): downsample objects via max pooling with step s for efficiency. The default value is 4 (128 x 128 x 128 -> 32 x 32 x 32).
- threshold: voxels with confidence lower than the threshold are not displayed.
- outputprefix:
  - when not specified, MATLAB shows figures directly.
  - when specified, MATLAB stores rendered images of objects at ../result/outputprefix_%i_front.bmp and ../result/outputprefix_%i_side.bmp, where %i is the index of objects. The front view should be the same as the view of the input image, and the side view is usually easier to interpret.
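The step and threshold options can be illustrated in NumPy. This sketch mirrors the behavior described above (max pooling over non-overlapping s x s x s blocks, then hiding voxels below the threshold); it is not a port of visualize.m, downsample_max and visible_voxels are hypothetical names, and it assumes each side of the voxel grid is divisible by s.

```python
import numpy as np


def downsample_max(voxels, step):
    """Max-pool a 3D confidence grid over non-overlapping step^3 blocks."""
    if voxels.ndim != 3:
        raise ValueError("expected a 3D array")
    if any(dim % step for dim in voxels.shape):
        raise ValueError("each side must be divisible by step")
    x, y, z = (dim // step for dim in voxels.shape)
    # Split every axis into (blocks, step) and take the max inside each block.
    blocks = voxels.reshape(x, step, y, step, z, step)
    return blocks.max(axis=(1, 3, 5))


def visible_voxels(voxels, threshold):
    """Boolean mask of voxels whose confidence is not lower than `threshold`."""
    return voxels >= threshold
```

With the default step of 4, a 128 x 128 x 128 grid becomes 32 x 32 x 32, and a block is kept visible as long as its most confident voxel passes the threshold.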

Usage (after running th main.lua -imgname chair_1.png; run in MATLAB from the folder visualization/matlab):

visualize('../../output/chair_1.mat', 0, 2, 0.03, 'chair_1')

The code will visualize the shape in 2 different views. The visualization might take a while; if it is too slow, increase the step parameter.
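To see why a smaller step is slower, count the voxels left after pooling. Assuming the 128 x 128 x 128 grid mentioned above, the example call with step 2 keeps 64^3 = 262,144 voxels, eight times the 32^3 = 32,768 of the default step 4. The hypothetical helper below only does this arithmetic; actual rendering time also depends on how many voxels pass the threshold.

```python
def voxels_after_pooling(side=128, step=4):
    """Number of voxels in a side^3 grid after max pooling with the given step."""
    if side % step:
        raise ValueError("side must be divisible by step")
    return (side // step) ** 3


for step in (1, 2, 4, 8):
    print(f"step={step}: {voxels_after_pooling(step=step):,} voxels")
```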

Blender:

Options for the Blender visualization include:

- file_path: the path to the .mat file.
- output_dir: the path of the output directory.
- outputprefix: Blender stores rendered images of objects at outputprefix_%i_view_%j.png, where %i is the index of objects and %j is the index of views. When not specified, Blender uses 'im' by default.
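The naming scheme for the Blender renders can be sketched as a helper that lists the expected files, e.g. to collect them after a run. This is hypothetical and not part of render.py. It assumes the images are written directly into output_dir, and since whether %i and %j start at 0 or 1 is not stated here, the start index is a parameter to check against the files render.py actually writes.

```python
import os


def blender_render_paths(output_dir, num_objects, num_views=3,
                         outputprefix="im", start=0):
    """List expected render paths of the form outputprefix_%i_view_%j.png.

    Assumes files sit directly in output_dir and that both the object index
    (%i) and the view index (%j) begin at `start`; verify against render.py.
    """
    paths = []
    for i in range(start, start + num_objects):
        for j in range(start, start + num_views):
            name = f"{outputprefix}_{i}_view_{j}.png"
            paths.append(os.path.join(output_dir, name))
    return paths
```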

Usage (after running th main.lua -imgname chair_1.png):

cd visualization/blender

blender --background --python render.py -- ../../output/chair_1.mat ../result/ chair_1

Blender will render 3 different views, where the first view should be the same as the input image.
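The reconstruction and Blender rendering steps can be chained from a small driver script. This is a sketch under stated assumptions: it is run from the repository root, th and blender are on the PATH, and build_commands and run_pipeline are hypothetical names. By default it only prints the commands it would run.

```python
import subprocess


def build_commands(imgname, outputprefix=None):
    """Return (argv, cwd) pairs for reconstruction followed by Blender rendering."""
    stem = imgname.rsplit(".", 1)[0]  # output .mat is named without the extension
    prefix = outputprefix or stem
    reconstruct = (["th", "main.lua", "-imgname", imgname], ".")
    render = (["blender", "--background", "--python", "render.py", "--",
               f"../../output/{stem}.mat", "../result/", prefix],
              "visualization/blender")
    return [reconstruct, render]


def run_pipeline(imgname, dry_run=True):
    """Print each command; execute it too when dry_run is False."""
    for argv, cwd in build_commands(imgname):
        print(f"[{cwd}] {' '.join(argv)}")
        if not dry_run:
            subprocess.run(argv, cwd=cwd, check=True)
```

Passing each command as an argument list with an explicit cwd avoids shell quoting issues and mirrors the `cd visualization/blender` step above.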

@inproceedings{marrnet,
  title={{MarrNet: 3D Shape Reconstruction via 2.5D Sketches}},
  author={Wu, Jiajun and Wang, Yifan and Xue, Tianfan and Sun, Xingyuan and Freeman, William T and Tenenbaum, Joshua B},
  booktitle={Advances In Neural Information Processing Systems},
  year={2017}
}

For any questions, please contact Jiajun Wu (jiajunwu@mit.edu) and Yifan Wang (wangyifan1995@gmail.com).