DisOptNet: Distilling Semantic Knowledge from Optical Images for Weather-independent Building Segmentation

Jian Kang, Zhirui Wang, Ruoxin Zhu, Junshi Xia, Xian Sun, Ruben Fernandez-Beltran, Antonio Plaza

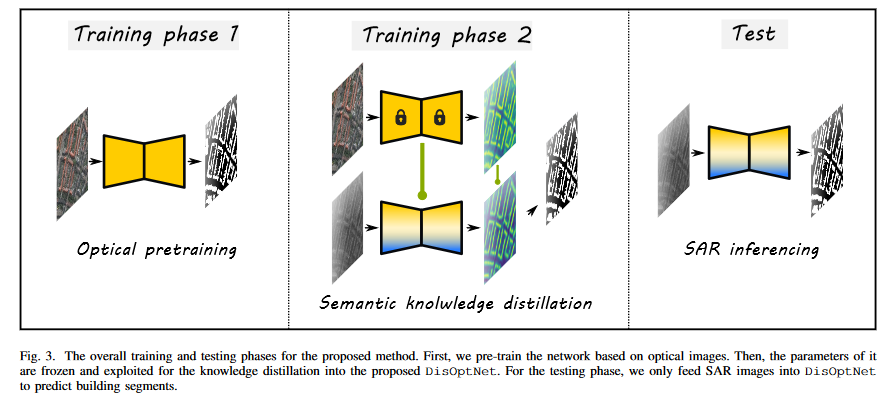

This repo contains the code for the TGRS paper *DisOptNet: Distilling Semantic Knowledge from Optical Images for Weather-independent Building Segmentation*. Compared to optical images, SAR images carry semantics that are less rich and harder to interpret, because of factors such as speckle noise and the SAR imaging geometry. As a result, most state-of-the-art methods for building footprint extraction from SAR focus on designing advanced network architectures or loss functions, and few works have tried to improve segmentation performance by transferring knowledge from optical images. In this paper, we propose DisOptNet, a method that distills useful semantic knowledge from optical images into a network that uses only SAR data.
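The distillation idea can be illustrated with a generic feature-matching loss: the SAR network (the student) is penalized when its intermediate features differ from those of the optical network (the teacher). This is a minimal sketch assuming an L2 (mean squared error) loss over flattened feature maps; `mse` and `distillation_loss` are hypothetical names, and DisOptNet's actual distillation module and loss are defined in the paper and the repository code, not here.

```python
# Minimal sketch of feature-level knowledge distillation.
# Assumption: an L2 feature-matching loss; DisOptNet's real loss may differ.

def mse(a, b):
    """Mean squared error between two equally sized flat feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(student_feats, teacher_feats):
    """Average MSE over a list of intermediate feature maps.

    student_feats: features from the SAR network (trainable)
    teacher_feats: features from the optical network (fixed targets)
    """
    if len(student_feats) != len(teacher_feats):
        raise ValueError("need one teacher feature map per student feature map")
    losses = [mse(s, t) for s, t in zip(student_feats, teacher_feats)]
    return sum(losses) / len(losses)


# Two feature levels, each flattened to a short vector.
print(distillation_loss([[0.0, 1.0], [2.0, 2.0]], [[0.0, 0.0], [2.0, 4.0]]))  # → 1.25
```

Matching several feature levels lets the teacher guide both low-level and high-level representations; in a real network these vectors would be tensors and the teacher's features would be computed without gradients.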

The experiments in the paper use the SpaceNet6 dataset. Data preparation follows the SpaceNet6 baseline repo.
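The second training stage uses SAR and RGB data together, so each SAR tile needs its optical counterpart. A minimal sketch of pairing tiles by filename, assuming the SpaceNet6 naming scheme in which a SAR tile and its RGB tile share a name except for the modality token (`SAR-Intensity` vs `PS-RGB`); check this against your copy of the dataset and the baseline repo's preparation scripts. `pair_tiles` is a hypothetical helper, not part of either repo.

```python
# Assumption: SAR and RGB tile names differ only in the modality token.
MODALITY_TOKENS = ("SAR-Intensity", "PS-RGB")


def pair_tiles(sar_names, rgb_names):
    """Return (sar, rgb) filename pairs that match once the modality token is masked."""

    def key(name):
        # Replace whichever modality token appears with a common placeholder.
        for token in MODALITY_TOKENS:
            name = name.replace(token, "{MOD}")
        return name

    rgb_by_key = {key(n): n for n in rgb_names}
    pairs = []
    for sar in sorted(sar_names):
        rgb = rgb_by_key.get(key(sar))
        if rgb is not None:  # SAR tiles without an RGB counterpart are skipped
            pairs.append((sar, rgb))
    return pairs
```

Unmatched SAR tiles are dropped rather than raising an error, so it is worth logging how many pairs were found before starting the second stage.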

```bash
# first stage training (RGB)
./run_st1.sh

# second stage training (SAR+RGB)
./run_st2.sh
```
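The two training stages can be sketched with a toy per-pixel model: stage 1 fits an optical "teacher", and stage 2 fits a SAR "student" with a segmentation loss plus a term that pulls its logits toward those of the frozen teacher. Everything here is an assumption for illustration: the scalar linear models, the toy `DATA` values, the weight `lam`, and the logit-matching term stand in for the real networks and losses, and none of it is taken from `run_st1.sh` or `run_st2.sh`.

```python
import math

# Hypothetical paired pixels: (optical feature, SAR feature, building label).
DATA = [(2.0, 0.8, 1), (1.5, 0.3, 1), (-2.0, -0.5, 0), (-1.0, 0.2, 0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bce(p, y, eps=1e-12):
    """Binary cross-entropy for a single pixel."""
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))


def train_teacher(data, lr=0.1, steps=200):
    """Stage 1 (RGB): fit the teacher logit z_t = a * rgb + c by gradient descent."""
    a, c = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        ga = gc = 0.0
        for rgb, _, y in data:
            g = sigmoid(a * rgb + c) - y  # d BCE / d logit
            ga += g * rgb / n
            gc += g / n
        a, c = a - lr * ga, c - lr * gc
    return a, c


def student_loss(w, b, teacher, data, lam):
    """Stage-2 objective: BCE on SAR predictions + lam * (z_s - z_t)^2."""
    a, c = teacher
    total = 0.0
    for rgb, sar, y in data:
        z_s, z_t = w * sar + b, a * rgb + c
        total += bce(sigmoid(z_s), y) + lam * (z_s - z_t) ** 2
    return total / len(data)


def train_student(teacher, data, lam=0.5, lr=0.1, steps=200):
    """Stage 2 (SAR+RGB): fit z_s = w * sar + b; the teacher is never updated."""
    a, c = teacher  # read-only teacher parameters from stage 1
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        gw = gb = 0.0
        for rgb, sar, y in data:
            z_s, z_t = w * sar + b, a * rgb + c
            g = (sigmoid(z_s) - y) + 2 * lam * (z_s - z_t)  # d loss / d z_s
            gw += g * sar / n
            gb += g / n
        w, b = w - lr * gw, b - lr * gb
    return w, b


teacher = train_teacher(DATA)
student = train_student(teacher, DATA)
```

The optical data is only needed to compute the teacher's targets during training; once the student is trained, predictions use `w * sar + b` alone, which mirrors the goal of a SAR-only model at inference time.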

```bibtex
@article{kang2021RiDe,
  title={DisOptNet: Distilling Semantic Knowledge from Optical Images for Weather-independent Building Segmentation},
  author={Kang, Jian and Wang, Zhirui and Zhu, Ruoxin and Xia, Junshi and Sun, Xian and Fernandez-Beltran, Ruben and Plaza, Antonio},
  journal={IEEE Transactions on Geoscience and Remote Sensing},
  year={2022},
  note={DOI:10.1109/TGRS.2022.3165209},
  publisher={IEEE}
}
```