
Vega ver1.5.0 released


Feature enhancements:

- Fixed some bugs in distributed training.

- Some networks support PyTorch + Ascend 910.

- The vega-process, vega-progress, and vega-verify-cluster commands can output information in JSON format.

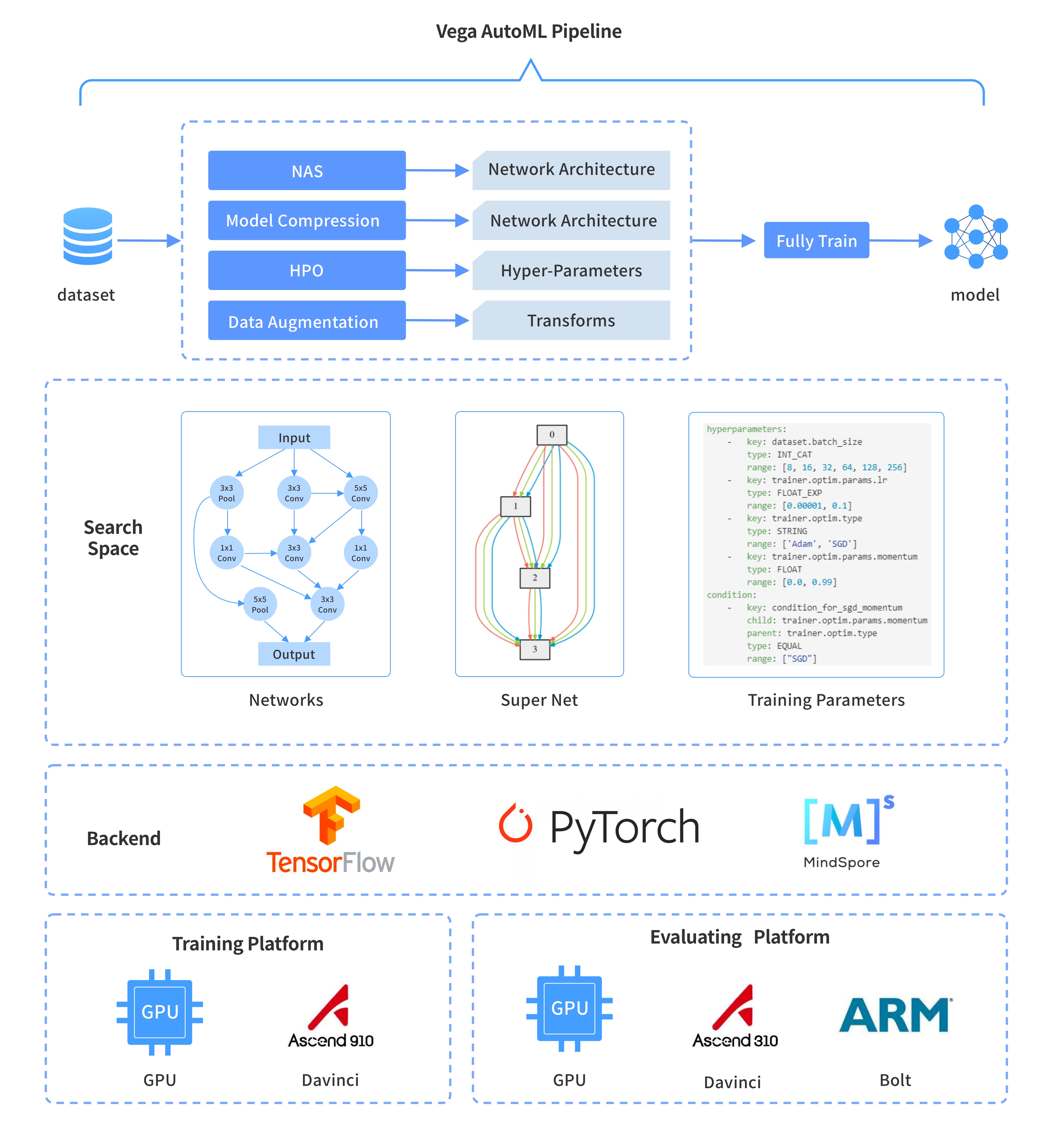

Vega is an AutoML algorithm toolchain developed by Noah's Ark Laboratory. Its main features are as follows:

- Full pipeline capabilities: The AutoML capabilities cover key functions such as Hyperparameter Optimization, Data Augmentation, Network Architecture Search (NAS), Model Compression, and Fully Train. These functions are highly decoupled and can be configured as required to construct a complete pipeline.

- Industry-leading AutoML algorithms: Provides industry-leading algorithms developed in-house by Noah's Ark Laboratory (see Benchmark), and a Model Zoo from which state-of-the-art (SOTA) models can be downloaded.

- Fine-grained network search space: The network search space can be freely defined, and rich network architecture parameters are provided for use in the search space. Network architecture parameters and model training hyperparameters can be searched at the same time, and the search space can be applied to PyTorch, TensorFlow, and MindSpore.

- High-concurrency neural network training capability: Provides high-performance trainers to accelerate model training and evaluation.

- Multi-Backend: PyTorch (GPU and Ascend 910), TensorFlow (GPU and Ascend 910), MindSpore (Ascend 910).

- Ascend platform: Search and training on the Ascend 910 and model evaluation on the Ascend 310.
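The fine-grained search space described above, where network architecture parameters and training hyperparameters are searched at the same time, can be illustrated with plain random search over one joint space. This is a minimal sketch under stated assumptions: the parameter names, value ranges, and the toy_score function are hypothetical, and in Vega the search space is defined in the YAML configuration file rather than in Python code like this.

```python
import math
import random

# Hypothetical joint search space: architecture parameters and training
# hyperparameters share one dictionary, so each trial samples both together.
# Names and ranges are illustrative only, not Vega's configuration schema.
SEARCH_SPACE = {
    "backbone.depth": [18, 34, 50],        # architecture choice
    "backbone.base_channels": [32, 64],    # architecture choice
    "trainer.lr": (1e-4, 1e-1),            # (low, high), sampled log-uniformly
    "trainer.batch_size": [64, 128, 256],  # training hyperparameter
}


def sample(space, rng):
    """Draw one configuration: categorical for lists, log-uniform for tuples."""
    config = {}
    for name, choices in space.items():
        if isinstance(choices, tuple):
            low, high = choices
            config[name] = math.exp(rng.uniform(math.log(low), math.log(high)))
        else:
            config[name] = rng.choice(choices)
    return config


def random_search(space, evaluate, num_trials, seed=0):
    """Plain random search: return the (score, config) pair with the highest score."""
    rng = random.Random(seed)
    best = None
    for _ in range(num_trials):
        config = sample(space, rng)
        score = evaluate(config)
        if best is None or score > best[0]:
            best = (score, config)
    return best


def toy_score(config):
    """Hypothetical stand-in for training and validation; higher is better."""
    return -abs(config["backbone.depth"] - 34) - abs(math.log10(config["trainer.lr"]) + 2)


best_score, best_config = random_search(SEARCH_SPACE, toy_score, num_trials=50)
print(best_score, best_config)
```

Keeping both kinds of parameters in one space means a single sampler and a single evaluation loop cover them; a smarter search algorithm (Bayesian, evolutionary) would replace only the sample step.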

| Tool | Supported Frameworks | HPO Algorithms | NAS Algorithms | Device-Side Evaluation | Model Filter | Universal Network |
|---|---|---|---|---|---|---|
| AutoGluon | MXNet, PyTorch | Random Search, Bayesian, Hyper-Band | Random Search, RL | × | × | × |
| AutoKeras | Keras | No restrictions | Network Morphism | × | × | × |
| Model Search | TensorFlow | No restrictions | Random Search, Beam Search | × | × | × |
| NNI | No restrictions | Random Search, Grid Search, Bayesian, Annealing, Hyper-Band, Evolution, RL | Random Search, Gradient-Based, One-Shot | × | × | × |
| Vega | PyTorch, TensorFlow, MindSpore | Random Search, Grid Search, Bayesian, Hyper-Band, Evolution | Random Search, Gradient-Based, Evolution, One-Shot | Ascend 310, Kirin 980/990 | Quota (filters models by parameters, FLOPs, and latency) | Networks compatible with PyTorch, TensorFlow, and MindSpore |
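The Quota entry in the table refers to filtering candidate models by parameters, FLOPs, and latency. A minimal sketch of that idea follows, assuming each candidate's costs are already known; the Candidate class, its field names, and the limit keywords are hypothetical and are not Vega's API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    """A hypothetical candidate network and its measured costs."""
    name: str
    params_m: float    # number of parameters, in millions
    flops_g: float     # FLOPs, in billions
    latency_ms: float  # measured or estimated latency, in milliseconds


def within_quota(c: Candidate,
                 max_params_m: Optional[float] = None,
                 max_flops_g: Optional[float] = None,
                 max_latency_ms: Optional[float] = None) -> bool:
    """True if the candidate satisfies every limit that is set (None means no limit)."""
    checks = [(max_params_m, c.params_m),
              (max_flops_g, c.flops_g),
              (max_latency_ms, c.latency_ms)]
    return all(limit is None or value <= limit for limit, value in checks)


def filter_candidates(candidates: List[Candidate], **limits) -> List[Candidate]:
    """Keep only candidates inside the quota, so over-budget ones are never trained."""
    return [c for c in candidates if within_quota(c, **limits)]


candidates = [
    Candidate("a", params_m=5.0, flops_g=1.2, latency_ms=15.0),
    Candidate("b", params_m=25.0, flops_g=4.1, latency_ms=12.0),
    Candidate("c", params_m=8.0, flops_g=2.0, latency_ms=30.0),
]
kept = filter_candidates(candidates, max_params_m=10.0, max_latency_ms=20.0)
print([c.name for c in kept])  # ['a']
```

Filtering before training is cheap compared with training, which is why a quota check is usually applied as early as possible in a search.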

| Category | Algorithm | Description | Reference |
|---|---|---|---|
| NAS | CARS: Continuous Evolution for Efficient Neural Architecture Search | Multi-objective, efficient neural architecture search based on continuous evolution | ref |
| NAS | ModularNAS: Towards Modularized and Reusable Neural Architecture Search | A code library for various neural architecture search methods, including weight sharing and network morphism | ref |
| NAS | MF-ASC | Multi-fidelity neural architecture search with co-kriging | ref |
| NAS | NAGO: Neural Architecture Generator Optimization | A hierarchical, graph-based neural architecture search space | ref |
| NAS | SR-EA | An automatic network architecture search method for super-resolution | ref |
| NAS | ESR-EA: Efficient Residual Dense Block Search for Image Super-resolution | Multi-objective image super-resolution based on network architecture search | ref |
| NAS | Adelaide-EA: SEGMENTATION-Adelaide-EA-NAS | Network architecture search algorithm for image segmentation | ref |
| NAS | SP-NAS: Serial-to-Parallel Backbone Search for Object Detection | Efficient backbone architecture search for object detection and semantic segmentation | ref |
| NAS | SM-NAS: Structural-to-Modular NAS | Two-stage object detection architecture search algorithm | Coming soon |
| NAS | Auto-Lane: CurveLane-NAS | An end-to-end framework search algorithm for lane detection | ref |
| NAS | AutoFIS | An automatic feature selection algorithm for recommender system scenarios | ref |
| NAS | AutoGroup | Automatically learns feature interactions for recommender system scenarios | ref |
| Model Compression | Quant-EA: Quantization based on Evolutionary Algorithm | Automatic mixed-bit quantization algorithm that uses an evolutionary strategy to quantize each layer of a CNN | ref |
| Model Compression | Prune-EA | Automatic channel pruning algorithm using evolutionary strategies | ref |
| HPO | ASHA: Asynchronous Successive Halving Algorithm | Successive halving with asynchronous promotion of trials | ref |
| HPO | BOHB: Hyperband with Bayesian Optimization | Hyperband with Bayesian optimization | ref |
| HPO | BOSS: Bayesian Optimization via Sub-Sampling | A general-purpose hyperparameter optimization algorithm based on the Bayesian optimization framework, for hyperparameter search under resource constraints | ref |
| Data Augmentation | PBA: Population Based Augmentation: Efficient Learning of Augmentation Policy Schedules | Data augmentation based on PBT (Population Based Training) optimization | ref |
| Data Augmentation | CycleSR: Unsupervised Image Super-Resolution with an Indirect Supervised Path | Unsupervised style transfer algorithm for low-level vision problems | ref |
| Fully Train | Beyond Dropout: Feature Map Distortion to Regularize Deep Neural Networks | Neural network training (regularization) based on perturbation of feature maps | ref |
| Fully Train | Circumventing Outliers of AutoAugment with Knowledge Distillation | Joint knowledge distillation and data augmentation for high-performance classification model training; achieved 85.8% Top-1 accuracy on ImageNet-1k | Coming soon |
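ASHA, listed in the HPO rows, is an asynchronous variant of successive halving: it promotes a trial to the next rung as soon as it qualifies instead of waiting for the whole rung to finish, and Hyperband (and therefore BOHB) runs several brackets of successive halving with different starting budgets. The sketch below shows only the simpler synchronous successive halving these methods build on; it is not Vega's ASHA implementation, and toy_evaluate is a hypothetical stand-in for training.

```python
def successive_halving(configs, evaluate, min_budget=1, eta=3):
    """Synchronous successive halving (the idea ASHA builds on).

    At each rung, every surviving config is evaluated with the current
    budget, the top 1/eta are kept, and the budget is multiplied by eta.
    evaluate(config, budget) returns a score; higher is better.
    Returns the winning config and a list of (budget, num_configs) per rung.
    """
    survivors = list(configs)
    if not survivors:
        raise ValueError("need at least one config")
    budget = min_budget
    rungs = []
    while len(survivors) > 1:
        rungs.append((budget, len(survivors)))
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget), reverse=True)
        survivors = ranked[: max(1, len(ranked) // eta)]
        budget *= eta
    return survivors[0], rungs


def toy_evaluate(lr, budget):
    """Hypothetical stand-in for 'train for `budget` epochs, return a validation score'.

    This toy ignores the budget; a real trainer would improve with more budget.
    """
    return -abs(lr - 0.3)


configs = [i / 27 for i in range(27)]  # 27 candidate learning rates
best, rungs = successive_halving(configs, toy_evaluate)
print(best, rungs)  # 27 configs at budget 1, then 9 at budget 3, then 3 at budget 9
```

Most candidates are discarded after a small budget, so the total cost stays close to a few full training runs even though many configurations are tried.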

Run the following commands to install Vega and related open-source software:

```
pip3 install --user --upgrade noah-vega
```

If you need to install the Ascend 910 training environment, please contact us.

Use the vega command to run Vega. For example, the following command runs the CARS algorithm:

```
vega ./examples/nas/cars/cars.yml
```

The cars.yml file contains definitions such as the pipeline, search algorithm, search space, and training parameters.
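When several configuration files need to be run from a script, the vega invocation can be wrapped in Python's standard subprocess module. This is a minimal sketch assuming only the documented form vega <config.yml> and assuming the command exits with a non-zero status on failure; the helper names build_vega_command and run_vega are hypothetical.

```python
import shutil
import subprocess
from typing import List


def build_vega_command(config_path: str) -> List[str]:
    """Argument list for `vega <config.yml>`, the documented invocation."""
    return ["vega", config_path]


def run_vega(config_path: str) -> None:
    """Run one Vega job; raise if the CLI is missing or the run fails."""
    if shutil.which("vega") is None:
        raise FileNotFoundError("'vega' is not on PATH; install noah-vega first")
    # check=True raises subprocess.CalledProcessError on a non-zero exit code
    # (assumption: vega reports failures through its exit status).
    subprocess.run(build_vega_command(config_path), check=True)
```

For example, calling run_vega("./examples/nas/cars/cars.yml") in a loop over several paths runs the jobs one after another and stops at the first failure.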

Vega provides more than 40 examples for reference: Examples, Example Guide, and Configuration Guide.

For common problems and exception handling, please refer to FAQ.

```bibtex
@misc{wang2020vega,
  title={VEGA: Towards an End-to-End Configurable AutoML Pipeline},
  author={Bochao Wang and Hang Xu and Jiajin Zhang and Chen Chen and Xiaozhi Fang and Ning Kang and Lanqing Hong and Wei Zhang and Yong Li and Zhicheng Liu and Zhenguo Li and Wenzhi Liu and Tong Zhang},
  year={2020},
  eprint={2011.01507},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```

You are welcome to use Vega. If you have questions or suggestions, need help, want to fix bugs, contribute new algorithms, or improve the documentation, please submit an issue in the community. We will respond promptly.

You are also welcome to join our QQ group (in Chinese): 833345709.