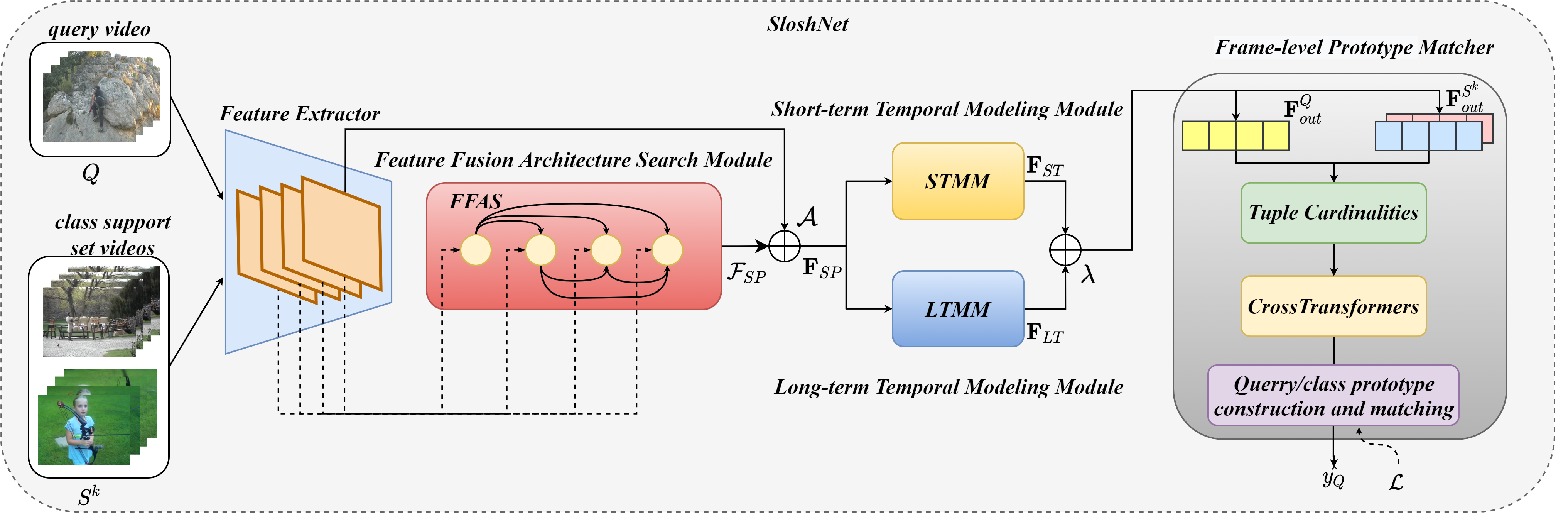

AAAI 2023: Revisiting the Spatial and Temporal Modeling for Few-shot Action Recognition (SloshNet) [arXiv]

The code is built with the following libraries:

- PyTorch >= 1.8

- tensorboardX

- pprint

- tqdm

- dotmap

- yaml

- csv

For video data pre-processing, you may need ffmpeg.

For more details about the libraries, see INSTALL.md.
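Before training, it can help to confirm that the environment matches the list above. The following is a hypothetical helper, not part of this repo: `check_python_modules` and `check_torch_version` are illustrative names, and the sketch assumes a `python3` interpreter (overridable through a `PYTHON` variable). Note that `pprint` and `csv` ship with the Python standard library, and the `yaml` module is installed from the PyPI package PyYAML.

```bash
#!/usr/bin/env bash
# Hypothetical environment check (not part of the SloshNet repo).

# Print "missing: <module>" for each Python module that fails to import.
# Returns 0 if every module imports, 1 otherwise.
check_python_modules() {
  local py=${PYTHON:-python3} mod status=0
  for mod in "$@"; do
    if ! "$py" -c "import ${mod}" >/dev/null 2>&1; then
      echo "missing: ${mod}"
      status=1
    fi
  done
  return "$status"
}

# Exit with 0 if the installed PyTorch is at least 1.8 (the README's minimum).
check_torch_version() {
  "${PYTHON:-python3}" - <<'EOF'
import sys

import torch

# Drop local build tags such as "+cu118", then compare (major, minor).
major, minor = (int(x) for x in torch.__version__.split("+")[0].split(".")[:2])
sys.exit(0 if (major, minor) >= (1, 8) else 1)
EOF
}
```

After sourcing the file, `check_python_modules torch tensorboardX pprint tqdm dotmap yaml csv && check_torch_version` lists any missing module and returns non-zero if the setup is incomplete.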

We first need to extract the videos into frames for fast reading. Please refer to the TSN repo for a detailed guide to data pre-processing. We have successfully trained on Kinetics, SthV2, UCF101, and HMDB51.
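The frame-extraction step can also be sketched directly with ffmpeg. This is a minimal sketch under assumptions: the `img_%05d.jpg` file naming, one output folder per video, and the set of video extensions are illustrative choices, not necessarily what SloshNet's data loader expects, so check them against the dataset configuration and the TSN guide before use. The helper names `extract_frames` and `extract_all` are hypothetical, and the `FFMPEG` variable lets you point at a different ffmpeg binary.

```bash
#!/usr/bin/env bash
# Minimal frame-extraction sketch (hypothetical helpers, not the repo's scripts).
# Assumed layout: <out_root>/<video_name>/img_00001.jpg, img_00002.jpg, ...

# Decode one video into JPEG frames under <out_root>/<video_name>/.
extract_frames() {
  local video=$1 out_root=$2 name out_dir
  name=$(basename "${video%.*}")   # drop the directory and the extension
  out_dir="${out_root}/${name}"
  mkdir -p "$out_dir" || return 1
  # -nostdin stops ffmpeg from consuming the file list read by extract_all;
  # -q:v 2 requests high-quality JPEGs (lower value = higher quality).
  ${FFMPEG:-ffmpeg} -nostdin -loglevel error -i "$video" -q:v 2 \
    "${out_dir}/img_%05d.jpg"
}

# Extract frames for every .mp4/.avi/.webm file found under <src_root>.
extract_all() {
  local src_root=$1 out_root=$2 video
  # -print0 with read -d '' keeps paths containing spaces intact.
  while IFS= read -r -d '' video; do
    extract_frames "$video" "$out_root" || echo "failed: $video" >&2
  done < <(find "$src_root" -type f \( -name '*.mp4' -o -name '*.avi' -o -name '*.webm' \) -print0)
}
```

Because each frame folder is named after the video file, two videos with the same file name in different subdirectories would share a folder; include the class directory in the output path if your dataset has such collisions.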

We provide several examples of training SloshNet with this repo:

- To train on Kinetics, SthV2, HMDB51, or UCF101 from ImageNet-pretrained models, run:

```bash
# train Kinetics
bash ./scripts/train_kin-1s.sh

# train SthV2
bash ./scripts/train_ssv2-1s.sh

# train HMDB
bash ./scripts/train_hmdb-1s.sh

# train UCF
bash ./scripts/train_ucf-1s.sh
```
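For repeated runs, a small wrapper can pick the training script by dataset name and keep a copy of the console output. This is a hypothetical helper built only on the four script paths listed above; the dataset keys (`kinetics`, `ssv2`, `hmdb`, `ucf`), the function names, and the `logs/` location are illustrative choices, and it assumes you call it from the repo root.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the training scripts listed in this README.

# Map a dataset key to its training script path.
train_script_for() {
  case "$1" in
    kinetics) echo "./scripts/train_kin-1s.sh" ;;
    ssv2)     echo "./scripts/train_ssv2-1s.sh" ;;
    hmdb)     echo "./scripts/train_hmdb-1s.sh" ;;
    ucf)      echo "./scripts/train_ucf-1s.sh" ;;
    *)        echo "unknown dataset: $1" >&2; return 1 ;;
  esac
}

# Run the training script for one dataset and copy its output to logs/.
run_training() {
  local dataset=$1 script
  script=$(train_script_for "$dataset") || return 1
  mkdir -p logs || return 1
  bash "$script" 2>&1 | tee "logs/train_${dataset}.log"
  # Report the training script's exit code, not tee's.
  return "${PIPESTATUS[0]}"
}
```

After sourcing the file, `run_training ucf` runs `./scripts/train_ucf-1s.sh` and writes its output to `logs/train_ucf.log`. Returning `${PIPESTATUS[0]}` propagates the training script's exit code rather than `tee`'s, so a failed run is not reported as a success.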

To test the trained models, you can run scripts/run_test.sh. For example:

```bash
bash ./scripts/test.sh
```

If you find SloshNet useful in your research, please cite our paper.

Our code is based on TRX.