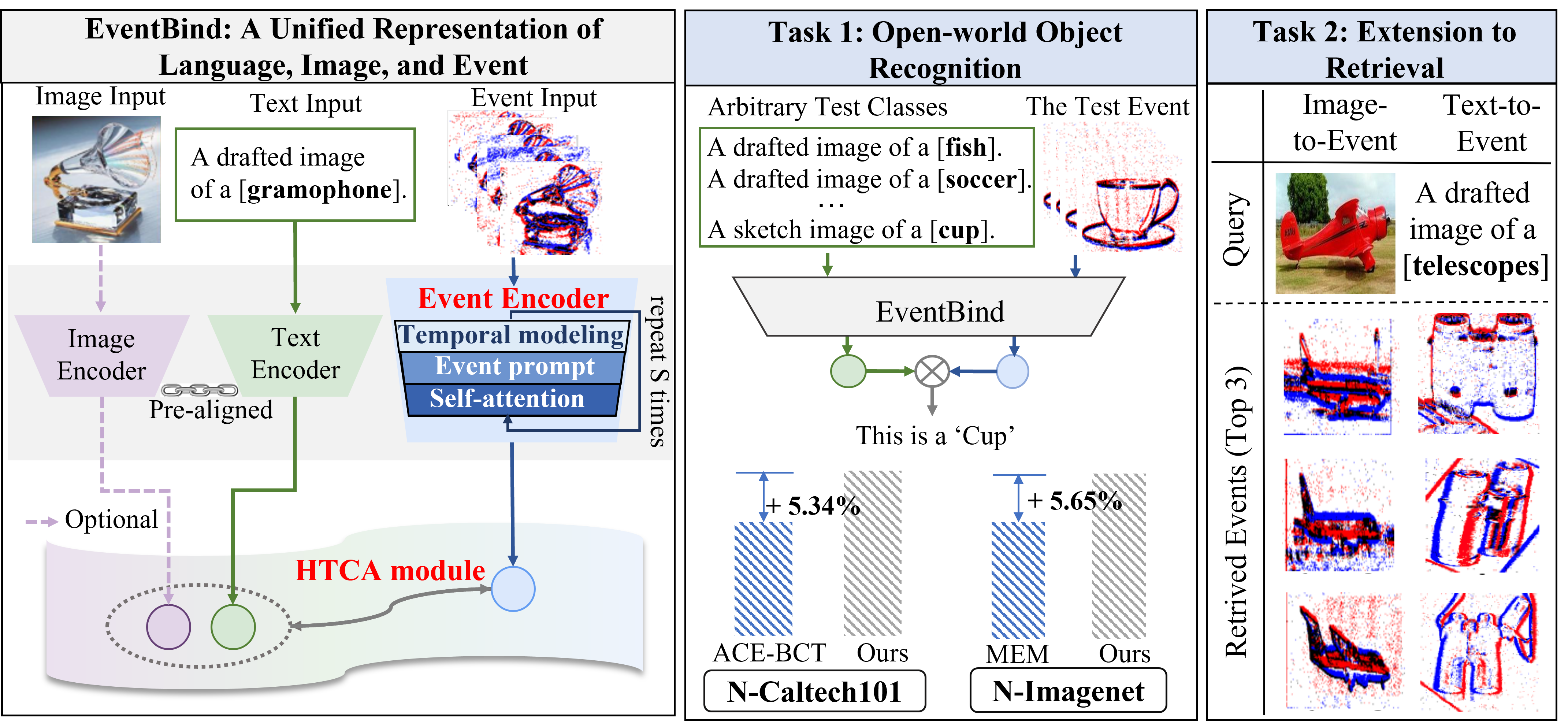

[🌟ECCV2024🌟] EventBind: Learning a Unified Representation to Bind Them All for Event-based Open-world Understanding

This repository contains the official PyTorch implementation of the paper "EventBind: Learning a Unified Representation to Bind Them All for Event-based Open-world Understanding".

If you find this paper useful, please consider starring 🌟 this repo and citing 📑 our paper:

@article{zhou2023clip,
  title={EventBind: Learning a Unified Representation to Bind Them All for Event-based Open-world Understanding},
  author={Zhou, Jiazhou and Zheng, Xu and Lyu, Yuanhuiyi and Wang, Lin},
  journal={arXiv preprint arXiv:2308.03135},
  year={2023}
}

- Refer to install.md for step-by-step guidance on how to install the packages.

- Download the ViT-B-32, ViT-B-16, and ViT-L-14 CLIP pretrained backbones in this repository.

- Download the datasets and their corresponding model checkpoints from the Dataset and Checkpoints sections below, respectively. Note that the train-val splits for the N-Caltech101/Caltech101 and N-MNIST/MNIST datasets are provided in the Dataloader folder to ensure fair future comparisons. For N-Imagenet/Imagenet, we follow the dataset's official train-val split.

- Change the settings in dataset_name.yaml in the Configs folder. The entries that need editing are marked with TODO notes.

- Finally, train and evaluate EventBind using the following command:

python ./EventBind/train_dp_dataset_name.py
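Before launching training, it can help to list every entry in the config files that is still marked with a TODO note. The following is a minimal sketch and not part of the official code: the helper name `find_todo_lines` is hypothetical, and the `Configs` path in the usage part is an assumption about where the folder sits relative to your working directory, so adjust it to your checkout.

```python
from pathlib import Path


def find_todo_lines(config_path):
    """Return (line_number, stripped_text) pairs for lines that contain 'TODO'."""
    hits = []
    text = Path(config_path).read_text(encoding="utf-8")
    for number, line in enumerate(text.splitlines(), start=1):
        if "TODO" in line:
            hits.append((number, line.strip()))
    return hits


if __name__ == "__main__":
    # Assumed location of the config folder; change this to match your setup.
    config_dir = Path("Configs")
    for config in sorted(config_dir.glob("*.yaml")):
        for number, line in find_todo_lines(config):
            print(f"{config}:{number}: {line}")
```

Running the script prints one line per remaining TODO in the form `file:line: text`, which gives a quick checklist of the dataset paths and other settings left to fill in.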

| Datasets | Model checkpoints |
|---|---|
| N-Caltech101 | ViT-B-32, ViT-B-16, ViT-L-14 |
| N-MNIST | ViT-B-32, ViT-B-16, ViT-L-14 |
| N-Imagenet | ViT-B-32, ViT-B-16, ViT-L-14 |

| Event Datasets | Download Link | Corresponding Image Datasets | Download Link |
|---|---|---|---|
| N-Caltech101 | Download | Caltech101 | Download |
| N-Imagenet | Download | Imagenet | Download |
| N-MNIST | Download | MNIST | Download |


We thank the authors of CLIP and CoOp for open-sourcing their wonderful work.

This repository is released under the MIT License.

If you have any questions about this project, please feel free to contact jiazhouzhou@hkust-gz.edu.cn.