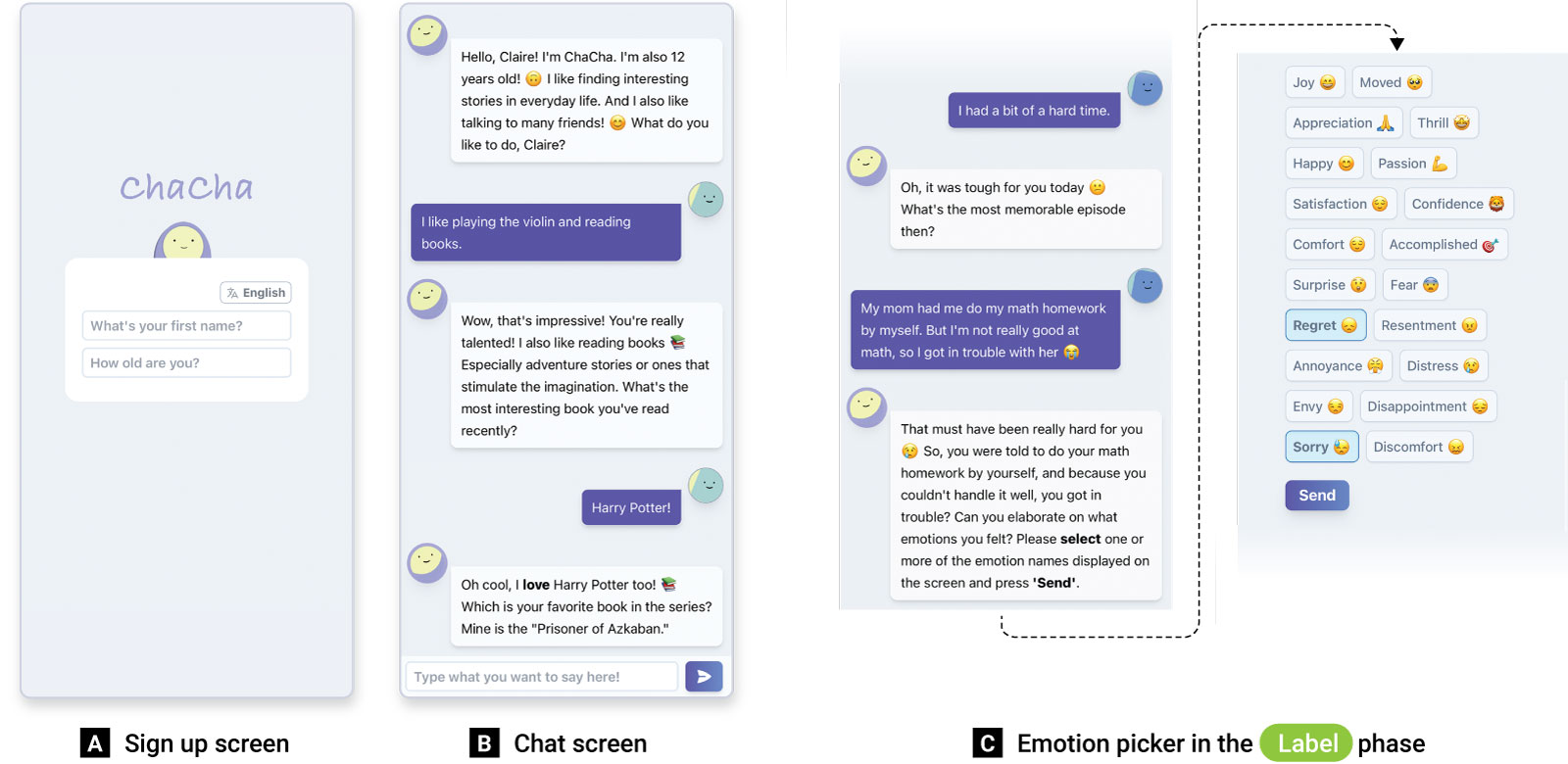

ChaCha (CHAtbot for CHildren's emotion Awareness): LLM-Driven Chatbot for Enhancing Emotional Awareness in Children

This repository contains the source code of the chatbot implementation presented in the ACM CHI 2024 paper titled "ChaCha: Leveraging Large Language Models to Prompt Children to Share Their Emotions about Personal Events."

Woosuk Seo, Chanmo Yang, and Young-Ho Kim. 2024.

ChaCha: Leveraging Large Language Models to Prompt Children to Share Their Emotions about Personal Events.

In Proceedings of the ACM CHI Conference on Human Factors in Computing Systems (CHI '24). To appear.

https://naver-ai.github.io/chacha/

- Python 3.11.2 or higher

- Poetry (Python project dependency manager)

- Node.js and npm (tested on 18.17.0)

- A paid OpenAI API key (ChaCha uses GPT-3.5 and GPT-4 models internally).
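Before installing, you can confirm that your interpreter meets the Python requirement. This is a minimal sketch that is not part of the ChaCha repository; the helper name python_is_supported is hypothetical.

```python
import sys

# Minimum interpreter version from the requirements list (3.11.2 or higher).
MIN_PYTHON = (3, 11, 2)


def python_is_supported(version_info=sys.version_info) -> bool:
    """Return True if (major, minor, micro) is at least MIN_PYTHON."""
    # Tuples compare element by element, so (3, 12, 0) >= (3, 11, 2) holds.
    return tuple(version_info[:3]) >= MIN_PYTHON


if __name__ == "__main__":
    current = ".".join(map(str, sys.version_info[:3]))
    status = "OK" if python_is_supported() else "too old (need 3.11.2+)"
    print(f"Python {current}: {status}")
```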

- In the root directory, install the Python dependencies using Poetry:

> poetry install

- Install the frontend Node dependencies:

> cd frontend

> npm install

> cd ..

- Run the setup script and follow the steps. Have your OpenAI API key ready.

> poetry run python setup.py

- Run chat.py on the command line:

> poetry run python chat.py

- Run the backend server:

> poetry run python main.py

The default port is 8000. You can specify a different port with the --port option:

> poetry run python main.py --port 3000

- Run the frontend server:

The frontend is implemented with React in TypeScript, and the development server runs on Parcel.

> cd frontend

> npm install   <-- Run this to install dependencies

> npm run dev

Access http://localhost:8888 in a web browser.

You can perform the above steps using a shell script:

> sh ./run-web-dev.sh

The backend server can also serve the frontend web app on the same port. To enable this, build the frontend code once:

> cd frontend

> npm run build

Then run the backend server:

> cd ..

> poetry run python main.py --production --port 80

Access http://localhost in a web browser.

A chat session can be reviewed by visiting [domain]/share/{session_id}, where you can also download the chat log as a CSV file.
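If you want to generate review links programmatically (for example, for a list of study participants), a small helper can assemble the [domain]/share/{session_id} pattern. This is a hypothetical sketch: build_share_url is not part of ChaCha, and the example domain assumes the production setup served at http://localhost.

```python
from urllib.parse import quote


def build_share_url(domain: str, session_id: str) -> str:
    """Build a review URL following the [domain]/share/{session_id} pattern.

    `domain` should include the scheme, e.g. "http://localhost".
    """
    # Drop a trailing slash so we never emit ".../" + "/share".
    base = domain.rstrip("/")
    # Percent-encode the ID in case it contains spaces or reserved characters.
    return f"{base}/share/{quote(session_id, safe='')}"


print(build_share_url("http://localhost", "User_001"))
# → http://localhost/share/User_001
```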

To keep the framework lightweight, ChaCha uses file storage instead of a database. Session information and chat messages are stored in real time in ./data/sessions/{session_name}.
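To see which sessions have been recorded, you can list the subdirectories of ./data/sessions. A minimal sketch, assuming it runs from the repository root; list_sessions is a hypothetical helper, not a ChaCha API.

```python
from pathlib import Path

# Root of ChaCha's file-based session storage, relative to the repository root.
SESSIONS_ROOT = Path("data") / "sessions"


def list_sessions(root: Path = SESSIONS_ROOT) -> list[str]:
    """Return the sorted names of all session directories under `root`."""
    if not root.is_dir():
        return []  # no sessions have been recorded yet
    # Each session lives in its own subdirectory; skip stray files.
    return sorted(p.name for p in root.iterdir() if p.is_dir())


if __name__ == "__main__":
    for name in list_sessions():
        print(name)
```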

In the session directory, info.json maintains the metadata and the global configuration of the current session. For example:

    {
      "id": "User_001",
      "turns": 1,
      "response_generator": {
        "state_history": [
          ["explore", null]
        ],
        "verbose": false,
        "payload_memory": {},
        "user_name": "John",
        "user_age": 12,
        "locale": "kr"
      }
    }

In the same directory, dialogue.jsonl stores the chat messages in JSON Lines format, with each message written as a single-line JSON object.
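For offline analysis, these session files can be read with the Python standard library alone. The sketch below is hypothetical (load_session and export_dialogue_csv are not part of ChaCha): it reads info.json, parses dialogue.jsonl line by line, and writes the messages to CSV. Because the per-message fields are not documented here, it uses the union of all message keys as CSV columns rather than assuming a schema, so its layout may differ from the CSV offered on the share page.

```python
import csv
import json
from pathlib import Path


def load_session(session_dir: Path) -> tuple[dict, list[dict]]:
    """Read a session's info.json metadata and its dialogue.jsonl messages."""
    info = json.loads((session_dir / "info.json").read_text(encoding="utf-8"))
    messages: list[dict] = []
    dialogue_path = session_dir / "dialogue.jsonl"
    if dialogue_path.exists():
        with dialogue_path.open(encoding="utf-8") as f:
            for line in f:
                line = line.strip()
                if line:  # JSON Lines: one JSON object per non-empty line
                    messages.append(json.loads(line))
    return info, messages


def export_dialogue_csv(messages: list[dict], out_path: Path) -> None:
    """Write messages to CSV, using the union of all message keys as columns."""
    fieldnames: list[str] = []
    for message in messages:
        for key in message:
            if key not in fieldnames:  # keep first-seen column order
                fieldnames.append(key)
    with out_path.open("w", newline="", encoding="utf-8") as f:
        # Keys missing from a message are written as empty cells.
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        for message in messages:
            # Nested values (lists/dicts) are re-serialized as JSON strings;
            # ensure_ascii=False keeps non-English text (e.g. Korean) readable.
            writer.writerow({
                k: json.dumps(v, ensure_ascii=False) if isinstance(v, (dict, list)) else v
                for k, v in message.items()
            })
```

For example, load_session(Path("data/sessions/User_001")) would return the parsed info.json dictionary and the list of messages, assuming a session directory with that (hypothetical) name exists.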

- Young-Ho Kim (NAVER AI Lab) - Maintainer (yghokim@younghokim.net)

- Woosuk Seo (Intern at NAVER AI Lab, PhD candidate at University of Michigan)

- This work was supported by NAVER AI Lab through a research internship.

- The conversational flow design of ChaCha is grounded in Woosuk Seo’s dissertation research, advised by Sun Young Park and Mark S. Ackerman, who were instrumental in shaping this work. That research was supported by National Science Foundation CAREER Grant #1942547 (PI: Sun Young Park).