This document explains how to use AWS ParallelCluster to build an HPC compute cluster that uses Trn1 compute nodes to run your distributed ML training job. Once the nodes are launched, we will run a training task to confirm that the nodes are working, and we will use Slurm commands to check the job status. In this tutorial, we will use the AWS pcluster command with a YAML configuration file to create the cluster. As an example, we are going to launch multiple trn1.32xlarge nodes in our cluster.
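The two command-line steps mentioned above (creating the cluster from a YAML file and checking job status with Slurm) can be scripted. The following is a minimal sketch that assumes the ParallelCluster 3 CLI (`pcluster create-cluster --cluster-name ... --cluster-configuration ...`) and Slurm's `squeue` with the `%i` (job ID) and `%T` (job state) format fields. The helper names are hypothetical, and the functions only build argument lists and parse text, so they do not run anything themselves.

```python
from typing import Dict, List, Optional


def create_cluster_cmd(cluster_name: str, config_path: str,
                       region: Optional[str] = None) -> List[str]:
    """Build the argv for `pcluster create-cluster` (ParallelCluster 3 CLI)."""
    cmd = ["pcluster", "create-cluster",
           "--cluster-name", cluster_name,
           "--cluster-configuration", config_path]
    if region:
        # Optional: override the region from the config / AWS profile.
        cmd += ["--region", region]
    return cmd


def squeue_cmd() -> List[str]:
    """Build the argv for `squeue`: no header, one 'JOBID STATE' pair per line."""
    return ["squeue", "-h", "-o", "%i %T"]


def parse_squeue(output: str) -> Dict[str, str]:
    """Map job ID -> job state (e.g. RUNNING, PENDING) from squeue_cmd() output."""
    states: Dict[str, str] = {}
    for line in output.splitlines():
        parts = line.split()
        if len(parts) == 2:  # skip blank or unexpected lines
            job_id, state = parts
            states[job_id] = state
    return states
```

On your local machine you could run `subprocess.run(create_cluster_cmd("my-trn1-cluster", "cluster.yaml"), check=True)`, where the cluster name and file name are placeholders; on the head node, pass `squeue_cmd()` to `subprocess.run(..., capture_output=True, text=True)` and feed its `stdout` to `parse_squeue`.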

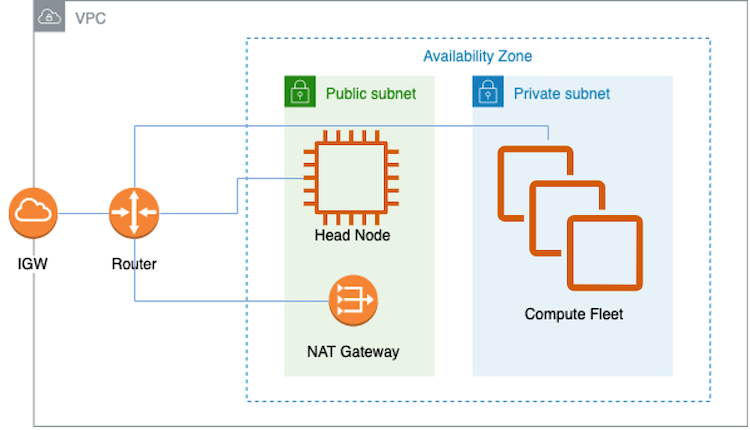

The diagram above shows the ParallelCluster infrastructure we are going to set up.

As shown in the figure above, the VPC contains two subnets: one public and one private. The head node resides in the public subnet, while the compute fleet (in this case, Trn1 instances) resides in the private subnet. A Network Address Translation (NAT) gateway is also needed so that nodes in the private subnet can connect to the internet and other services outside the VPC. The next section describes how to set up all the infrastructure required for a Trn1 ParallelCluster.

ParallelCluster requires a VPC with two subnets and a NAT gateway, as shown in the diagram above. Here are the instructions to create the VPC and to enable auto-assignment of public IPv4 addresses for the public subnet.
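To show how the subnet layout maps onto the cluster configuration, here is a minimal sketch that renders a ParallelCluster 3 YAML file with the head node in the public subnet and a Slurm queue of trn1.32xlarge nodes in the private subnet. The function name, queue and compute resource names, head node instance type (`c5.4xlarge`), OS (`ubuntu2004`), and 16-node maximum are illustrative assumptions. It also omits settings a Neuron setup may need, such as a custom AMI or bootstrap actions, so treat the linked cluster script as the reference configuration.

```python
def render_cluster_config(region: str, key_name: str,
                          public_subnet_id: str, private_subnet_id: str,
                          max_nodes: int = 16) -> str:
    """Render a minimal ParallelCluster 3 config (illustrative values only).

    Head node -> public subnet; Trn1 compute queue -> private subnet
    (which reaches outside the VPC through the NAT gateway).
    """
    return f"""\
Region: {region}
Image:
  Os: ubuntu2004
HeadNode:
  InstanceType: c5.4xlarge
  Networking:
    SubnetId: {public_subnet_id}
  Ssh:
    KeyName: {key_name}
Scheduling:
  Scheduler: slurm
  SlurmQueues:
    - Name: compute1
      ComputeResources:
        - Name: trn1-32xl
          InstanceType: trn1.32xlarge
          MinCount: 0
          MaxCount: {max_nodes}
          Efa:
            Enabled: true
      Networking:
        SubnetIds:
          - {private_subnet_id}
        PlacementGroup:
          Enabled: true
"""
```

You could write the returned string to a file such as cluster.yaml and pass that file to pcluster create-cluster via --cluster-configuration. MinCount: 0 lets Slurm launch compute nodes on demand, and the placement group keeps the EFA-connected nodes close together.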

A key pair is needed to access the head node of the cluster. You may use an existing key pair or create a new one by following the instructions here.
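Once you have the private key file, it typically needs owner-only permissions before SSH will accept it. The sketch below restricts the file to mode 0400 on a POSIX system and builds a pcluster ssh command, which passes extra options such as -i through to ssh. The helper names are hypothetical, and the cluster name and key path you pass in are your own values.

```python
import os
import stat
from typing import List


def secure_key_file(path: str) -> None:
    """chmod 400: owner read-only. OpenSSH rejects keys readable by others."""
    os.chmod(path, stat.S_IRUSR)


def ssh_cmd(cluster_name: str, key_path: str) -> List[str]:
    """Build the argv for `pcluster ssh`; `-i` is forwarded to ssh."""
    return ["pcluster", "ssh", "--cluster-name", cluster_name, "-i", key_path]
```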

The AWS ParallelCluster Python package must be installed in the local environment (e.g., your Mac or PC desktop with a CLI terminal, or AWS Cloud9) from which you issue the command that creates your HPC environment in AWS. See here for instructions about installing the AWS ParallelCluster Python package in your local environment.
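After installing the package (for example with pip3 install aws-parallelcluster), a quick way to confirm that the CLI is usable is to look for the pcluster executable on your PATH and run pcluster version. This is a minimal sketch. The function name is hypothetical, and it returns the raw command output without assuming its exact format.

```python
import shutil
import subprocess
from typing import Optional


def pcluster_version() -> Optional[str]:
    """Return the output of `pcluster version`, or None if pcluster is not on PATH."""
    exe = shutil.which("pcluster")
    if exe is None:
        return None  # package not installed, or its bin dir is not on PATH
    result = subprocess.run([exe, "version"], capture_output=True,
                            text=True, check=True)
    return result.stdout.strip()
```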

See the table below for the script to create a Trn1 ParallelCluster:

| Cluster | Link |
|---|---|
| 16 x Trn1 nodes | trn1-16-nodes-pcluster.md |

See the table below for scripts to launch a model training job on the ParallelCluster:

| Job | Link |
|---|---|
| BERT Large | dp-bert-launch-job.md |
| GPT3 (neuronx-nemo-megatron) | neuronx-nemo-megatron-gpt-job.md |
| Llama 2 7B (neuronx-nemo-megatron) | neuronx-nemo-megatron-llamav2-job.md |

See the table below for scripts that are no longer supported:

| Job | Link |
|---|---|
| GPT3 (Megatron-LM) | gpt3-launch-job.md |

See CONTRIBUTING for more information.

This library is licensed under the Amazon Software License.

Please refer to the Change Log.