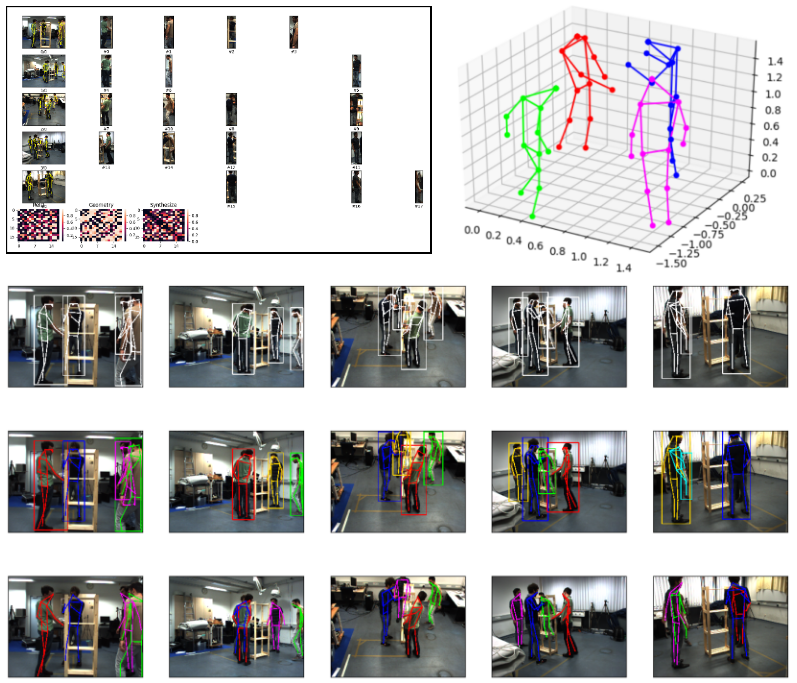

Fast and Robust Multi-Person 3D Pose Estimation from Multiple Views

Junting Dong, Wen Jiang, Qixing Huang, Hujun Bao, Xiaowei Zhou

CVPR 2019 Project Page

Questions and discussion are welcome!

- Set up python environment

pip install -r requirements.txt

- Compile backend/tf_cpn/lib and backend/light_head_rcnn/lib

cd mvpose/backend/tf_cpn/lib/

make

cd ./lib_kernel/lib_nms

bash compile.sh

cd mvpose/backend/light_head_rcnn/lib/

bash make.sh

Both components use py-faster-rcnn as their backbone, and many people run into problems when compiling it. Searching online for the specific error message is often helpful.

- Compile the pictorial function for acceleration

cd mvpose/src/m_lib/

python setup.py build_ext --inplace

- Prepare models: Put the light-head-rcnn models in backend/light_head_rcnn/output/model_dump, the CPN models in backend/tf_cpn/log/model_dump, and the CamStyle model trained by the authors in backend/CamStyle/logs

- Prepare the datasets: Download the Campus and Shelf datasets, then put them (e.g. Shelf and CampusSeq1) in ./datasets/

- Generate the camera parameters: Since each dataset obtains its camera parameters in a different way, we show an example for the Campus dataset:

- Add the following code to ./datasets/CampusSeq1/Calibration/producePmat.m

K = cell(1,3); K{1} = K1; K{2} = K2; K{3} = K3;

m_RT = cell(1,3); m_RT{1} = RT1; m_RT{2} = RT2; m_RT{3} = RT3;

save('intrinsic.mat','K');

save('m_RT.mat', 'm_RT');

save('P.mat', 'P');

save('prjectionMat','P');

- Generate camera_parameter.pickle

python ./src/tools/mat2pickle.py /parameter/dir ./datasets/CampusSeq1

We also provide the camera_parameter.pickle files for Campus and Shelf. You can generate the .pickle file for your own datasets in the same way.
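To make the roles of K, m_RT, and P more concrete, here is a minimal sketch of the standard pinhole relation P = K [R|t]. It assumes each K is a 3x3 intrinsic matrix and each RT is a 3x4 extrinsic matrix; whether producePmat.m builds P in exactly this way is an assumption, and the numeric values and helper names (matmul, project) are made up for illustration.

```python
# Sketch: compose a 3x4 projection matrix P = K @ [R|t] in pure Python.
# K and RT mirror the names saved by producePmat.m; the values are invented.

def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p), both given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def project(P, point3d):
    """Project a 3D world point to pixel coordinates with a 3x4 matrix P."""
    x, y, z = point3d
    u, v, w = (row[0] * x + row[1] * y + row[2] * z + row[3] for row in P)
    return (u / w, v / w)


# Hypothetical intrinsics: focal length 1000 px, principal point (320, 240).
K = [[1000.0, 0.0, 320.0],
     [0.0, 1000.0, 240.0],
     [0.0, 0.0, 1.0]]

# Hypothetical extrinsics: identity rotation, translation of 5 units along z.
RT = [[1.0, 0.0, 0.0, 0.0],
      [0.0, 1.0, 0.0, 0.0],
      [0.0, 0.0, 1.0, 5.0]]

P = matmul(K, RT)
pixel = project(P, (0.0, 0.0, 0.0))  # the world origin lands on the principal point
print(pixel)
```

A quick check like this (projecting a known 3D point and comparing against the image) can help confirm that the matrices for a new dataset were exported in the expected convention.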

- Run the demo

python ./src/m_utils/demo.py -d Campus

python ./src/m_utils/demo.py -d Shelf

If everything is configured correctly, you should see a visualization of the results.

python ./src/m_utils/evaluate.py -d Campus

python ./src/m_utils/evaluate.py -d Shelf

Once the progress bar finishes, a formatted table of the evaluation results is printed, and a CSV file with the same results is saved in the ./result directory.
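If you want to post-process the saved results, a small script along the following lines can load the most recent CSV file. This is only a sketch: the file names and column layout written by evaluate.py are not described here, so the code treats the file as generic CSV, and latest_csv_rows is a hypothetical helper name.

```python
import csv
import glob
import os


def latest_csv_rows(result_dir="./result"):
    """Return (path, rows) for the most recently modified CSV in result_dir.

    Returns (None, []) when the directory contains no CSV files.
    The column layout is not assumed; rows are returned as lists of strings.
    """
    paths = glob.glob(os.path.join(result_dir, "*.csv"))
    if not paths:
        return None, []
    newest = max(paths, key=os.path.getmtime)
    with open(newest, newline="") as f:
        rows = list(csv.reader(f))
    return newest, rows


if __name__ == "__main__":
    path, rows = latest_csv_rows()
    if path is None:
        print("No CSV files found in ./result")
    else:
        print("Loaded", len(rows), "rows from", path)
        for row in rows:
            print(row)
```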

Since the 2D pose estimator (CPN) is a little slow, we can save the predicted 2D poses and heatmaps and then start with these saved files.

- Produce the files

python src/tools/preprocess.py -d Campus -dump_dir ./datasets/Campus_processed

python src/tools/preprocess.py -d Shelf -dump_dir ./datasets/Shelf_processed

- Evaluate with saved 2D poses and heatmaps

python ./src/m_utils/evaluate.py -d Campus -dumped ./datasets/Campus_processed

python ./src/m_utils/evaluate.py -d Shelf -dumped ./datasets/Shelf_processed
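The idea behind these two steps is a compute-once, reuse-later cache. The sketch below illustrates that pattern in general terms; it is not how preprocess.py stores its output. The pickle format, the cache path, and the load_or_compute helper are assumptions made for illustration.

```python
import os
import pickle


def load_or_compute(cache_path, compute):
    """Return the result cached at cache_path, computing and saving it if missing.

    compute is a zero-argument callable standing in for a slow step,
    such as running a 2D pose estimator on one frame.
    """
    if os.path.exists(cache_path):
        with open(cache_path, "rb") as f:
            return pickle.load(f)
    result = compute()
    # Create the parent directory if needed before writing the cache file.
    os.makedirs(os.path.dirname(cache_path) or ".", exist_ok=True)
    with open(cache_path, "wb") as f:
        pickle.dump(result, f)
    return result
```

The first call pays the full cost of compute and later calls with the same cache_path only read from disk, which is the trade-off the dumped directories make: faster repeated evaluation in exchange for disk space.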

Note: for the sake of convenience, we do not optimize the size of the dumped files.

As a result, Campus_processed is around 4.0G and Shelf_processed is around 234G. Please make sure your disk has 200+G of free space. Any pull request that addresses this issue is welcome.
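Before starting a long preprocessing run, it can be worth checking the available disk space. The following sketch uses shutil.disk_usage from the Python standard library; the has_free_space helper and the 240 GB threshold are illustrative choices based on the sizes quoted above, not part of this repository.

```python
import shutil


def has_free_space(path, required_gb):
    """Return True if the filesystem containing path has at least required_gb GiB free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1024 ** 3


if __name__ == "__main__":
    # "." is used here; point this at the drive that will hold the dump directory.
    if has_free_space(".", 240):
        print("Enough free space for the Shelf dump.")
    else:
        print("Not enough free space; free up disk or choose another dump_dir.")
```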

If you find this code useful for your research, please use the following BibTeX entry.

@article{dong2019fast,

title={Fast and Robust Multi-Person 3D Pose Estimation from Multiple Views},

author={Dong, Junting and Jiang, Wen and Huang, Qixing and Bao, Hujun and Zhou, Xiaowei},

journal={CVPR},

year={2019}

}

This code builds on Light head rcnn, Cascaded Pyramid Network, and CamStyle. We gratefully acknowledge the impact they had on our work. If you use our code, please consider citing the original papers as well.