NOTE: The simplified version of this example is on the feat-simple-tutorial branch, which contains the full code for the tutorial at docs.jina.ai.

Imagine that you remember one specific scene from a movie, for example the scene from The Lord of the Rings where Gandalf is fighting the dragon Balrog. Unfortunately, you have forgotten the names Gandalf and Balrog, as well as which of the three movies the scene occurred in. How could you find the correct scene now? This is where this example can help. This Video Search System allows you to search movies based on text. This means you could search for 'Old wizard fighting dragon' and the search system would return the correct movie and the timestamp of the scene.


| About this example: | |
|---|---|
| Learnings | How to search through both image frames and audio of a video. |
| Used for indexing | Video files. |
| Used for querying | Text query (e.g. "girl studying engineering") |
| Dataset used | Choose your own videos |
| Model used | AudioCLIP |

In this example, we create a video search system that retrieves the videos based on short text descriptions of the scenes. The main challenge is to enable the user to search videos without using any labels or text information about the videos.

We use the AudioCLIP model to encode both the video frames and the audio.

Jina searches both the image frames and the audio of the video and returns the matched video and a timestamp.

These instructions explain how to build the example yourself and deploy it with Python.

- You have a working Python 3.7 or 3.8 environment and an installation of Docker. Ensure that you allocate enough memory (more than 6 GB) to Docker. You can set it under settings/resources/advanced in Docker.

- You have at least 5 GB of free space on your hard drive.

- You have installed `ffmpeg` and it is available from the command line (it's in your `PATH` environment variable). On Ubuntu, this should cover it: `sudo apt-get install -y ffmpeg`

- We recommend creating a new Python virtual environment to have a clean installation of Jina and prevent dependency conflicts.

python -m venv venv

source venv/bin/activate

Begin by cloning the repo, so you can get the required files and datasets.

git clone https://github.com/jina-ai/example-video-search

cd example-video-search

In your terminal, you should now be located in the example-video-search folder. Let's install Jina and the other required Python libraries. For further information on installing Jina, check out our documentation.

pip install -r requirements.txt

We recommend downloading the AudioCLIP model in advance. To do that, run:

bash scripts/download_model.sh

To quickly get started, you can index a small dataset to make sure everything is working correctly.
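Before indexing, it can help to confirm that the prerequisites listed earlier are in place. The script below is a minimal sketch, not part of this repository: `check_prerequisites` is a hypothetical helper that checks the Python version, looks for `ffmpeg` and `docker` on `PATH`, and compares free disk space against the 5 GB mentioned above. It does not check how much memory is allocated to Docker.

```python
import shutil
import sys


def check_prerequisites(path=".", min_free_gb=5):
    """Return a list of problems found; an empty list means every check passed.

    Hypothetical helper for illustration, not part of the example repository.
    """
    problems = []

    # The prerequisites ask for Python 3.7 or 3.8.
    if sys.version_info[:2] not in ((3, 7), (3, 8)):
        problems.append(
            "Python %d.%d found, but 3.7 or 3.8 is expected" % sys.version_info[:2]
        )

    # ffmpeg and docker must be reachable through the PATH environment variable.
    for tool in ("ffmpeg", "docker"):
        if shutil.which(tool) is None:
            problems.append("%s was not found on PATH" % tool)

    # Compare the free space on the drive holding `path` with the required amount.
    free_gb = shutil.disk_usage(path).free / 1024 ** 3
    if free_gb < min_free_gb:
        problems.append("only %.1f GB free, %d GB needed" % (free_gb, min_free_gb))

    return problems


for problem in check_prerequisites():
    print("WARNING:", problem)
```

An empty output means none of the checks failed; each warning line names the prerequisite that still needs attention.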

To index the toy dataset, run

python app.py -m grpc

After indexing, the search flow is started automatically and three simple test queries are performed. The results are displayed in your terminal.

We recommend you come back to this step later and index more data.

After indexing once, you can query without indexing by running

python app.py -m restful_query

Afterwards, you can query with

curl -X 'POST' 'localhost:45678/search' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{"data": [{"text": "this is a highway"}]}'

The retrieved results contain the video filename (id) and the best-matched frame in that video together with its timestamp.

You can also add more parameters to the query:

curl -X POST -d '{"parameters":{"top_k": 5}, "data": ["a black dog and a spotted dog are fighting"]}' -H 'accept: application/json' -H 'Content-Type: application/json' 'http://localhost:45678/search'

Once you run this command, you should see JSON output returned to you. It contains the video URI and the timestamp, which together identify the part of the video that matches the query text.
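The same query can be sent from Python. The sketch below uses only the standard library and mirrors the curl commands above: the endpoint, headers, and payload shape are taken from them, while the function names `build_search_request` and `search` are hypothetical. The structure of the response is not assumed here, so the result is returned as plain decoded JSON.

```python
import json
import urllib.request

# Endpoint taken from the curl examples; adjust it if the server runs elsewhere.
SEARCH_URL = "http://localhost:45678/search"


def build_search_request(text, top_k=None, url=SEARCH_URL):
    """Build the POST request that the curl commands send (hypothetical helper)."""
    payload = {"data": [{"text": text}]}
    if top_k is not None:
        # Optional query parameters, as in the second curl example.
        payload["parameters"] = {"top_k": top_k}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"accept": "application/json", "Content-Type": "application/json"},
        method="POST",
    )


def search(text, top_k=None):
    """Send the query to the running server and return the decoded JSON response."""
    with urllib.request.urlopen(build_search_request(text, top_k)) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the server started through `python app.py -m restful_query`, calling `search("this is a highway", top_k=5)` should return the same JSON that the curl command prints.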

By default, the toy_data contains two videos clipped from YouTube.

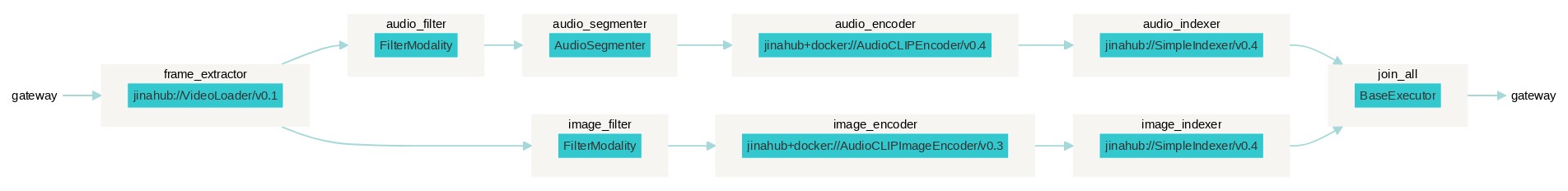

This diagram provides a visual representation of the Flows in this example, showing which executors are used in which order. Remember, our goal is to compare vectors representing the semantics of images and audio with vectors encoding the semantics of short text descriptions.

As you can see, the Flow that indexes the data contains two parallel branches:

- Image: Encodes image frames from the video and indexes them.

- Audio: Encodes the audio of the video and indexes it.
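Both branches start from the same video file, so each needs its own preprocessing step before encoding. The sketch below shows one way this could be done with `ffmpeg`, which the prerequisites require. It is an assumption for illustration and may differ from what the executors in this example actually do: the function names, the one-frame-per-second rate, and the 44100 Hz mono audio are all hypothetical choices.

```python
import subprocess


def frame_extraction_cmd(video_path, out_pattern, fps=1):
    """ffmpeg command that samples `fps` frames per second and saves them as images."""
    return ["ffmpeg", "-i", video_path, "-vf", "fps=%s" % fps, out_pattern]


def audio_extraction_cmd(video_path, out_path, sample_rate=44100):
    """ffmpeg command that drops the video stream (-vn) and writes mono audio."""
    return ["ffmpeg", "-i", video_path, "-vn", "-ac", "1", "-ar", str(sample_rate), out_path]


def frame_timestamp(frame_index, fps=1):
    """Approximate timestamp in seconds of the n-th sampled frame (0-based)."""
    return frame_index / fps


def run(cmd):
    """Execute a command and raise an error if ffmpeg exits with a failure code."""
    subprocess.run(cmd, check=True)
```

For example, `run(frame_extraction_cmd("video.mp4", "frames/%04d.jpg"))` would write one image per second into an existing `frames` folder, and `frame_timestamp` maps a frame number back to a position in the video, which is how a matched frame can be reported as a timestamp.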

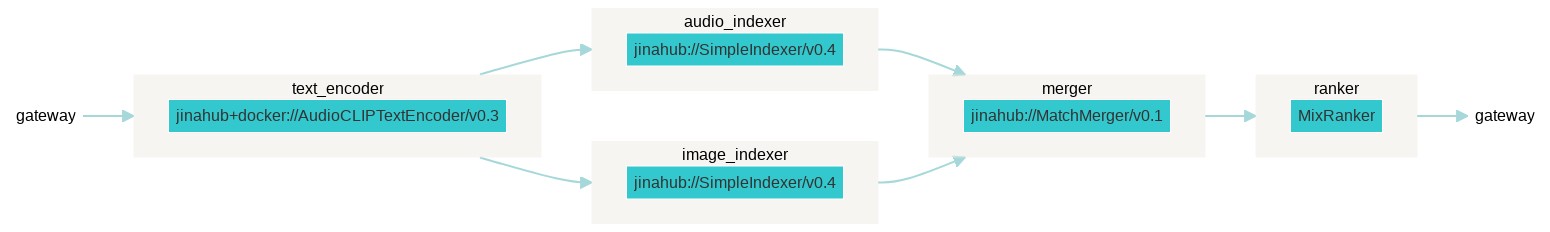

The query flow differs from the index flow. We encode the text input using the AudioCLIP model and then compare the resulting embedding with the audio and image embeddings stored in the indexers.

Then, the indexers add the closest matches to the documents.
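The comparison step can be illustrated with a small nearest-neighbour search. This is a minimal sketch with made-up three-dimensional vectors rather than real AudioCLIP embeddings, and it assumes cosine similarity as the distance measure. The indexers in this example may use a different metric, and the names `cosine_similarity` and `top_k_matches` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k_matches(query_embedding, index, k=2):
    """Return the k entries of `index` most similar to the query, best first.

    `index` is a list of (doc_id, embedding) pairs.
    """
    scored = [(doc_id, cosine_similarity(query_embedding, emb)) for doc_id, emb in index]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy index: each entry stands for one frame of a video (values are made up).
frame_index = [
    ("video1.mp4@3s", [1.0, 0.0, 0.0]),
    ("video1.mp4@7s", [0.0, 1.0, 0.0]),
    ("video2.mp4@1s", [0.7, 0.7, 0.0]),
]
query = [1.0, 0.1, 0.0]  # stands in for the embedding of the text query

matches = top_k_matches(query, frame_index, k=2)
print([doc_id for doc_id, _ in matches])  # ['video1.mp4@3s', 'video2.mp4@1s']
```

Because the text, image, and audio embeddings live in the same vector space, the same comparison works against both the image index and the audio index.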

| File or folder | Description |
|---|---|
| 📃 index-flow.yml | YAML file to configure the indexing Flow |
| 📃 search-flow.yml | YAML file to configure the querying Flow |
| 📃 executors.py | File that contains the Ranker and ModalityFilter executors |
| 📂 workspace/ | Folder to store indexed files (embeddings and documents). Automatically created after the first indexing |
| 📂 toy-data/ | Folder to store the toy dataset for the example |
| 📃 app.py | Main file that runs the example |
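Since the search covers both image frames and audio, the matches from the two modalities have to be combined into one result list at some point. The sketch below illustrates one simple way to do that, keeping the best-scoring match per video. It is a hypothetical illustration and not the Ranker from `executors.py`: the function name `merge_matches`, the `(video_uri, timestamp, score)` tuple layout, and the higher-is-better scoring are all assumptions.

```python
def merge_matches(image_matches, audio_matches, top_k=5):
    """Combine matches from both modalities, keeping the best match per video.

    Each match is a (video_uri, timestamp_in_seconds, score) tuple, where a
    higher score is assumed to mean a better match.
    """
    best = {}
    for uri, timestamp, score in list(image_matches) + list(audio_matches):
        # Keep only the highest-scoring timestamp seen so far for this video.
        if uri not in best or score > best[uri][1]:
            best[uri] = (timestamp, score)

    # Order videos by their best score, highest first, and cut to top_k.
    ranked = sorted(best.items(), key=lambda item: item[1][1], reverse=True)
    return [(uri, ts, score) for uri, (ts, score) in ranked[:top_k]]


# Made-up scores for two videos, one list per modality.
image_matches = [("a.mp4", 3.0, 0.8), ("b.mp4", 1.0, 0.6)]
audio_matches = [("a.mp4", 0.0, 0.5), ("b.mp4", 4.0, 0.9)]
print(merge_matches(image_matches, audio_matches))
# [('b.mp4', 4.0, 0.9), ('a.mp4', 3.0, 0.8)]
```

Each video appears once in the output, together with the timestamp of its strongest match, which corresponds to the video and timestamp pair that the search returns.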

Did you like this example and are you interested in building your own? For a detailed tutorial on how to build your Jina app, check out the How to Build Your First Jina App guide in our documentation.

To learn more about Jina concepts, check out the cookbooks.

If you have any issues following this guide, you can always get support from our Slack community.

- Slack channel - a communication platform for developers to discuss Jina.

- LinkedIn - get to know Jina AI as a company and find job opportunities.

- Follow us and interact with us using the hashtag `#JinaSearch`.

- Company - know more about our company, we are fully committed to open-source!

Copyright (c) 2021 Jina AI Limited. All rights reserved.

Jina is licensed under the Apache License, Version 2.0. See LICENSE for the full license text.