This README contains instructions for testing the news classification model locally, running it in a local Docker container, and deploying it to the cloud.

Before writing any code for the project, we recommend creating a Python virtual environment. If you haven't created virtual environments in Python before, you can refer to this documentation.

This involves the following steps (the commands below work on macOS/Unix; for Windows, please refer to the documentation above):

- Install pip:

```
$ python3 -m pip install --user --upgrade pip
```

- Install virtualenv:

```
$ python3 -m pip install --user virtualenv
```

- Create the virtual environment:

```
$ python3 -m venv mlopsproject
```

- Activate the virtual environment:

```
$ source mlopsproject/bin/activate
```

- Install the required Python dependencies:

```
$ python3 -m pip install -r requirements.txt
```

Then go to the `deploy/` directory and run:

```
$ python3 -m pip install -r requirements.txt
```

- Download and install Docker. You can follow the steps in this document.

If you are new to Docker, we suggest spending some time getting familiar with the Docker command line and dashboard. Docker's getting started page is a good resource.
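Since the remaining steps assume the `mlopsproject` environment is active, it can be worth confirming that before moving on. The following is a minimal, standard-library-only sketch of one way to check it: in an environment created with `venv` (or a recent `virtualenv`), `sys.prefix` points at the environment directory while `sys.base_prefix` still points at the base interpreter. The helper names are our own and are not part of the project.

```python
import sys
from pathlib import Path


def in_virtualenv() -> bool:
    """True when the running interpreter belongs to a venv/virtualenv environment."""
    # venv leaves sys.base_prefix pointing at the base Python installation
    # and sets sys.prefix to the environment's own directory.
    return sys.prefix != sys.base_prefix


def active_env_name() -> str:
    """Directory name of the active environment (e.g. "mlopsproject"), or "" if none."""
    return Path(sys.prefix).name if in_virtualenv() else ""


print("virtual environment:", active_env_name() or "(none active)")
```

Running it with the same `python3` you use for the `pip` commands should report `mlopsproject` once the environment is activated.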

- Before making changes to the web application, make sure you have serialized the model artifact for deployment from `Part1.ipynb`. Then run the starter web server:

```
$ cd deploy
$ uvicorn service:app --reload
```

You should see output like:

```
INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)
INFO: Started reloader process [29334] using watchgod
INFO: Started server process [29336]
INFO: Waiting for application startup.
2022-10-26 14:45:28.082 | INFO | model.classifier:load:92 - Loaded trained model pipeline from: news_classifier.joblib
2022-10-26 14:45:31.705 | INFO | service:startup_event:47 - Setup completed
INFO: Application startup complete.
```

When you open http://127.0.0.1:8000 in a web browser, you should see this output:

```
{"Hello": "World"}
```
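The same smoke test can be scripted instead of using a browser. This is an illustrative sketch using only the standard library; `BASE_URL` is the default host and port from the uvicorn log above, and `get_json` and `is_starter_response` are hypothetical helper names.

```python
import json
import urllib.request

# Default host and port from the uvicorn startup log above.
BASE_URL = "http://127.0.0.1:8000"


def get_json(url: str, timeout: float = 5.0):
    """Send a GET request and decode the JSON response body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def is_starter_response(payload) -> bool:
    """True if the payload matches what the starter root endpoint returns."""
    return payload == {"Hello": "World"}


# With the server running:
# print(is_starter_response(get_json(BASE_URL + "/")))
```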

- We are now ready to start writing code! All the required code changes for this project are in `deploy/service.py`. The comments in this file explain the changes needed to turn it into a web application that serves model predictions. Once the code changes are done, restart the web server using the command from the step above.

- Test with an example request:

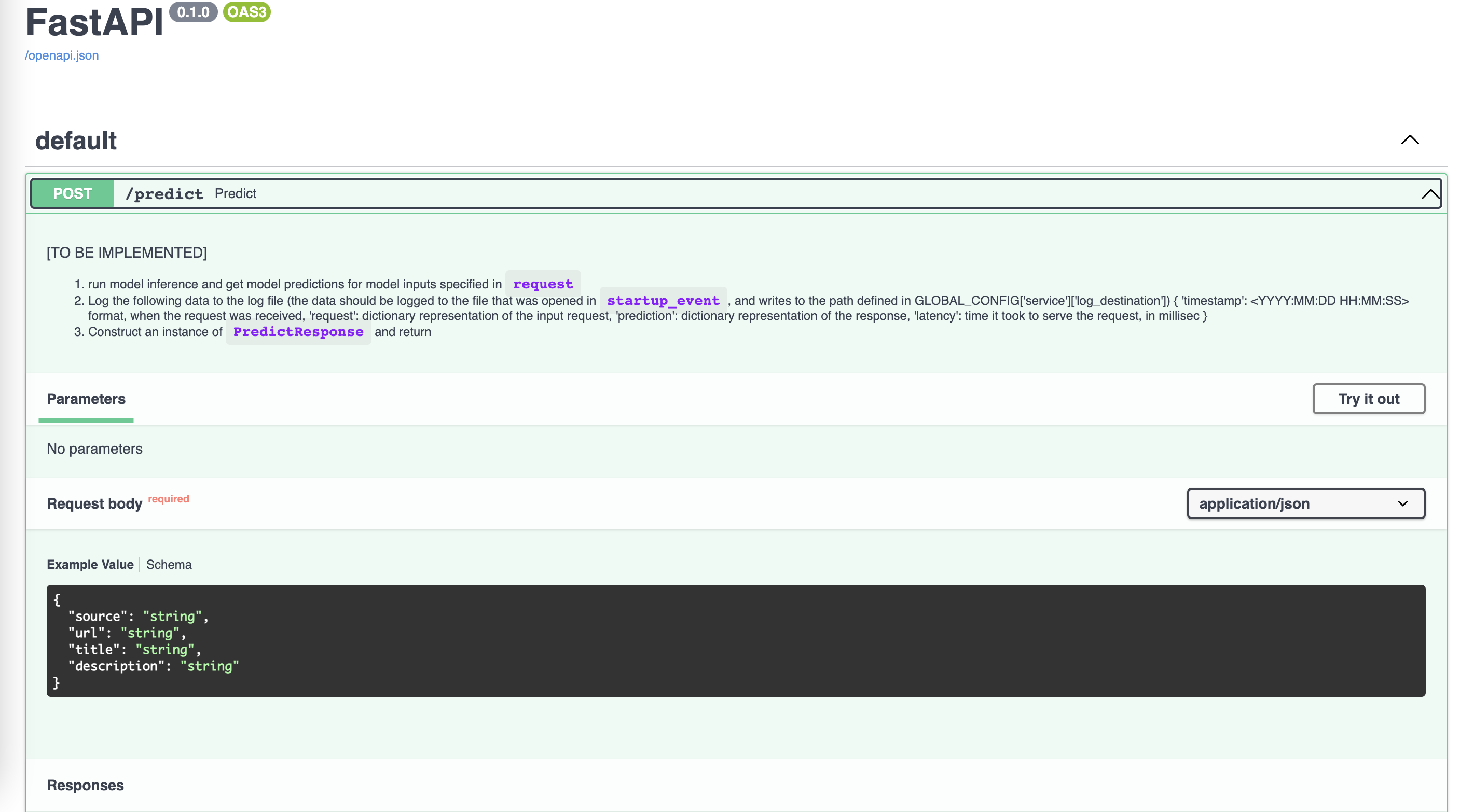

Visit http://127.0.0.1:8000/docs. You will see a /predict endpoint:

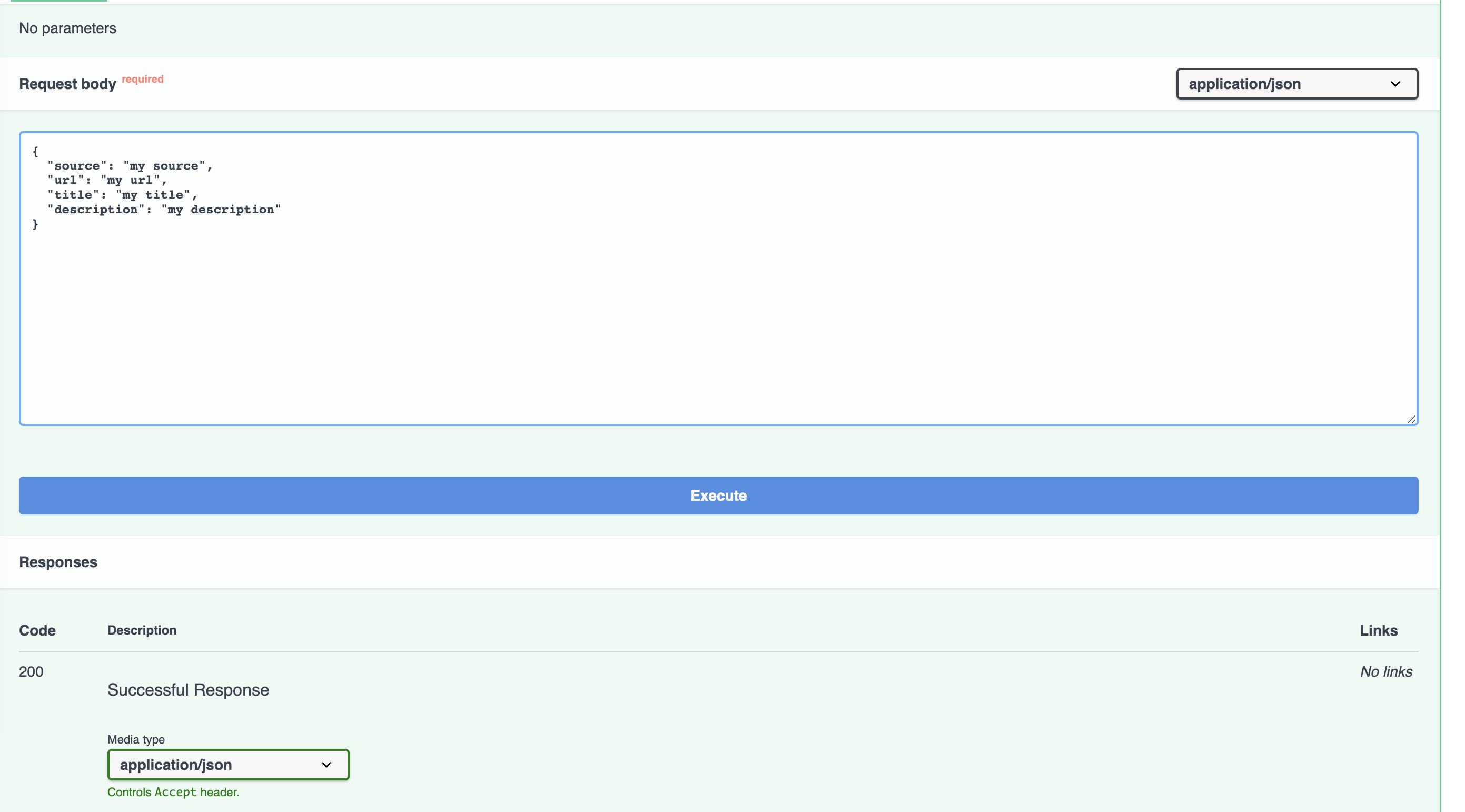

Click "Try it out" to edit the input request, then click "Execute" to see the model's prediction in the web server's response.
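If you prefer to script these requests rather than use the Swagger UI, here is a minimal standard-library sketch. It assumes the server is running at the default address and that `/predict` accepts a JSON object with the four fields used in the suggested requests below (`source`, `url`, `title`, `description`); the authoritative schema is whatever request model `deploy/service.py` defines. Because this README does not show the response format, `predict` simply returns the decoded JSON. `build_predict_request` and `predict` are hypothetical helpers.

```python
import json
import urllib.request

PREDICT_URL = "http://127.0.0.1:8000/predict"
REQUIRED_FIELDS = {"source", "url", "title", "description"}


def build_predict_request(article: dict, url: str = PREDICT_URL) -> urllib.request.Request:
    """Build a POST request with the article serialized as the JSON body."""
    missing = REQUIRED_FIELDS - set(article)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return urllib.request.Request(
        url,
        data=json.dumps(article).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(article: dict, timeout: float = 10.0):
    """Send the article to the running server and decode the JSON response."""
    with urllib.request.urlopen(build_predict_request(article), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


sample = {
    "source": "BBC Technology",
    "url": "http://news.bbc.co.uk/go/click/rss/0.91/public/-/2/hi/business/4144939.stm",
    "title": "System gremlins resolved at HSBC",
    "description": "Computer glitches which led to chaos for HSBC customers on Monday "
    "are fixed, the High Street bank confirms.",
}

# With the server running:
# print(predict(sample))
```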

Some suggested requests to try out:

```
{
  "source": "BBC Technology",
  "url": "http://news.bbc.co.uk/go/click/rss/0.91/public/-/2/hi/business/4144939.stm",
  "title": "System gremlins resolved at HSBC",
  "description": "Computer glitches which led to chaos for HSBC customers on Monday are fixed, the High Street bank confirms."
}
```

```
{
  "source": "Yahoo World",
  "url": "http://us.rd.yahoo.com/dailynews/rss/world/*http://story.news.yahoo.com/news?tmpl=story2&u=/nm/20050104/bs_nm/markets_stocks_us_europe_dc",
  "title": "Wall Street Set to Open Firmer (Reuters)",
  "description": "Reuters - Wall Street was set to start higher on Tuesday to recoup some of the prior session's losses, though high-profile retailer Amazon.com may come under pressure after a broker downgrade."
}
```

```
{
  "source": "New York Times",
  "url": "",
  "title": "Weis chooses not to make pickoff",
  "description": "Bill Belichick won't have to worry about Charlie Weis raiding his coaching staff for Notre Dame. But we'll have to see whether new Miami Dolphins coach Nick Saban has an eye on any of his former assistants."
}
```

```
{
  "source": "Boston Globe",
  "url": "http://www.boston.com/business/articles/2005/01/04/mike_wallace_subpoenaed?rss_id=BostonGlobe--BusinessNews",
  "title": "Mike Wallace subpoenaed",
  "description": "Richard Scrushy once sat down to talk with 60 Minutes correspondent Mike Wallace about allegations that Scrushy started a huge fraud while chief executive of rehabilitation giant HealthSouth Corp. Now, Scrushy wants Wallace to do the talking."
}
```

```
{
  "source": "Reuters World",
  "url": "http://www.reuters.com/newsArticle.jhtml?type=worldNews&storyID=7228962",
  "title": "Peru Arrests Siege Leader, to Storm Police Post",
  "description": "LIMA, Peru (Reuters) - Peruvian authorities arrested a former army major who led a three-day uprising in a southern Andean town and will storm the police station where some of his 200 supporters remain unless they surrender soon, Prime Minister Carlos Ferrero said on Tuesday."
}
```

```
{
  "source": "The Washington Post",
  "url": "http://www.washingtonpost.com/wp-dyn/articles/A46063-2005Jan3.html?nav=rss_sports",
  "title": "Ruffin Fills Key Role",
  "description": "With power forward Etan Thomas having missed the entire season, reserve forward Michael Ruffin has done well in taking his place."
}
```

Remember, we are containerizing this service for deployment on AWS Lambda. API Gateway wraps each incoming HTTP request in a specific event schema when routing it to the deployed Lambda function. We use Mangum (https://mangum.io/) to translate this event into a regular HTTP request that our FastAPI endpoint can handle.
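To make this concrete: behind API Gateway, the article JSON does not arrive as the request body directly. It is serialized into the `body` *string* of a larger proxy event, which Mangum unpacks. The sketch below builds such an event around a payload. Its keys are a trimmed-down subset of the full sample event used with `curl` further down; we have not verified which of them Mangum strictly requires, so fall back to the full event if this one is rejected. `make_apigw_event` is a hypothetical helper.

```python
import json


def make_apigw_event(path: str, payload: dict, method: str = "POST") -> dict:
    """Wrap a JSON payload in a minimal API Gateway (REST) proxy event.

    Keys are copied from the full sample event; "body" holds the payload
    serialized to a JSON string, not a nested object.
    """
    return {
        "resource": "/",
        "path": path,
        "httpMethod": method,
        "headers": {"Content-Type": "application/json"},
        "multiValueHeaders": {"Content-Type": ["application/json"]},
        "queryStringParameters": {},
        "multiValueQueryStringParameters": {},
        "pathParameters": {},
        "stageVariables": {},
        "requestContext": {
            "resourcePath": path,
            "httpMethod": method,
            "stage": "testStage",
            "identity": {"sourceIp": "127.0.0.1"},
        },
        "body": json.dumps(payload),
        "isBase64Encoded": False,
    }


event = make_apigw_event(
    "/predict",
    {"source": "", "url": "string", "title": "string", "description": "Ellis L. Marsalis Sr. has died."},
)
```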

- Build the Docker image:

```
$ docker build --platform linux/amd64 -t news-classifier .
```

- Start the container:

```
$ docker run -p 9000:8080 news-classifier:latest
```

- Test the Docker container with an example request:

Execute the following example request from the command line: https://gist.github.com/nihit/6eabbc571a24fa0318b3893f4eaa6321

```
$ curl --location --request POST 'http://localhost:9000/2015-03-31/functions/function/invocations' \
--header 'Content-Type: application/json' \
--data-raw '{
    "resource": "/",
    "path": "/predict",
    "httpMethod": "POST",
    "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "cache-control": "no-cache",
        "CloudFront-Forwarded-Proto": "https",
        "CloudFront-Is-Desktop-Viewer": "true",
        "CloudFront-Is-Mobile-Viewer": "false",
        "CloudFront-Is-SmartTV-Viewer": "false",
        "CloudFront-Is-Tablet-Viewer": "false",
        "CloudFront-Viewer-Country": "US",
        "Content-Type": "application/json",
        "headerName": "headerValue",
        "Host": "gy415nuibc.execute-api.us-east-1.amazonaws.com",
        "Postman-Token": "9f583ef0-ed83-4a38-aef3-eb9ce3f7a57f",
        "User-Agent": "PostmanRuntime/2.4.5",
        "Via": "1.1 d98420743a69852491bbdea73f7680bd.cloudfront.net (CloudFront)",
        "X-Amz-Cf-Id": "pn-PWIJc6thYnZm5P0NMgOUglL1DYtl0gdeJky8tqsg8iS_sgsKD1A==",
        "X-Forwarded-For": "54.240.196.186, 54.182.214.83",
        "X-Forwarded-Port": "443",
        "X-Forwarded-Proto": "https"
    },
    "multiValueHeaders": {
        "Accept": ["*/*"],
        "Accept-Encoding": ["gzip, deflate"],
        "cache-control": ["no-cache"],
        "CloudFront-Forwarded-Proto": ["https"],
        "CloudFront-Is-Desktop-Viewer": ["true"],
        "CloudFront-Is-Mobile-Viewer": ["false"],
        "CloudFront-Is-SmartTV-Viewer": ["false"],
        "CloudFront-Is-Tablet-Viewer": ["false"],
        "CloudFront-Viewer-Country": ["US"],
        "": [""],
        "Content-Type": ["application/json"],
        "headerName": ["headerValue"],
        "Host": ["gy415nuibc.execute-api.us-east-1.amazonaws.com"],
        "Postman-Token": ["9f583ef0-ed83-4a38-aef3-eb9ce3f7a57f"],
        "User-Agent": ["PostmanRuntime/2.4.5"],
        "Via": ["1.1 d98420743a69852491bbdea73f7680bd.cloudfront.net (CloudFront)"],
        "X-Amz-Cf-Id": ["pn-PWIJc6thYnZm5P0NMgOUglL1DYtl0gdeJky8tqsg8iS_sgsKD1A=="],
        "X-Forwarded-For": ["54.240.196.186, 54.182.214.83"],
        "X-Forwarded-Port": ["443"],
        "X-Forwarded-Proto": ["https"]
    },
    "queryStringParameters": {},
    "multiValueQueryStringParameters": {},
    "pathParameters": {},
    "stageVariables": {
        "stageVariableName": "stageVariableValue"
    },
    "requestContext": {
        "accountId": "12345678912",
        "resourceId": "roq9wj",
        "stage": "testStage",
        "requestId": "deef4878-7910-11e6-8f14-25afc3e9ae33",
        "identity": {
            "cognitoIdentityPoolId": null,
            "accountId": null,
            "cognitoIdentityId": null,
            "caller": null,
            "apiKey": null,
            "sourceIp": "192.168.196.186",
            "cognitoAuthenticationType": null,
            "cognitoAuthenticationProvider": null,
            "userArn": null,
            "userAgent": "PostmanRuntime/2.4.5",
            "user": null
        },
        "resourcePath": "/predict",
        "httpMethod": "POST",
        "apiId": "gy415nuibc"
    },
    "body": "{\"source\": \"\", \"url\": \"string\", \"title\": \"string\", \"description\": \"Ellis L. Marsalis Sr., the patriarch of a family of world famous jazz musicians, including grandson Wynton Marsalis, has died. He was 96.\"}",
    "isBase64Encoded": false
}'
```

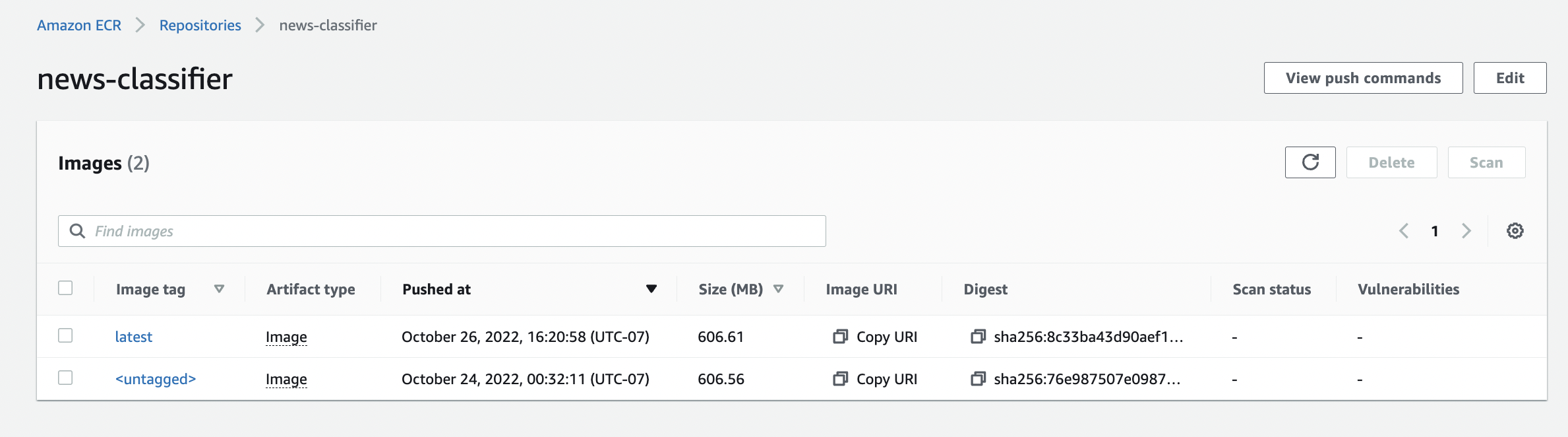
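The `curl` call above can also be made from Python, which is handy for sending several events in a row. This sketch assumes the container is running with the port mapping shown earlier (`-p 9000:8080`) and that Mangum returns the usual API Gateway proxy response (`statusCode`, `headers`, and a string `body`). The helpers are hypothetical, and the decoded body contains whatever `/predict` returns.

```python
import base64
import json
import urllib.request

# Invocation endpoint of the Lambda Runtime Interface Emulator inside the container,
# reachable on localhost:9000 because of `docker run -p 9000:8080 ...`.
RIE_URL = "http://localhost:9000/2015-03-31/functions/function/invocations"


def build_invocation(event: dict, url: str = RIE_URL) -> urllib.request.Request:
    """POST the whole Lambda event (not just the article JSON) to the emulator."""
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def unwrap_proxy_response(response: dict):
    """Return (status code, decoded JSON body) from an API Gateway-style proxy response."""
    body = response.get("body") or ""
    if response.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")
    return response["statusCode"], (json.loads(body) if body else None)


def invoke_local(event: dict, timeout: float = 60.0):
    """Send the event to the running container and unwrap the handler's response."""
    with urllib.request.urlopen(build_invocation(event), timeout=timeout) as resp:
        return unwrap_proxy_response(json.loads(resp.read()))
```

For example, save the JSON passed to `--data-raw` above to a file (say `event.json`, a name chosen for illustration), load it with `json.load`, and pass the resulting dictionary to `invoke_local`.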

Once you have built the Docker image and tested the container locally:

[1] Log in to the ECR repository:

```
aws ecr get-login-password --region us-west-2 | docker login --username AWS --password-stdin 215673578938.dkr.ecr.us-west-2.amazonaws.com
```

[2] Push the Docker image to ECR:

```
docker tag news-classifier:latest 215673578938.dkr.ecr.us-west-2.amazonaws.com/news-classifier:latest
docker push 215673578938.dkr.ecr.us-west-2.amazonaws.com/news-classifier:latest
```

[3] Create or update the Lambda function:

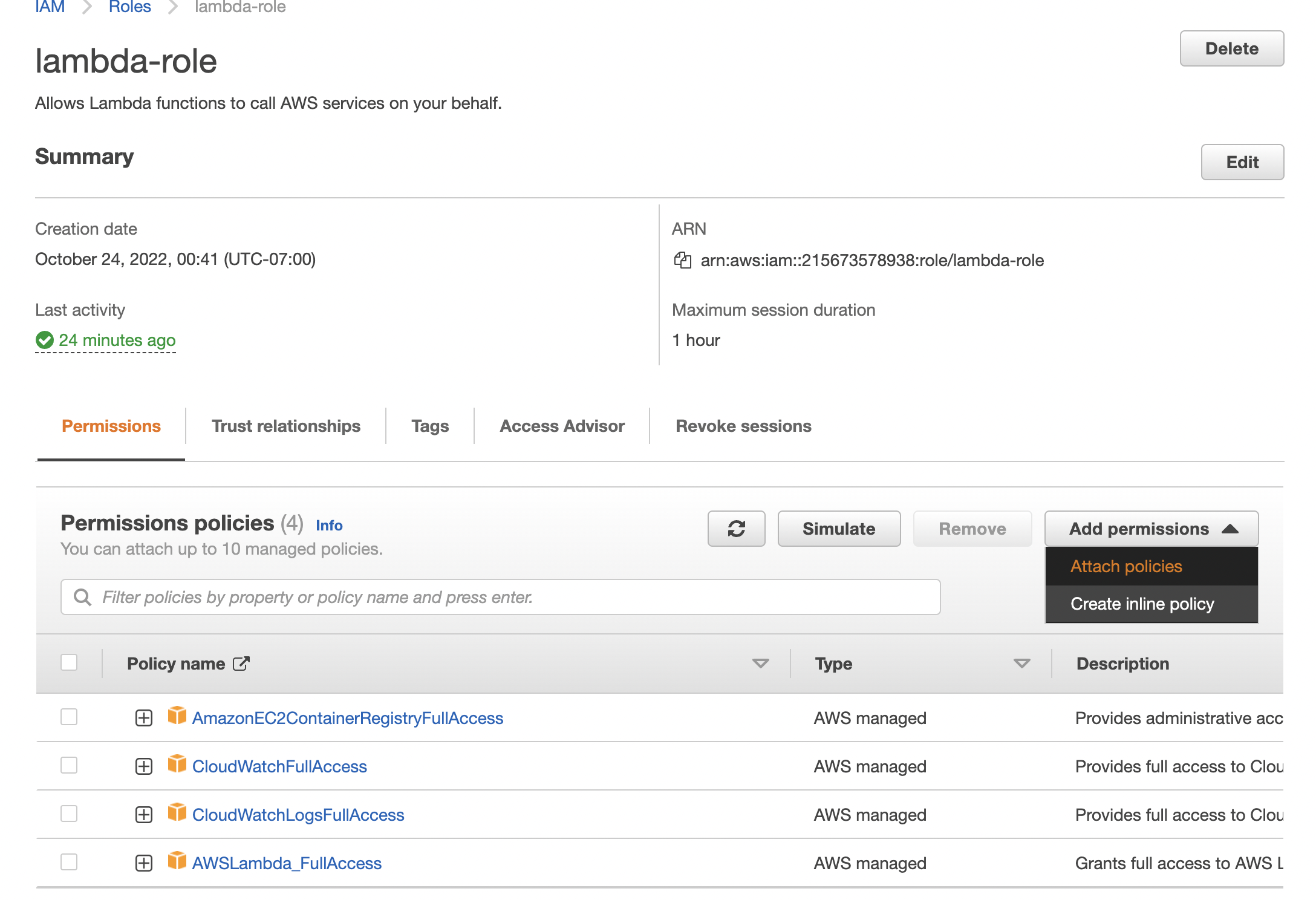

```
aws lambda create-function --function-name news-classifier-lambda \
    --package-type Image \
    --code ImageUri=215673578938.dkr.ecr.us-west-2.amazonaws.com/news-classifier:latest \
    --role arn:aws:iam::215673578938:role/lambda-role \
    --timeout 900 --memory-size 2048
```

The response should look similar to:

```
{
    "FunctionName": "news-classifier-lambda",
    "FunctionArn": "...",
    "Role": "...",
    "CodeSize": 0,
    "Description": "",
    "Timeout": 3,
    "MemorySize": 128,
    "LastModified": "2022-10-24T07:43:41.855+0000",
    "CodeSha256": "76e987507e09875d833208f21990d2543a36dbce5ff7db5935799effc6c94e1c",
    "Version": "$LATEST",
    "TracingConfig": {
        "Mode": "PassThrough"
    },
    "RevisionId": "35be7fc5-ab9c-4ce7-9a57-46f40ed2cb34",
    "State": "Pending",
    "StateReason": "The function is being created.",
    "StateReasonCode": "Creating",
    "PackageType": "Image",
    "Architectures": [
        "x86_64"
    ]
}
```
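This step can also be scripted with boto3, the AWS SDK for Python, which covers the "update" half as well: `create_function` raises a `ResourceConflictException` if the function already exists, in which case `update_function_code` points it at the newly pushed image. This is a sketch rather than part of the project. It assumes boto3 is installed and AWS credentials are configured, and it reuses the account ID, region, role, timeout, and memory size from the commands above; `ecr_image_uri`, `create_function_kwargs`, and `deploy` are hypothetical helpers, and only the first two run without AWS access.

```python
ACCOUNT_ID = "215673578938"
REGION = "us-west-2"
REPOSITORY = "news-classifier"
FUNCTION_NAME = "news-classifier-lambda"
ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/lambda-role"


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """ECR image URIs have the form <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def create_function_kwargs(image_uri: str) -> dict:
    """Arguments mirroring the `aws lambda create-function` command above."""
    return {
        "FunctionName": FUNCTION_NAME,
        "PackageType": "Image",
        "Code": {"ImageUri": image_uri},
        "Role": ROLE_ARN,
        "Timeout": 900,  # seconds; 900 is Lambda's maximum
        "MemorySize": 2048,  # MB
    }


def deploy(image_uri: str) -> dict:
    """Create the function on first deploy; afterwards point it at the new image."""
    import boto3  # imported lazily so the helpers above work without boto3 installed

    client = boto3.client("lambda", region_name=REGION)
    try:
        return client.create_function(**create_function_kwargs(image_uri))
    except client.exceptions.ResourceConflictException:
        # The function already exists: update its code to the freshly pushed image.
        return client.update_function_code(FunctionName=FUNCTION_NAME, ImageUri=image_uri)


# Example: deploy(ecr_image_uri(ACCOUNT_ID, REGION, REPOSITORY))
```

Calling `update_function_code` after each push matters even when the tag stays `latest`, because the function keeps running the image it was last pointed at.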

We can now try invoking the Lambda function from the AWS console!
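In the console, you can use the same API Gateway event passed to `curl` above as a test event. Alternatively, the deployed function can be invoked from Python. This is a minimal boto3 sketch that assumes configured AWS credentials with permission to invoke the function; `invoke_kwargs` and `invoke_deployed` are hypothetical helpers. The returned dictionary is the handler's raw proxy response, with the prediction JSON inside its `body` string.

```python
import json


def invoke_kwargs(function_name: str, event: dict) -> dict:
    """Arguments for a synchronous Lambda invocation carrying an API Gateway-style event."""
    return {
        "FunctionName": function_name,
        "InvocationType": "RequestResponse",  # wait for the handler's result
        "Payload": json.dumps(event).encode("utf-8"),
    }


def invoke_deployed(function_name: str, event: dict, region: str = "us-west-2") -> dict:
    """Invoke the deployed function and return the handler's raw (proxy) response."""
    import boto3  # imported lazily; needs credentials allowed to call lambda:InvokeFunction

    client = boto3.client("lambda", region_name=region)
    response = client.invoke(**invoke_kwargs(function_name, event))
    result = json.loads(response["Payload"].read())
    if "FunctionError" in response:
        # In this case the payload describes the error instead of an HTTP-style response.
        raise RuntimeError(f"Lambda function error: {result}")
    return result


# Example: invoke_deployed("news-classifier-lambda", event)
# where `event` is, e.g., the JSON passed to --data-raw in the curl command above.
```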

Some good resources are:

- Blog post showing FastAPI deployment with API Gateway with a Dockerized Lambda

- Another blog post showing a similar deployment

- AWS Docs for creating a Python Lambda with Docker

Future:

- Blog post showing Dockerized FastAPI with Fargate (and with Terraform) if we ever choose to move past Lambda

Other useful Docker commands:

- `docker ps`: List running containers
- `docker images`: List images available locally
- `docker images --digests`: List images along with the SHA256 digest of each image
- `docker container prune`: Delete all stopped containers
- `docker rmi`: Delete an image
- `docker rmi -f $(docker images -aq)`: Delete all images
- `docker image prune`: Delete all dangling images