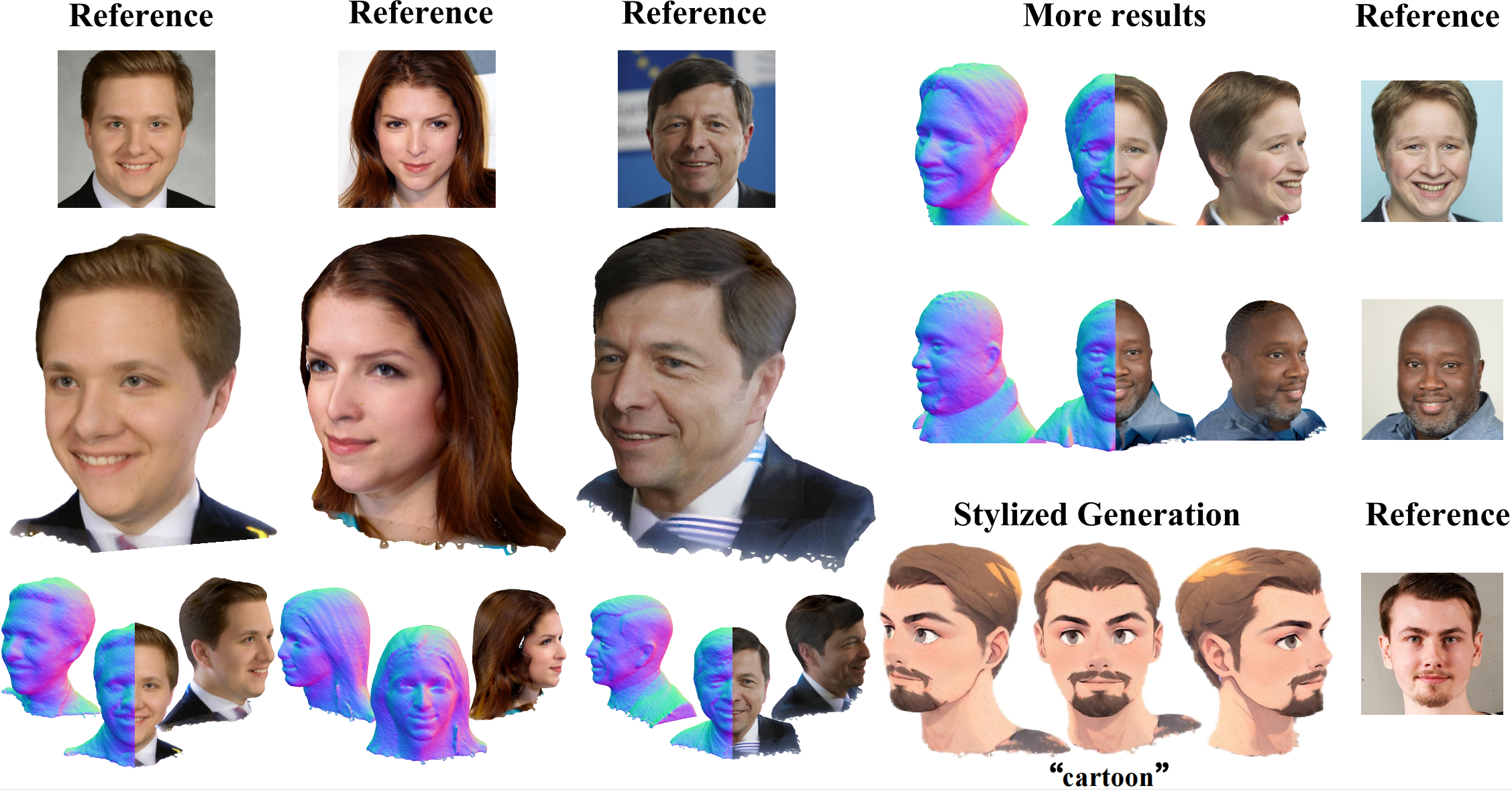

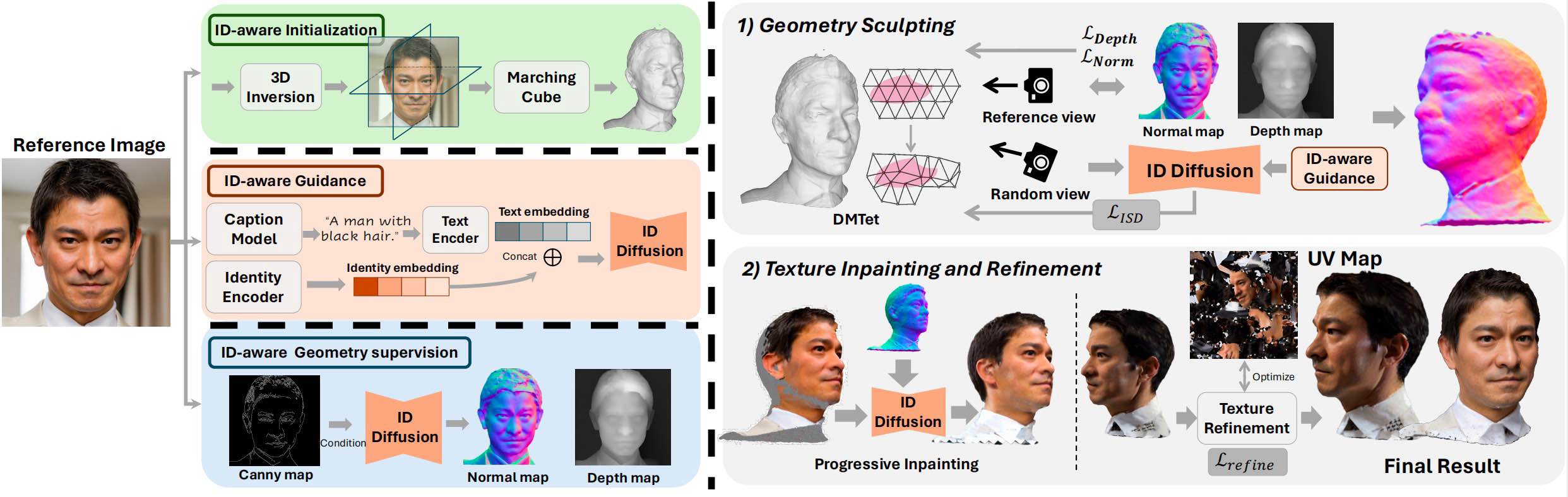

Official implementation of ID-Sculpt, a method for generating high-quality, realistic 3D heads from a single in-the-wild portrait image.

Jinkun Hao1,

Junshu Tang1,

Jiangning Zhang2,

Ran Yi1,

Yijia Hong1,

Moran Li2,

Weijian Cao2,

Yating Wang1,

Chengjie Wang2,

Lizhuang Ma1

1Shanghai Jiao Tong University, 2Youtu Lab, Tencent

This part is the same as in the original threestudio. Skip it if you have already installed the environment.

- You must have an NVIDIA graphics card with at least 20GB VRAM and have CUDA installed.

- Install Python >= 3.8.

- (Optional, Recommended) Create a virtual environment:

pip3 install virtualenv # if virtualenv is installed, skip it

python3 -m virtualenv venv

. venv/bin/activate

# Newer pip versions, e.g. pip-23.x, can be much faster than old versions, e.g. pip-20.x.

# For instance, it caches the wheels of git packages to avoid unnecessarily rebuilding them later.

python3 -m pip install --upgrade pip

- Install PyTorch >= 1.12. We have tested on torch1.12.1+cu113 and torch2.0.0+cu118, but other versions should also work fine.

# torch1.12.1+cu113

pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 --extra-index-url https://download.pytorch.org/whl/cu113

# or torch2.0.0+cu118

pip install torch torchvision --index-url https://download.pytorch.org/whl/cu118

- (Optional, Recommended) Install ninja to speed up the compilation of CUDA extensions:

pip install ninja

- Install dependencies:

pip install -r requirements.txt

- (Optional) tiny-cuda-nn installation might require downgrading pip to 23.0.1.
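The requirements above (Python >= 3.8, PyTorch with CUDA, at least 20GB of VRAM) can be checked before starting a long run. The following is a minimal sketch and not part of the repository: the helper names `meets_vram_requirement` and `report_environment` are hypothetical, and reading "20GB" as 20 * 10^9 bytes is an assumption.

```python
import sys

MIN_PYTHON = (3, 8)   # from the requirement "Python >= 3.8"
MIN_VRAM_GB = 20      # assumption: "20GB" is read as 20 * 10**9 bytes


def meets_vram_requirement(total_bytes, min_gb=MIN_VRAM_GB):
    """Return True if a device with total_bytes of memory has at least min_gb GB."""
    return total_bytes >= min_gb * 10 ** 9


def report_environment():
    """Return a list of human-readable problems (hypothetical helper)."""
    problems = []
    if sys.version_info < MIN_PYTHON:
        problems.append("Python >= 3.8 is required")
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
        return problems
    if not torch.cuda.is_available():
        problems.append("CUDA is not available to PyTorch")
        return problems
    # Only the first GPU is inspected in this sketch.
    total = torch.cuda.get_device_properties(0).total_memory
    if not meets_vram_requirement(total):
        problems.append(
            f"GPU 0 has {total / 10 ** 9:.1f} GB, below {MIN_VRAM_GB} GB"
        )
    return problems


if __name__ == "__main__":
    for line in report_environment() or ["Environment looks OK"]:
        print(line)
```

An empty list from `report_environment()` means none of these checks failed; it does not guarantee that the CUDA extensions will compile.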

- cd sd_model

- Download the fine-tuned text-to-human-normal models (HumanNorm, CVPR 2024) from Hugging Face: Normal-adapted-model, Depth-adapted-model

- Download the ControlNet models (all models on Hugging Face use the torch.float16 weight dtype):

- normal ('models--lllyasviel--control_v11p_sd15_normalbae')

- canny ('models--lllyasviel--control_v11p_sd15_canny')

- landmark ('models--CrucibleAI--ControlNetMediaPipeFace')

- Prepare insightface checkpoint.

Download buffalo_l, unpack the buffalo_l.zip file, and organize the insightface directory like this:

./sd_model
└── insightface/
    └── models/
        └── buffalo_l/

- Prepare IP-Adapter checkpoint. Download ip-adapter-faceid-portrait-v11_sd15 following the official IP-Adapter guidelines.

- Download other models:

- models--stabilityai--sd-vae-ft-mse

- models--runwayml--stable-diffusion-v1-5

- models--SG161222--Realistic_Vision_V4.0_noVAE

- Download vgg16 to $/root/.cache/torch/hub/checkpoints/vgg16-397923af.pth

After downloading, sd_model/ is structured like this:

./sd_model
├── insightface/
├── models--stabilityai--sd-vae-ft-mse/
├── models--runwayml--stable-diffusion-v1-5/
├── models--SG161222--Realistic_Vision_V4.0_noVAE/
├── models--lllyasviel--control_v11p_sd15_normalbae/
├── models--lllyasviel--control_v11p_sd15_canny/
├── models--CrucibleAI--ControlNetMediaPipeFace/
└── models--xanderhuang--normal-adapted-sd1.5/
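A quick way to confirm that the downloads landed in the right place is to compare the model folder against the directory names listed above. This is a minimal sketch, not a script shipped with the repository: the function name `missing_model_dirs` is hypothetical, and the root folder is assumed to be `sd_model/` in the current working directory.

```python
from pathlib import Path

# Directory names copied from the layout described above.
EXPECTED_MODEL_DIRS = [
    "insightface/models/buffalo_l",
    "models--stabilityai--sd-vae-ft-mse",
    "models--runwayml--stable-diffusion-v1-5",
    "models--SG161222--Realistic_Vision_V4.0_noVAE",
    "models--lllyasviel--control_v11p_sd15_normalbae",
    "models--lllyasviel--control_v11p_sd15_canny",
    "models--CrucibleAI--ControlNetMediaPipeFace",
    "models--xanderhuang--normal-adapted-sd1.5",
]


def missing_model_dirs(root, expected=EXPECTED_MODEL_DIRS):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    # Assumption: the script is run from the directory that contains sd_model/.
    missing = missing_model_dirs("sd_model")
    if missing:
        print("Missing model directories:")
        for name in missing:
            print("  " + name)
    else:
        print("All expected model directories are present.")
```

The check only looks at directory names; it does not verify that the weights inside are complete or in float16.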

You can download the predefined tetrahedra for DMTet from this link or generate them locally.

After downloading, the load/ is structured like:

./load
├── lights/
├── shapes/
└── tets
    ├── ...
    ├── 128_tets.npz
    ├── 256_tets.npz
    └── 512_tets.npz

preprocess_data/
├── ...
└── id_xxx
    ├── mask/
    │   ├── ...
    │   ├── 030.png
    │   └── 031.png
    ├── normal/
    │   ├── ...
    │   ├── 030.png
    │   └── 031.png
    ├── rgb/
    │   ├── ...
    │   ├── 030.png
    │   └── 031.png
    ├── cam_params.json # cam_param corresponding to img in mask/normal/rgb
    ├── camera.npy # reference view cam_param
    ├── face_mask.png # mask of face region
    ├── img_canny.png
    ├── img_depth.png
    ├── img_normal.png
    ├── img.png # reference portrait image
    ├── init_mesh.ply # initialized mesh
    ├── laion.txt # face landmark coordinates
    └── mask.png # mask of foreground region
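Before starting optimization, it can help to verify that a preprocessed identity folder matches the layout above. The sketch below is illustrative and not part of the repository: `check_identity_dir` is a hypothetical helper, and it assumes that mask/, normal/ and rgb/ contain PNG files with identical names, which the layout suggests but does not state.

```python
from pathlib import Path

VIEW_DIRS = ["mask", "normal", "rgb"]
# File names copied from the layout described above.
REQUIRED_FILES = [
    "cam_params.json", "camera.npy", "face_mask.png", "img_canny.png",
    "img_depth.png", "img_normal.png", "img.png", "init_mesh.ply",
    "laion.txt", "mask.png",
]


def check_identity_dir(id_dir):
    """Return a list of problems found in one preprocess_data/id_xxx folder."""
    id_dir = Path(id_dir)
    problems = [
        f"missing file: {name}"
        for name in REQUIRED_FILES
        if not (id_dir / name).is_file()
    ]
    # Collect the PNG file names of every per-view folder that exists.
    names = {}
    for view in VIEW_DIRS:
        folder = id_dir / view
        if not folder.is_dir():
            problems.append(f"missing directory: {view}/")
            continue
        names[view] = {p.name for p in folder.glob("*.png")}
    # Assumption: all three folders hold the same file names (030.png, ...).
    if len(names) == len(VIEW_DIRS):
        reference = names["rgb"]
        for view in ("mask", "normal"):
            if names[view] != reference:
                diff = sorted(names[view] ^ reference)
                problems.append(f"{view}/ and rgb/ differ: {diff}")
    return problems


if __name__ == "__main__":
    # "id_xxx" is the placeholder name used in the layout, not a real folder.
    for problem in check_identity_dir("preprocess_data/id_xxx"):
        print(problem)
```

An empty result only means the file names line up; the contents of cam_params.json and the images are not inspected.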

1st. Link the sd models to the current project path:

ln -s ../../../sd_model sd_model

2nd. Preprocessing:

- Put images in preprocess/dataset/head_img_new

- Add image captions in preprocess/get_facedepth.py and preprocess/get_facenormal.py

- Run preprocessing:

cd preprocess && source preprocess.sh

3rd. Run optimization: modify the variables (prompt, image_path, and tag) in run_exp.py, then run:

python run_exp.py

If you find our work useful in your research, please cite:

@misc{hao2024portrait3d,
  title={Portrait3D: 3D Head Generation from Single In-the-wild Portrait Image},
  author={Jinkun Hao and Junshu Tang and Jiangning Zhang and Ran Yi and Yijia Hong and Moran Li and Weijian Cao and Yating Wang and Lizhuang Ma},
  year={2024},
  eprint={2406.16710},
  archivePrefix={arXiv},
}

Our project benefits from the amazing open-source projects:

We are grateful for their contributions.