Official Implementation of "PMP: Learning to Physically Interact with Environments using Part-wise Motion Priors" (SIGGRAPH 2023) (paper, video, talk)

- Assets
  - Deepmimic-MPL Humanoid
  - Objects for interaction
  - Retargeted motion data (check for the license)
- Simulation Configuration Files
  - .yaml files for whole-body and hand-only gyms
  - documentation of the details
- Hand-only Gym: training one hand to grab a bar
  - Model (Train / Test)
    - pretrained weight
    - expert trajectories
  - Environment
- Shell script to install all external dependencies
- Retargeting pipeline (Mixamo to Deepmimic-MPL Humanoid)
- Whole-body Gym: training the hand-equipped humanoid
  - Model (Train / Test)
    - pretrained weights
  - Environments

Note: I am currently focusing mainly on other projects, so this repo will be updated slowly. If you need early access to the full implementation, please contact me through my personal website.

This code is based on Isaac Gym Preview 4.

Please install Isaac Gym Preview 4 and create a conda environment by following its installation instructions.

We assume the conda environment is named pmp_env.

Then, run the following script.

conda activate pmp_env

cd pmp

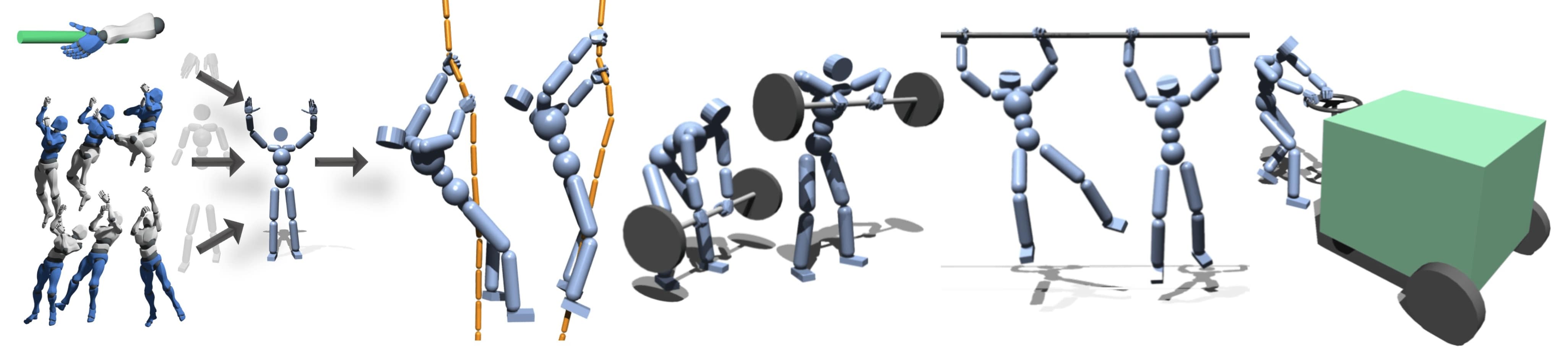

pip install -e .

Our paper introduces an interaction prior obtained from hand-only simulation. We provide an example gym for training hand grasping (code pointer). The hand-only policy is trained to grasp a bar against arbitrary forces and torques.
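To make "arbitrary forces and torques" concrete, the sketch below samples one random external force and torque per simulated environment, with uniformly random directions and bounded magnitudes. It is a minimal illustration under assumptions, not the repo's implementation: the function name `sample_perturbations`, the bounds `max_force` / `max_torque`, and the idea that the vectors are applied to the bar are all hypothetical, and it uses plain NumPy rather than any Isaac Gym API.

```python
import numpy as np


def sample_perturbations(num_envs, max_force, max_torque, rng):
    """Hypothetical sketch: one random force and torque vector per environment.

    Directions are uniform on the unit sphere (normalized Gaussian samples);
    magnitudes are uniform in [0, max].
    """

    def random_vectors(max_mag):
        directions = rng.normal(size=(num_envs, 3))
        directions /= np.linalg.norm(directions, axis=1, keepdims=True)
        magnitudes = rng.uniform(0.0, max_mag, size=(num_envs, 1))
        return directions * magnitudes

    return random_vectors(max_force), random_vectors(max_torque)


rng = np.random.default_rng(0)
# Assumed bounds for illustration only; the real gym may use other values.
forces, torques = sample_perturbations(num_envs=4, max_force=20.0, max_torque=2.0, rng=rng)
print(forces.shape, torques.shape)  # (4, 3) (4, 3)
```

Resampling these vectors periodically during an episode would expose the policy to a wide range of disturbances, which is the intent described above.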

We use a simple PPO algorithm to train the policy (code pointer). We provide the pretrained weights (Google Drive). Additionally, we share the demonstrations (i.e., state-action pairs) extracted from the pretrained policy, which are later used as the interaction prior for whole-body training.
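Extracting demonstrations amounts to rolling out the trained policy and recording the state it saw together with the action it took. The sketch below shows that loop with a toy linear "policy" and toy dynamics standing in for the pretrained network and the grasping gym; `collect_demonstrations`, `reset_fn`, and `step_fn` are hypothetical names, and the actual file format of the shared demonstrations is not assumed here.

```python
import numpy as np


def collect_demonstrations(policy, reset_fn, step_fn, num_steps):
    """Roll out a policy and return stacked (state, action) arrays."""
    states, actions = [], []
    state = reset_fn()
    for _ in range(num_steps):
        action = policy(state)
        states.append(state)
        actions.append(action)
        state, done = step_fn(state, action)
        if done:  # start a new episode when the current one ends
            state = reset_fn()
    return np.stack(states), np.stack(actions)


rng = np.random.default_rng(0)
K = np.eye(2)
policy = lambda s: -K @ s  # toy stand-in for a pretrained policy's mean action
reset_fn = lambda: rng.uniform(-1.0, 1.0, size=2)


def step_fn(s, a):
    next_s = s + 0.1 * a  # toy dynamics, not the grasping gym
    return next_s, bool(np.linalg.norm(next_s) < 0.05)


S, A = collect_demonstrations(policy, reset_fn, step_fn, num_steps=100)
print(S.shape, A.shape)  # (100, 2) (100, 2)
```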

You can train a policy from scratch using the following commands:

cd isaacgymenvs

# train

python train.py headless=True task=HandGraspGym train=HandOnlyPPO experiment=hand_grasping

# test - visualize only one agent

python train.py headless=False test=True num_envs=1 task=HandGraspGym train=HandOnlyPPO checkpoint=pretrained/hand_grasping_weights.pth

However, please note that this grasping gym is only an example; you can replace it with a customized gym that suits your end goal (e.g., a gym for training punches).

TBA

This code is based on the official release of IsaacGymEnvs.

In particular, this code largely borrows the AMP implementation from the original codebase (paper, code).
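For readers unfamiliar with AMP, the sketch below shows the least-squares style reward from the AMP paper, r = max(0, 1 - 0.25 (D(s, s') - 1)^2), computed per body part and then combined. This is a hedged illustration: the reference codebase may compute the discriminator reward in a different form, and the part names, the weighted-sum combination, and the weights are assumptions made only for this example, not the PMP method as implemented in this repo.

```python
import numpy as np


def amp_style_reward(disc_output):
    """Least-squares AMP style reward: max(0, 1 - 0.25 * (d - 1)^2)."""
    return np.maximum(0.0, 1.0 - 0.25 * (disc_output - 1.0) ** 2)


def partwise_style_reward(disc_outputs, weights):
    """Combine per-part discriminator outputs into one reward per environment.

    disc_outputs: dict of part name -> array of shape (num_envs,)
    weights: dict of part name -> float (hypothetical weighting)
    """
    total = 0.0
    for part, d in disc_outputs.items():
        total = total + weights[part] * amp_style_reward(d)
    return total


# Hypothetical body-part split and weights, for illustration only.
outputs = {"upper_body": np.array([1.0, -1.0]), "lower_body": np.array([1.0, 3.0])}
weights = {"upper_body": 0.5, "lower_body": 0.5}
print(partwise_style_reward(outputs, weights))  # [1. 0.]
```

A discriminator output of 1 (judged "real") yields the maximum reward of 1, while outputs of -1 or 3 are clipped to 0.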

Our whole-body agent is modified from the humanoid in Deepmimic. We replace the sphere-shaped hands of the original humanoid with the hands from the Modular Prosthetic Limb (MPL).

We use Mixamo animation data to train the part-wise motion priors. We retarget the Mixamo animation data onto our whole-body humanoid using a process similar to the one in the original codebase.
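As a rough picture of what a single retargeting step can look like, the sketch below maps Mixamo joint names to humanoid joint names, copies local joint rotations, and scales the root translation by the ratio of character heights. It assumes the source and target rest poses are already aligned (a real pipeline usually needs per-joint rest-pose offset corrections) and that quaternions are stored as (x, y, z, w). `JOINT_MAP`, the humanoid joint names, and `retarget_frame` are hypothetical and do not describe the repo's retargeting pipeline.

```python
import numpy as np

# Hypothetical mapping from Mixamo joint names to humanoid joint names.
JOINT_MAP = {
    "mixamorig:Hips": "pelvis",
    "mixamorig:Spine": "torso",
    "mixamorig:LeftArm": "left_upper_arm",
}


def retarget_frame(src_root_pos, src_local_rots, src_height, tgt_height):
    """Retarget one frame: scale the root translation, copy mapped local rotations.

    src_local_rots: dict of source joint name -> unit quaternion (x, y, z, w).
    Joints missing from JOINT_MAP are dropped. Heights must use the same units.
    """
    scale = tgt_height / src_height
    tgt_root_pos = np.asarray(src_root_pos, dtype=float) * scale
    tgt_local_rots = {
        JOINT_MAP[name]: np.asarray(q, dtype=float)
        for name, q in src_local_rots.items()
        if name in JOINT_MAP
    }
    return tgt_root_pos, tgt_local_rots


root, rots = retarget_frame(
    src_root_pos=[0.0, 0.9, 0.45],
    src_local_rots={"mixamorig:Hips": [0.0, 0.0, 0.0, 1.0], "mixamorig:Neck": [0.0, 0.0, 0.0, 1.0]},
    src_height=1.8,
    tgt_height=1.6,
)
print(sorted(rots))  # ['pelvis']
```

Copying local rotations keeps the pose style, while scaling the root translation keeps foot contacts roughly consistent for a character of a different height.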