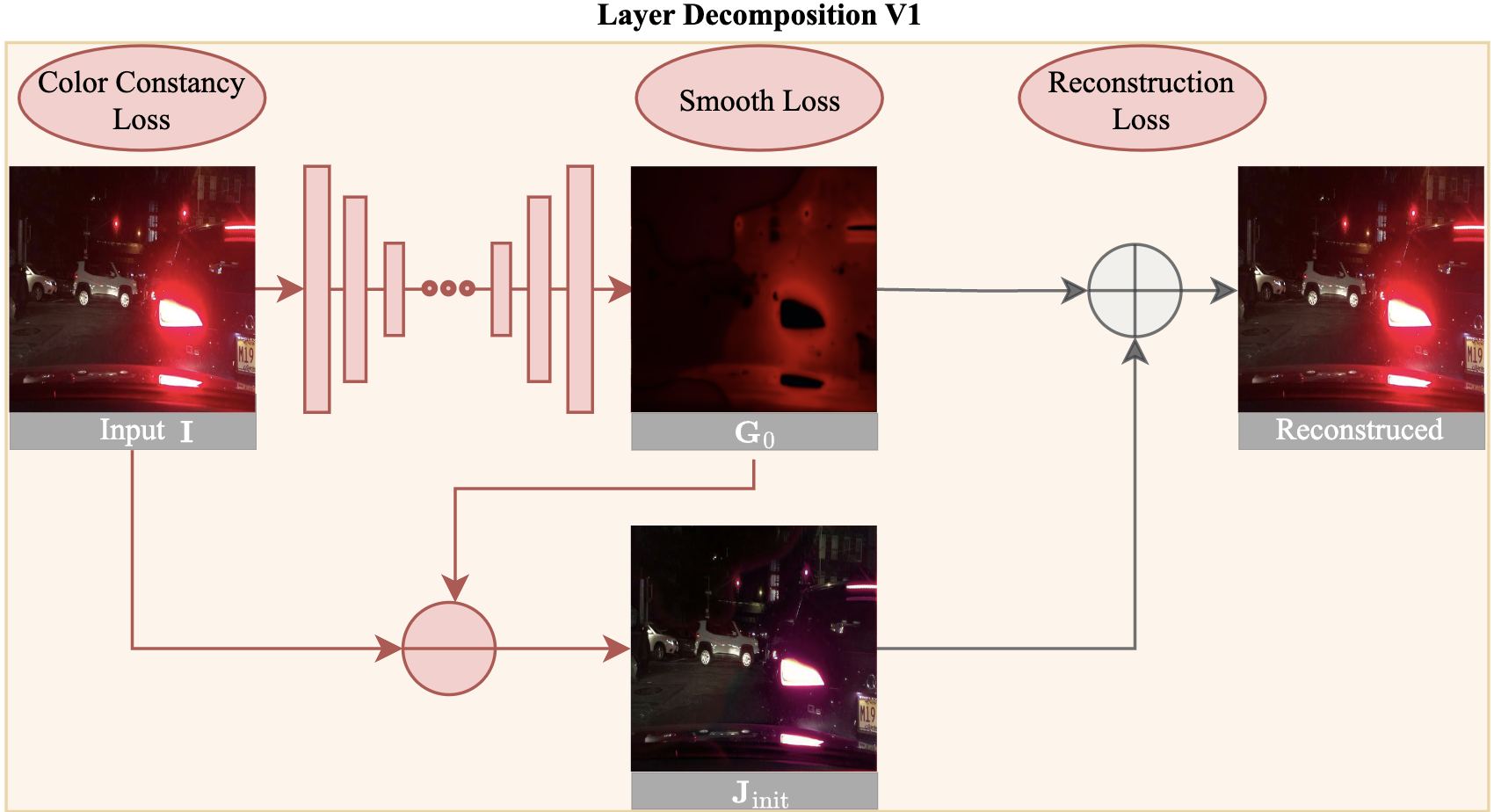

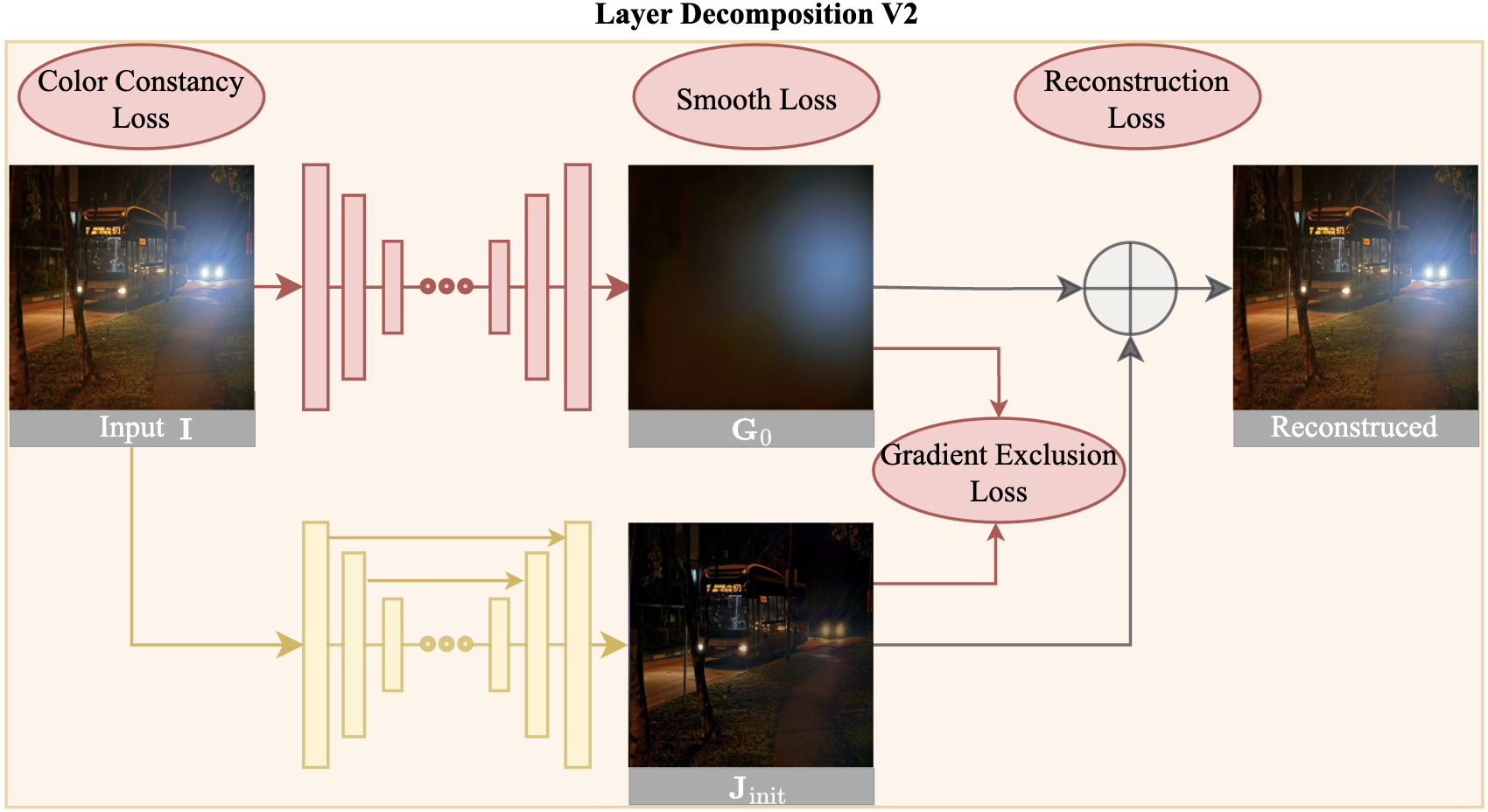

This is an implementation of the following paper.

Unsupervised Night Image Enhancement: When Layer Decomposition Meets Light-Effects Suppression

European Conference on Computer Vision (ECCV'2022)

Yeying Jin, Wenhan Yang and Robby T. Tan

[Paper]

[Supplementary]

Prerequisites, or follow bilibili

git clone https://github.com/jinyeying/night-enhancement.git

cd night-enhancement/

conda create -n night python=3.7

conda activate night

conda install pytorch=1.10.2 torchvision torchaudio cudatoolkit=11.3 -c pytorch

python3 -m pip install -r requirements.txt

- Light-effects data [Dropbox] | [BaiduPan (code:self)]

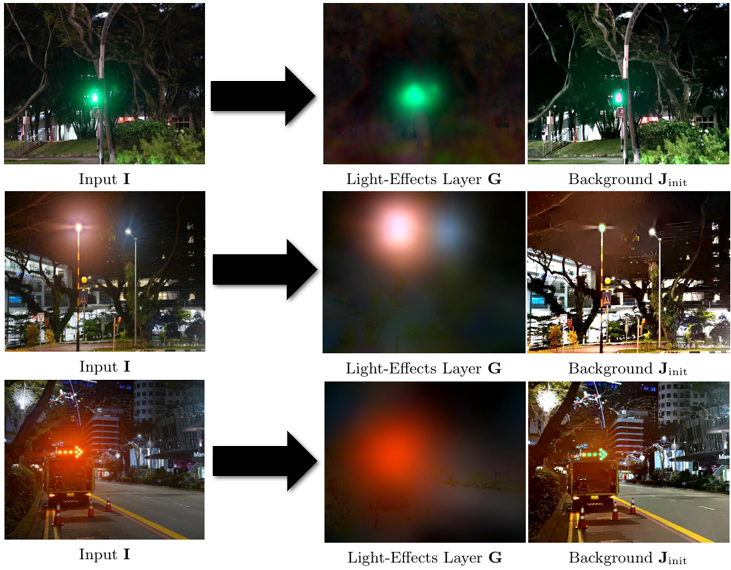

The light-effects data was collected from Flickr and captured by the authors, and covers multiple light colors in various scenes.

CVPR 2021: Nighttime Visibility Enhancement by Increasing the Dynamic Range and Suppression of Light Effects [Paper]

Aashish Sharma and Robby T. Tan

- LED data [Dropbox] | [BaiduPan (code:ledl)]

We captured images with dimmer light as the reference images.

- GTA5 nighttime fog [Dropbox] | [BaiduPan (code:67ml)]

Synthetic GTA5 nighttime fog data.

ECCV 2020: Nighttime Defogging Using High-Low Frequency Decomposition and Grayscale-Color Networks [Paper]

Wending Yan, Robby T. Tan and Dengxin Dai

- Syn-light-effects [Dropbox] | [BaiduPan (code:synt)]

The synthetic light-effects data is generated with an implementation of the following paper:

ICCV 2007: A New Convolution Kernel for Atmospheric Point Spread Function Applied to Computer Vision [Paper]

Run the Matlab code to generate Syn-light-effects:

glow_rendering_code/repro_ICCV2007_Fig5.m

- Download the pre-trained LOL model [Dropbox] | [BaiduPan (code:lol2)] and put it in `./results/LOL/model/`

- Put the test images in `./LOL/`

🔥🔥 Online test: https://replicate.com/cjwbw/night-enhancement

python main.py

- Download Low-Light Enhancement Dataset

1.1 LOL dataset

"Deep Retinex Decomposition for Low-Light Enhancement", BMVC, 2018. [Baiduyun (code:sdd0)] | [Google Drive]

1.2 LOL_Cap dataset

"Sparse Gradient Regularized Deep Retinex Network for Robust Low-Light Image Enhancement", TIP, 2021. [Baiduyun (code:l9xm)] | [Google Drive]

|-- LOL_Cap

|-- trainA ## Low

|-- trainB ## Normal

|-- testA ## Low

|-- testB ## Normal
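
Before training, it can help to confirm that the download matches the layout above. The following is a minimal sketch, not part of the repository: the helper name `missing_subdirs` is hypothetical, and the `LOL_Cap` path in the example is a placeholder for wherever the dataset was unpacked.

```python
from pathlib import Path

# Sub-folders taken from the layout above: trainA/testA hold low-light
# images and trainB/testB hold normal-light images.
EXPECTED_SUBDIRS = ("trainA", "trainB", "testA", "testB")


def missing_subdirs(dataset_root):
    """Return the expected sub-folders that are absent under dataset_root."""
    root = Path(dataset_root)
    return [name for name in EXPECTED_SUBDIRS if not (root / name).is_dir()]


if __name__ == "__main__":
    # "LOL_Cap" is a placeholder; point it at your own download location.
    missing = missing_subdirs("LOL_Cap")
    if missing:
        print("Missing folders:", ", ".join(missing))
    else:
        print("Dataset layout looks complete.")
```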

- Low-light enhancement uses neither decomposition nor light-effects guidance.

CUDA_VISIBLE_DEVICES=1 python main.py --dataset LOL --phase train --datasetpath /home1/yeying/data/LOL_Cap/
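
The same training run can be launched from Python, which is convenient when scripting several runs. This is only a sketch: the flags `--dataset`, `--phase`, and `--datasetpath` are the ones shown in the command above, while the helper names `build_train_command` and `launch_training` are hypothetical and not part of the repository.

```python
import os
import subprocess
import sys


def build_train_command(dataset, dataset_path):
    """Assemble the argument list for a training run of main.py."""
    return [
        sys.executable, "main.py",
        "--dataset", dataset,
        "--phase", "train",
        "--datasetpath", dataset_path,
    ]


def launch_training(dataset, dataset_path, gpu_id="0"):
    """Run training with CUDA_VISIBLE_DEVICES set for the child process only."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu_id))
    return subprocess.run(
        build_train_command(dataset, dataset_path), env=env, check=True
    )
```

For example, `launch_training("LOL", "/path/to/LOL_Cap/", gpu_id="1")` mirrors the shell command above, with the dataset path replaced by your own.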

- LOL-test Results (15 test images) [Dropbox] | [BaiduPan (code:lol1)]

These results reproduce Table 3 of the main paper on the LOL-test dataset.

| Learning | Method | PSNR | SSIM |

|---|---|---|---|

| Unsupervised Learning | Ours | 21.521 | 0.7647 |

| N/A | Input | 7.773 | 0.1259 |
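
For reference, the PSNR column follows the standard peak signal-to-noise ratio definition. The sketch below is a dependency-free illustration of that definition on flat sequences of pixel values; it is not the evaluation script behind the table, and the function name `psnr` and the default `max_value=255.0` are assumptions made here.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all pixel values.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(max_value ** 2 / mse)
```

In practice an array library would be used for full images; the flat-sequence form is chosen only to keep the example self-contained.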

- LOL_Cap Results (100 test images) [Dropbox] | [BaiduPan (code:lolc)]

These results reproduce Table 4 of the main paper on the LOL-Real dataset.

| Learning | Method | PSNR | SSIM |

|---|---|---|---|

| Unsupervised Learning | Ours | 25.51 | 0.8015 |

| N/A | Input | 9.72 | 0.1752 |

Re-trained from scratch on LOL_V2_real (698 training images) and tested on LOL_V2_real [Dropbox] | [BaiduPan (code:lol2)].

PSNR: 20.85 (vs EnlightenGAN's 18.23), SSIM: 0.7243 (vs EnlightenGAN's 0.61).

- Download the pre-trained de-light-effects model [Dropbox] | [BaiduPan (code:dele)] and put it in `./results/delighteffects/model/`

- Put the test images in `./light-effects/`

python main_delighteffects.py

Inputs are in `./light-effects/` and outputs are in `./light-effects-output/`.

The inputs and outputs serve as trainA and trainB for the translation network.

demo_all.ipynb

python demo.py

Inputs are in `./light-effects/` and outputs are in `./light-effects-output/DSC01065/`.

The inputs and outputs serve as trainA and trainB for the translation network.

python demo_separation.py --img_name DSC01065.JPG
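
To process a whole folder rather than a single image, the command above can be repeated once per file. The following is a sketch under assumptions: it takes `./light-effects/` as the input folder, assumes `--img_name` expects a bare file name as in the example above, and uses hypothetical helper names (`image_names`, `separate_all`) and an assumed list of image suffixes.

```python
import subprocess
import sys
from pathlib import Path

# Assumed set of accepted image formats; adjust to match your data.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def image_names(input_dir):
    """Return sorted file names of the images directly inside input_dir."""
    return sorted(
        p.name for p in Path(input_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def separate_all(input_dir="./light-effects/"):
    """Call demo_separation.py once per image, stopping on the first failure."""
    for name in image_names(input_dir):
        subprocess.run(
            [sys.executable, "demo_separation.py", "--img_name", name],
            check=True,
        )
```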

demo_decomposition.m

The inputs and initial background results serve as trainA and trainB for the translation network.

| Initial Background Results [Dropbox] | Light-Effects Results [Dropbox] | Shading Results [Dropbox] |

|---|---|---|

| [BaiduPan (code:jjjj)] | [BaiduPan (code:lele)] | [BaiduPan (code:llll)] |

CUDA_VISIBLE_DEVICES=1 python main.py --dataset delighteffects --phase train --datasetpath /home1/yeying/data/light-effects/

- Run the MATLAB code to adaptively fuse the three color channels and output `I_gray`:

checkGrayMerge.m

- Download the fine-tuned VGG model [Dropbox] | [BaiduPan (code:dark)] (fine-tuned on ExDark) and put it in `./VGG_code/ckpts/vgg16_featureextractFalse_ExDark/nets/model_best.tar`

- Obtain structure features:

python test_VGGfeatures.py

The code and models in this repository are licensed under the MIT License for academic and other non-commercial uses.

For commercial use of the code and models, separate commercial licensing is available. Please contact:

- Yeying Jin (jinyeying@u.nus.edu)

- Robby T. Tan (tanrobby@gmail.com)

- Jonathan Tan (jonathan_tano@nus.edu.sg)

The decomposition code is implemented based on DoubleDIP, Layer Separation and LIME.

The translation code is implemented based on U-GAT-IT. We would like to thank the authors.

One trick used in networks.py is to change out = self.UpBlock2(x) to out = (self.UpBlock2(x)+input).tanh() to learn a residual.
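
Written as a formula, the change looks as follows. The symbols are introduced here for illustration only: $x$ is the tensor passed to `UpBlock2` and $I$ is the network input.

$$
\text{out} = \text{UpBlock2}(x) \quad\longrightarrow\quad \text{out} = \tanh\big(\text{UpBlock2}(x) + I\big)
$$

With this form, `UpBlock2` only has to predict a correction that is added to the input, and the $\tanh$ keeps the output within $(-1, 1)$.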

If this work is useful for your research, please cite our paper.

@inproceedings{jin2022unsupervised,

title={Unsupervised night image enhancement: When layer decomposition meets light-effects suppression},

author={Jin, Yeying and Yang, Wenhan and Tan, Robby T},

booktitle={European Conference on Computer Vision},

pages={404--421},

year={2022},

organization={Springer}

}

@inproceedings{jin2023enhancing,

title={Enhancing visibility in nighttime haze images using guided apsf and gradient adaptive convolution},

author={Jin, Yeying and Lin, Beibei and Yan, Wending and Yuan, Yuan and Ye, Wei and Tan, Robby T},

booktitle={Proceedings of the 31st ACM International Conference on Multimedia},

pages={2446--2457},

year={2023}

}

If the light-effects data is useful for your research, please cite the paper.

@inproceedings{sharma2021nighttime,

title={Nighttime Visibility Enhancement by Increasing the Dynamic Range and Suppression of Light Effects},

author={Sharma, Aashish and Tan, Robby T},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={11977--11986},

year={2021}

}