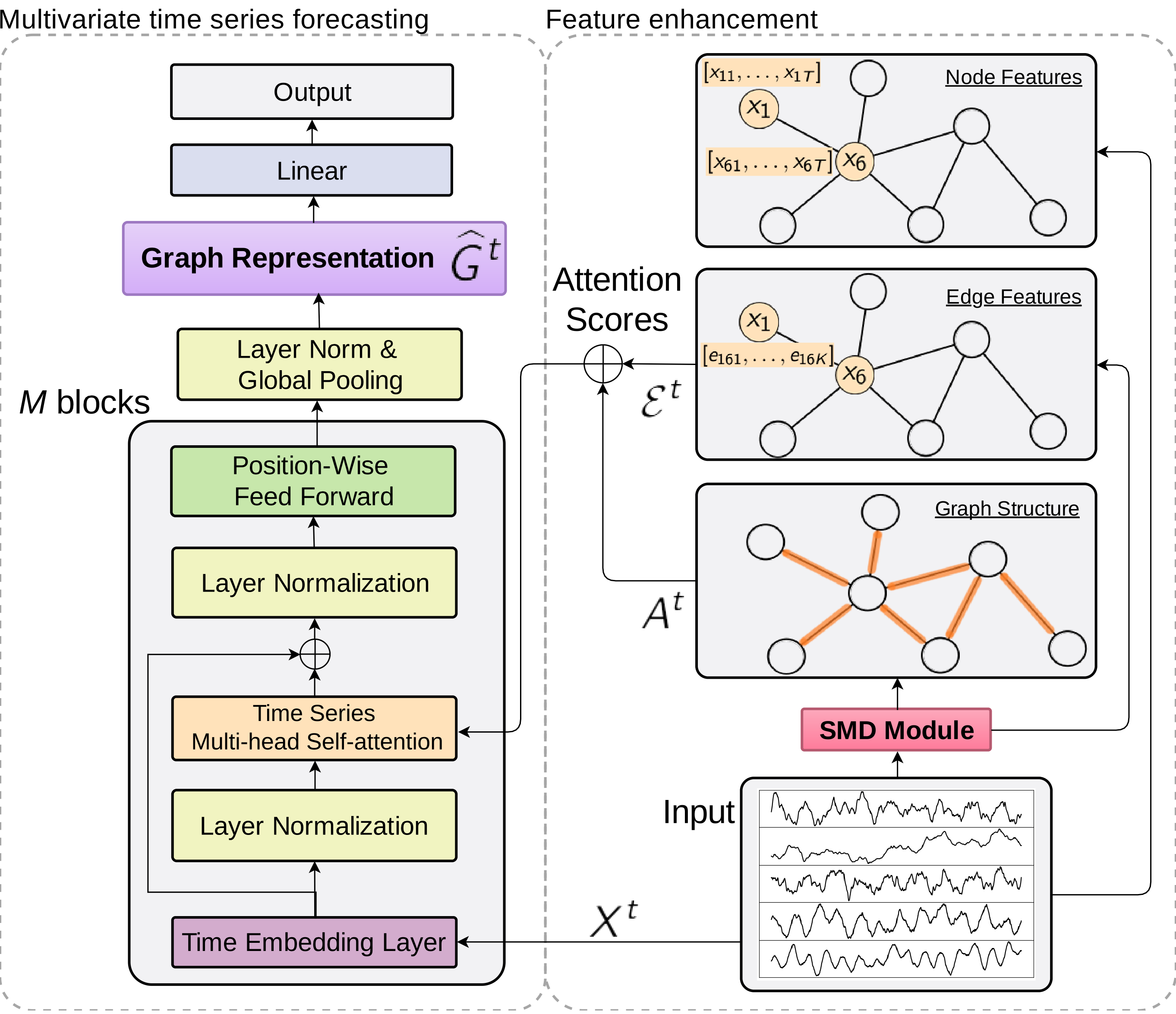

The official implementation of the Time Series Attention Transformer (TSAT).

- main.py: main TSAT model interface with training and testing

- TSAT.py: TSAT class implementation

- utils.py: utility functions and dataset parameters

- dataset_TSAT_ETTm1_48.py: generates the graph from the ETTm1 dataset

Download the Electricity Transformer Temperature Dataset from https://github.com/zhouhaoyi/ETDataset. Uncompress the downloaded files and move the .csv files to the Data folder.

The Electricity consumption dataset can be found at https://github.com/laiguokun/multivariate-time-series-data.
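As a quick sanity check after moving the ETT files, the following minimal sketch reads a CSV into one list of values per series. It assumes the file has a `date` timestamp column followed by numeric columns, and that it sits at `Data/ETTm1.csv`. The helper name `read_series` is hypothetical and is not part of this repository.

```python
import csv
from pathlib import Path


def read_series(lines, time_column="date"):
    """Parse CSV lines into {column_name: [float, ...]}, skipping the timestamp column.

    `lines` can be an open file or any iterable of CSV text lines.
    """
    reader = csv.DictReader(lines)
    # Every column except the timestamp is treated as one time series (one node).
    series = {name: [] for name in reader.fieldnames if name != time_column}
    for row in reader:
        for name in series:
            series[name].append(float(row[name]))
    return series


# Assumed location; adjust if your Data folder lives elsewhere.
csv_path = Path("Data") / "ETTm1.csv"
if csv_path.exists():
    with csv_path.open(newline="") as f:
        data = read_series(f)
    print("series found:", list(data))
```

The number of keys in the returned dict is the number of time series in the file, which should match the n_nodes parameter.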

The parameter settings can be found in utils.py.

- l_backcast: length of the backcast

- d_edge: number of IMFs (intrinsic mode functions) used

- d_model: the time embedding dimension

- N: number of Self_Attention_Block layers

- h: number of heads in multi-head attention

- N_dense: number of linear layers in the sequential feed-forward layers

- n_output: number of outputs (forecast length $\times$ number of nodes)

- n_nodes: number of nodes (i.e., the number of time series)

- lambda: the initial value of the trainable lambda $\alpha_i$

- dense_output_nonlinearity: the nonlinearity function in the dense output layer
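To show how these parameters relate to each other, here is a minimal sketch of a parameter dictionary. The key names follow the list above, but the helper `make_params`, the arguments `l_forecast` and `lambda_init`, and all default values are hypothetical illustrations. They are not copied from utils.py, so check that file for the actual settings.

```python
def make_params(l_backcast, l_forecast, n_nodes, d_edge=4, d_model=64,
                N=2, h=8, N_dense=2, lambda_init=0.5,
                dense_output_nonlinearity="relu"):
    """Build an illustrative parameter dict; default values are placeholders."""
    return {
        "l_backcast": l_backcast,    # length of the input (backcast) window
        "d_edge": d_edge,            # number of IMFs used
        "d_model": d_model,          # time embedding dimension
        "N": N,                      # number of Self_Attention_Block layers
        "h": h,                      # number of attention heads
        "N_dense": N_dense,          # linear layers in the feed-forward part
        # One output per forecast step per node.
        "n_output": l_forecast * n_nodes,
        "n_nodes": n_nodes,          # number of time series
        "lambda": lambda_init,       # initial value of the trainable lambda
        "dense_output_nonlinearity": dense_output_nonlinearity,
    }


# Example: 7 series, a 48-step forecast, and a 96-step backcast (illustrative).
params = make_params(l_backcast=96, l_forecast=48, n_nodes=7)
print(params["n_output"])  # 48 * 7 = 336
```

Deriving n_output from the forecast length and n_nodes, rather than setting it by hand, keeps the two values consistent when switching datasets.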

- Python 3.8

- PyTorch = 1.8.0 (with corresponding CUDA version)

- Pandas = 1.4.0

- Numpy = 1.22.2

- PyEMD = 1.2.1

Dependencies can be installed using the following command:

    pip install -r requirements.txt

If you have any questions, please feel free to contact William Ng (Email: william.ng@koiinvestments.com).