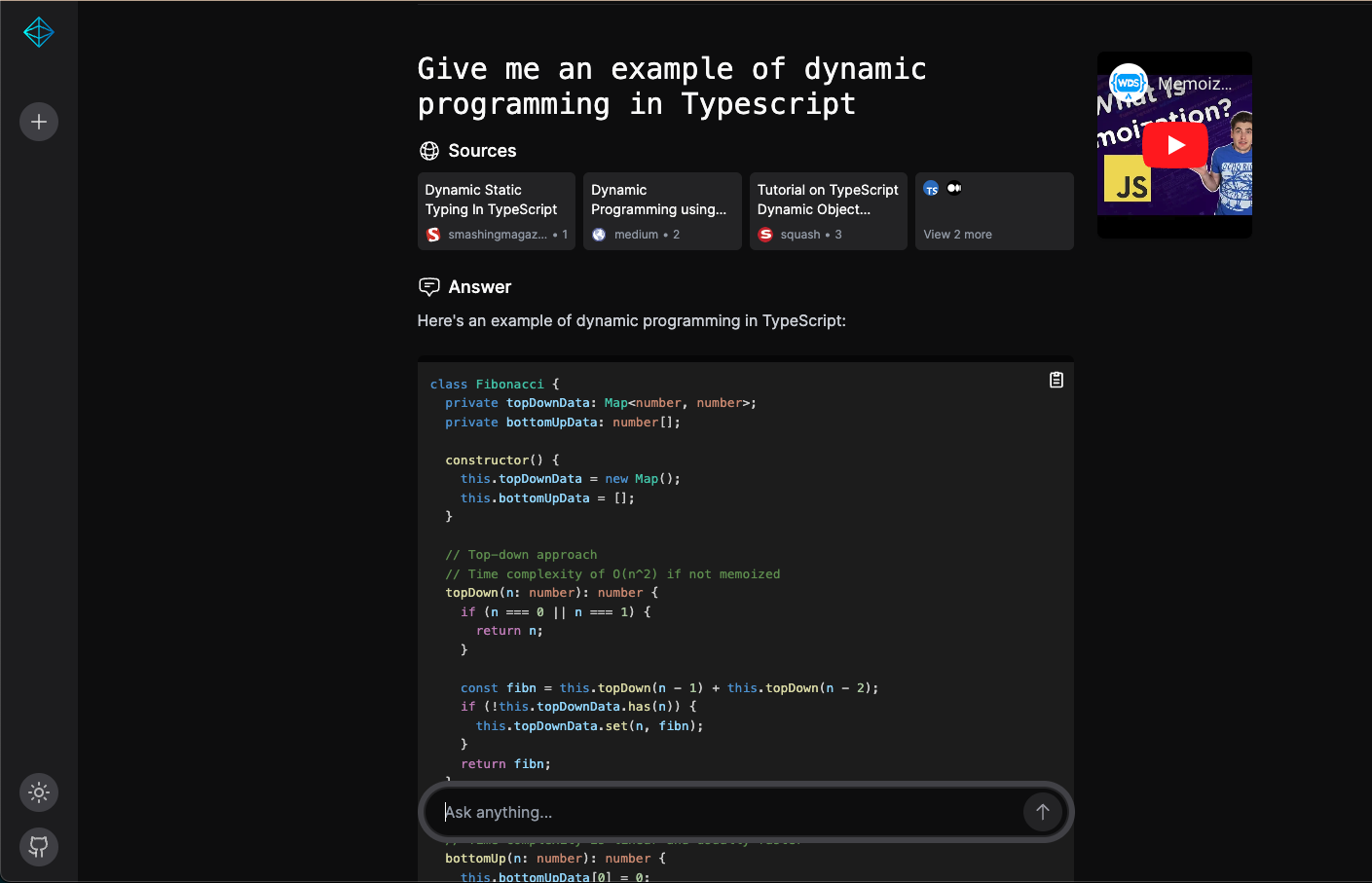

Sensei Search is an AI-powered answer engine.

The key takeaways and experiences from working with open source Large Language Models are summarized in a detailed discussion on Reddit.

Sensei Search is built using the following technologies:

- Frontend: Next.js, Tailwind CSS

- Backend: FastAPI, OpenAI client

- LLMs: Command-R, Qwen-2-72b-instruct, WizardLM-2 8x22B, Claude Haiku, GPT-3.5-turbo

- Search: SearxNG, Bing

- Memory: Redis

- Deployment: AWS, Paka

You can run Sensei Search either locally on your machine or in the cloud.

Follow these steps to run Sensei Search locally:

- Prepare the backend environment:

      cd sensei_root_folder/backend/
      mv .env.development.example .env.development

  Edit `.env.development` as needed. The example environment assumes you run models through Ollama. Make sure you have reasonably good GPUs to run the command-r/Qwen-2-72b-instruct/WizardLM-2 8x22B model.

- No need to do anything for the frontend.

- Run the app with the following command:

      cd sensei_root_folder/
      docker compose up

- Open your browser and go to http://localhost:3000
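The environment preparation step can also be made repeatable. The following is a minimal sketch, not part of the project: `prepare_backend_env` is a hypothetical helper, and it copies the example file with `cp` rather than moving it with `mv` (an assumption, so that the example stays in the checkout and an existing `.env.development` is never overwritten).

```shell
# Hypothetical helper: create .env.development from the example file
# in the given backend directory, unless it already exists.
# Assumption: cp is used instead of mv so the example file is preserved.
prepare_backend_env() {
  backend_dir="$1"
  example="$backend_dir/.env.development.example"
  target="$backend_dir/.env.development"
  if [ -f "$target" ]; then
    # Never overwrite a file the user may already have edited.
    echo "kept $target"
  elif [ -f "$example" ]; then
    cp "$example" "$target"
    echo "created $target"
  else
    echo "missing $example" >&2
    return 1
  fi
}
```

After defining the function, something like `prepare_backend_env sensei_root_folder/backend` followed by `docker compose up` from `sensei_root_folder/` would mirror the local steps. You still need to edit `.env.development` by hand.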

We deploy the app to AWS using paka. Please note that the models require GPU instances to run.

Before you start, make sure you have:

- An AWS account

- Requested GPU quota in your AWS account
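A quick way to confirm the first prerequisite is to check that the AWS CLI is installed and that your credentials resolve to an account. This is a sketch under assumptions: it presumes you use the AWS CLI, and `require_cmd` and `check_aws_prereqs` are hypothetical helper names. It does not verify the GPU quota, which you still need to confirm in your AWS account.

```shell
# Hypothetical helper: report whether a command is available on PATH.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found $1"
  else
    echo "missing $1" >&2
    return 1
  fi
}

# Hypothetical check: the AWS CLI must exist, then
# `aws sts get-caller-identity` prints the account and identity
# behind the active credentials (it fails if none are configured).
check_aws_prereqs() {
  require_cmd aws && aws sts get-caller-identity
}
```

Calling `check_aws_prereqs` before provisioning should fail early if the CLI or credentials are missing.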

The configuration for the cluster is located in the cluster.yaml file. You'll need to replace the HF_TOKEN value in cluster.yaml with your own Hugging Face token. This is necessary because the mistral-7b and command-r models require your account to have accepted their terms and conditions.

Follow these steps to run Sensei Search in the cloud:

- Install paka:

      pip install paka

- Provision the cluster in AWS:

      make provision-prod

- Deploy the backend:

      make deploy-backend

- Deploy the frontend:

      make deploy-frontend

- Get the URL of the frontend:

      paka function list

- Open the URL in your browser.
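The cloud steps above can be chained so that a failure stops the sequence before later steps run. This is a minimal sketch rather than a script shipped with the project: `run_step`, `deploy_sensei`, and the `DRY_RUN` switch are hypothetical names, and it assumes you run it from the directory that contains the Makefile.

```shell
# Hypothetical helper: echo a command, then run it unless DRY_RUN=1.
run_step() {
  echo "+ $*"
  if [ "${DRY_RUN:-0}" != "1" ]; then
    "$@"
  fi
}

# Run the documented deployment commands in order.
# The && chain stops at the first command that fails.
deploy_sensei() {
  run_step pip install paka &&
    run_step make provision-prod &&
    run_step make deploy-backend &&
    run_step make deploy-frontend &&
    run_step paka function list
}
```

Running `DRY_RUN=1 deploy_sensei` only prints the commands, which is a cheap way to review the sequence before provisioning GPU instances. Running `deploy_sensei` without it executes them, and the final `paka function list` output is where you look for the frontend URL.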