... deployed on MicroK8s!

The READMEs in this repository aim to be technical and to help you get started quickly.

For more stories and explanations, read the Ubuntu blog:

- Intel and Canonical to secure containers software supply chain (Intro)

- How to colourise black & white pictures: OpenVINO™ on Ubuntu containers demo (Part 1) (Architecture)

- How to colourise black & white pictures with OpenVINO™ on Ubuntu containers (Part 2) (How to)

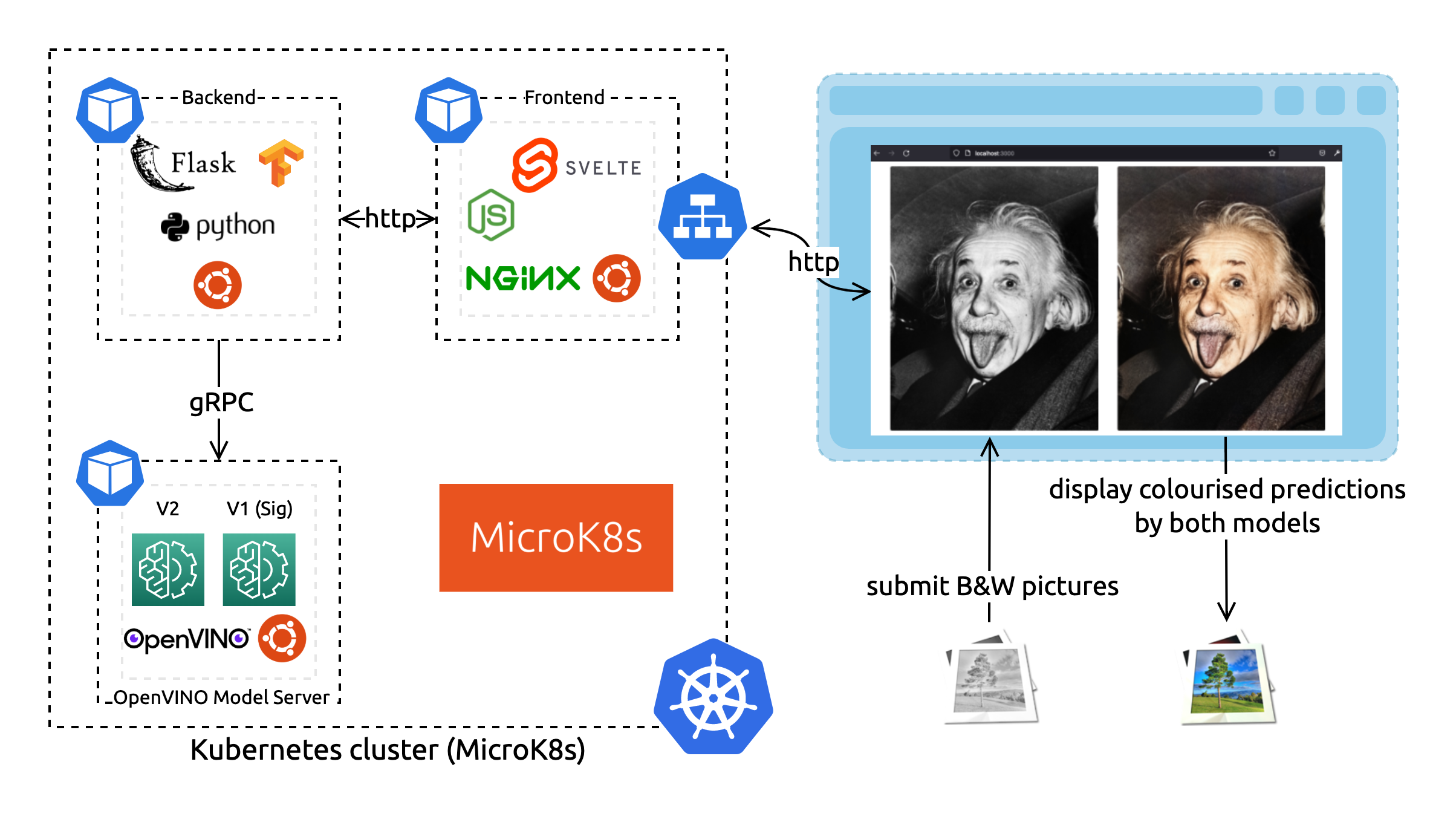

Our architecture consists of three microservices: a backend, a frontend, and the OpenVINO Model Server to serve the neural network predictions. The Model Server component will serve two different demo neural networks to compare the results (V1 and V2). All these components use the Ubuntu base image for a consistent software ecosystem and containerised environment.

Backend: the interface between the frontend and the OpenVINO Model Server (OVMS).

Model Server: serves the colourisation model.

You can try the Model Server on its own by running:

# Build the image

docker build modelserver -t modelserver:latest

# Deploy locally with Docker

docker run --rm -it -p 8001:8001 -p 9001:9001 modelserver:latest --config_path /models_config.json --port 9001 --rest_port 8001

# The REST API is available at http://localhost:8001/
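Once the container is up, one way to confirm that the models loaded is to query the REST API. The sketch below assumes the OpenVINO Model Server config status endpoint (`/v1/config`) is reachable on the REST port mapped above. The function names are illustrative and not part of this repository.

```shell
# Build the URL of the (assumed) OVMS config status endpoint.
# Defaults match the port mapping of the `docker run` command above.
ovms_config_url() {
  printf 'http://%s:%s/v1/config\n' "${1:-localhost}" "${2:-8001}"
}

# Print the status of the served models as JSON.
# Requires curl and a running modelserver container.
ovms_config() {
  curl --silent --show-error --fail "$(ovms_config_url "$@")"
}
```

Calling `ovms_config` with no arguments targets `localhost:8001`. An error most likely means the server is still starting or the port mapping differs from the one assumed here.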

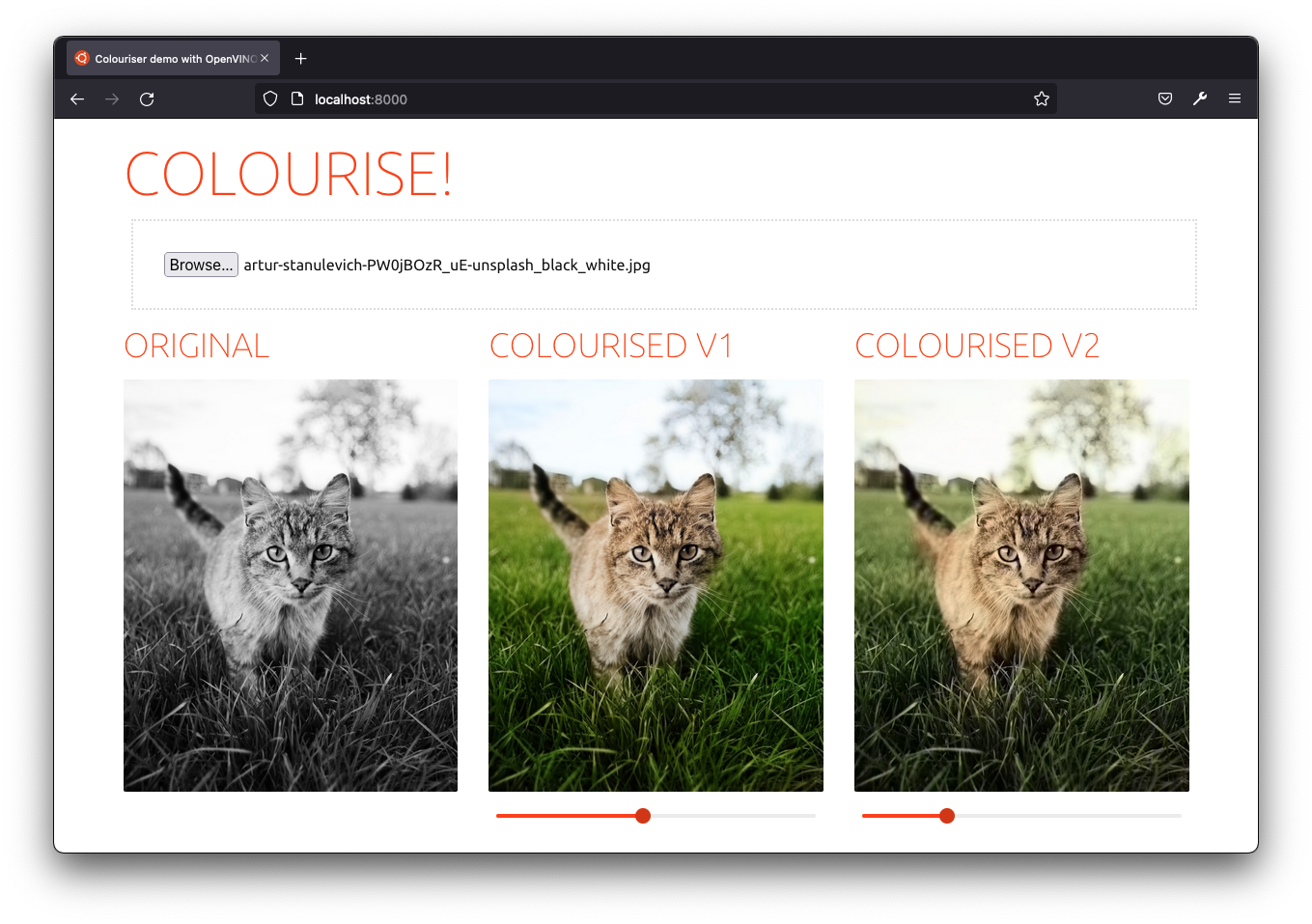

# Read more at https://docs.openvino.ai/

Frontend: a sweet interface to try the demo colourisation neural networks!

You can skip this step if you already have a Kubernetes cluster at hand.

Set up MicroK8s to quickly get a Kubernetes cluster on your machine.

# https://microk8s.io/docs

sudo snap install microk8s --classic

# Add current user ($USER) to the microk8s group

sudo usermod -a -G microk8s $USER && sudo chown -f -R $USER ~/.kube

newgrp microk8s

# Enable the DNS, Storage, and Registry addons required later

microk8s enable dns storage registry

# Wait for the cluster to be in a Ready state

microk8s status --wait-ready

# Create an alias to enable the `kubectl` command

sudo snap alias microk8s.kubectl kubectl

Every component comes with a Dockerfile to build itself in a standard environment and ship with its deployment dependencies (read What are containers). All components build an Ubuntu-based Docker image for a consistent developer experience.

Before deploying our microservices with Kubernetes to run the colouriser app, we need to build the images and upload them to a registry accessible from the Kubernetes cluster.

Expect this step to take some time, as there are many container images and dependencies to fetch in order to build and deploy the components. Make sure you are on a fast network connection (and not billed per data usage!).
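The build and push commands in this step follow the same pattern for every component, so they can be wrapped in a loop. This is a minimal sketch, assuming the three component directories (`backend`, `modelserver`, `frontend`) and the `localhost:32000` registry used in this README. The helper names and the `DRY_RUN` switch are hypothetical additions for illustration.

```shell
# Registry exposed by the MicroK8s registry addon (as used in this README).
REGISTRY="${REGISTRY:-localhost:32000}"

# Compose the full image reference for a component, e.g. backend.
image_ref() {
  printf '%s/%s:%s\n' "$REGISTRY" "$1" "${2:-latest}"
}

# Build and push each component, stopping at the first failure.
# Set DRY_RUN=1 to print the docker commands instead of running them.
build_and_push() {
  for component in "$@"; do
    ref="$(image_ref "$component")"
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "docker build $component -t $ref"
      echo "docker push $ref"
    else
      docker build "$component" -t "$ref" && docker push "$ref" || return 1
    fi
  done
}
```

A typical call would be `build_and_push backend modelserver frontend`, run from the repository root. Prefixing it with `DRY_RUN=1` shows the commands first without touching Docker.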

docker build backend -t localhost:32000/backend:latest

docker push localhost:32000/backend:latest

docker build modelserver -t localhost:32000/modelserver:latest

docker push localhost:32000/modelserver:latest

docker build frontend -t localhost:32000/frontend:latest

docker push localhost:32000/frontend:latest

Apply the deployments and services configuration files:

kubectl apply -f k8s

After a few minutes, that's it! Access the application at http://localhost:30000/.
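To check readiness from a script instead of watching the pod listing by eye, the sketch below parses the output of `kubectl get pods --no-headers` and succeeds only when every pod reports `Running`. The function names and the retry values are assumptions for illustration. The check looks at the STATUS column only, so it is a coarse signal rather than a full health check.

```shell
# Read `kubectl get pods --no-headers` output on stdin and succeed only
# if at least one pod is listed and every pod has STATUS "Running".
all_pods_running() {
  awk '
    NF >= 3 { total++; if ($3 == "Running") running++ }
    END { exit !(total > 0 && running == total) }
  '
}

# Poll the cluster until all pods are running or the attempts run out.
# The first argument is the number of attempts (default 30), 10 s apart.
wait_for_pods() {
  attempts="${1:-30}"
  while [ "$attempts" -gt 0 ]; do
    if kubectl get pods --no-headers 2>/dev/null | all_pods_running; then
      return 0
    fi
    attempts=$((attempts - 1))
    sleep 10
  done
  return 1
}
```

For example, `wait_for_pods && echo "colouriser is up"` blocks for up to about five minutes with the default settings. `kubectl rollout status` on each deployment is an alternative that also waits for readiness.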

# Watch the application being deployed

$ watch kubectl get all

NAME READY STATUS RESTARTS AGE

pod/modelserver-6fdcc8f5f7-czx4m 1/1 Running 0 3m44s

pod/frontend-7d59b8dbd6-fw5cm 1/1 Running 0 3m44s

pod/backend-86d49b7f89-g8lnd 1/1 Running 0 3m44s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 27m

service/backend ClusterIP 10.152.183.156 <none> 5000/TCP 3m44s

service/frontend NodePort 10.152.183.47 <none> 3000:30000/TCP 3m44s

service/modelserver ClusterIP 10.152.183.219 <none> 9001/TCP 3m44s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/modelserver 1/1 1 1 3m44s

deployment.apps/frontend 1/1 1 1 3m44s

deployment.apps/backend 1/1 1 1 3m44s

NAME DESIRED CURRENT READY AGE

replicaset.apps/modelserver-6fdcc8f5f7 1 1 1 3m44s

replicaset.apps/frontend-7d59b8dbd6 1 1 1 3m44s

replicaset.apps/backend-86d49b7f89 1 1 1 3m44s

To get started quickly, we also provide a docker-compose.yaml file.

# start the project

docker-compose up

# stop the project

docker-compose stop

# clean up

docker-compose down --remove-orphans

If you make changes to the code, you'll need to rebuild the updated components explicitly:

# rebuild components and deploy the changes

docker-compose up --build

# rebuild a specific component (needs deploy)

docker-compose build <component-name>

Access the frontend at http://localhost:8000/ and the backend at http://localhost:8080/.
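After `docker-compose up`, the services can take a moment to start answering. The sketch below polls a URL until it responds, assuming curl is installed. The `wait_for_url` helper and the `PROBE` override are hypothetical, and whether the backend answers a plain GET on `/` with a success status is also an assumption.

```shell
# Command used to probe a URL; override PROBE to substitute another check.
PROBE="${PROBE:-curl --silent --fail --output /dev/null}"

# Retry the probe until it succeeds.
# Arguments: URL, number of attempts (default 30), delay in seconds (default 2).
wait_for_url() {
  url="$1"
  attempts="${2:-30}"
  delay="${3:-2}"
  while [ "$attempts" -gt 0 ]; do
    # $PROBE is intentionally unquoted so its options split into words.
    if $PROBE "$url"; then
      return 0
    fi
    attempts=$((attempts - 1))
    sleep "$delay"
  done
  return 1
}
```

For example, `wait_for_url http://localhost:8000/ && echo "frontend is up"` waits up to about a minute with the defaults.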