- Overview

- Where to Start

- Confluent Cloud

- Stream Processing

- Data Pipelines

- Confluent Platform

- Build Your Own

- Additional Demos

This is a curated list of demos that showcase Apache Kafka® event stream processing on Confluent Platform. Confluent Platform is an event stream processing platform that lets you process, organize, and manage large volumes of streaming data across cloud, on-prem, and serverless deployments.

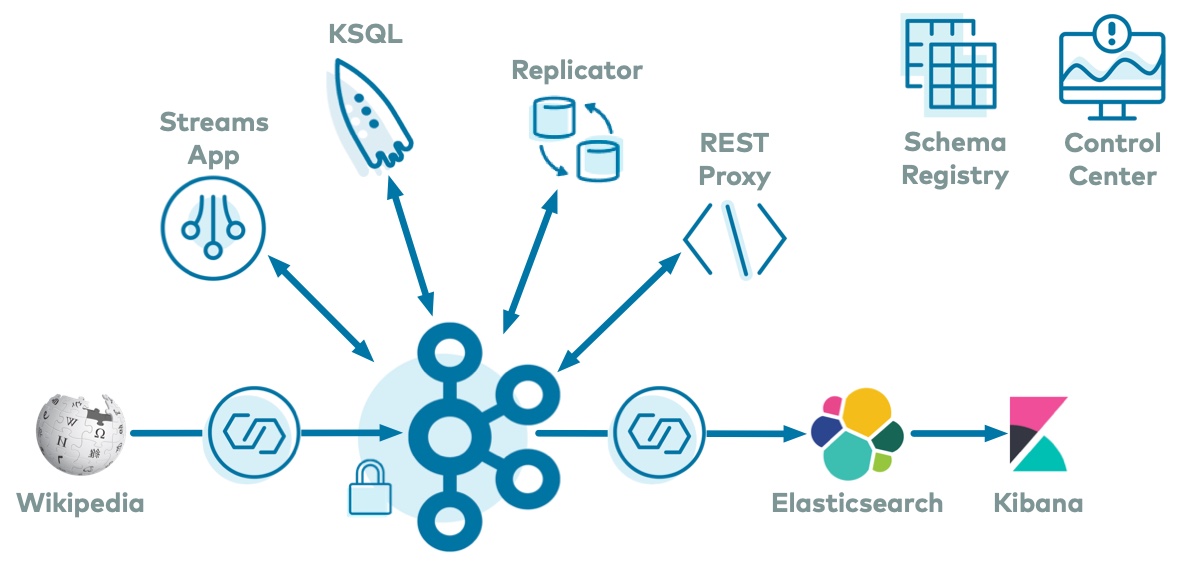

The best demo to start with is cp-demo, which spins up a Kafka event streaming application that uses ksqlDB for stream processing and has many security features enabled. It runs an end-to-end streaming ETL pipeline: a source connector pulls from live data, and a sink connector writes to Elasticsearch, with Kibana for visualizations.

cp-demo also comes with a tutorial and is a great configuration reference for Confluent Platform.
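To make the sink side of such a pipeline concrete, here is a minimal sketch of the JSON body that the Kafka Connect REST API accepts on `POST /connectors` to register an Elasticsearch sink connector. The connector name, topic name, and Elasticsearch URL are hypothetical placeholders, and this is not the configuration cp-demo itself ships (cp-demo enables security settings that are omitted here).

```python
import json


def elasticsearch_sink_request(name, topics, es_url):
    """Build the request body for POST /connectors on the Kafka Connect REST API.

    All argument values are supplied by the caller; nothing here is taken
    from cp-demo's real configuration.
    """
    config = {
        # Connector class of the Confluent Elasticsearch sink connector.
        "connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector",
        # Kafka Connect expects a comma-separated list of topic names.
        "topics": ",".join(topics),
        "connection.url": es_url,
        # Ignore record keys and schemas when indexing documents.
        "key.ignore": "true",
        "schema.ignore": "true",
        "tasks.max": "1",
    }
    return {"name": name, "config": config}


# Hypothetical values, for illustration only.
body = elasticsearch_sink_request(
    name="es-sink-example",
    topics=["example-topic"],
    es_url="http://elasticsearch:9200",
)
payload = json.dumps(body, indent=2)
print(payload)
```

The resulting `payload` string could then be sent to a Connect worker with any HTTP client, using `Content-Type: application/json`. In a secured deployment such as cp-demo, additional TLS and authentication properties would be required.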

| Demo | Local | Docker | Description |

|---|---|---|---|

| Beginner Cloud | Y | N | Fully automated demo interacting with your Confluent Cloud cluster using Confluent Cloud CLI  |

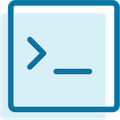

| Clients to Cloud | Y | N | Client applications in different programming languages connecting to Confluent Cloud  |

| Cloud ETL | Y | N | Cloud ETL solution using fully-managed Confluent Cloud connectors and fully-managed ksqlDB  |

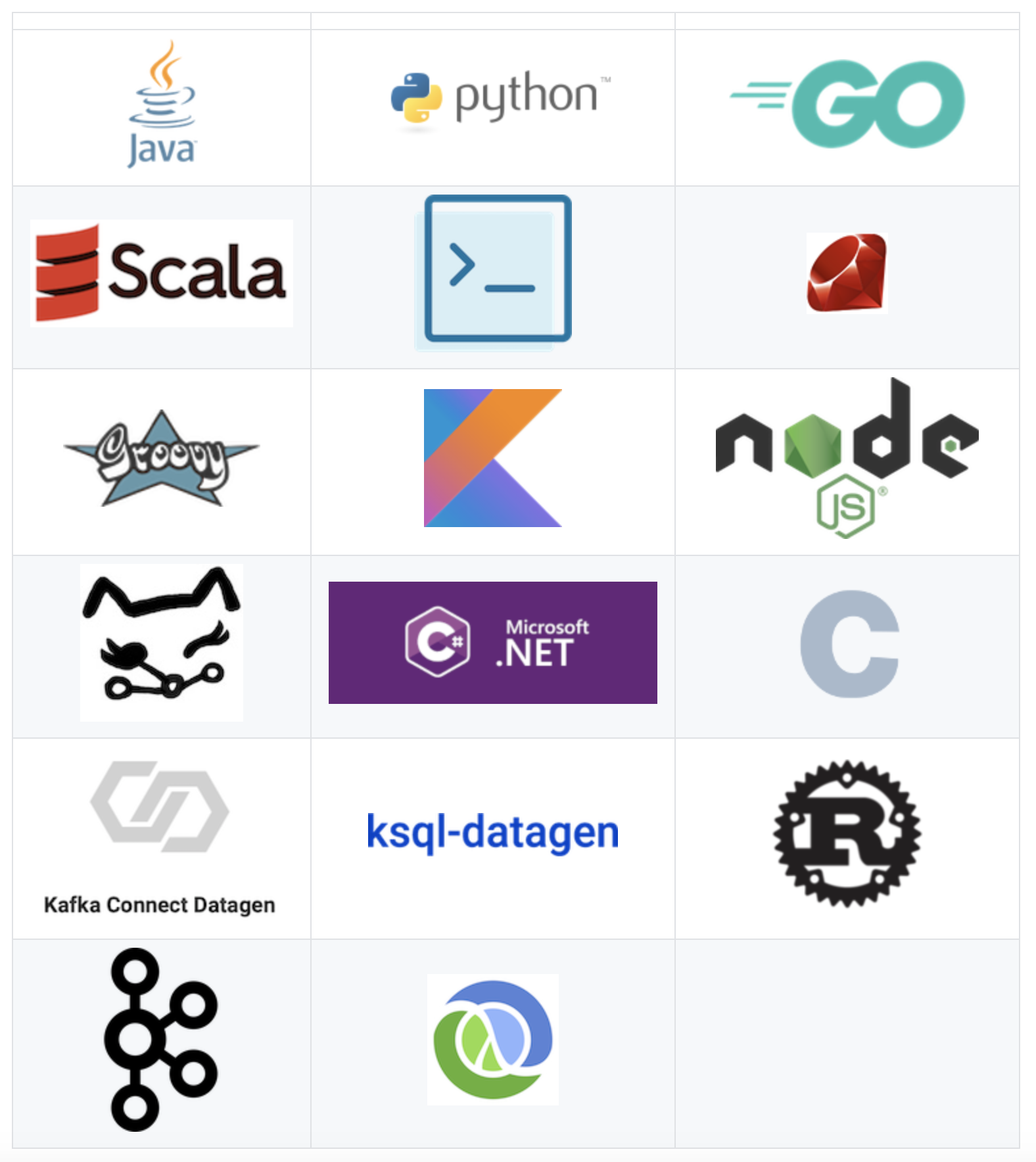

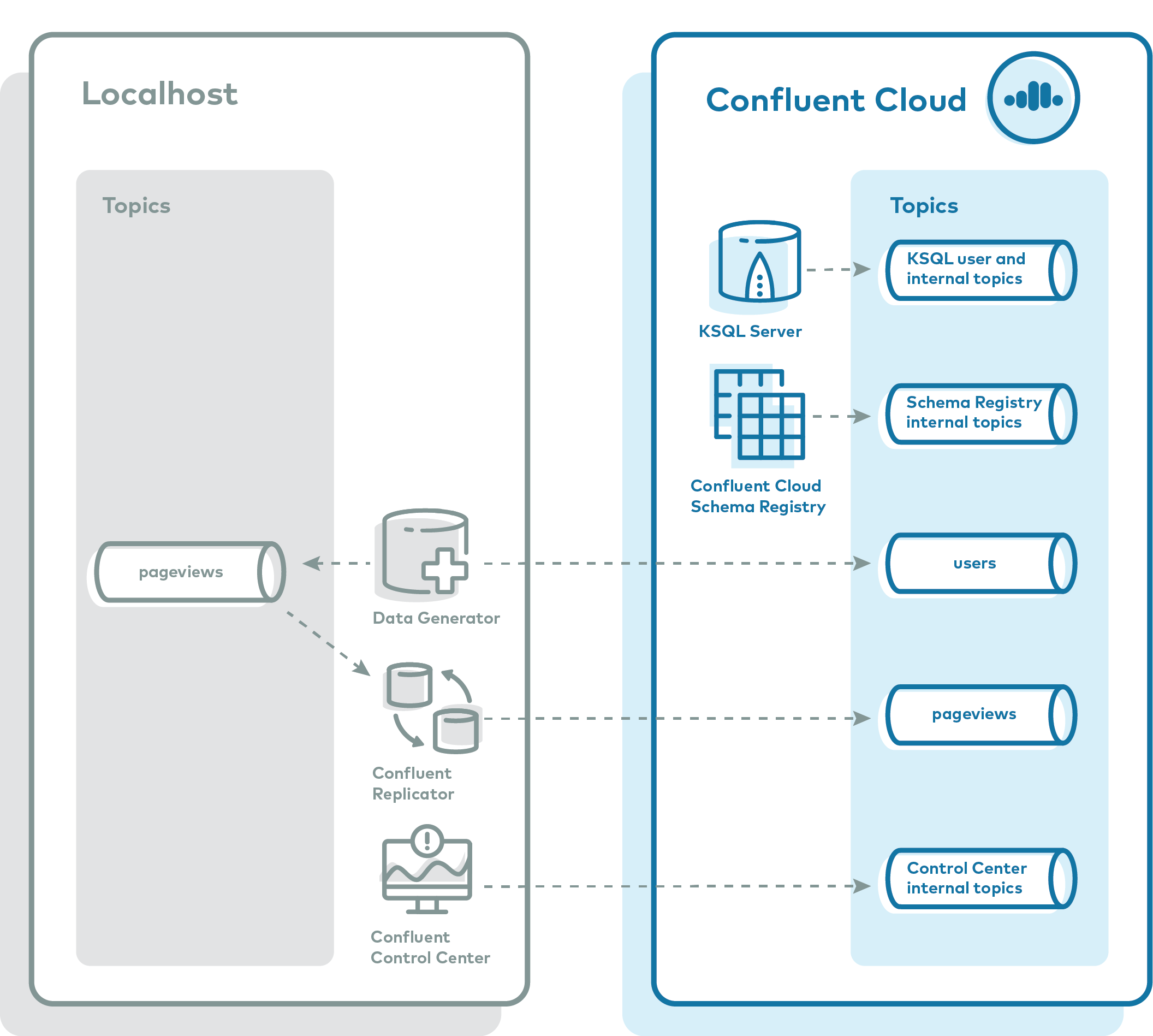

| On-Prem Kafka to Cloud | Y | Y | This more advanced demo showcases an on-prem Kafka cluster and Confluent Cloud cluster, and data copied between them with Confluent Replicator  |

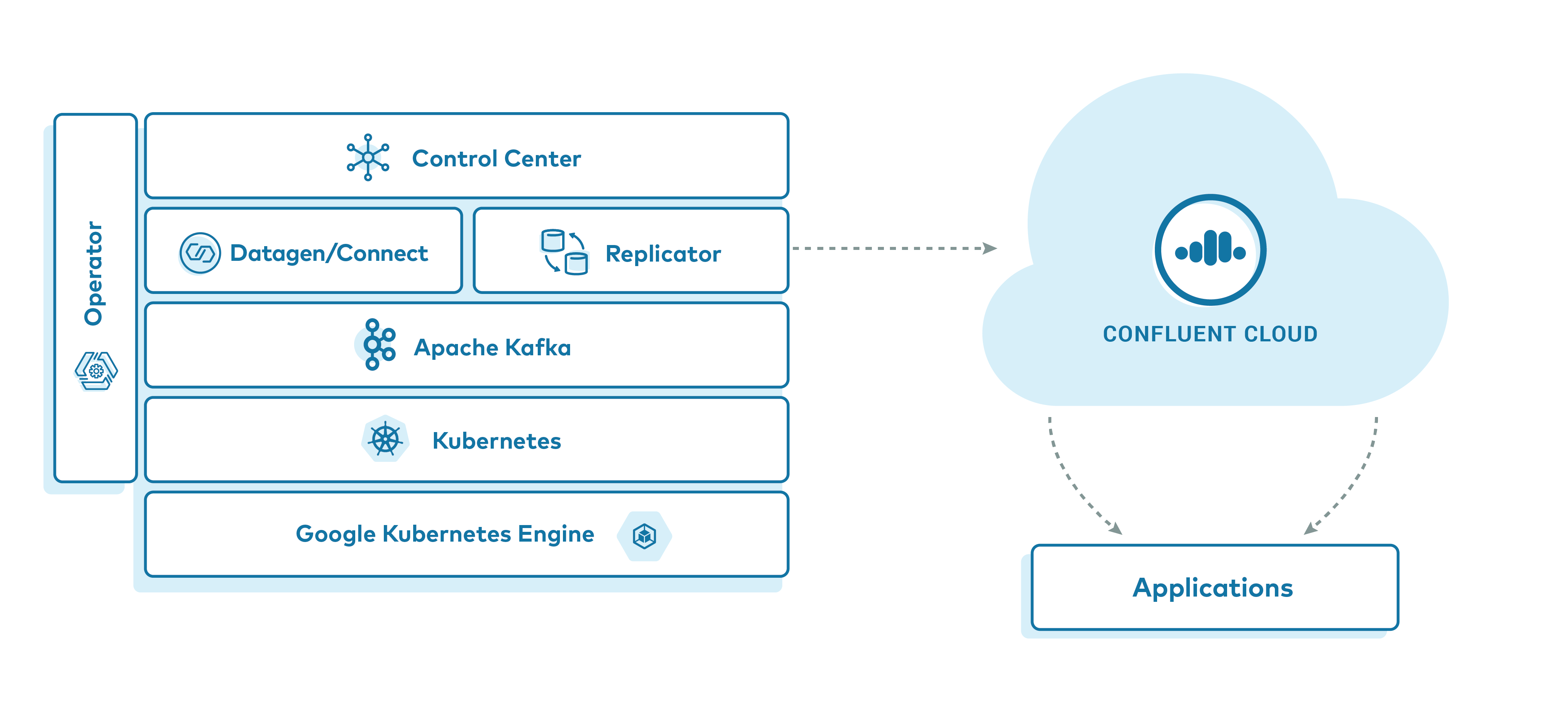

| GKE to Cloud | N | Y | Uses Google Kubernetes Engine, Confluent Cloud, and Confluent Replicator to explore a multicloud deployment  |

| Demo | Local | Docker | Description |

|---|---|---|---|

| Clickstream | N | Y | Automated version of the ksqlDB clickstream demo  |

| Kafka Tutorials | Y | Y | Collection of common event streaming use cases, with each tutorial featuring an example scenario and several complete code solutions  |

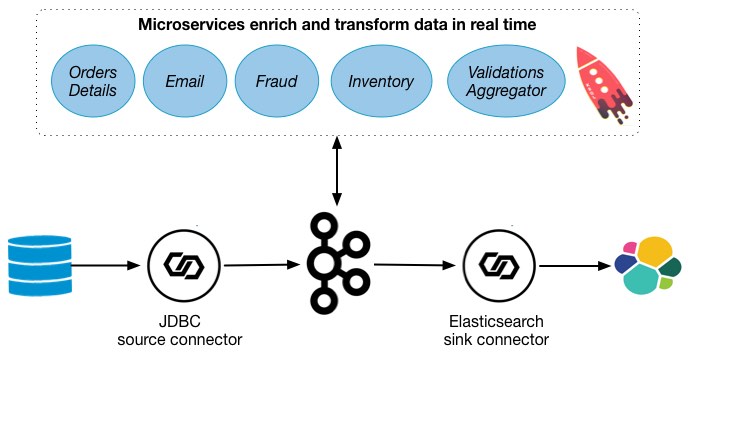

| Microservices ecosystem | Y | N | Microservices orders Demo Application integrated into the Confluent Platform  |

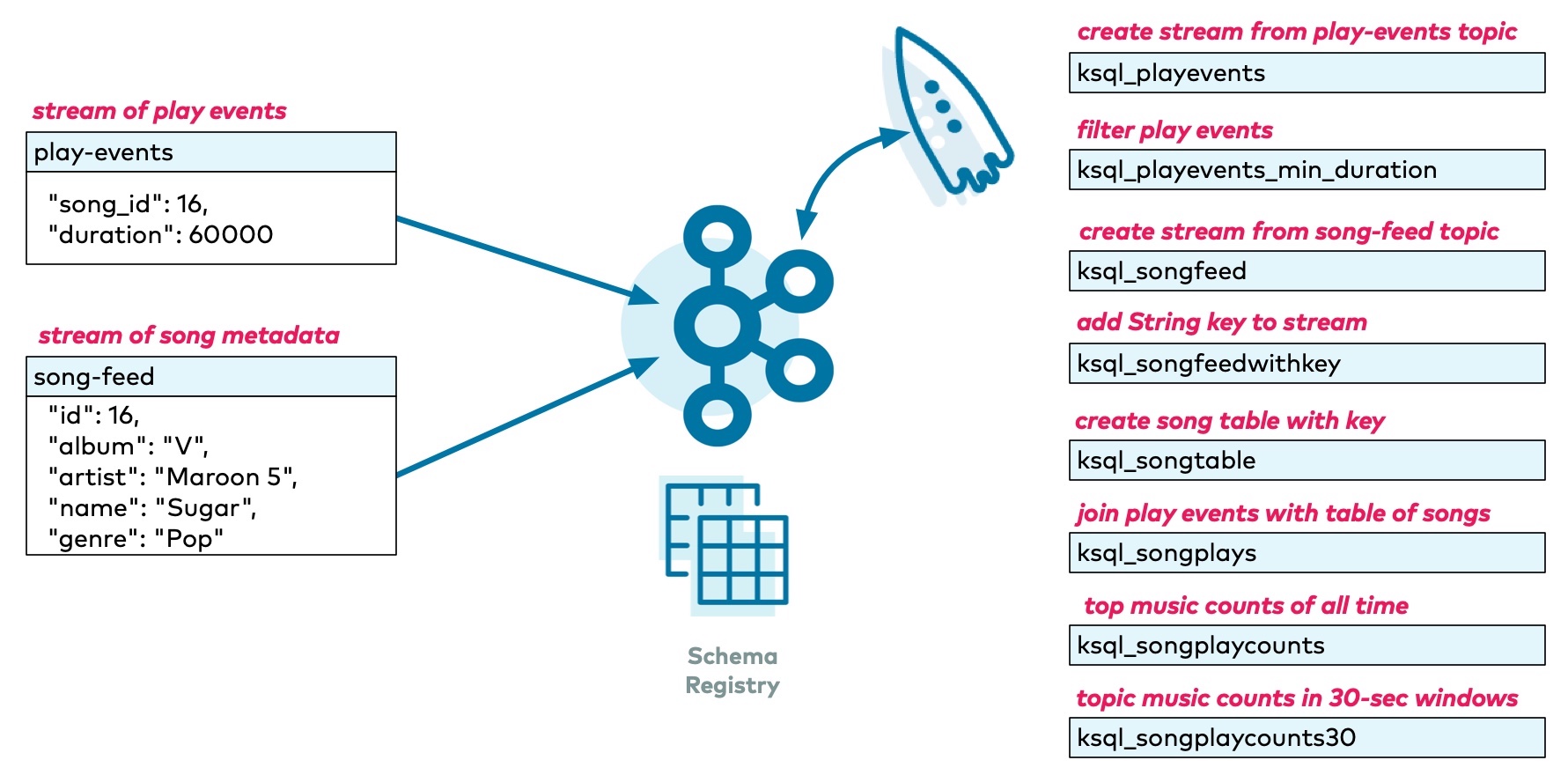

| Music demo | Y | Y | ksqlDB version of the Kafka Streams Demo Application  |

| Demo | Local | Docker | Description |

|---|---|---|---|

| Clients | Y | N | Client applications in different programming languages  |

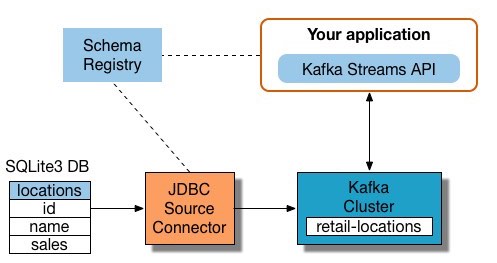

| Connect and Kafka Streams | Y | N | Demonstrate various ways, with and without Kafka Connect, to get data into Kafka topics and then loaded for use by the Kafka Streams API  |

| Demo | Local | Docker | Description |

|---|---|---|---|

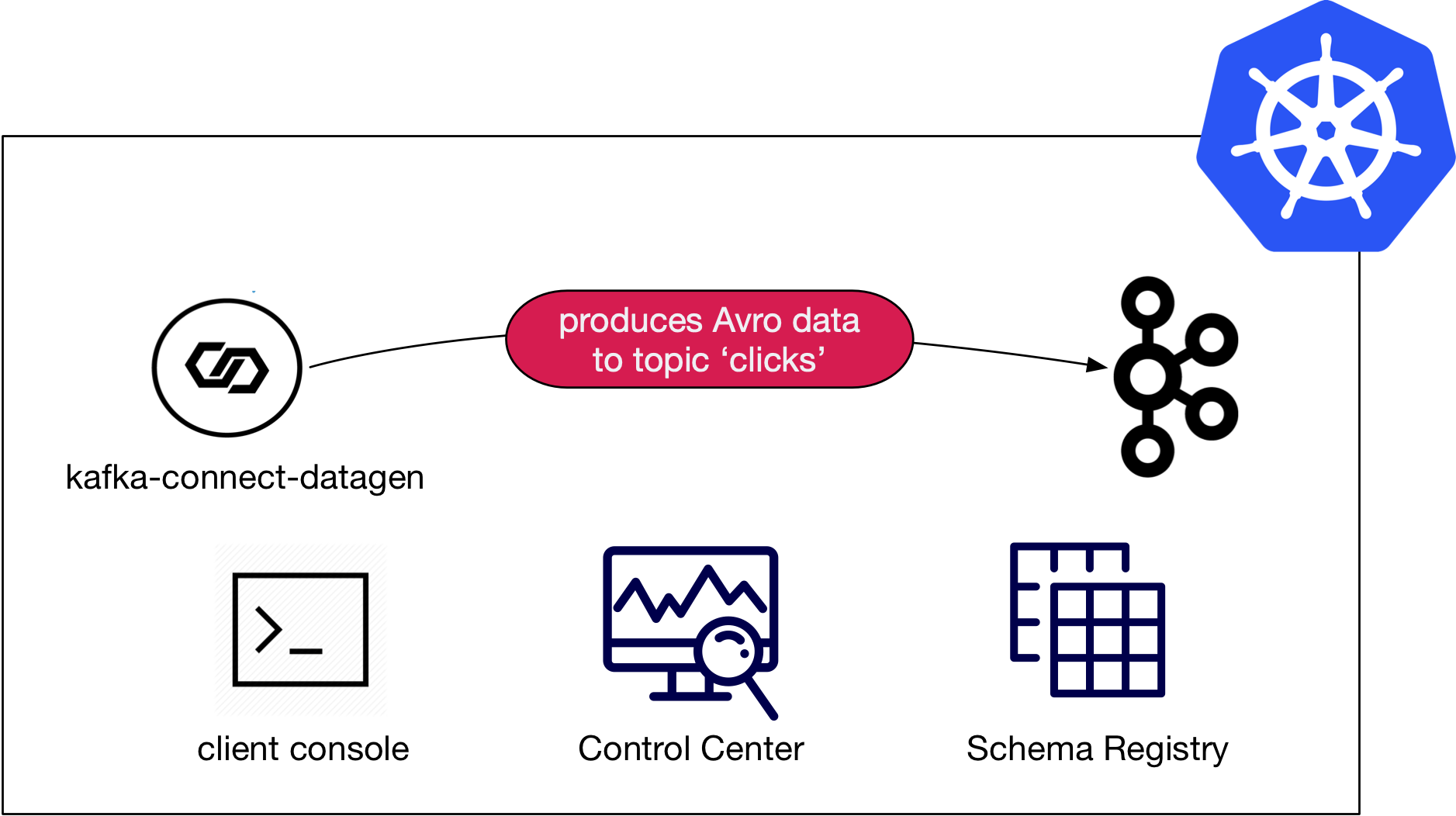

| Avro | Y | N | Client applications using Avro and Confluent Schema Registry  |

| CP Demo | N | Y | Confluent Platform demo (cp-demo) with a playbook for Kafka event streaming ETL deployments  |

| Kubernetes | N | Y | Demonstrations of Confluent Platform deployments using the Confluent Operator  |

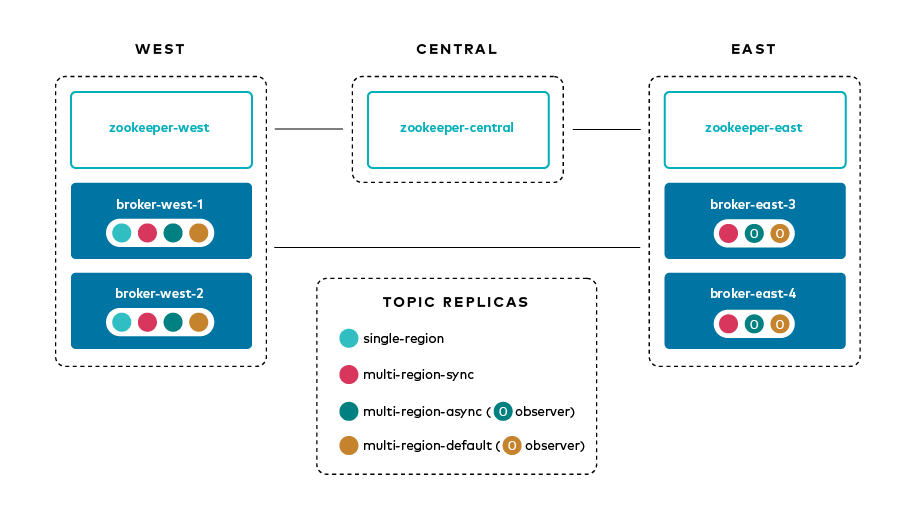

| Multi Datacenter | N | Y | Active-active multi-datacenter design with two instances of Confluent Replicator copying data bidirectionally between the datacenters  |

| Multi Region Replication | N | Y | Multi-region replication with follower fetching, observers, and replica placement |

| Quickstart | Y | Y | Automated version of the Confluent Platform Quickstart  |

| Role-Based Access Control | Y | Y | Role-based Access Control (RBAC) provides granular privileges for users and service accounts  |

| Secret Protection | Y | Y | Secret Protection feature encrypts secrets in configuration files  |

| Replicator Security | N | Y | Demos of various security configurations supported by Confluent Replicator and examples of how to implement them  |

As a next step, you may want to build your own custom demo or test environment. We have several resources that launch only the Confluent Platform services, with no pre-configured connectors, data sources, topics, or schemas. Using these as a foundation, you can then add any connectors or applications. See confluentinc/cp-all-in-one for more information.
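When building on a bare set of services like this, a quick reachability check can confirm that everything is listening before you add connectors or applications. The sketch below uses only the Python standard library. The service names and port numbers are assumptions based on commonly used Confluent Platform defaults, so adjust them to match the port mappings in your own environment.

```python
import socket

# Assumed host ports (commonly used defaults); verify against your own setup.
DEFAULT_PORTS = {
    "broker": 9092,
    "schema-registry": 8081,
    "connect": 8083,
    "ksqldb-server": 8088,
    "control-center": 9021,
}


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_services(host="localhost", ports=DEFAULT_PORTS, timeout=1.0):
    """Map each service name to whether its port accepts TCP connections."""
    return {name: port_open(host, port, timeout) for name, port in ports.items()}


if __name__ == "__main__":
    for name, up in check_services().items():
        print(f"{name:16s} {'up' if up else 'unreachable'}")
```

An open port only shows that something is listening. It does not prove the service has finished starting, so treat this as a first sanity check rather than a health check.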

Here are additional GitHub repos that offer an incredible set of nearly a hundred other Apache Kafka demos. They are not maintained on a per-release basis like the demos in this repo, but they are an invaluable resource.