Fast, Lightweight Style Transfer using Deep Learning: A re-implementation of "A Learned Representation For Artistic Style" (which proposed using Conditional Instance Normalization), "Instance Normalization: The Missing Ingredient for Fast Stylization", and the fast neural-style transfer method proposed in "Perceptual Losses for Real-Time Style Transfer and Super-Resolution" using Lasagne and Theano.

This repository contains a re-implementation of the paper A Learned Representation For Artistic Style and its Google Magenta TensorFlow implementation. The major differences are as follows:

- The batch size has been changed (from 16 to 4); this was found to reduce training time without affecting the quality of the images generated.

- Training is done with the COCO dataset rather than ImageNet.

- The style loss weights have been divided by the number of layers used to calculate the loss (though the values of the weights themselves have been increased so that the actual weights effectively remain the same)
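The weight scaling described in the last point can be sketched in plain Python. This is only an illustration, not the repository's code: the function names, layer names, and numeric values are hypothetical, and the per-layer losses stand in for whatever Gram-matrix losses the network computes.

```python
def effective_style_weights(base_weights):
    """Divide each per-layer style weight by the number of style layers.

    `base_weights` maps a (hypothetical) layer name to its configured weight.
    """
    num_layers = len(base_weights)
    return {layer: weight / num_layers for layer, weight in base_weights.items()}


def total_style_loss(layer_losses, base_weights):
    """Weighted sum of per-layer style losses using the scaled weights."""
    weights = effective_style_weights(base_weights)
    return sum(weights[layer] * loss for layer, loss in layer_losses.items())


# Hypothetical example: two style layers, each configured with weight 4.0.
# After dividing by the number of layers, each contributes with weight 2.0.
base = {"layer_a": 4.0, "layer_b": 4.0}
losses = {"layer_a": 1.0, "layer_b": 3.0}
print(effective_style_weights(base))
print(total_style_loss(losses, base))
```

Because every weight is divided by the layer count, keeping the effective weights unchanged means configuring each weight as the old value multiplied by the number of layers, which is the adjustment the point above refers to.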

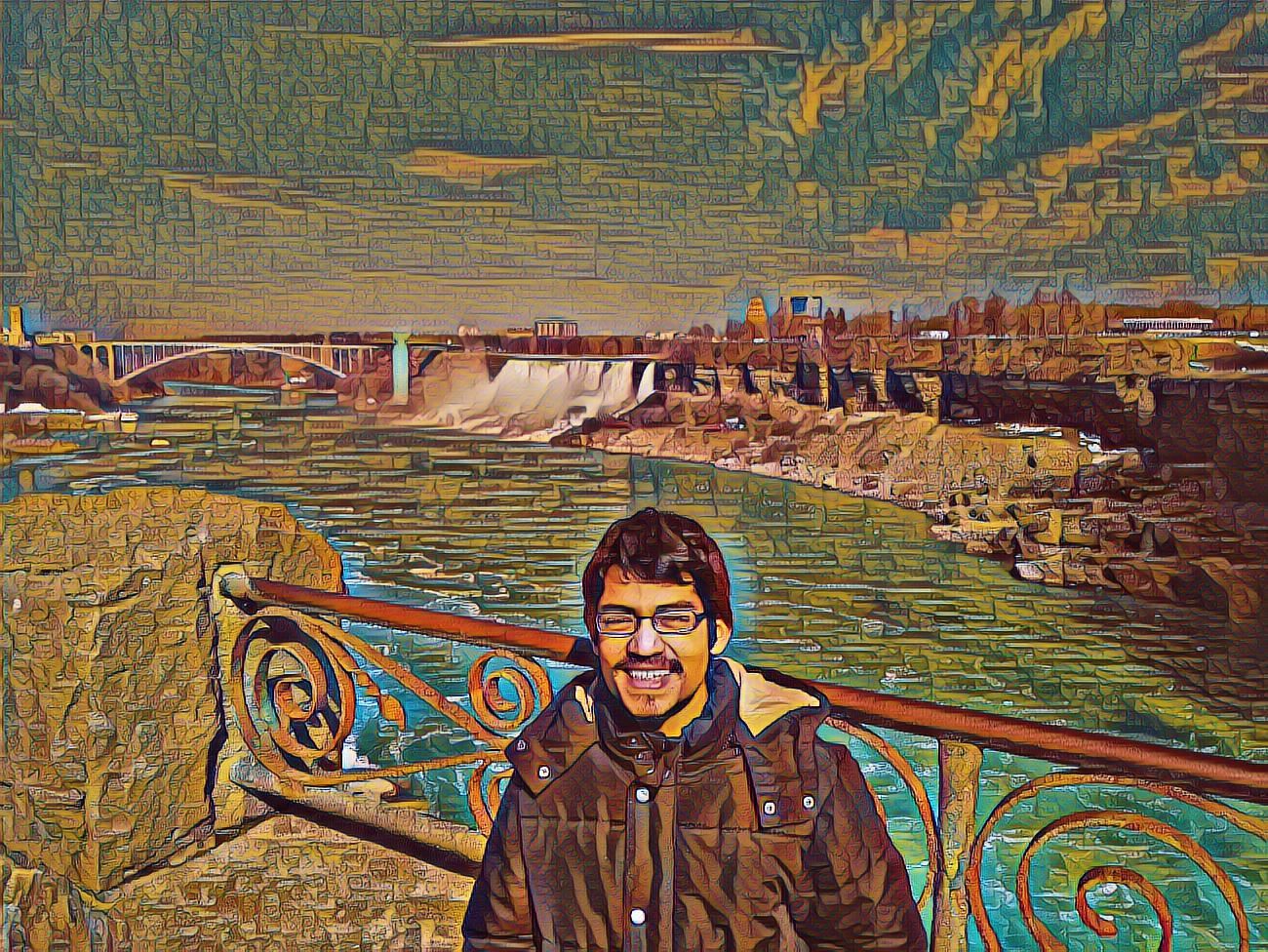

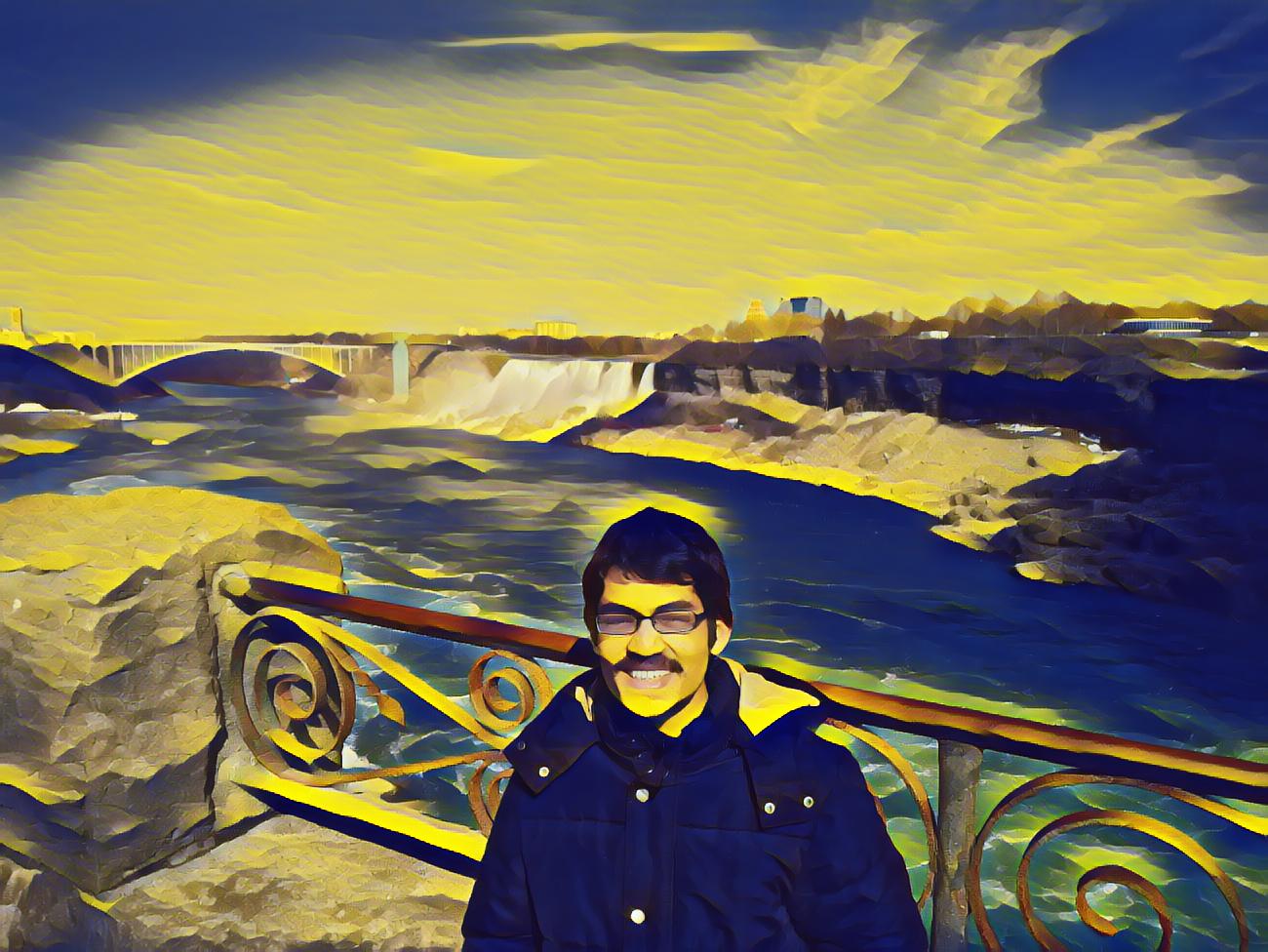

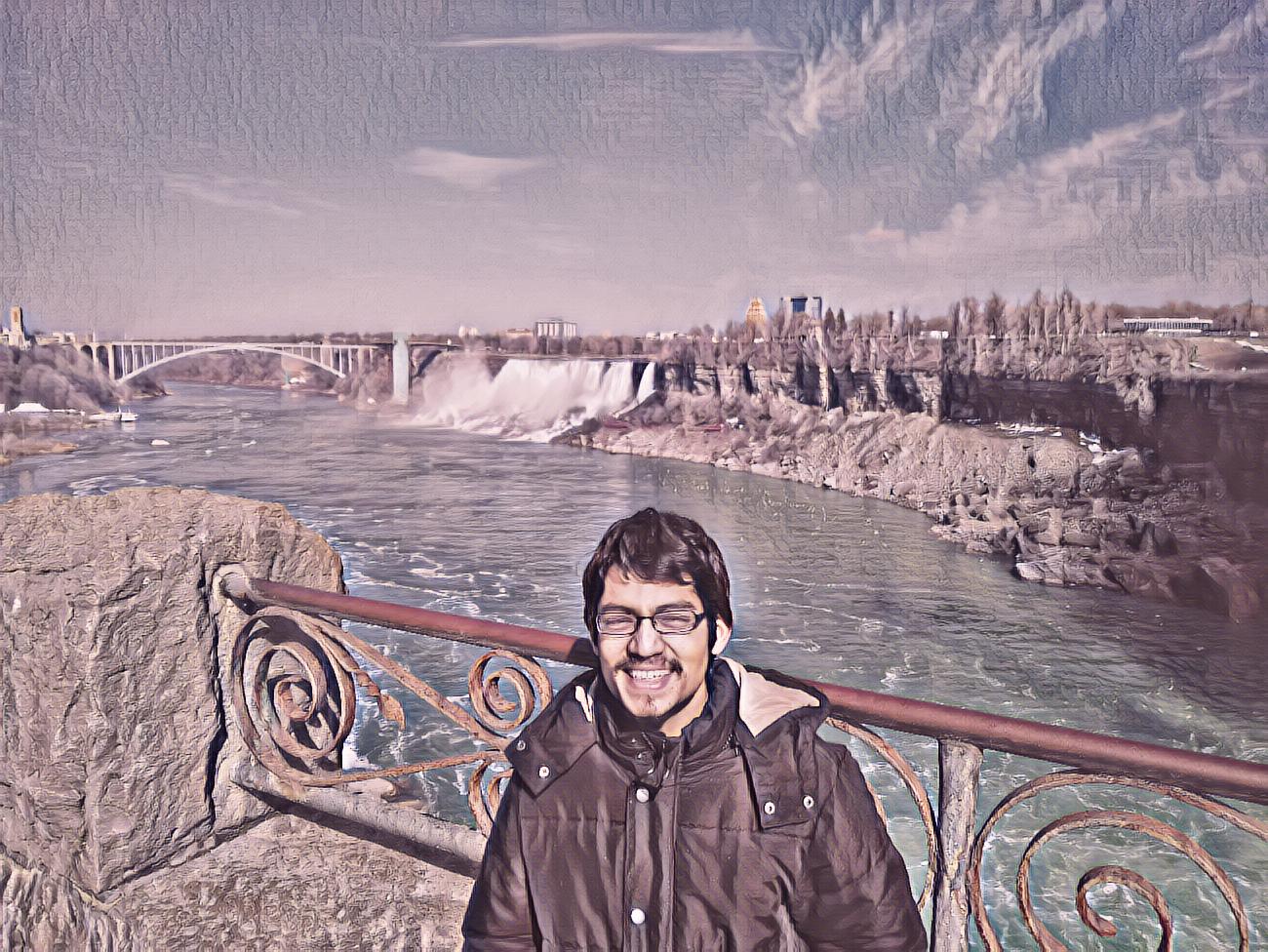

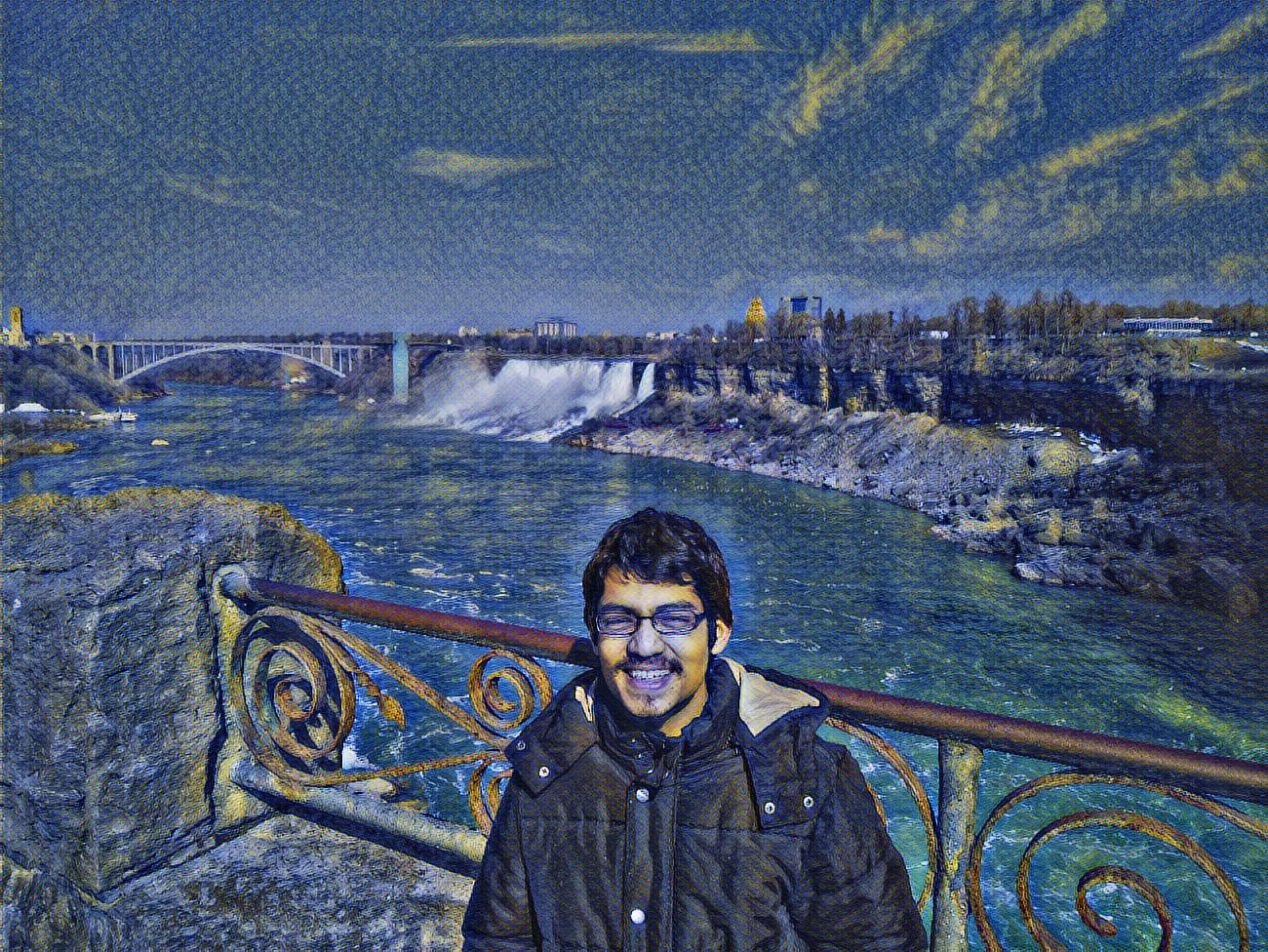

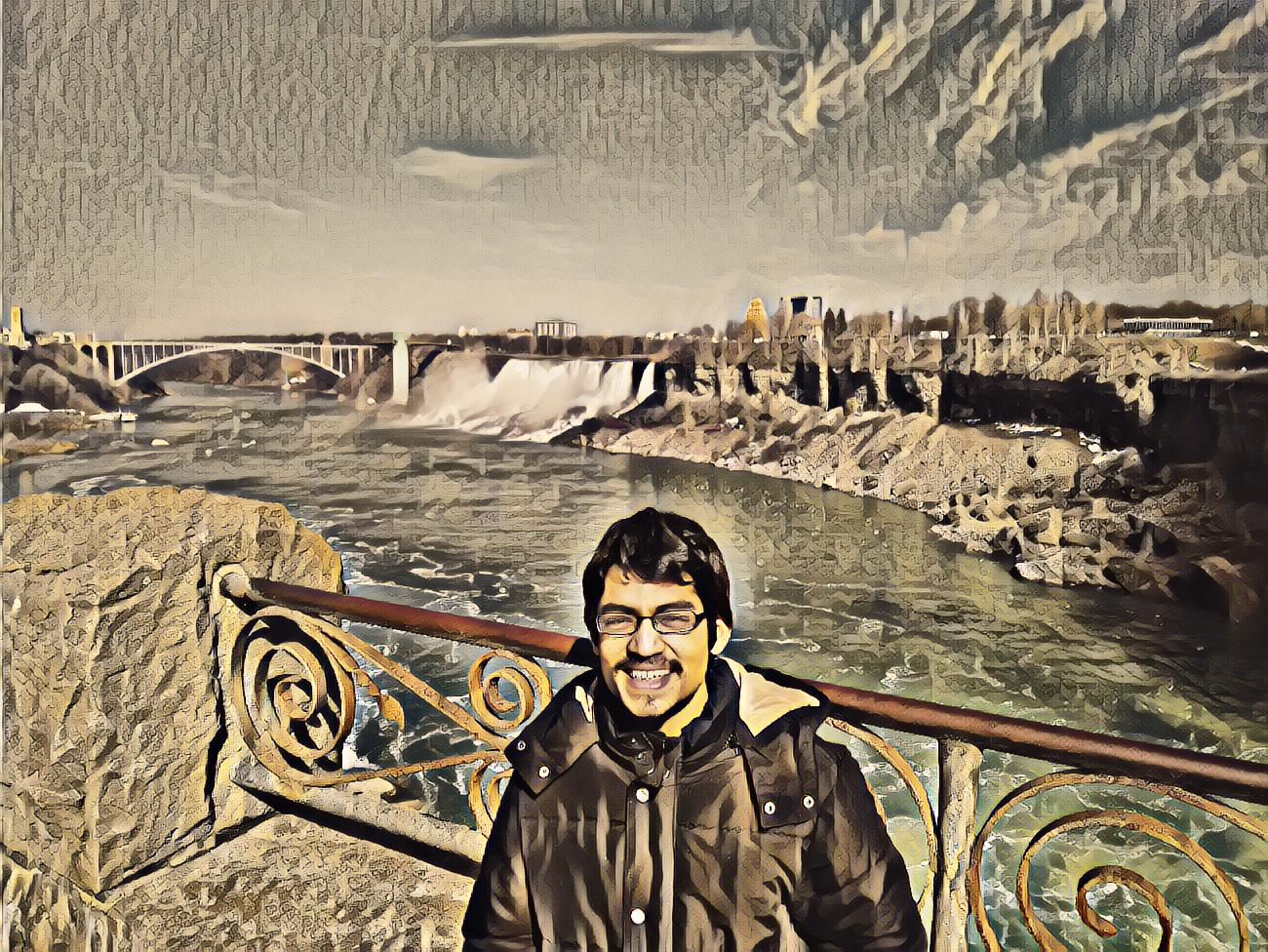

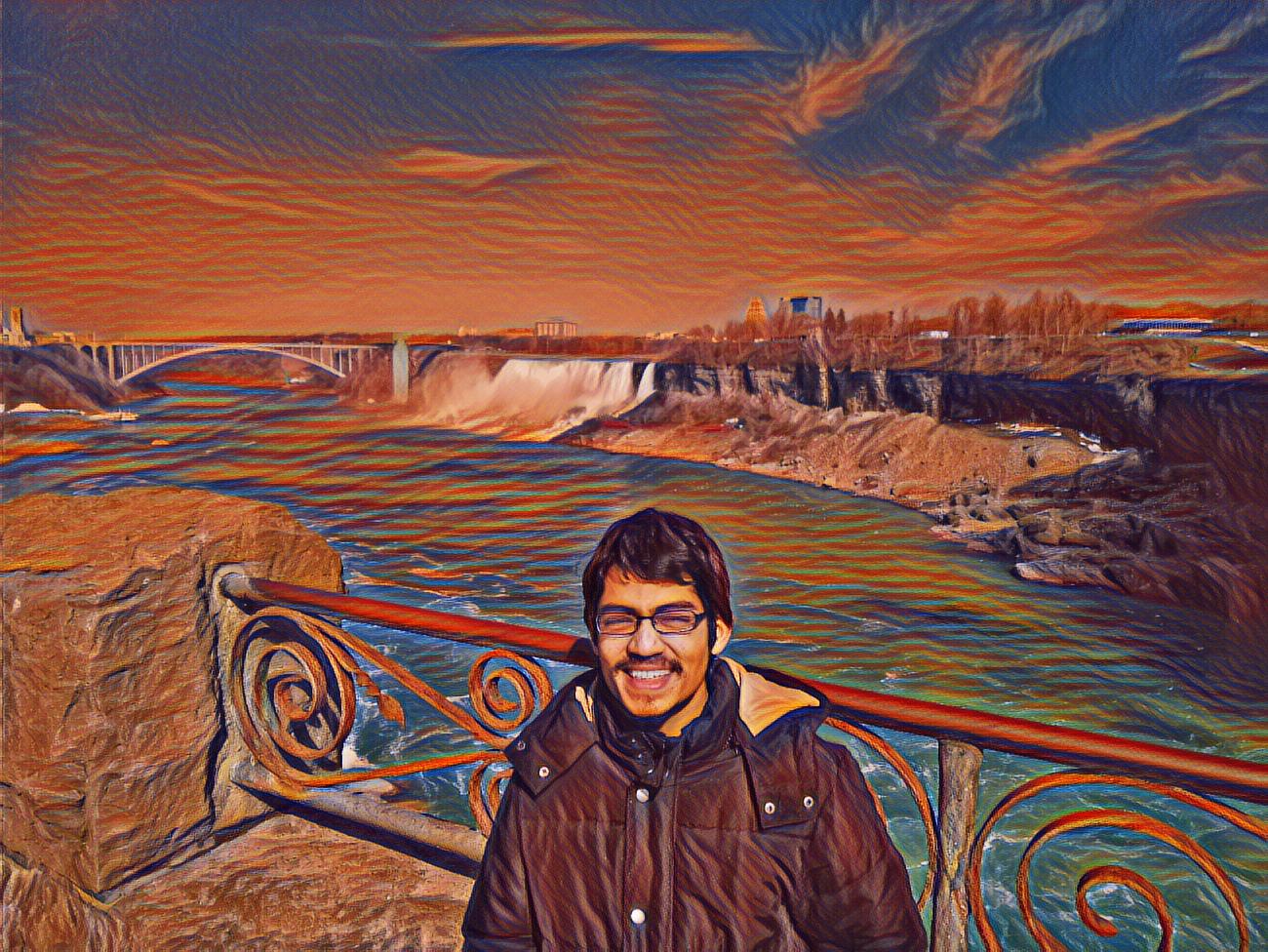

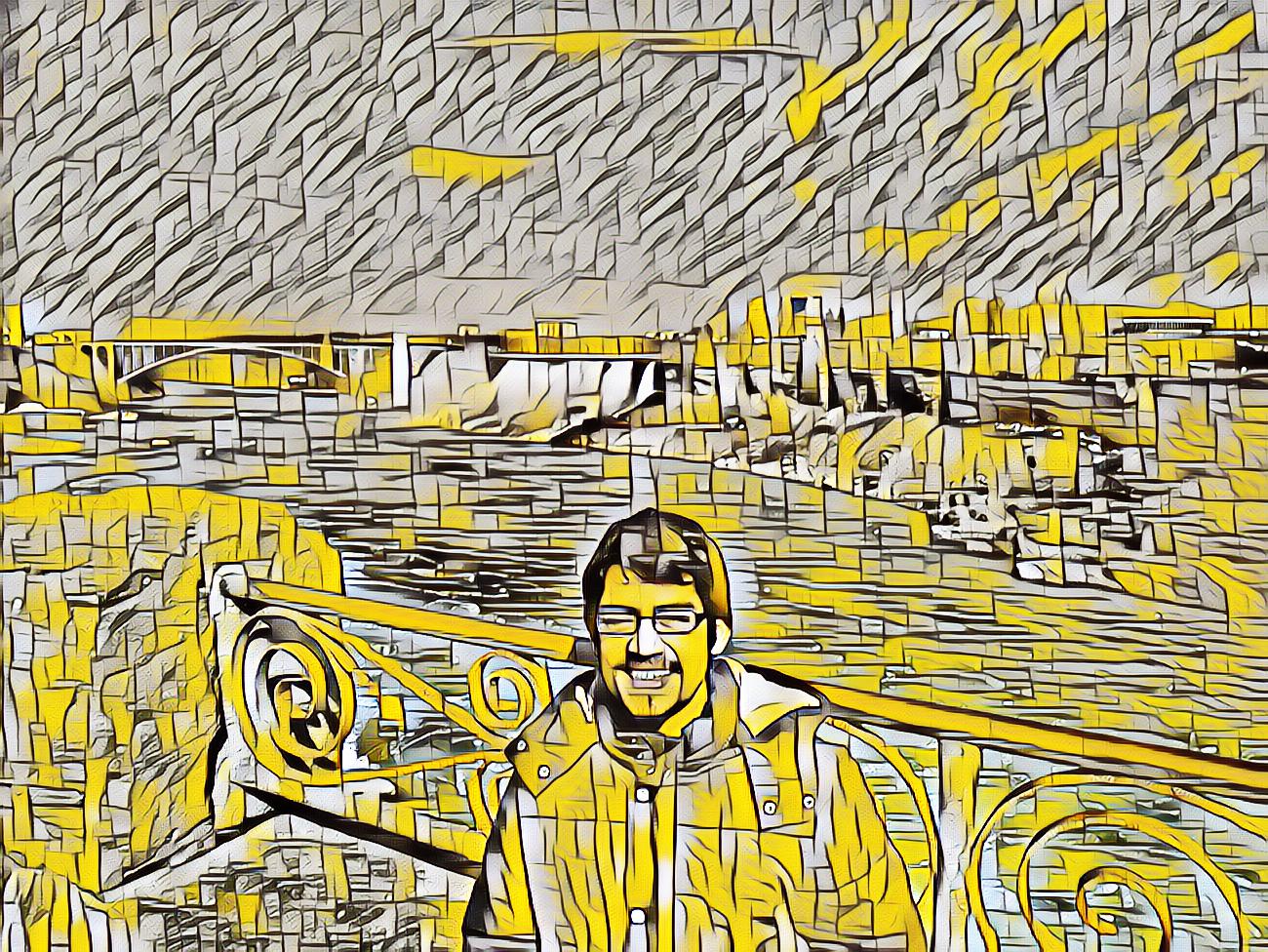

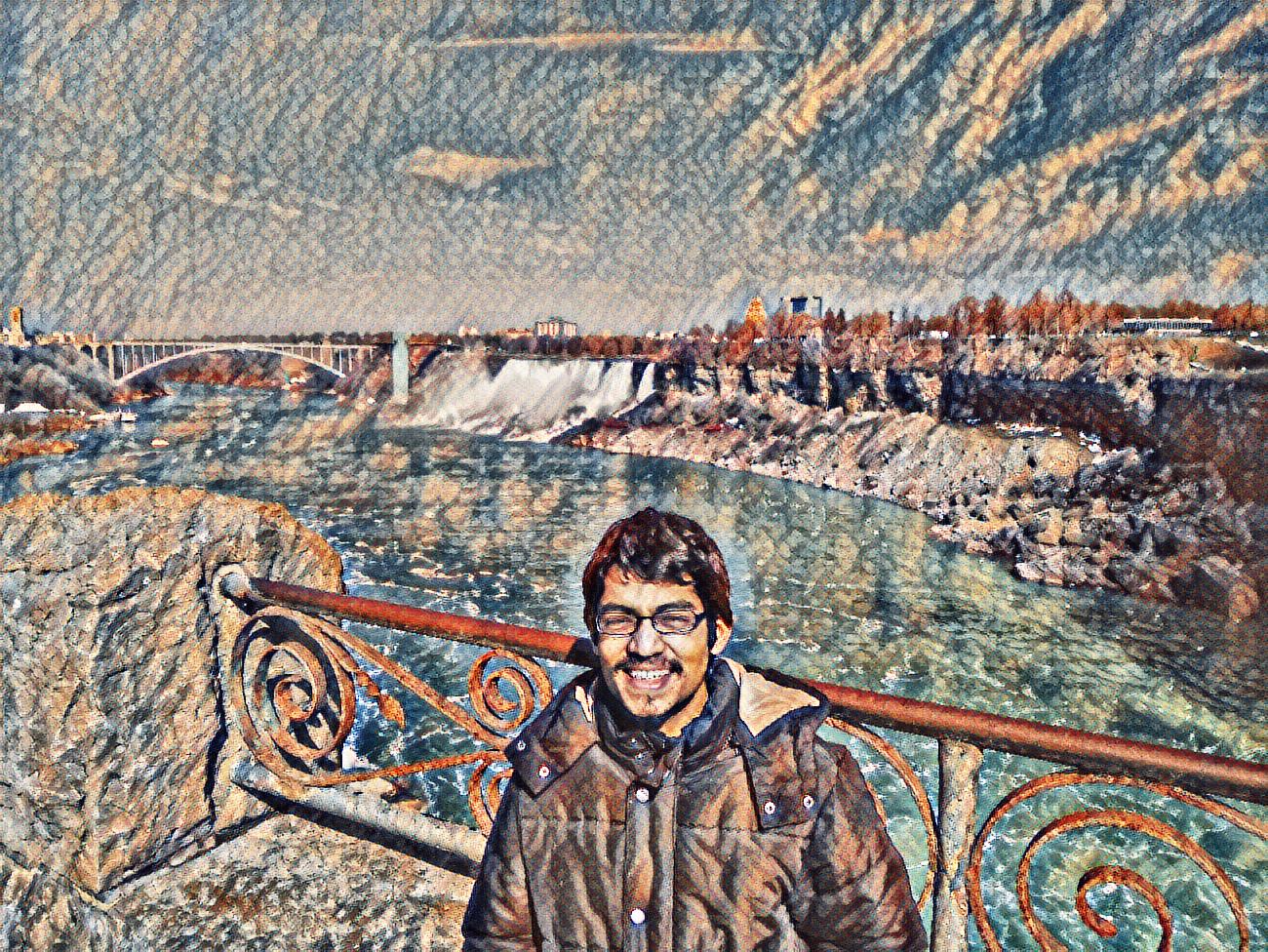

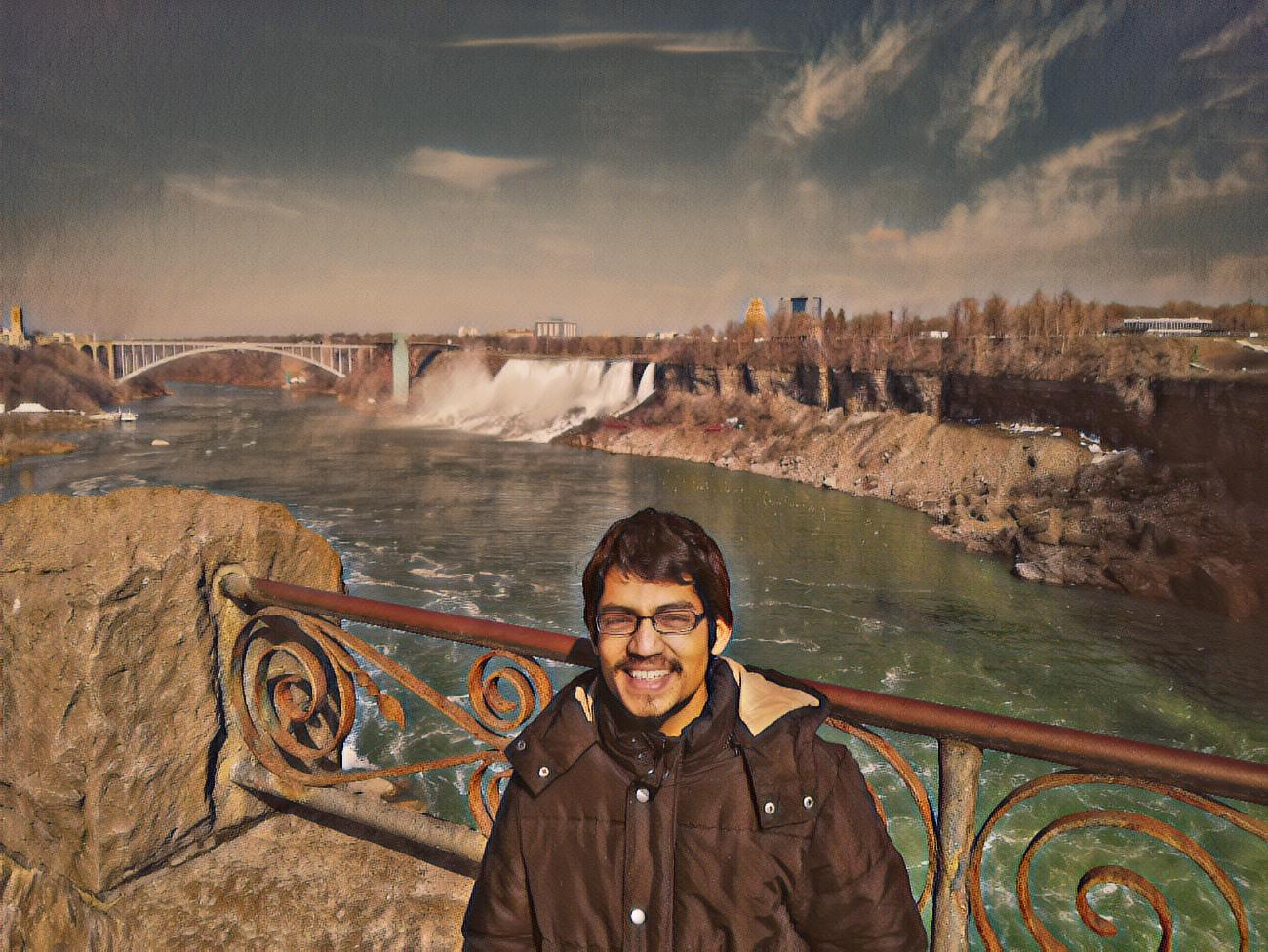

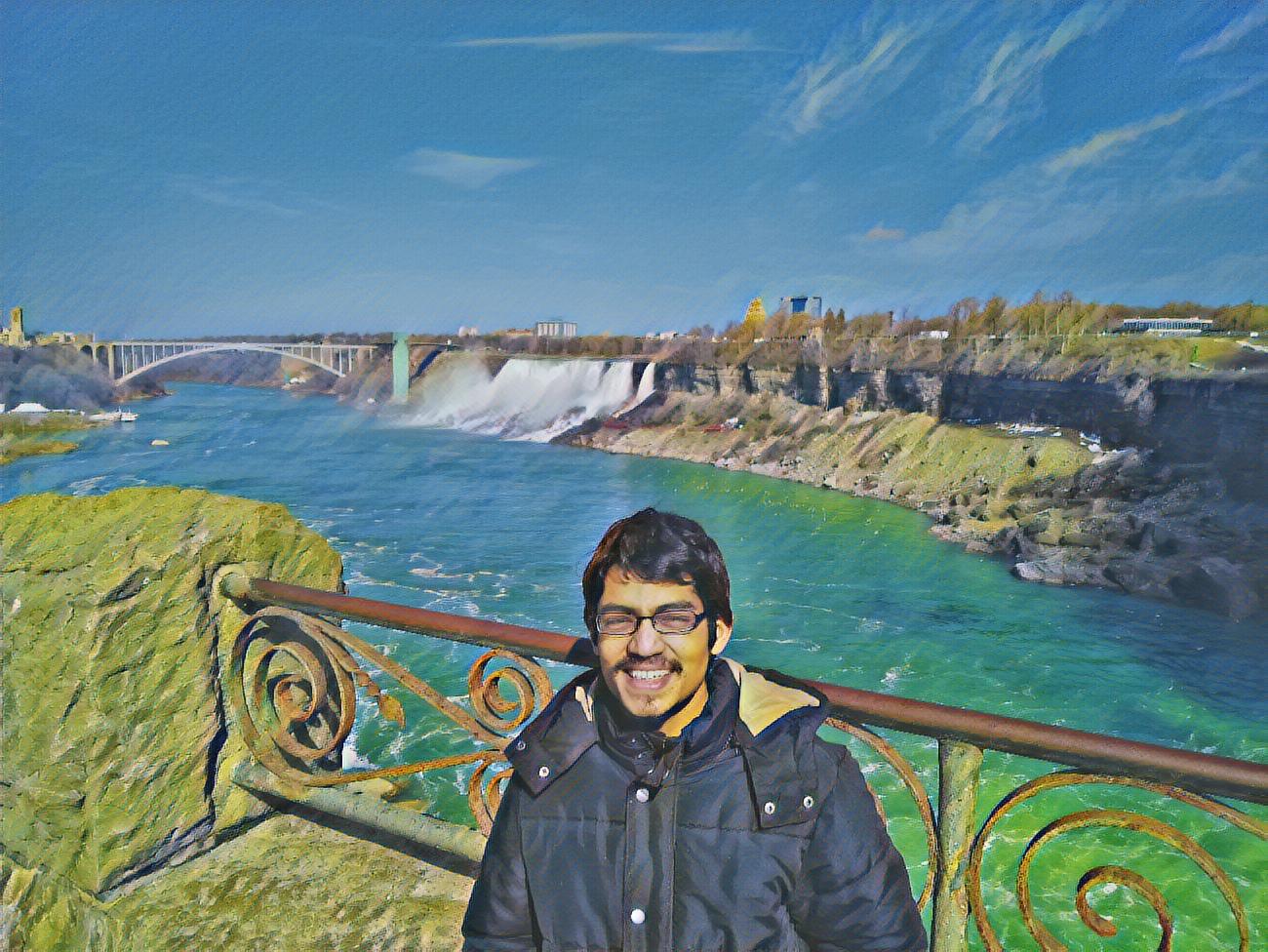

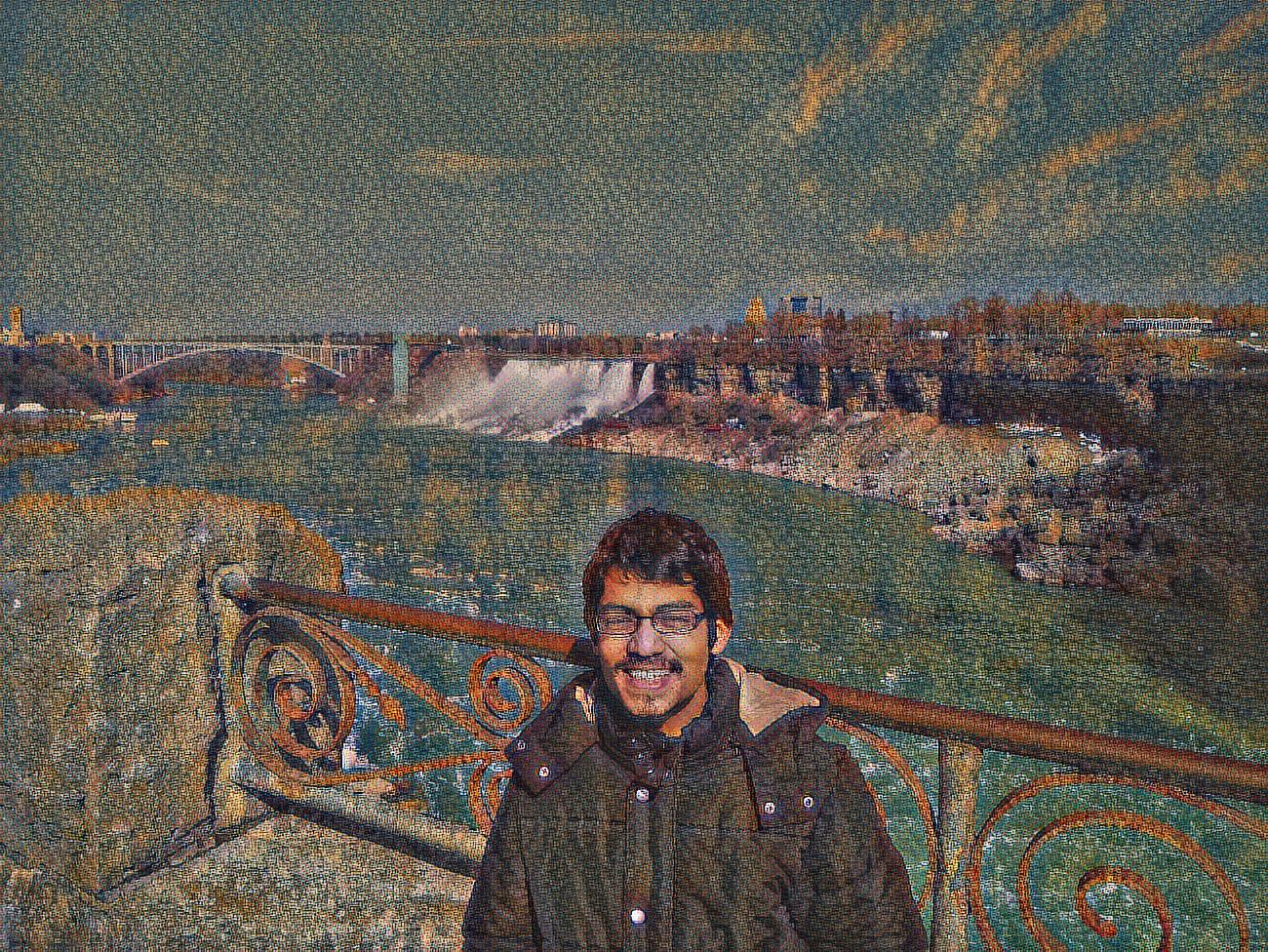

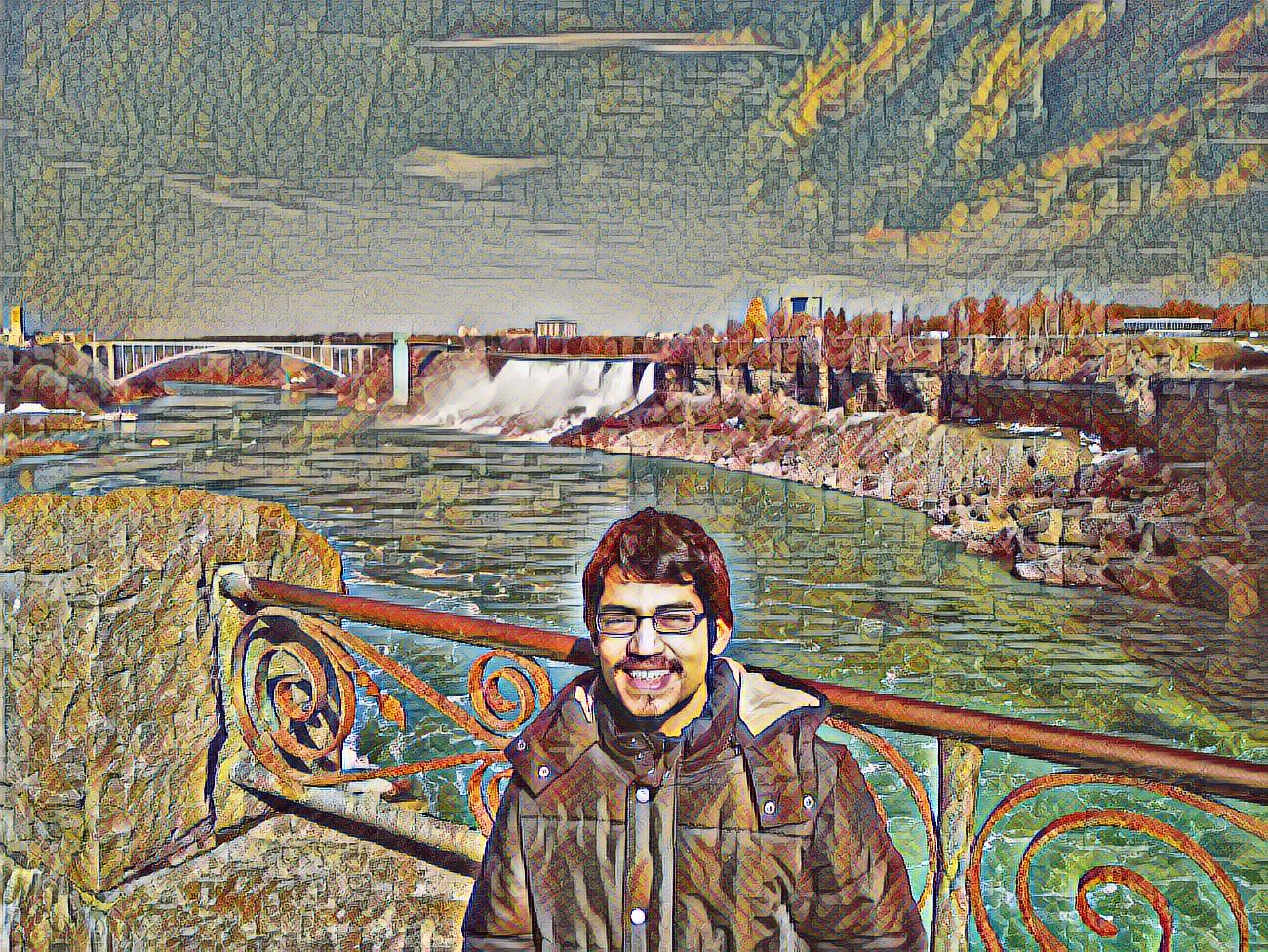

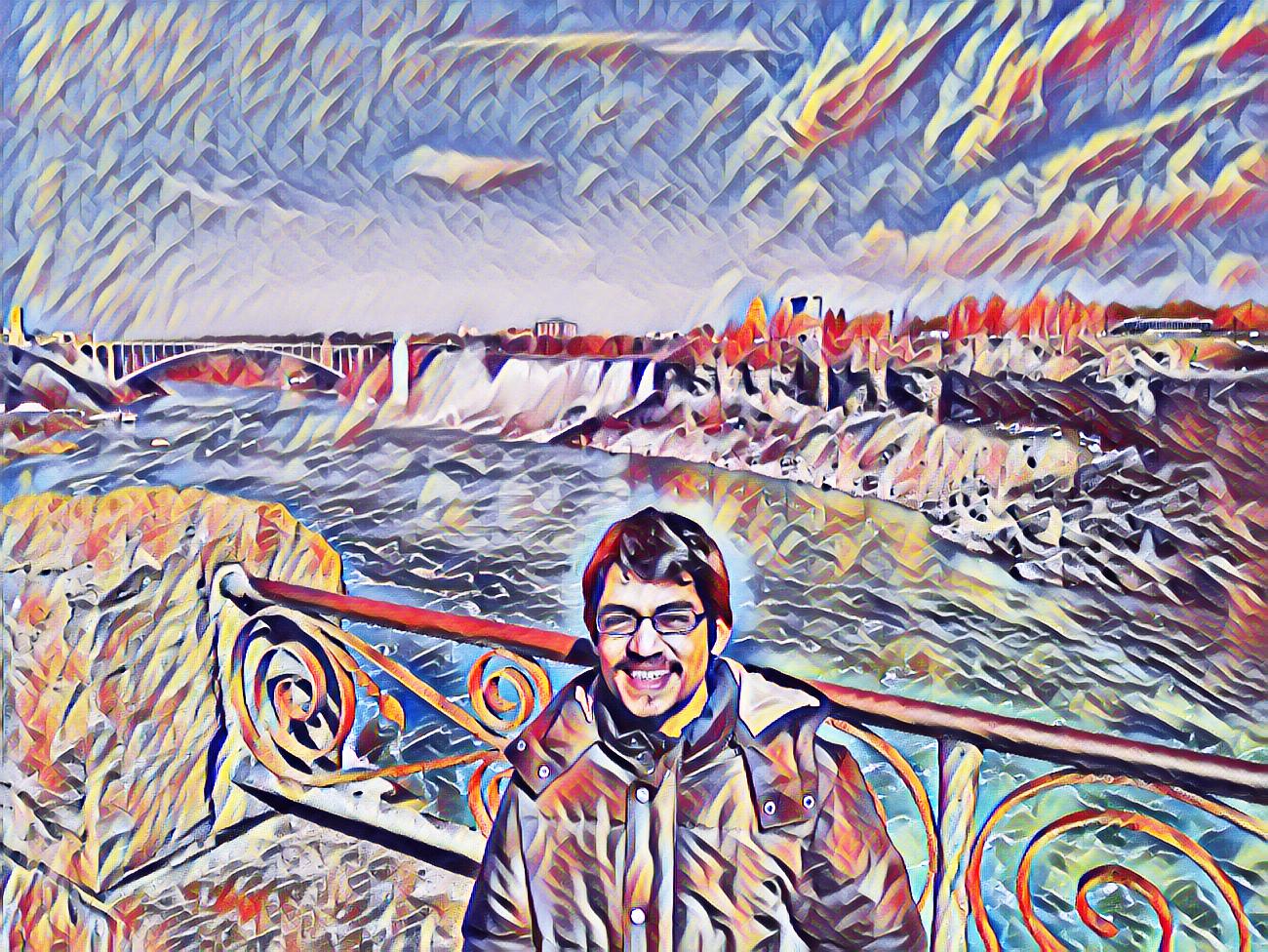

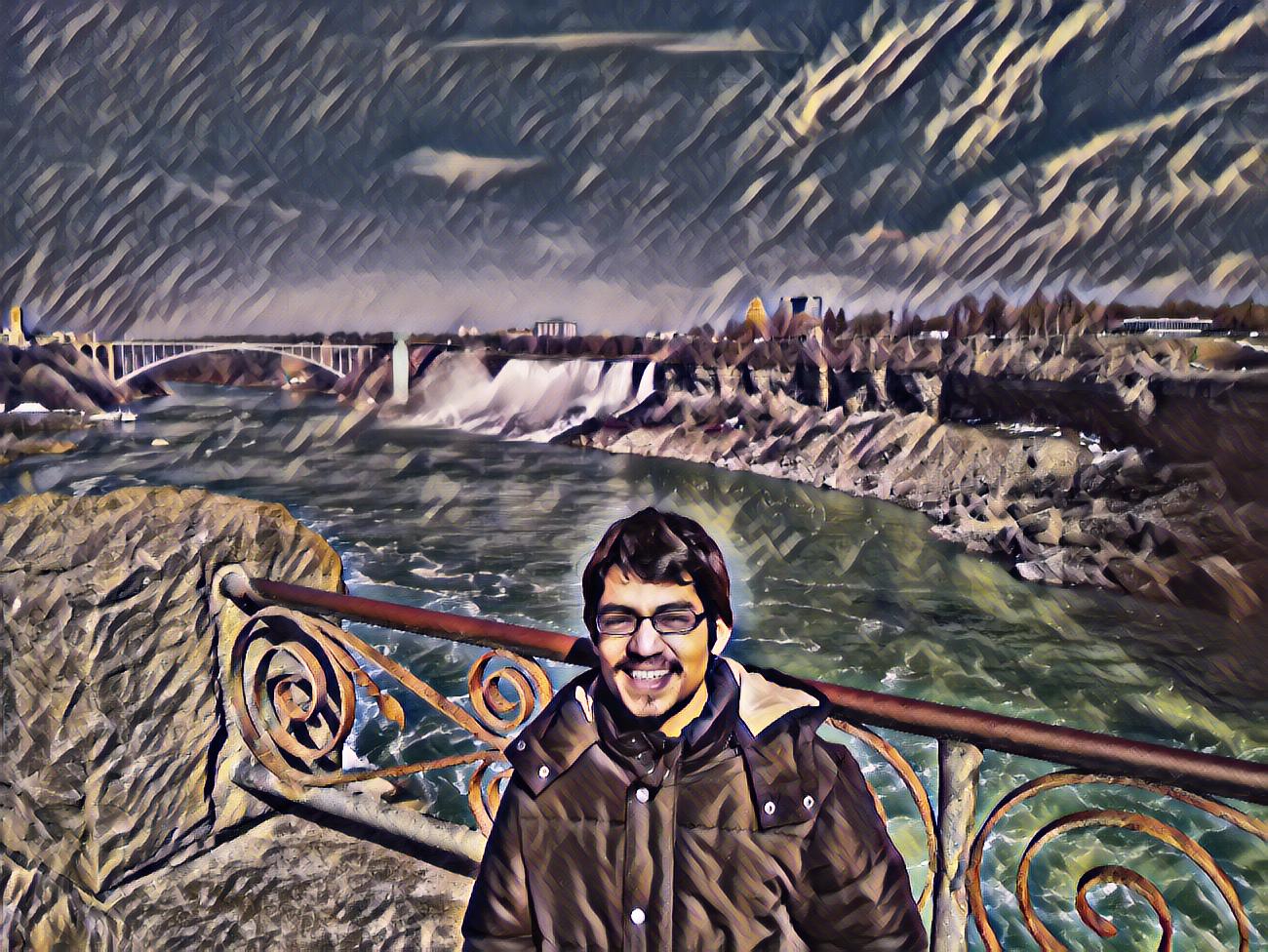

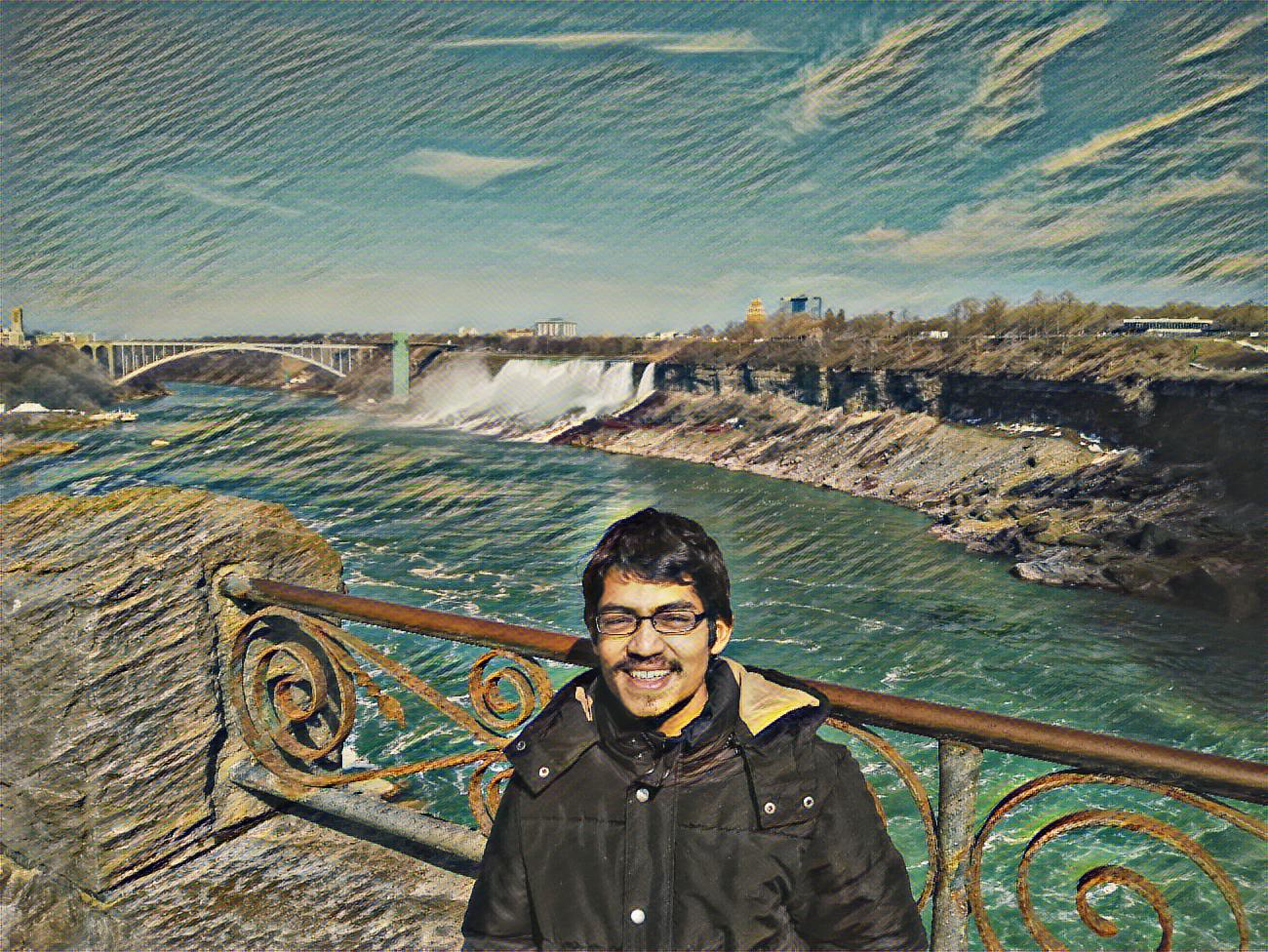

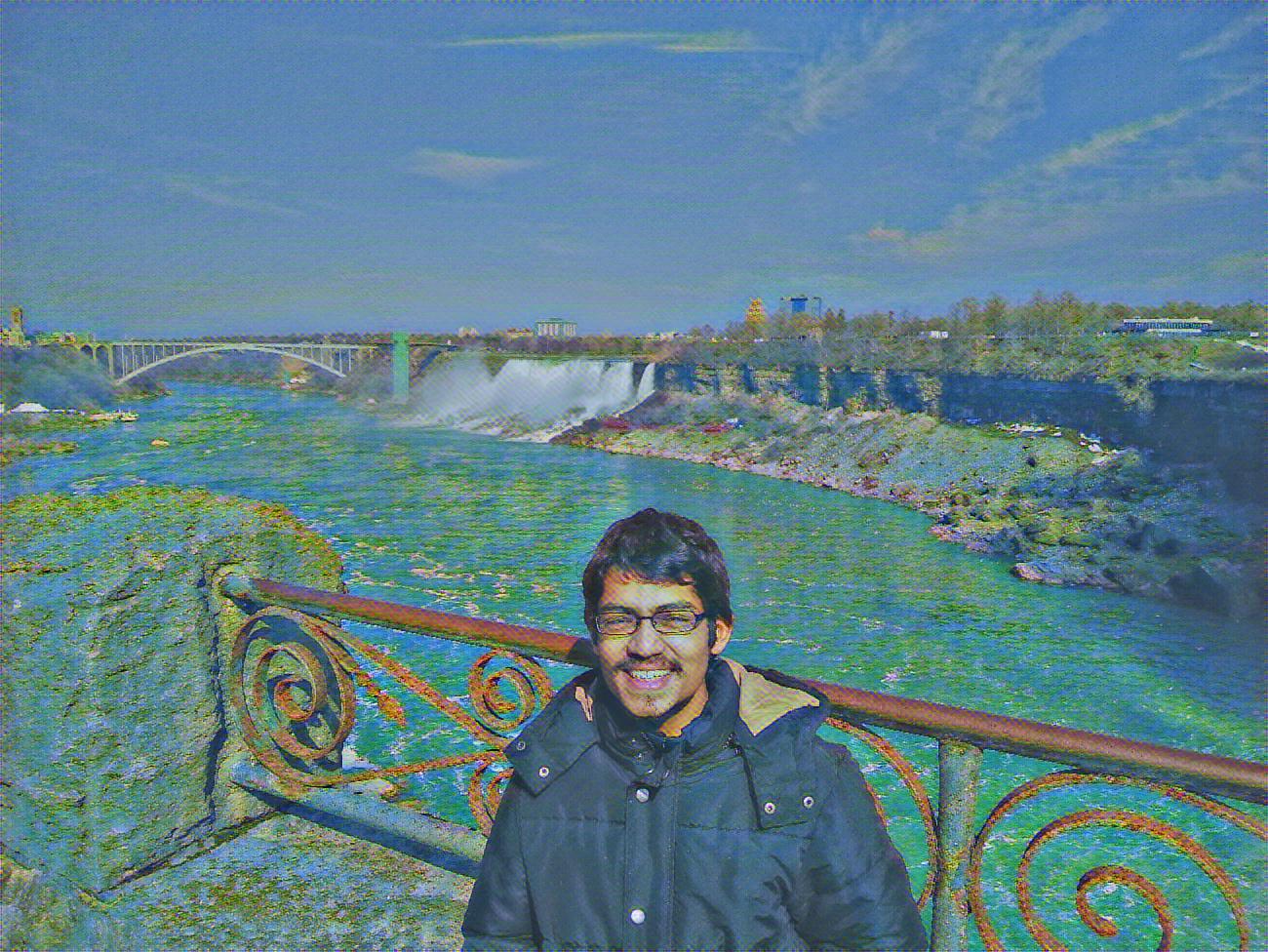

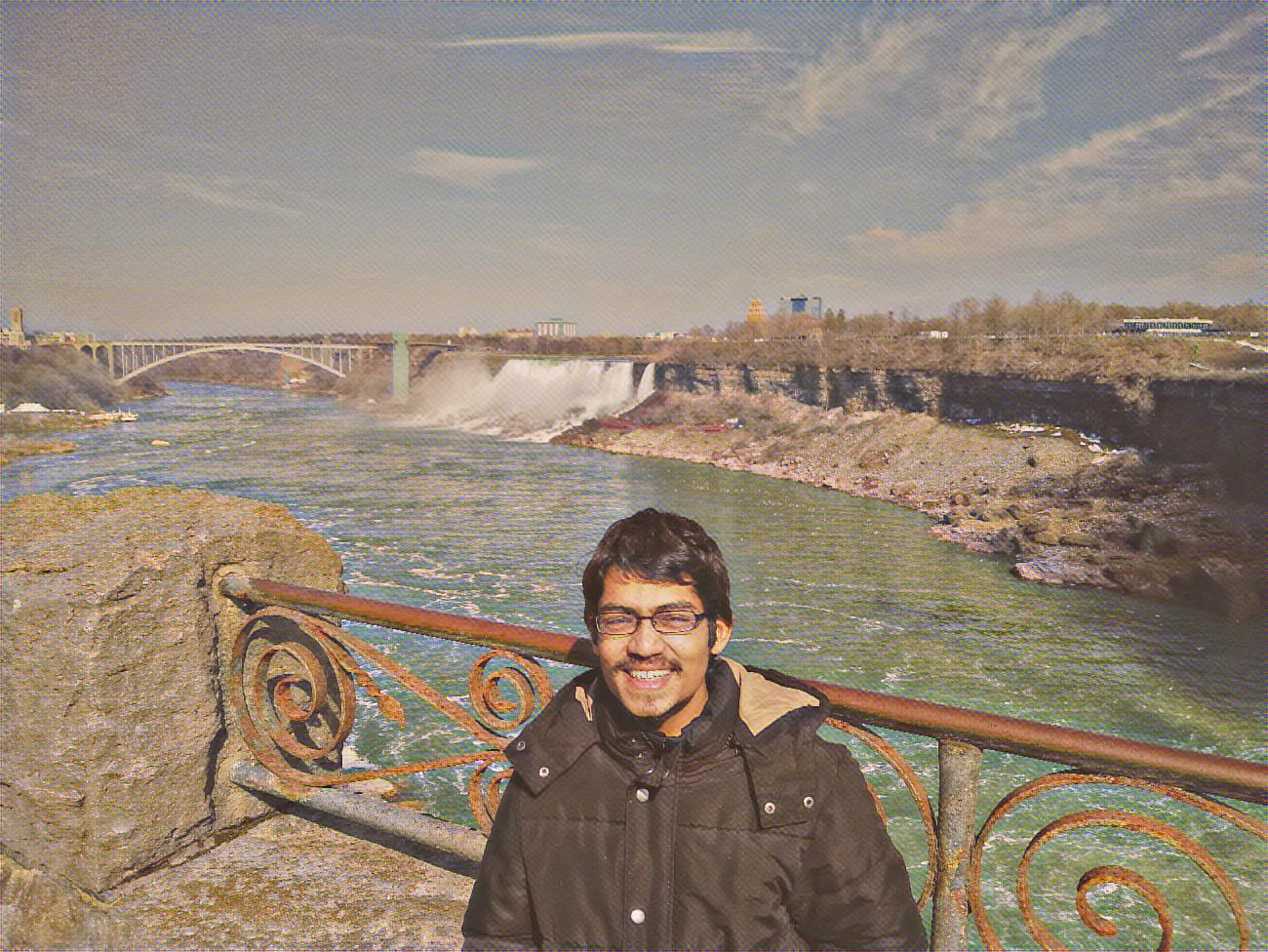

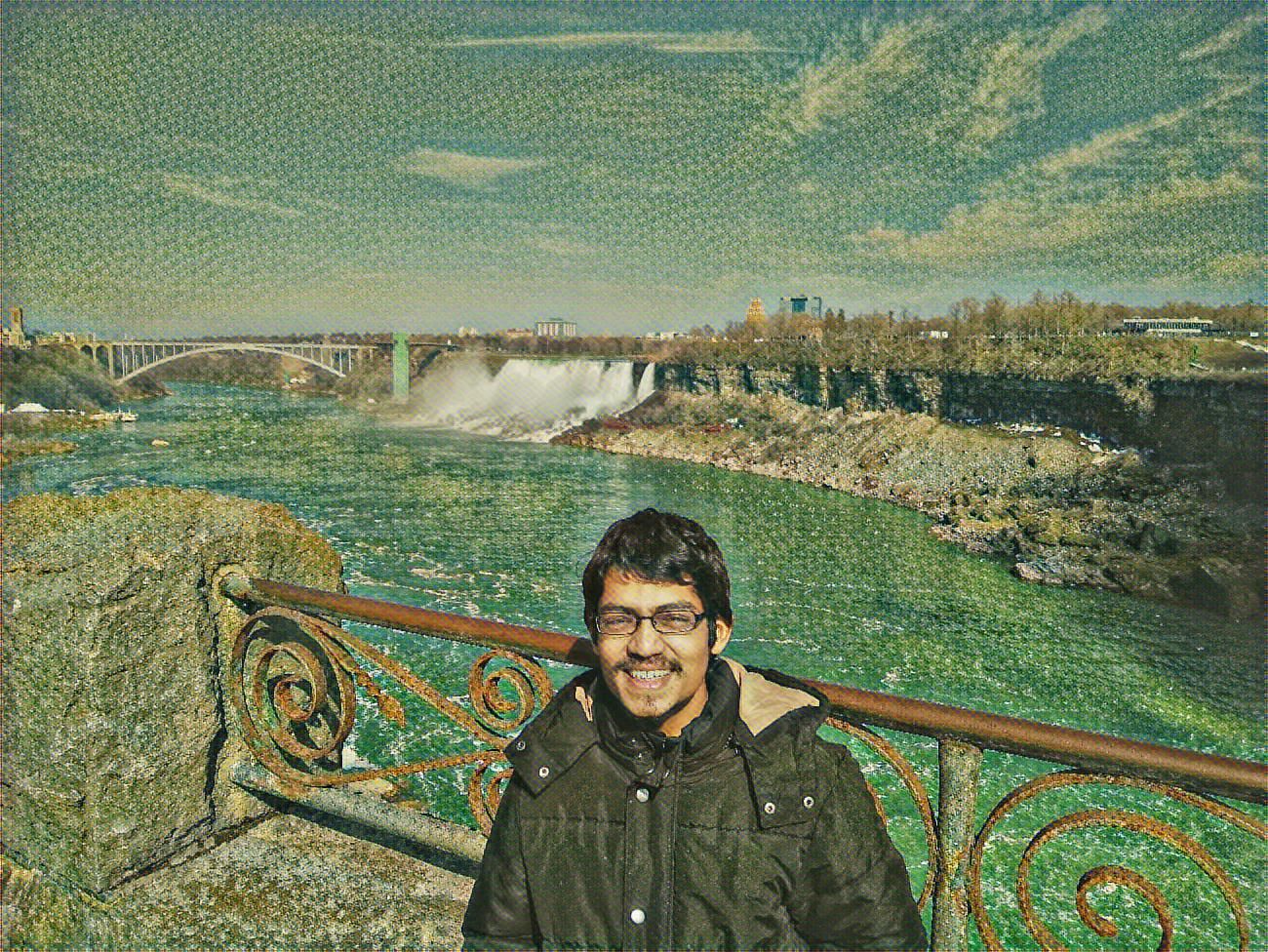

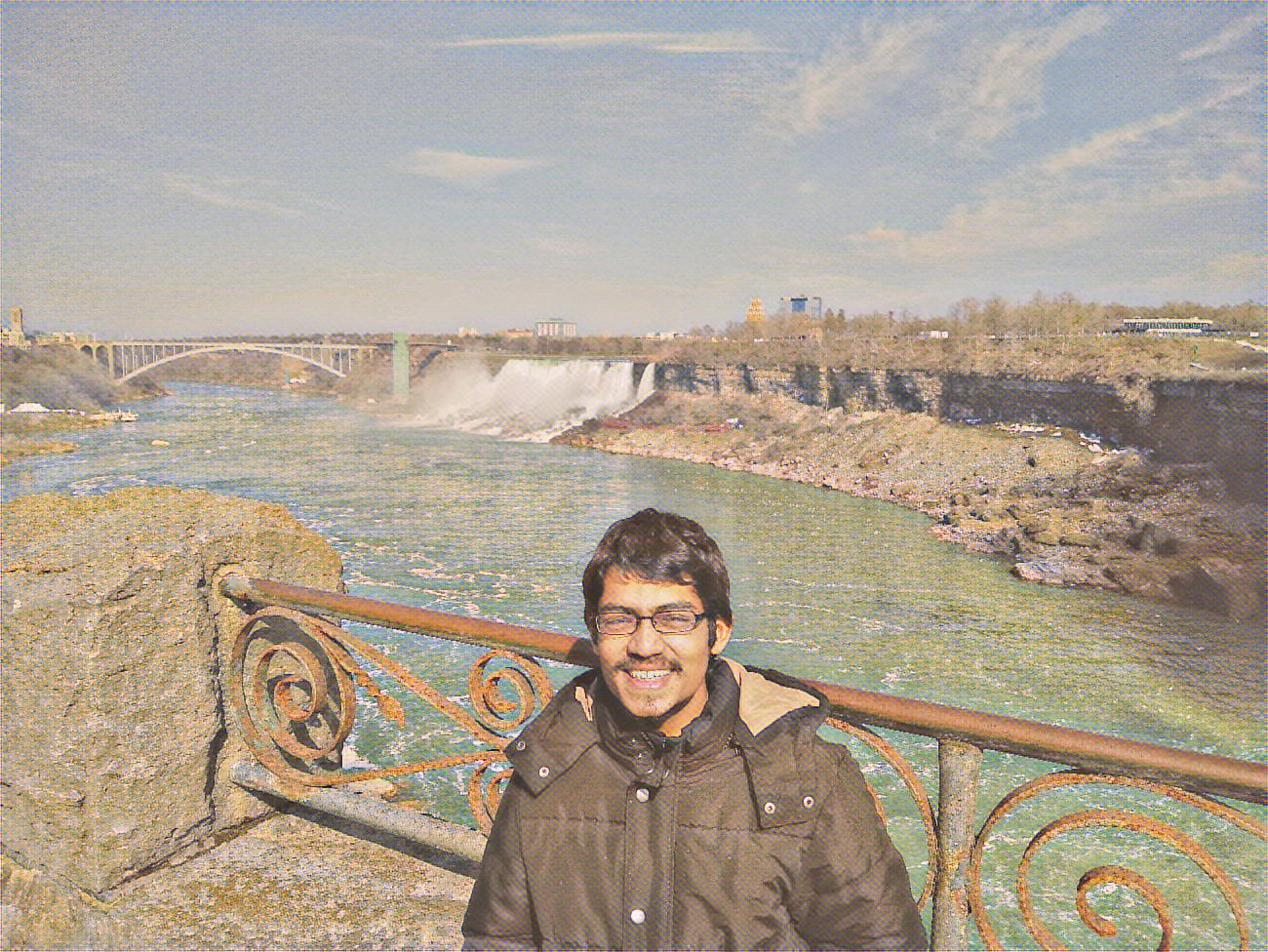

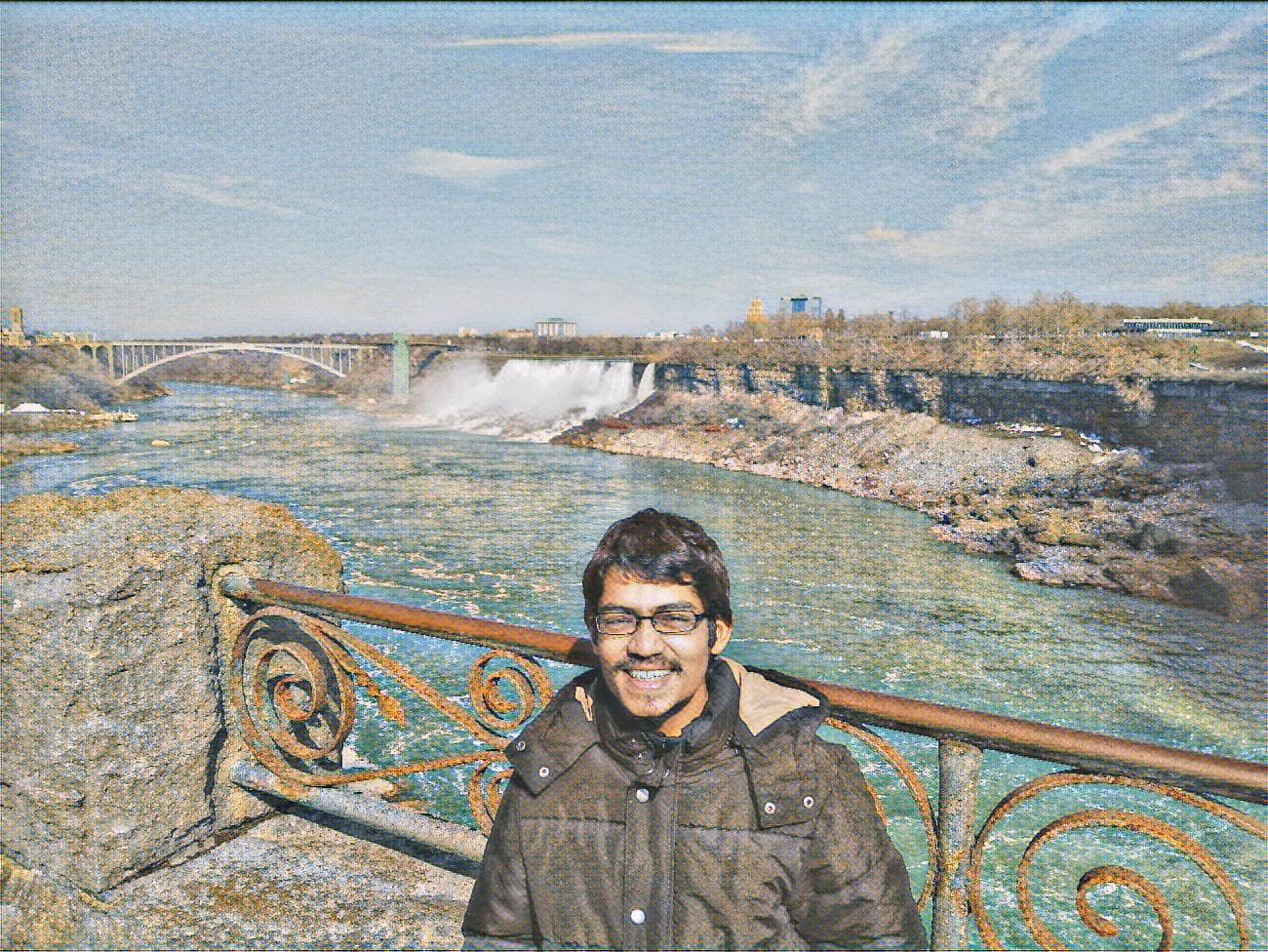

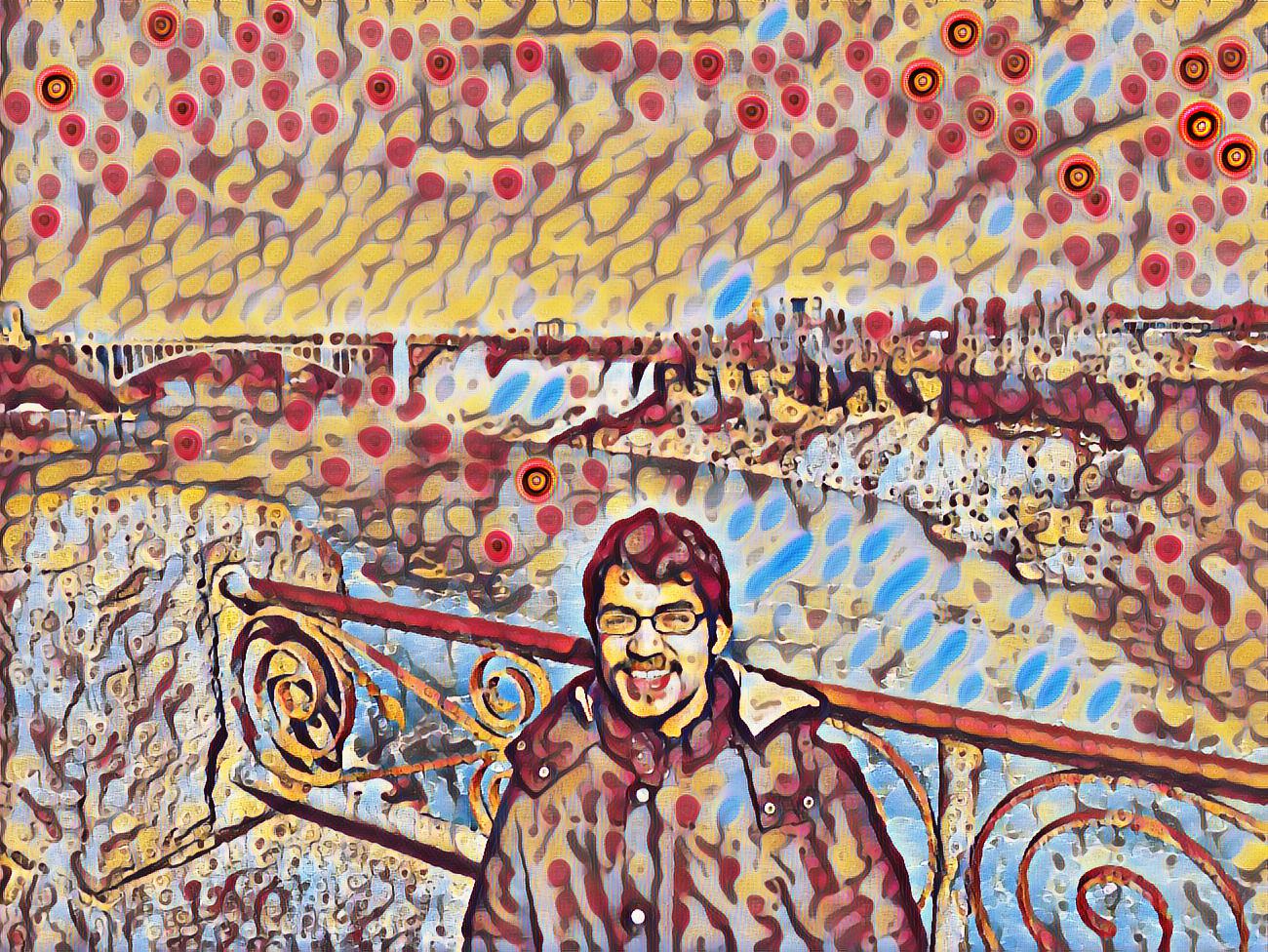

The following are the results when this technique was applied to style images described in the paper (to generate pastiches of a set of 32 paintings by various artists, and of 10 paintings by Monet, respectively):

This repository also contains a re-implementation of the paper Perceptual Losses for Real-Time Style Transfer and Super-Resolution and the author's Torch implementation (fast-neural-style), with the following differences:

- The implementation uses the `conv2_2` layer of the VGG-Net for the content loss, as in the paper, as opposed to the `conv3_3` layer used in the author's implementation.

- The following architectural differences are present in the transformation network, as recommended in the paper A Learned Representation For Artistic Style:

  a. Zero-padding is replaced with mirror-padding

  b. Deconvolutions are replaced by a nearest-neighbour upsampling layer followed by a convolution layer

  These changes obviate the need for a total variation loss, in addition to providing other advantages.

- The implementation of the total variation loss is in accordance with this one, which differs from the author's implementation. Total variation loss is no longer required, however (see the previous point).

- The implementation uses Instance Normalization (proposed in the paper "Instance Normalization: The Missing Ingredient for Fast Stylization") by default: although Instance Normalization has been used in the repo containing the author's implementation of the paper, it was proposed after the paper itself was released.

- The style loss weights have been divided by the number of layers used to calculate the loss (though the values of the weights themselves have been increased so that the actual weights effectively remain the same)
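The two architectural changes to the transformation network listed above (mirror-padding instead of zero-padding, and nearest-neighbour upsampling followed by a convolution instead of a deconvolution) can be illustrated with a small framework-free sketch. It is not the repository's Lasagne code: it works on a single-channel image stored as a list of rows, all function names are hypothetical, and the pad width is assumed to be smaller than the image size.

```python
def mirror_pad(img, pad):
    """Reflect-pad a 2D image by `pad` pixels per side (edge pixel not repeated)."""
    def pad_row(row):
        left = row[1:pad + 1][::-1]
        right = row[-pad - 1:-1][::-1]
        return left + row + right

    rows = [pad_row(list(r)) for r in img]
    top = [list(r) for r in rows[1:pad + 1][::-1]]
    bottom = [list(r) for r in rows[-pad - 1:-1][::-1]]
    return top + rows + bottom


def upsample_nearest(img, factor=2):
    """Repeat every pixel `factor` times along both axes."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def conv2d_valid(img, kernel):
    """'Valid' 2D cross-correlation, as convolution layers usually compute it."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    return [
        [
            sum(img[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(w - kw + 1)
        ]
        for i in range(h - kh + 1)
    ]


def upsample_conv(img, kernel, factor=2):
    """Nearest-neighbour upsampling, mirror-padding, then a convolution."""
    upsampled = upsample_nearest(img, factor)
    padded = mirror_pad(upsampled, len(kernel) // 2)  # assumes odd square kernel
    return conv2d_valid(padded, kernel)


identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
for row in upsample_conv([[1, 2], [3, 4]], identity):
    print(row)
```

With an odd square kernel, the mirror-padding keeps the convolution output the same size as the upsampled image, so the block as a whole enlarges the input by exactly `factor` in each dimension.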

This repository re-implements 3 research papers:

- A Learned Representation For Artistic Style

- Instance Normalization: The Missing Ingredient for Fast Stylization

- Perceptual Losses for Real-Time Style Transfer and Super-Resolution

The following implementations were used as references:

- The author's implementation of the paper "Perceptual Losses for Real-Time Style Transfer and Super-Resolution" (fast-neural-style)

- The Google Magenta implementation of the paper "A Learned Representation for Artistic Style" (magenta: image-stylization)

- The neural-enhance repository

- The Lasagne implementation of the paper "A Neural Algorithm of Artistic Style" (lasagne/recipes: styletransfer)

- The Keras implementation of the paper "A Neural Algorithm of Artistic Style" (keras: neural_style_transfer)