This is my collection of implementations of LLMs, papers, and other things I had in mind. I had a lot of fragmented code implementing different models.

This is an attempt to put everything in one place.

This lib is for running/copying code for experiments.

install with

git clone https://github.com/joey00072/ohara.git

cd ohara

pip install -e .

Then:

## download and pretokenize

python examples/prepare-dataset.py
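For anyone new to the idea, pretokenizing just means turning text into token ids once and saving them to disk, so the training loop reads integers instead of re-tokenizing every epoch. Here is a minimal sketch of that idea, not what `examples/prepare-dataset.py` actually does: the byte-level "tokenizer", the function names `pretokenize` and `load_tokens`, and the `uint16` file layout are all assumptions for illustration.

```python
import array
import os
import tempfile


def pretokenize(text: str, out_path: str) -> int:
    """Encode text to token ids and write them to out_path as raw unsigned shorts.

    Toy tokenizer (assumption): one id per UTF-8 byte. A real script would
    use a trained tokenizer here instead.
    """
    # list(...) matters: passing bytes directly would be read as raw buffer data.
    ids = array.array("H", list(text.encode("utf-8")))
    with open(out_path, "wb") as f:
        ids.tofile(f)
    return len(ids)


def load_tokens(path: str) -> array.array:
    """Read the token ids back from disk."""
    ids = array.array("H")
    with open(path, "rb") as f:
        ids.frombytes(f.read())
    return ids


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "tokens.bin")
    count = pretokenize("once upon a time", path)
    print(count, list(load_tokens(path))[:4])
```

Storing ids as a flat binary file keeps loading cheap; the same layout can later be memory-mapped when the dataset no longer fits in RAM.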

## train

## look at train.py, it's fairly easy

python examples/train_llama.py

# Lightning Fabric version is also available (recommended)

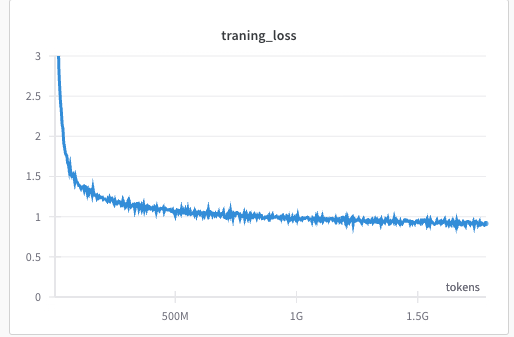

python examples/train_llama_fabric.py

llama-20M trained on TinyStories for 1.7B

Inference on phi2

## this will download the model from hf and run it in torch.float16

python phi_inference.py
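If you just want to see what "download from hf and run in float16" looks like, here is a rough sketch using the Hugging Face `transformers` library instead of this repo's own model code. It is not the contents of `phi_inference.py`: the model id `microsoft/phi-2`, the helper name `generate`, and the default prompt are assumptions.

```python
MODEL_ID = "microsoft/phi-2"  # assumption: Hugging Face Hub id for phi2


def generate(prompt: str, max_new_tokens: int = 32) -> str:
    """Download phi2 (cached after the first call) and generate in float16."""
    # Imported lazily so that importing this file does not require torch.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    # Load the weights in half precision: 2 bytes per parameter instead of 4.
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype=torch.float16)

    device = "cuda" if torch.cuda.is_available() else "cpu"
    model.to(device).eval()

    inputs = tokenizer(prompt, return_tensors="pt").to(device)
    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


if __name__ == "__main__":
    print(generate("def fibonacci(n):"))
```

float16 is mainly a GPU thing; on CPU this can be very slow, so switching the dtype to `torch.float32` there is a reasonable choice.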

## look at the files and you can implement the rest easily,

## I believe in you 😉

Papers and theory are on one side, but code is truth. In the end, what matters is what works (runs).

If you look into docs, you can find some written notes. These are mostly copied from my Obsidian notes.

- TokenFormer

- MLA

- Griffin & Hawk

- Galore

- Qsparse

- Bitnet

- RetNet

- Alibi Embeddings | md

- Rotary Embeddings | md

- LoRA

- LLAMA | md

- XPOS

- Mamba

- GPT | md

- make inferencer class better

- make training loop better (use lightning fabric maybe)

- Finetuning in a structured way (I just rawdog code when I need it)

- DPO

- make it a py module so I can create an experiment folder and put all this in it

- be nice,

- code explanations || docs are appreciated

- memes on PRs recommended