TensorFlow implementation for the paper:

Learning to Evaluate Image Captioning

Yin Cui, Guandao Yang, Andreas Veit, Xun Huang, Serge Belongie

CVPR 2018

The code originates from this article's implementation but has been modified for our application. The data used in our implementation was provided by the company and is not publicly available.

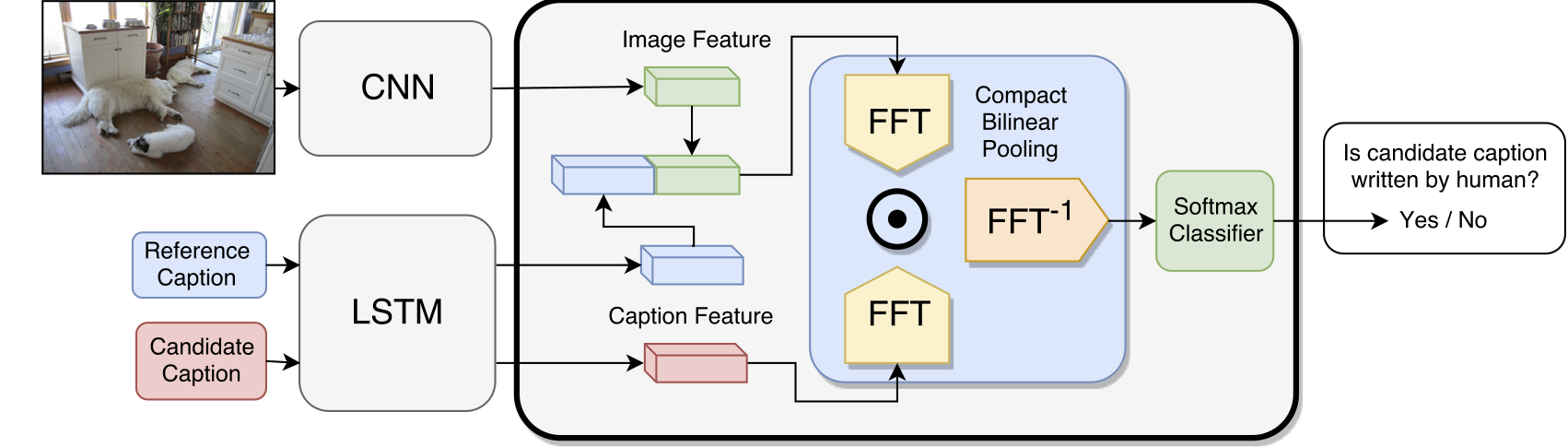

This repository contains a discriminator that can be trained to evaluate image captioning systems. The discriminator is trained to distinguish between machine-generated and human-written captions. At test time, the trained discriminator takes the candidate caption, the reference caption, and optionally the image being captioned as input. Its output, the probability that the candidate caption was written by a human, can be used to evaluate the candidate caption. Please refer to our paper [link] for more details.
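As a rough illustration of how the discriminator's output can be turned into a score for a whole captioning system, the sketch below averages the predicted probability of being human-written over all of that system's candidate captions. The function name `caption_system_score` and the plain-average aggregation are assumptions made for illustration; see the paper for the exact definition used there.

```python
import numpy as np


def caption_system_score(p_human):
    """Average P(human-written) over one captioning system's candidates.

    p_human: 1-D sequence of discriminator outputs in [0, 1], one per
    candidate caption (hypothetical input, produced by the trained model).
    A higher score means the system's captions are harder to tell apart
    from human-written ones. Plain averaging is an assumption.
    """
    p = np.asarray(p_human, dtype=np.float64)
    if p.ndim != 1 or p.size == 0:
        raise ValueError("expected a non-empty 1-D array of probabilities")
    if np.any((p < 0) | (p > 1)):
        raise ValueError("probabilities must lie in [0, 1]")
    return float(p.mean())


# System A fools the discriminator more often than system B.
print(round(caption_system_score([0.9, 0.7, 0.8]), 2))  # 0.8
print(round(caption_system_score([0.2, 0.1, 0.3]), 2))  # 0.2
```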

- Python 3.7
- TensorFlow 1.15.0
- requests 2.22.0
- urllib.request (Python 3.7 standard library)
- Keras 2.3.1

The project uses data sources provided by WriteReaders. The objective is to implement the TensorFlow model and use it to distinguish between machine-generated and human-written captions.

- To generate the folder structure for this project:

python scripts/config.py
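For orientation, a minimal sketch of what generating the folder structure amounts to. The folder list is an assumption assembled from paths mentioned elsewhere in this README; scripts/config.py may create more or different folders, and `make_project_dirs` is a hypothetical helper.

```python
import os

# Hypothetical layout: folder names taken from paths used in this README.
# The real scripts/config.py may create more or different folders.
PROJECT_DIRS = [
    "data",
    "local_files",
    "local_files/images",
    "local_files/proposals_scoring",
]


def make_project_dirs(root="."):
    """Create the project folders under `root`; existing folders are kept."""
    created = []
    for rel in PROJECT_DIRS:
        path = os.path.join(root, rel)
        os.makedirs(path, exist_ok=True)  # no error if it already exists
        created.append(path)
    return created
```

Using `exist_ok=True` makes the step safe to re-run after a partial setup.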

Downloading all images and extracting their features takes a long time (2 to 3 hours). To ease this process, we recommend downloading the preprocessed dataset (LINK). To do it manually, follow the instructions below.

- To generate the embeddings for the dataset, we use the Python package 'fasttext', which provides a high-level interface for loading the vector files, along with other fastText functionality:

pip install fasttext

Download the pre-trained Danish ("da") fastText model, both the binary and the text file (bin+text), from https://fasttext.cc/docs/en/pretrained-vectors.html and put the folder in local_files. Note: the number of words in the vocabulary is set with "vocab_size" in config.py.

- Generate the vocabulary:

python scripts/prep_vocab.py --model "wiki.da/wiki.da.bin"
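A sketch of how a vocabulary of size "vocab_size" can be built from a fastText model: keep the most frequent words and stack their vectors into an embedding matrix. It relies on fastText storing its dictionary in descending frequency order, so the first entries of `get_words()` are the most common words. The special tokens, the helper name `build_vocab`, and the model path in the usage comment are assumptions, not taken from prep_vocab.py.

```python
import numpy as np

# Hypothetical special tokens; scripts/prep_vocab.py may use different ones.
SPECIAL_TOKENS = ["<pad>", "<unk>", "<start>", "<end>"]


def build_vocab(words, get_vector, vocab_size, dim, seed=0):
    """Return (word2idx, embedding matrix) for the `vocab_size` most frequent words.

    `words` is assumed to be sorted by descending frequency, which is the
    order fastText's model.get_words() uses.
    """
    kept = [w for w in words if w not in SPECIAL_TOKENS][:vocab_size]
    idx2word = SPECIAL_TOKENS + kept
    word2idx = {w: i for i, w in enumerate(idx2word)}

    emb = np.zeros((len(idx2word), dim), dtype=np.float32)
    # <pad> stays all-zero; the other special tokens get small random vectors.
    rng = np.random.RandomState(seed)
    n_special = len(SPECIAL_TOKENS)
    emb[1:n_special] = rng.normal(scale=0.1, size=(n_special - 1, dim))
    for w in kept:
        emb[word2idx[w]] = get_vector(w)
    return word2idx, emb


# Usage with the downloaded model (path is an assumption):
#   import fasttext
#   model = fasttext.load_model("local_files/wiki.da/wiki.da.bin")
#   word2idx, emb = build_vocab(model.get_words(), model.get_word_vector,
#                               vocab_size=10000, dim=model.get_dimension())
```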

- Download the training images:

python scripts/image_scraper.py --fname "data/proposals2.npz" --url_name "url"

To download the test images, first convert the TSV file to .npz and remove all samples without a BadProposal:

python scripts/test_data_prep.py
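A minimal sketch of this conversion step, using only the standard `csv` module and NumPy. It assumes the TSV has a header row and that each column is stored in the .npz under its header name (for example "ImageUrl", "AdultText", "Text Proposal", "BadProposal"); the helper name `tsv_to_npz` and the file layout are assumptions, not taken from test_data_prep.py.

```python
import csv

import numpy as np


def tsv_to_npz(tsv_path, npz_path, required_column="BadProposal"):
    """Convert a TSV file to .npz, dropping rows whose `required_column` is empty.

    Each column is saved as a string array keyed by its header name
    (an assumed layout). Returns the number of rows kept.
    """
    with open(tsv_path, newline="", encoding="utf-8") as f:
        # restval="" fills in missing trailing fields instead of None
        reader = csv.DictReader(f, delimiter="\t", restval="")
        columns = reader.fieldnames or []
        rows = [r for r in reader if (r.get(required_column) or "").strip()]
    arrays = {col: np.array([r[col] for r in rows], dtype=str) for col in columns}
    np.savez(npz_path, **arrays)
    return len(rows)
```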

Then download:

python scripts/image_scraper.py --fname "data/test_data.npz" --url_name "ImageUrl" --test "True"

This process can easily take an hour. For the 5k-sample dataset, 10 of the URLs failed to download. Since this is a negligible number of images, these samples are simply removed at a later stage (prep_submission.py).
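The download step with tolerated failures can be sketched as follows: each URL is fetched once, failures are recorded instead of aborting the run, and already-downloaded files are skipped so an interrupted run can be resumed. The `<index>.jpg` naming and the helper `download_images` are hypothetical; the `fetch` parameter defaults to `urllib.request.urlretrieve` and can be swapped out for testing.

```python
import http.client
import os
import urllib.request


def download_images(urls, out_dir, fetch=urllib.request.urlretrieve):
    """Download each URL to out_dir/<index>.jpg and return the failed indices.

    Failures are only recorded, not retried, so the corresponding samples
    can be dropped later. The file naming scheme is an assumption.
    """
    os.makedirs(out_dir, exist_ok=True)
    failed = []
    for i, url in enumerate(urls):
        target = os.path.join(out_dir, "{}.jpg".format(i))
        if os.path.exists(target):
            continue  # already downloaded in a previous run
        try:
            fetch(url, target)
        except (OSError, ValueError, http.client.HTTPException) as err:
            # URLError/HTTPError are OSError subclasses; ValueError = bad URL
            if os.path.exists(target):
                os.remove(target)  # do not keep a half-written file
            print("failed [{}] {}: {}".format(i, url, err))
            failed.append(i)
    return failed
```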

Once all images are downloaded, the next step is to perform feature extraction on each image. This requires OpenCV:

pip install opencv-python

To get the features from each image:

python scripts/image_feature_extraction.py --fname "./local_files/images"
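Image feature extraction generally means loading each image, resizing it to the CNN's input size, and running it through a pre-trained network in batches. The sketch below shows that loop with OpenCV for loading; the CNN is passed in as `predict_fn`. The ResNet50 backbone in the usage comment, the 224x224 input size, the helper names and the output file layout are all assumptions, not necessarily what image_feature_extraction.py uses.

```python
import numpy as np


def load_image(path, size=224):
    """Read an image with OpenCV and return it as an RGB float32 array."""
    import cv2  # from the opencv-python package installed above
    img = cv2.imread(path)  # BGR uint8, or None if the file is unreadable
    if img is None:
        raise OSError("could not read image: " + path)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    img = cv2.resize(img, (size, size))
    return img.astype(np.float32)


def extract_features(paths, predict_fn, load_fn=load_image, batch_size=32):
    """Run `predict_fn` over the images in batches, skipping unreadable files.

    Returns (names, features) with one feature row per readable image.
    """
    names, chunks = [], []
    images, batch_names = [], []
    for path in paths:
        try:
            images.append(load_fn(path))
        except OSError:
            continue  # unreadable image: drop it, like a failed download
        batch_names.append(path)
        if len(images) == batch_size:
            chunks.append(np.asarray(predict_fn(np.stack(images))))
            names.extend(batch_names)
            images, batch_names = [], []
    if images:  # last, possibly smaller, batch
        chunks.append(np.asarray(predict_fn(np.stack(images))))
        names.extend(batch_names)
    features = np.concatenate(chunks) if chunks else np.zeros((0, 0), np.float32)
    return names, features


# Hypothetical usage with a Keras ResNet50 backbone (2048-d pooled features):
#   import os, tensorflow as tf
#   base = tf.keras.applications.ResNet50(weights="imagenet",
#                                         include_top=False, pooling="avg")
#   prep = tf.keras.applications.resnet50.preprocess_input
#   folder = "./local_files/images"
#   paths = [os.path.join(folder, f) for f in sorted(os.listdir(folder))]
#   names, feats = extract_features(paths, lambda x: base.predict(prep(x)))
#   np.savez("image_features.npz", names=np.array(names), features=feats)
```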

- Prepare the data

Prepare the training data:

python scripts/prep_submission.py --name_human "adult_texts" --name_mc "proposals"

Prepare the test data

python scripts/prep_submission.py --data "test_data.npz" --image_feat "image_features_test.npz" --url_name "ImageUrl" --name_human "AdultText" --name_mc "Text Proposal" --train_test_val_split "False" --single_name "test" --scoring_proposal "BadProposal"
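Conceptually, preparing the training data pairs each image's human-written caption (label 1) with a machine-generated caption (label 0), drops samples whose image has no features (for example the failed downloads), and splits the result. The sketch below shows one way to do this; the 80/10/10 split, the seed, the helper name `build_dataset` and the returned structure are hypothetical and need not match prep_submission.py or its three .npy files.

```python
import numpy as np


def build_dataset(image_ids, human_caps, machine_caps, image_feats,
                  split=(0.8, 0.1, 0.1), seed=0):
    """Pair each image with a human caption (label 1) and a machine caption (label 0).

    image_feats: dict image_id -> feature vector. Samples whose image has no
    features (e.g. a failed download) are dropped.
    Returns a dict split_name -> (image_ids, captions, labels).
    """
    ids, caps, labels = [], [], []
    for img, human, machine in zip(image_ids, human_caps, machine_caps):
        if img not in image_feats:
            continue
        ids += [img, img]
        caps += [human, machine]
        labels += [1, 0]
    ids, caps = np.array(ids), np.array(caps)
    labels = np.array(labels, dtype=np.int64)

    # Split by image so both captions of an image land in the same split.
    uniq = np.unique(ids)
    np.random.RandomState(seed).shuffle(uniq)
    n_train = int(split[0] * len(uniq))
    n_val = int(split[1] * len(uniq))
    parts = {
        "train": uniq[:n_train],
        "val": uniq[n_train:n_train + n_val],
        "test": uniq[n_train + n_val:],
    }
    out = {}
    for name, members in parts.items():
        mask = np.isin(ids, members)
        out[name] = (ids[mask], caps[mask], labels[mask])
    return out
```

Splitting by image rather than by caption keeps the discriminator from seeing one caption of a test image during training.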

Note that we assume you have followed the steps in the Preparation section before running these commands. The script will create a folder data/proposals containing three .npy files with the data needed to train the metric. Use the following command to train the metric:

python scripts/score.py --name proposals

To run the setup from the original article:

python scripts/score.py --name neuraltalk

The results will be logged in the local_files/proposals_scoring directory. If you use the default model architecture, the results will be in local_files/proposals_scoring/mlp_1_img_1_512_0.txt.

All results can be visualised with:

python scripts/visulization.py --name "proposals" --show_plt "True" --save_plt "True"

And for the original article's setup:

python scripts/visulization.py --name "neuraltalk" --show_plt "True" --save_plt "True"

Both show_plt and save_plt default to False. A directory for storing the plots is created automatically.
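Flags such as show_plt, save_plt, --test and --train_test_val_split are passed as the strings "True" and "False". A common argparse pitfall is `type=bool`: `bool("False")` is True, so any non-empty string would switch the flag on. The sketch below shows one way to parse such flags; `str2bool` and `parse_args` are hypothetical helpers, not taken from visulization.py.

```python
import argparse


def str2bool(value):
    """Map "True"/"False" (any case, also yes/no and 1/0) to a bool."""
    v = value.strip().lower()
    if v in ("true", "yes", "1"):
        return True
    if v in ("false", "no", "0"):
        return False
    raise argparse.ArgumentTypeError("expected True or False, got %r" % value)


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Plot scoring results (sketch).")
    parser.add_argument("--name", default="proposals")
    # type=str2bool instead of type=bool, which would treat "False" as True
    parser.add_argument("--show_plt", type=str2bool, default=False)
    parser.add_argument("--save_plt", type=str2bool, default=False)
    return parser.parse_args(argv)
```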