Lately, `poetry update` has routinely been taking 60-90 minutes (around 5000 seconds). I've used Poetry in anger for 6 months now; before that, pip-tools and conda. Exceptionally long Poetry compile times feel like a new thing, but I have no hard evidence for this.

There are lots of open issues about this, but I haven't seen any systematic data collection:

- Poetry #2094 ("Poetry is extremely slow when resolving the dependencies") discusses how missing package metadata forces Poetry to download and then inspect packages for dependency resolution, along with some possible optimisations to the PyPI API. Some of the more recent comments suggest a possible uptick in macOS-related issues.

- Poetry #4924 ("Dependency resolution is extremely slow") covers much the same ground.

- Poetry #5194 ("Dependency resolution takes awfully long time") mentions issues with pandas and numpy, as well as querying PyPI for private packages. It doesn't mention any OS-specific differences.

- pip #9187 ("New resolver takes a very long time to complete") and pip #10201 ("Resolver performance regression in 21.2") document related problems in pip's own resolver, such as backtracking, along with possible optimizations.

As mentioned in Poetry's docs, one big issue is whether packages include metadata that declares their dependencies. If they do, the PyPI API can return all of this information to Poetry. If they don't, Poetry must download the package and inspect its `setup.py` to determine the dependencies it requires at install time.
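To check whether a given release exposes its dependencies through the PyPI API, you can query PyPI's JSON API (`https://pypi.org/pypi/<project>/<version>/json`) and look at the `info.requires_dist` field.

The sketch below is a rough illustration of that idea, not a description of how Poetry itself decides:

- A `null` value can mean either "no dependencies" or "metadata not available".
- Poetry's own fallback logic is more involved.
- `fetch_release_json` and `declared_dependencies` are hypothetical helper names.

```python
from __future__ import annotations

import json
from urllib.request import urlopen

# PyPI's JSON API endpoint for a single release.
PYPI_RELEASE_URL = "https://pypi.org/pypi/{name}/{version}/json"


def fetch_release_json(name: str, version: str) -> dict:
    """Fetch the PyPI JSON payload for one release (requires network access)."""
    url = PYPI_RELEASE_URL.format(name=name, version=version)
    with urlopen(url, timeout=30) as response:
        return json.load(response)


def declared_dependencies(payload: dict) -> list[str] | None:
    """Return the release's Requires-Dist entries, or None if PyPI lists none.

    None is ambiguous: the release may have no dependencies at all, or its
    dependency metadata may not be available, in which case a resolver may
    have to download the distribution and inspect it instead.
    """
    requires = payload.get("info", {}).get("requires_dist")
    return list(requires) if requires is not None else None
```

For example, with network access, `declared_dependencies(fetch_release_json("pandas", "1.4.0"))` should list the requirements that release declares.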

Dustin Ingram wrote a fantastic blog post explaining this. For example, `setup.py` may contain code that inspects the environment in order to install different dependencies on Windows vs macOS. This can be very reasonable, e.g. in deep learning libraries with architecture-dependent dependencies. PEP 517 helps with this.
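Here is a hypothetical illustration of why such dependencies can't always be served statically. The function mimics a `setup.py` that picks dependencies by inspecting the machine it runs on. Next to it is a static alternative using PEP 508 environment markers, a different standard from the PEP 517 mentioned above. The package choices (`pywin32`, `pyobjc`) are illustrative assumptions, not taken from any real project.

```python
from __future__ import annotations

import platform


def dynamic_install_requires(system: str | None = None) -> list[str]:
    """Mimic a setup.py that chooses dependencies by inspecting the machine.

    The list only exists after this code runs on a particular platform, so
    an index can't serve one static answer for every platform.
    """
    system = system or platform.system()  # e.g. "Windows", "Darwin", "Linux"
    deps = ["numpy"]
    if system == "Windows":
        deps.append("pywin32")  # illustrative Windows-only dependency
    elif system == "Darwin":
        deps.append("pyobjc")  # illustrative macOS-only dependency
    return deps


# Static alternative: PEP 508 environment markers. These strings can live in
# the package metadata as-is; the installer evaluates the markers later.
STATIC_REQUIRES = [
    "numpy",
    'pywin32; sys_platform == "win32"',
    'pyobjc; sys_platform == "darwin"',
]
```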

`poetry update` is now routinely taking over an hour. That's a 5000s delay for everyone on our team before they can push code to CI. We've removed our `poetry-lock` pre-commit hook to side-step this, but now we're seeing more CI failures and lock files that don't match `pyproject.toml`. Running all our pre-commit hooks (including `poetry lock --no-update`) used to take roughly the time it takes a kettle to boil, which was an acceptable (and welcome) break in our workflow.

I compared `poetry update` across two environments: a MacBook Pro (my local machine) vs Ubuntu (on Google Cloud Platform). Running `poetry update` in a fresh repo (with all caches cleared) took 3700s to resolve the dependencies on the MacBook Pro. On Ubuntu on GCP, it took 120s.
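Timings like these could be collected with a minimal sketch like the one below, assuming you simply wrap the command in wall-clock timing from Python. `time_command` is a hypothetical helper, and clearing Poetry's caches beforehand is left to you.

```python
from __future__ import annotations

import subprocess
import time


def time_command(cmd: list[str]) -> float:
    """Run cmd to completion and return its wall-clock duration in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return time.perf_counter() - start
```

For example, `time_command(["poetry", "update"])` run from the project directory in each environment.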

Ubuntu running in GCP runs poetry update 30x faster than my local machine!

That's a huge difference! There are three likely culprits:

- Network speed

- Ubuntu vs macOS

- Hardware architecture

Network speed is definitely a factor. It's mentioned in the Poetry GitHub issues and explains most of the difference in dependency resolution time. The Ubuntu GCP machine was clocking 2300 Mbps download speed via speedtest, while my local machine was clocking 80 Mbps. That ~29x gap in bandwidth lines up with the ~30x difference in `poetry update` resolution time.
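As a back-of-the-envelope check: if resolution were purely bandwidth-bound, its time would scale inversely with download speed. Plugging in the figures quoted above (no new measurements):

```python
# Figures quoted above; nothing here is a new measurement.
mac_seconds, gcp_seconds = 3700, 120  # poetry update wall-clock time
mac_mbps, gcp_mbps = 80, 2300  # speedtest download speed

time_ratio = mac_seconds / gcp_seconds  # how much slower the MacBook was
bandwidth_ratio = gcp_mbps / mac_mbps  # how much more bandwidth GCP had

print(f"time ratio: {time_ratio:.1f}x, bandwidth ratio: {bandwidth_ratio:.1f}x")
# time ratio: 30.8x, bandwidth ratio: 28.8x
```

The two ratios are close. That is consistent with bandwidth being the dominant factor, though it doesn't prove it.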

You can see why by running `poetry update -vvv` on, e.g., a simple pandas-only environment: the output clearly shows repeated network calls to the PyPI API. Even so, the PyPI API itself can be quite slow. A step like `PyPI: Getting info for pytz (2021.3) from PyPI` isn't downloading packages; it's just an API call, but it still takes 10s on my local network. Steps like `PyPI: Downloading sdist: numpy-1.22.2.zip`, which download multiple versions of numpy for dependency inspection, account for the bulk of the 90 seconds spent resolving a pandas-only environment.
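To quantify this from a verbose run, a small script could tally the two kinds of log lines quoted above. This is a sketch. The regular expressions are assumptions based only on those two example lines, and other Poetry versions may word their output differently. `summarise_verbose_log` is a hypothetical name.

```python
import re
from collections import Counter

# Patterns based on the two -vvv log lines quoted above.
GETTING_INFO = re.compile(r"PyPI: Getting info for (\S+) \(([^)]+)\) from PyPI")
DOWNLOADING_SDIST = re.compile(r"PyPI: Downloading sdist: (\S+)")


def summarise_verbose_log(log_text: str) -> Counter:
    """Count PyPI API lookups vs sdist downloads in poetry -vvv output."""
    counts = Counter()
    for line in log_text.splitlines():
        if GETTING_INFO.search(line):
            counts["api_lookups"] += 1
        elif DOWNLOADING_SDIST.search(line):
            counts["sdist_downloads"] += 1
    return counts
```

For example, capture a run with `poetry update -vvv 2>&1 | tee poetry.log` and pass the file's contents to `summarise_verbose_log`.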

🔥 Tip #1: Faster internet might give you much faster Poetry performance. (Going from 70 Mbps to 400 Mbps by plugging in an ethernet cable reduced my dependency resolution times considerably.)

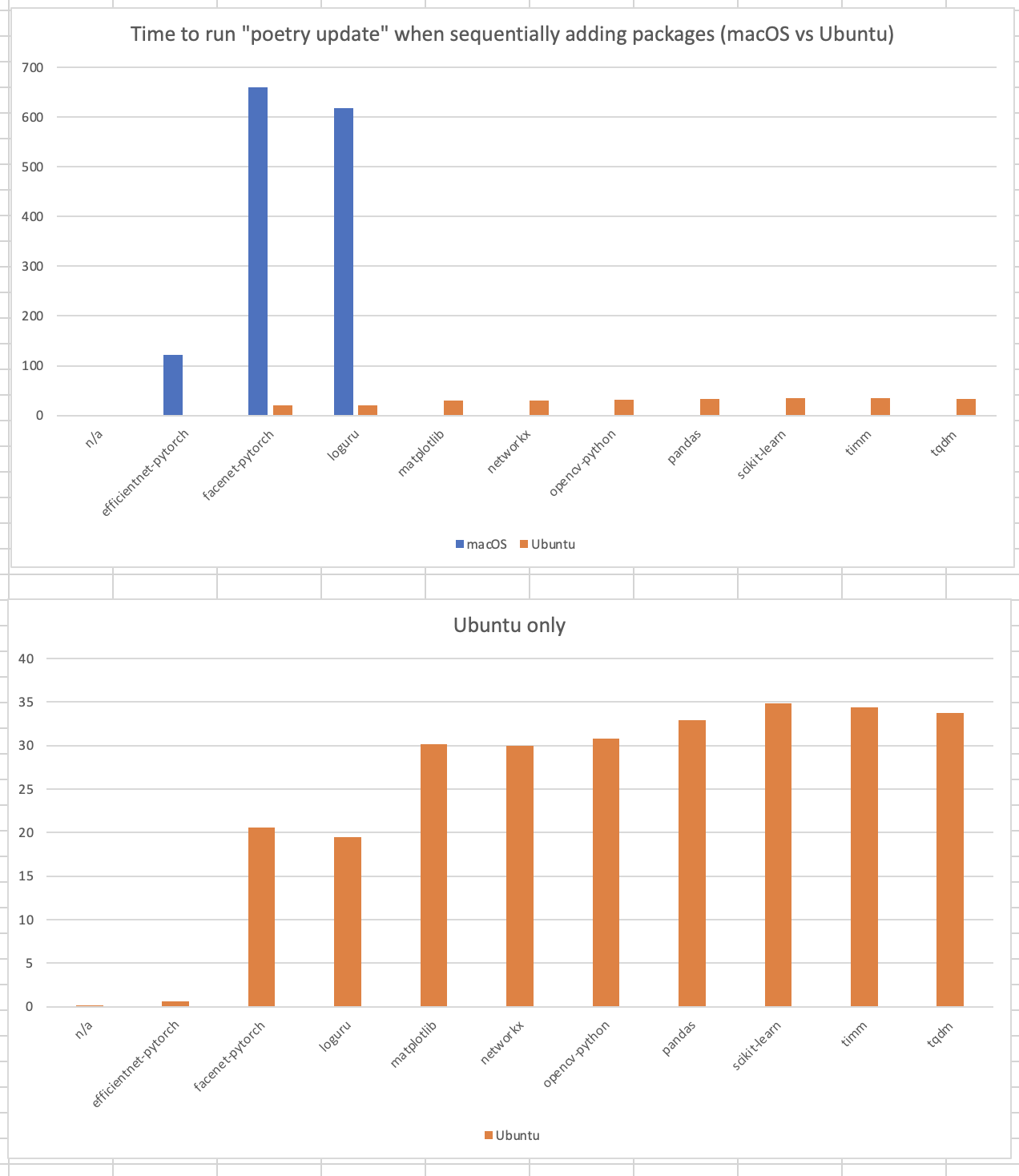

The Poetry docs suggest there could be OS-level differences in `setup.py` that cause variation between the environments. Perhaps there's a "problem" package that resolves differently on macOS? I added packages one-by-one into each environment, timing every step of the way. Here are the results.

So it seems that including facenet-pytorch in the environment causes a step change in macOS dependency resolution time (100s to 600s), so something about this package is definitely slowing things down considerably.

This is not hugely important though. Installing an environment that only contains pandas takes 10s on Ubuntu vs 80s on macOS, so macOS is much slower than the GCP Ubuntu box regardless of the package we're adding.
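The one-by-one procedure above could be scripted roughly like this, assuming each step is a `poetry add <package>` (which re-resolves and updates the lock file). `poetry_add` and `incremental_timings` are hypothetical names. The `add` parameter lets you swap in a different command.

```python
from __future__ import annotations

import subprocess
import time
from typing import Callable, Sequence


def poetry_add(package: str) -> None:
    """Add one package with Poetry, which triggers a fresh resolve."""
    subprocess.run(["poetry", "add", package], check=True)


def incremental_timings(
    packages: Sequence[str],
    add: Callable[[str], None] = poetry_add,
) -> list[tuple[str, float]]:
    """Add packages one at a time, recording how long each addition takes."""
    timings = []
    for package in packages:
        start = time.perf_counter()
        add(package)
        timings.append((package, time.perf_counter() - start))
    return timings
```

Running `incremental_timings(["pandas", "facenet-pytorch"])` on each machine would produce per-package timings that can be compared side by side.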

To check whether macOS itself is the problem, this GitHub repository has GitHub Actions configured to run `poetry update` on an identical `pyproject.toml` on both Ubuntu and macOS (GitHub Actions is pretty cool!).

The results: Ubuntu takes 125 seconds to lock this pyproject.toml whereas macOS takes 145 seconds.
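For reference, the relative slowdown in this run works out as follows. The 145s and 125s figures come from the run above; `percent_slower` is a hypothetical helper.

```python
def percent_slower(slow_seconds: float, fast_seconds: float) -> float:
    """Return how much longer slow_seconds is than fast_seconds, in percent."""
    return (slow_seconds - fast_seconds) * 100 / fast_seconds


# The GitHub Actions run above: macOS 145 s vs Ubuntu 125 s.
print(f"macOS was {percent_slower(145, 125):.0f}% slower")  # macOS was 16% slower
```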

- Lesson #1: Both are very fast. We're talking a couple of minutes for an environment that takes over 5000s to compile on my local machine.

- Lesson #2: Using GitHub Actions, macOS is still between 15% and 50% slower than Ubuntu across different runs. There might be something in this?

- Action #1: Network speed is critical to `poetry update` time. If you can use ethernet to boost your speed from 50 Mbps to 300 Mbps, you might see a 6x reduction in Poetry's resolution time.

- Action #2: Some packages are much slower. I'm curious to know more about when metadata cannot be included. Is there a gap in PEP standards that cannot be closed for some packages? Or does this require some effort to add the right metadata to these packages? Is it worth building something like https://pyreadiness.org/3.10/ for "has PyPI-API-friendly metadata"?

- Action #3: Use Poetry in CI!

Right now, this is a killer productivity win for us! (And our clients.) 🔥🚀

This repo contains a GitHub Action, `poetry-lock-export.yml`, that runs `poetry update`/`lock`/`export` against `pyproject.toml`. Simply copy it into your own repo's `.github/workflows/` folder and you're good to go!

This is cumbersome, but let's remember it's much faster than waiting 5000s to do poetry add pandas locally!

- Check the latest package version on PyPI, e.g. for pandas we'd add `pandas = "^1.4.0"` to `pyproject.toml`.

- Commit, run pre-commit (our `poetry-lock` hook is manual-stage only, so it won't run here), and push.

- Run your new Poetry lock and export GitHub Action against the branch you're working on and put the kettle on. Within 2 minutes, it will have run `poetry update`, `poetry lock` and `poetry export`, and sent you a Pull Request (against that same branch) to merge into your working branch.
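The first step could be sketched as below. This assumes PyPI's JSON API (`https://pypi.org/pypi/<project>/json`, whose `info.version` field holds the latest release) and Poetry's caret syntax. `latest_version` and `caret_requirement` are hypothetical helpers, and you'd still paste the result into `[tool.poetry.dependencies]` yourself.

```python
import json
from urllib.request import urlopen


def latest_version(name: str) -> str:
    """Latest release version reported by PyPI's JSON API (needs network)."""
    with urlopen(f"https://pypi.org/pypi/{name}/json", timeout=30) as response:
        return json.load(response)["info"]["version"]


def caret_requirement(name: str, version: str) -> str:
    """Format a Poetry caret constraint line, e.g. for pyproject.toml."""
    return f'{name} = "^{version}"'
```

For example, `caret_requirement("pandas", latest_version("pandas"))` would produce a line in the same shape as the `pandas = "^1.4.0"` example.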

I'm writing this up because a) this doesn't feel at all correct, b) perhaps someone can help us find sanity, and c) until then, I hope this workaround helps save people a huge amount of time.

I'm now curious to learn more about dependency resolution algorithms; it's clearly a hard problem with hidden complexities! Perhaps this investigation will inspire you to learn more too? Perhaps, like me, you could:

- 📦 Attend the next Python Packaging Summit?

- 🪶 Try to adapt Airspeed Velocity for consistently measuring dependency resolution algorithms in tools like pip and Poetry?

- 💵 Be paid to fix this! The Python Software Foundation is looking to pay 1x backend developer and 1x frontend developer up to $98,000 each to work on improving PyPI.

This repo was created using the Coefficient Cookiecutter.

- pre-commit: `pre-commit run --all-files`

- pytest: `pytest` or `pytest -s`

- coverage: `coverage run -m pytest` or `coverage html`

- poetry sync: `poetry install --no-root --remove-untracked`

- updating requirements: see docs/updating_requirements.md

- create towncrier entry: `towncrier create 123.added --edit`

- See docs/getting_started.md or docs/quickstart.md for how to get up & running.

- Check docs/project_specific_setup.md for project specific setup.

- See docs/using_poetry.md for how to update Python requirements using Poetry.

- See docs/detect_secrets.md for more on creating a `.secrets.baseline` file using detect-secrets.

- See docs/using_towncrier.md for how to update the `CHANGELOG.md` using towncrier.