This repository contains project code that applies three deep reinforcement learning algorithms (DDPG, TD3 and A2C) to the Reacher environment, a continuous-control task provided by Unity Technologies. It was written to fulfil a requirement of the Udacity Deep Reinforcement Learning Nanodegree.

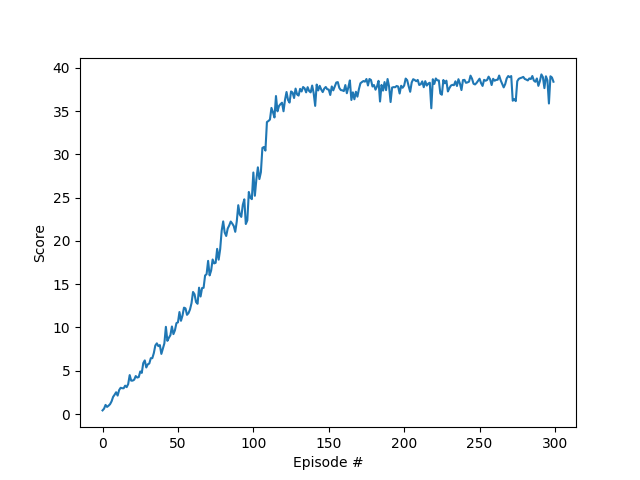

The state is a 33-dimensional observation that includes the agent's velocity and ray-based perception of objects, among other quantities. The agent has to learn to choose the best action for the state it is in. The action space is 4-dimensional and continuous, representing the torques applied to the joints of a double-jointed robot arm. The agent's goal is to track a moving target for as many time steps as possible: it earns a reward of +0.1 for each step in which it successfully tracks the target. The environment is considered solved when the average return over 100 consecutive episodes reaches 30.
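The solve criterion can be checked with a rolling window over episode returns. The following is a minimal sketch, not the code used in this repository; the class name `SolveTracker` and its parameters are hypothetical, and the defaults (a target of 30 over a window of 100 episodes) are taken from the description above.

```python
from collections import deque


class SolveTracker:
    """Keep the most recent episode returns and report when their mean reaches a target."""

    def __init__(self, target=30.0, window=100):
        self.target = target
        self.window = window
        # deque(maxlen=...) silently drops the oldest return once the window is full.
        self.returns = deque(maxlen=window)

    def add(self, episode_return):
        """Record one episode return and say whether the criterion is now met."""
        self.returns.append(episode_return)
        return self.solved()

    def average(self):
        """Mean of the stored returns (0.0 before any episode is recorded)."""
        return sum(self.returns) / len(self.returns) if self.returns else 0.0

    def solved(self):
        # Require a full window so a few lucky early episodes do not count as solved.
        return len(self.returns) == self.window and self.average() >= self.target
```

In a training loop, one would call `tracker.add(score)` at the end of each episode and stop once it returns `True`.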

All three algorithms solved the environment; A2C appears to perform best.

To set up your Python environment to run the code in this repository, follow the instructions below.

- Create (and activate) a new environment with Python 3.6.

  - Linux or Mac:

        conda create --name drlnd python=3.6
        source activate drlnd

  - Windows:

        conda create --name drlnd python=3.6
        activate drlnd

- Clone the repository (if you haven't already!), and navigate to the `python/` folder. Then, install several dependencies.

      git clone https://github.com/udacity/deep-reinforcement-learning.git
      cd deep-reinforcement-learning/python
      pip install .

- Create an IPython kernel for the `drlnd` environment.

      python -m ipykernel install --user --name drlnd --display-name "drlnd"

- Before running code in a notebook, change the kernel to match the `drlnd` environment by using the drop-down `Kernel` menu.

- Download the environment. There are two versions below, both built specifically for this project (they are not part of the Unity ML-Agents package). Place the downloaded file in the root folder and unzip it.

  For the first version:

  - Linux: click here
  - Mac: click here
  - Windows (32-bit): click here
  - Windows (64-bit): click here

  For the second version:

  - Linux: click here
  - Mac: click here
  - Windows (32-bit): click here
  - Windows (64-bit): click here
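If you prefer to unzip the download from Python rather than with a file manager, a sketch along the following lines should work. The function name `unzip_environment` is hypothetical, and the archive name depends on which version and OS you downloaded, so it is passed in rather than assumed.

```python
import zipfile
from pathlib import Path


def unzip_environment(archive_path, dest_dir="."):
    """Extract a downloaded environment archive into dest_dir.

    Returns the top-level files or folders that the archive contained.
    """
    dest_dir = Path(dest_dir)
    with zipfile.ZipFile(str(archive_path)) as zf:
        zf.extractall(str(dest_dir))
        # The first path component of every member gives the top-level entries.
        top_level = sorted({name.split("/")[0] for name in zf.namelist()})
    return [dest_dir / name for name in top_level]
```

One caveat: `zipfile` may not restore executable permission bits on Linux or Mac, so the operating system's own unzip tool can be the safer choice there.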

- Import the environment in a Jupyter notebook under the `drlnd` environment.

      from unityagents import UnityEnvironment

      env = UnityEnvironment(file_name="[to be replaced with file below depending on OS]")

Replace the file name with the following depending on OS:

- Mac: "Reacher.app"

- Windows (x86): "Reacher_Windows_x86/Reacher.exe"

- Windows (x86_64): "Reacher_Windows_x86_64/Reacher.exe"

- Linux (x86): "Reacher_Linux/Reacher.x86"

- Linux (x86_64): "Reacher_Linux/Reacher.x86_64"

- Linux (x86, headless): "Reacher_Linux_NoVis/Reacher.x86"

- Linux (x86_64, headless): "Reacher_Linux_NoVis/Reacher.x86_64"
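The choice of file name can also be made programmatically. The sketch below maps the current platform onto the names listed above; the helper `reacher_file_name` is hypothetical, and detecting a 64-bit system from `platform.machine()` is a heuristic assumption that may need adjusting on your machine.

```python
import platform

# File names copied from the list above, keyed by (system, is_64bit).
_REACHER_FILES = {
    ("Darwin", False): "Reacher.app",
    ("Darwin", True): "Reacher.app",
    ("Windows", False): "Reacher_Windows_x86/Reacher.exe",
    ("Windows", True): "Reacher_Windows_x86_64/Reacher.exe",
    ("Linux", False): "Reacher_Linux/Reacher.x86",
    ("Linux", True): "Reacher_Linux/Reacher.x86_64",
}


def reacher_file_name(system=None, is_64bit=None, headless=False):
    """Return the Reacher file name for the given (or detected) platform."""
    if system is None:
        system = platform.system()
    if is_64bit is None:
        # Heuristic (an assumption): machine names ending in "64" are 64-bit.
        is_64bit = platform.machine().endswith("64")
    key = (system, bool(is_64bit))
    if key not in _REACHER_FILES:
        raise ValueError("no Reacher build listed for {!r}".format(system))
    name = _REACHER_FILES[key]
    if headless:
        # The list above only includes headless (NoVis) builds for Linux.
        if system != "Linux":
            raise ValueError("headless builds are listed for Linux only")
        name = name.replace("Reacher_Linux/", "Reacher_Linux_NoVis/")
    return name
```

The result can then be passed straight to the constructor, for example `UnityEnvironment(file_name=reacher_file_name())`.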

Load the Jupyter notebook Report.ipynb and run all cells.
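Before running the full notebook, it can help to confirm that the environment loads and steps correctly. The sketch below plays one episode with uniformly random actions through the `unityagents` interface (`brain_names`, `reset`, `step`, `vector_observations`, `rewards`, `local_done`). The function name `run_random_episode` and the `max_steps` cap are hypothetical, and the action range of [-1, 1] is an assumption that this README does not state.

```python
import random


def run_random_episode(env, action_size=4, max_steps=1000, seed=0):
    """Play one episode with random actions and return one score per agent."""
    rng = random.Random(seed)
    brain_name = env.brain_names[0]
    env_info = env.reset(train_mode=True)[brain_name]
    num_agents = len(env_info.vector_observations)
    scores = [0.0] * num_agents
    for _ in range(max_steps):
        # One 4-dimensional torque vector per agent, drawn from the assumed [-1, 1] range.
        actions = [[rng.uniform(-1.0, 1.0) for _ in range(action_size)]
                   for _ in range(num_agents)]
        env_info = env.step(actions)[brain_name]
        scores = [s + r for s, r in zip(scores, env_info.rewards)]
        # Stop as soon as any agent reports the end of its episode.
        if any(env_info.local_done):
            break
    return scores
```

With an environment created as shown earlier, `print(run_random_episode(env))` should print scores close to zero, since random torques rarely track the target. Call `env.close()` when finished.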