The World's Leading Cross Platform AI Engine for Edge Devices, with over 10 million installs on Docker Hub.

Website: https://deepstack.cc

Documentation: https://docs.deepstack.cc

Forum: https://forum.deepstack.cc

Dev Center: https://dev.deepstack.cc

DeepStack is owned and maintained by DeepQuest AI.

DeepStack is an AI API engine that serves pre-built models and custom models on multiple edge devices locally or on your private cloud. Supported platforms are:

- Linux OS via Docker (CPU and NVIDIA GPU support)

- Mac OS via Docker

- Windows 10 (native application, CPU and GPU)

- NVIDIA Jetson via Docker

- Raspberry Pi & ARM64 devices via Docker

DeepStack runs completely offline and independent of the cloud. You can also install and run DeepStack on any cloud VM with Docker installed to serve as your private, state-of-the-art, real-time AI server.

-

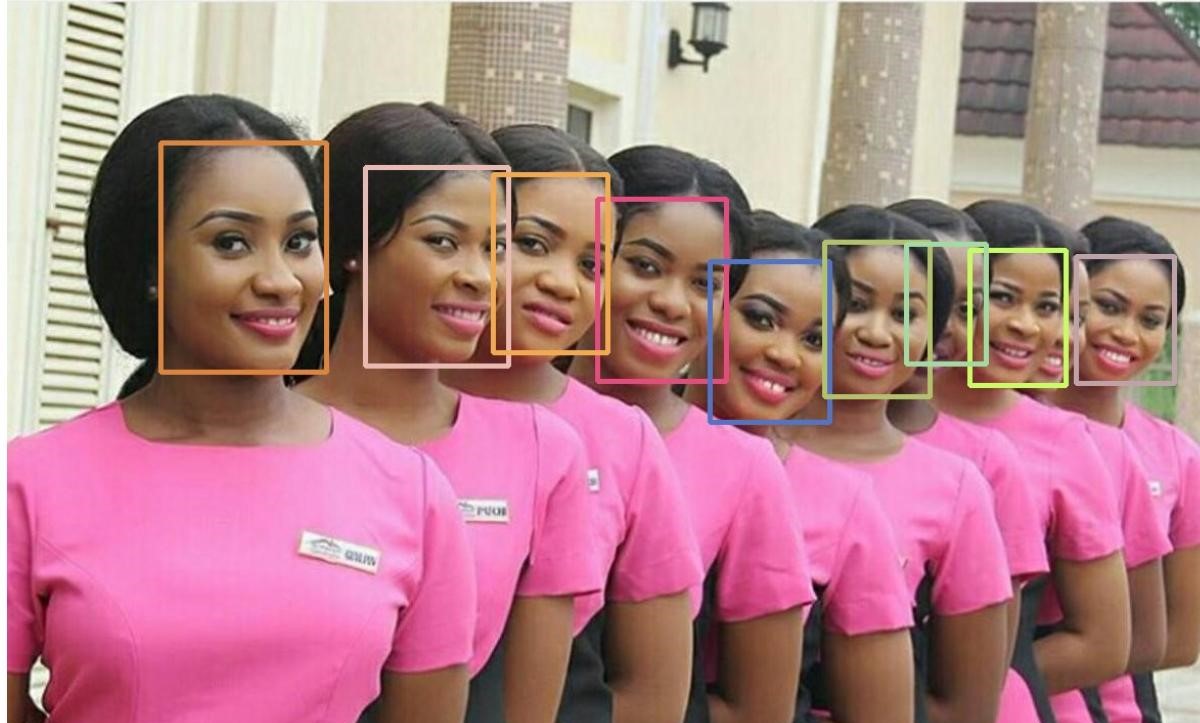

Face APIs: Face detection, recognition and matching.

-

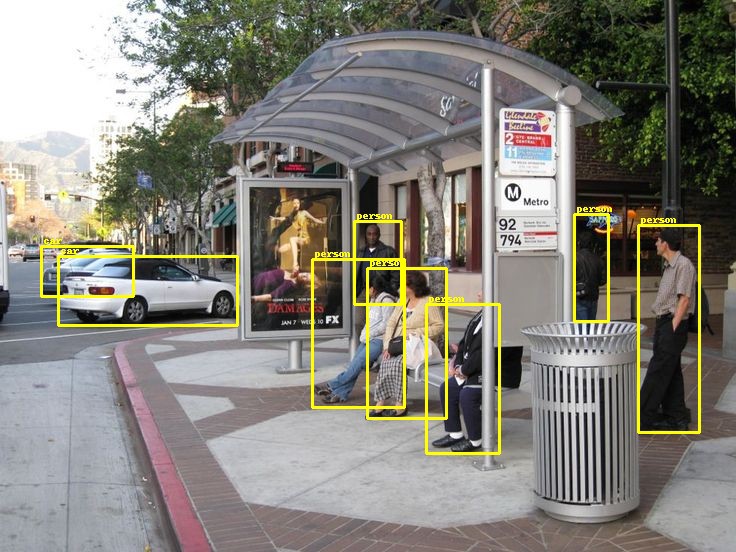

Common Objects APIs: Object detection for 80 common objects

-

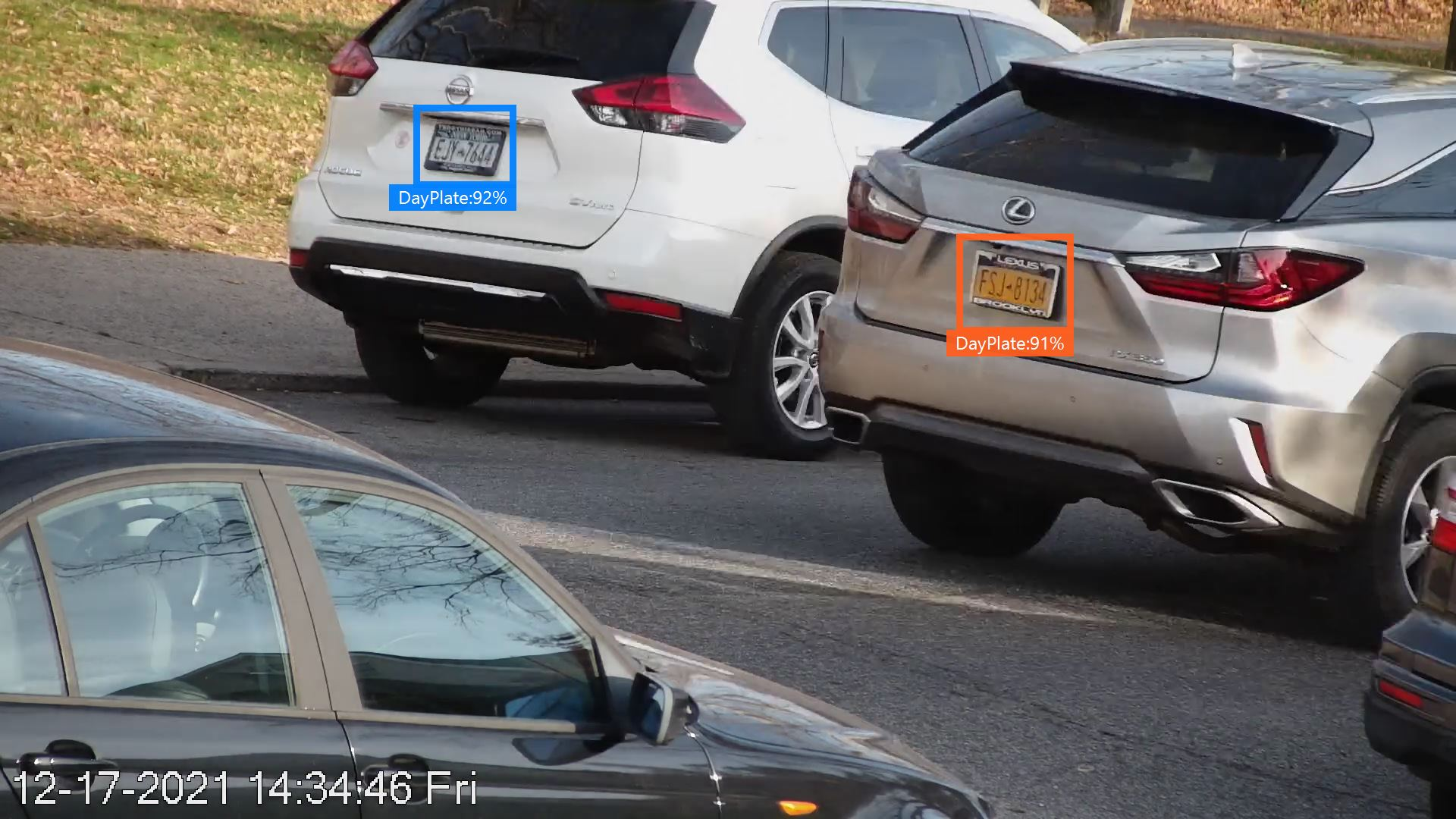

Custom Models: Train and deploy new models to detect any custom object(s)

-

Image Enhance: 4X image superresolution

Input -

Scene Recognition: Image scene recognition

-

SSL Support

-

API Key support: Security options to protect your DeepStack endpoints
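As a rough illustration of how a client might consume the Common Objects API, the sketch below filters a detection-style response by confidence. The response shape (a `predictions` list whose entries carry `label`, `confidence` and bounding-box fields) is an assumption based on DeepStack's public documentation, not something stated in this README. `confident_labels` is a hypothetical helper and the sample values are made up.

```python
import json

# Sample payload shaped like an object-detection response.
# ASSUMPTION: field names follow DeepStack's documented detection output;
# the values are invented purely for illustration.
SAMPLE_RESPONSE = json.loads("""
{
  "success": true,
  "predictions": [
    {"label": "person", "confidence": 0.93,
     "x_min": 10, "y_min": 20, "x_max": 110, "y_max": 220},
    {"label": "dog", "confidence": 0.41,
     "x_min": 150, "y_min": 90, "x_max": 260, "y_max": 200}
  ]
}
""")


def confident_labels(response, min_confidence=0.5):
    """Return labels of predictions at or above min_confidence (hypothetical helper)."""
    if not response.get("success"):
        return []
    return [
        prediction["label"]
        for prediction in response.get("predictions", [])
        if prediction["confidence"] >= min_confidence
    ]


if __name__ == "__main__":
    print(confident_labels(SAMPLE_RESPONSE))
```

Filtering on the client side keeps the threshold adjustable per application without changing anything on the server.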

Visit https://docs.deepstack.cc/getting-started for installation instructions. The documentation provides example code for the following programming languages, with more to be added soon.

- Python

- C#

- NodeJS
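For orientation, here is a minimal Python sketch of how a client could prepare an image upload using only the standard library. It builds the request but does not send it. The endpoint path `/v1/vision/detection` and the form field names `image` and `api_key` are assumptions taken from DeepStack's public documentation, not from this README, and both function names are hypothetical.

```python
import uuid
import urllib.request


def encode_multipart(fields, files):
    """Encode text fields and binary files as multipart/form-data.

    Returns a (body_bytes, content_type_header) tuple.
    """
    boundary = uuid.uuid4().hex
    lines = []
    for name, value in fields.items():
        lines.append(f"--{boundary}".encode())
        lines.append(f'Content-Disposition: form-data; name="{name}"'.encode())
        lines.append(b"")
        lines.append(str(value).encode())
    for name, (filename, data) in files.items():
        lines.append(f"--{boundary}".encode())
        lines.append(
            f'Content-Disposition: form-data; name="{name}"; '
            f'filename="{filename}"'.encode()
        )
        lines.append(b"Content-Type: application/octet-stream")
        lines.append(b"")
        lines.append(data)
    lines.append(f"--{boundary}--".encode())
    lines.append(b"")
    return b"\r\n".join(lines), f"multipart/form-data; boundary={boundary}"


def build_detection_request(image_bytes, base_url="http://localhost:5000", api_key=None):
    """Build (but do not send) a POST request for object detection.

    ASSUMPTIONS: the endpoint path and the "image" / "api_key" field names
    come from DeepStack's public docs; adjust them to your deployment.
    """
    fields = {"api_key": api_key} if api_key else {}
    body, content_type = encode_multipart(fields, {"image": ("image.jpg", image_bytes)})
    return urllib.request.Request(
        base_url + "/v1/vision/detection",
        data=body,
        headers={"Content-Type": content_type},
        method="POST",
    )
```

With a server running, the request could be sent with `urllib.request.urlopen(build_detection_request(open("image.jpg", "rb").read()))`. The official examples in the documentation remain the authoritative reference.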

- Install Prerequisites

- Clone DeepStack Repo

  git clone https://github.com/johnolafenwa/DeepStack.git

- CD to DeepStack Repo Dir

  cd DeepStack

- Fetch Repo Files

  git lfs pull

- Download Binary Dependencies With PowerShell

  .\download_dependencies.ps1

- Build DeepStack CPU Version

  cd .. && sudo docker build -t deepquestai/deepstack:cpu . -f Dockerfile.cpu

- Build DeepStack GPU Version

  sudo docker build -t deepquestai/deepstack:gpu . -f Dockerfile.gpu

- Build DeepStack Jetson Version

  sudo docker build -t deepquestai/deepstack:jetpack . -f Dockerfile.gpu-jetpack
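The three build commands above differ only in image tag and Dockerfile, so a build script could derive them from a small table. The following is a sketch of that idea, not part of the DeepStack repository. `VARIANTS` and `docker_build_command` are hypothetical names, and the tags and Dockerfile names are copied from the steps above.

```python
import shlex

# Image tags and Dockerfiles exactly as listed in the build steps.
VARIANTS = {
    "cpu": ("deepquestai/deepstack:cpu", "Dockerfile.cpu"),
    "gpu": ("deepquestai/deepstack:gpu", "Dockerfile.gpu"),
    "jetson": ("deepquestai/deepstack:jetpack", "Dockerfile.gpu-jetpack"),
}


def docker_build_command(variant, use_sudo=True):
    """Return the argv list for building one DeepStack image variant."""
    if variant not in VARIANTS:
        raise ValueError(
            f"unknown variant {variant!r}; expected one of {sorted(VARIANTS)}"
        )
    tag, dockerfile = VARIANTS[variant]
    command = ["docker", "build", "-t", tag, ".", "-f", dockerfile]
    return ["sudo"] + command if use_sudo else command


if __name__ == "__main__":
    # Print each command; pass the list to subprocess.run to execute it.
    for name in VARIANTS:
        print(shlex.join(docker_build_command(name)))
```

Returning an argv list rather than a shell string avoids quoting issues if the command is later handed to `subprocess.run`.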

Running and Testing Locally Without Building

- Unless you wish to install requirements system wide, create a virtual environment with

  python3.7 -m venv venv

  and activate with

  source venv/bin/activate

- Install Requirements with

  pip3 install -r requirements.txt

- For CPU Version, Install PyTorch with

  pip3 install torch==1.6.0+cpu torchvision==0.7.0+cpu -f https://download.pytorch.org/whl/torch_stable.html

- For GPU Version, Install PyTorch with

  pip3 install torch==1.6.0+cu101 torchvision==0.7.0+cu101 -f https://download.pytorch.org/whl/torch_stable.html

- Start PowerShell

  pwsh

- For CPU Version, Run

  .\setup_docker_cpu.ps1

- For GPU Version, Run

  .\setup_docker_gpu.ps1

- CD To Server Dir

  cd server

- Build DeepStack Server

  go build

- Set any of the APIs to enable:

  - $env:VISION_DETECTION = "True"

  - $env:VISION_FACE = "True"

  - $env:VISION_SCENE = "True"

- Run DeepStack

  .\server

You can find all logs in the directory in the repo root. Note that DeepStack will be running on the default port 5000.
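The local-run steps name three environment flags and a default port of 5000. A small helper like the one below could report which APIs a shell session has enabled and build the matching base URL. This is only a sketch: treating the exact string "True" as "enabled" and using `localhost` as the host are assumptions, and both function names are hypothetical.

```python
import os

# Environment variables named in the steps above; each enables one API group.
API_FLAGS = ("VISION_DETECTION", "VISION_FACE", "VISION_SCENE")
DEFAULT_PORT = 5000  # default port stated in the run instructions


def enabled_apis(env=None):
    """Return the flags set to "True" in the given environment mapping.

    ASSUMPTION: only the exact string "True" counts as enabled.
    """
    env = os.environ if env is None else env
    return [flag for flag in API_FLAGS if env.get(flag) == "True"]


def base_url(host="localhost", port=DEFAULT_PORT):
    """Build the base URL for a locally running server (host is an assumption)."""
    return f"http://{host}:{port}"


if __name__ == "__main__":
    print("Enabled APIs:", enabled_apis())
    print("Server URL:", base_url())
```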

The DeepStack ecosystem includes a number of popular integrations and libraries built to extend the functionality of the AI engine for IoT, industrial, monitoring and research applications. Some of them are listed below:

- HASS-DeepStack-Object: A Home Assistant add-on by Robin Cole for detecting common and custom objects

- HASS-DeepStack-Face: A Home Assistant add-on by Robin Cole for face detection, registration and recognition

- HASS-DeepStack-Scene: A Home Assistant add-on by Robin Cole for scene recognition

- DeepStack with Blue Iris - YouTube video: A DeepStack + Blue Iris setup tutorial by TheHookUp YouTube channel

- Blue Iris + Deepstack BUILT IN! Full Walk Through: Another, more recent DeepStack + Blue Iris setup tutorial by TheHookUp YouTube channel

- DeepStack with Blue Iris - Forum Discussion: A comprehensive DeepStack discussion thread on the IPCamTalk website

- DeepStack on Home Assistant: A comprehensive DeepStack discussion thread on the Home Assistant forum website

- DeepStack-UI: A Streamlit app by Robin Cole for exploring DeepStack's features

- DeepStack-Python Helper: A Python client library by Robin Cole for DeepStack APIs

- DeepStack-Analytics: An analytics tool by Robin Cole for exploring DeepStack's APIs

- DeepStackAI Trigger: A DeepStack automation system integration with MQTT and Telegram support by Neil Enns

- node-red-contrib-deepstack: A Node-RED integration for all DeepStack APIs by Joakim Lundin

- DeepStack_USPS: A custom DeepStack model for detecting the USPS logo by Stephen Stratoti

- Agent DVR: A DVR platform with DeepStack integrations built by Sean Tearney

- On-Guard: A security camera application for HTTP, ONVIF and FTP with DeepStack integrations by Ken
