The Coworking Space Service is a set of APIs that enables users to request one-time tokens and administrators to authorize access to a coworking space. This service follows a microservice pattern and the APIs are split into distinct services that can be deployed and managed independently of one another.

For this project, you are a DevOps engineer collaborating with a team that is building an API for business analysts. The API provides business analysts with basic analytics data on user activity in the service. The application they provide works as expected locally, and your task is to help build a pipeline that deploys it to Kubernetes.

- Python Environment - run Python 3.6+ applications and install Python dependencies via `pip`

- Docker CLI - build and run Docker images locally

- `kubectl` - run commands against a Kubernetes cluster

- `helm` - apply Helm Charts to a Kubernetes cluster

- AWS CodeBuild - build Docker images remotely

- AWS ECR - host Docker images

- Kubernetes Environment with AWS EKS - run applications in k8s

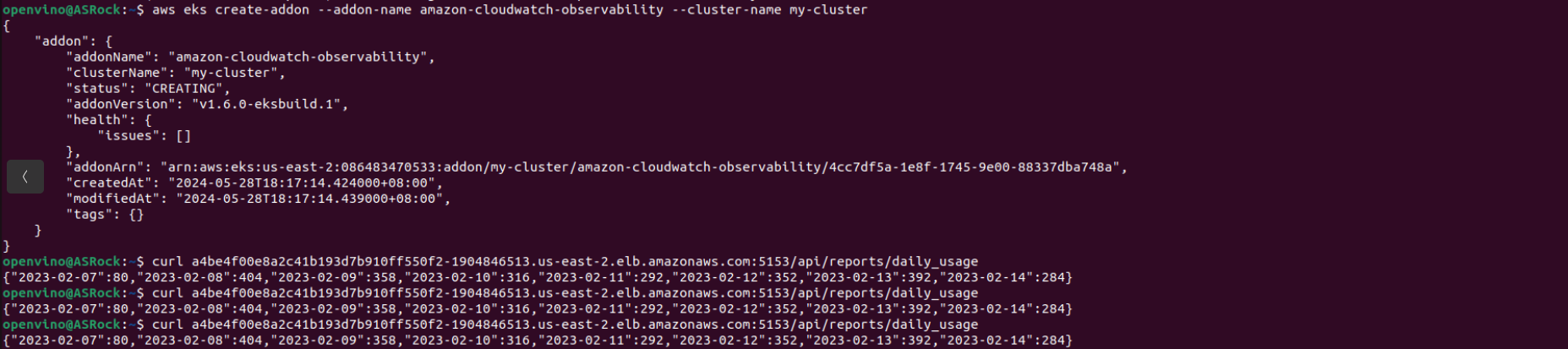

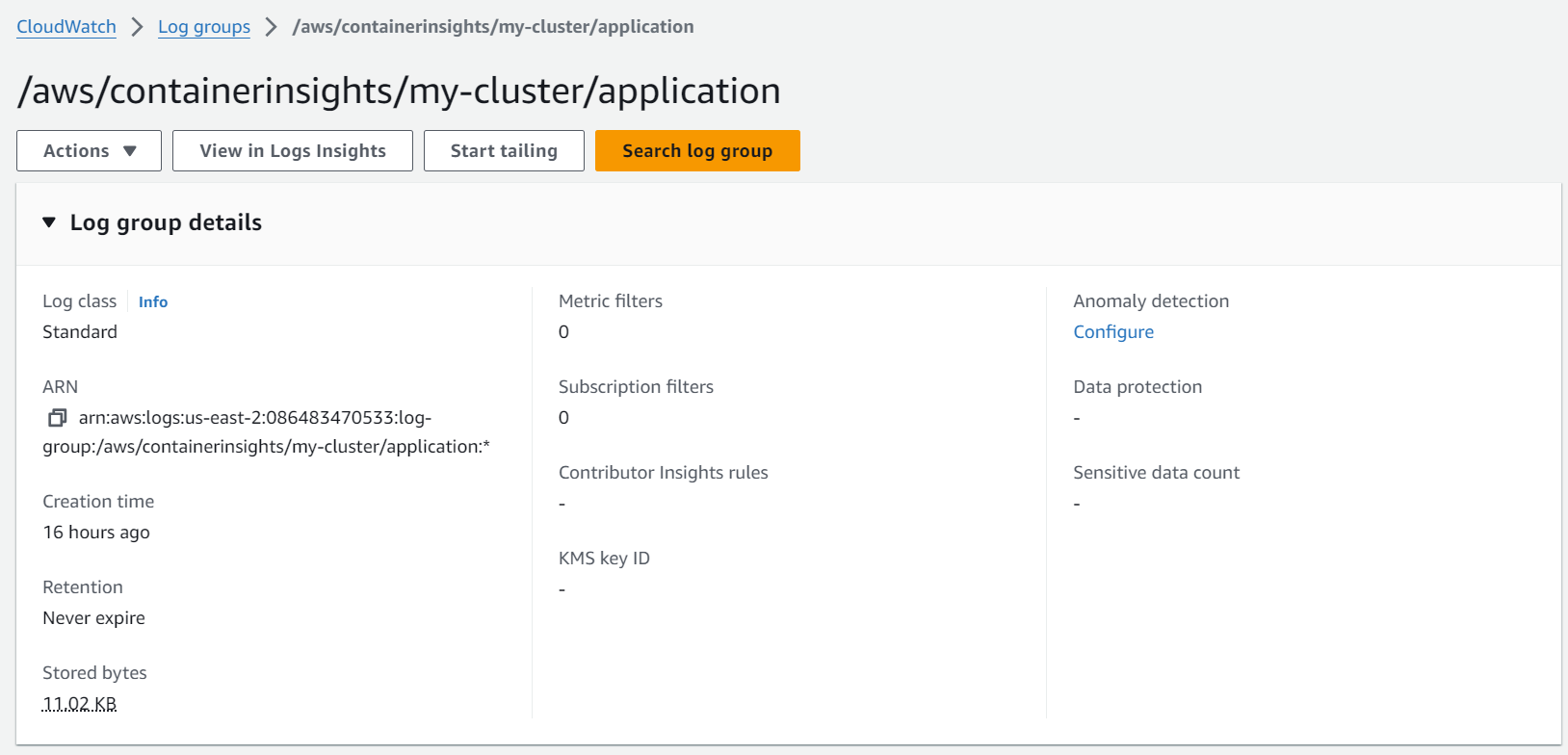

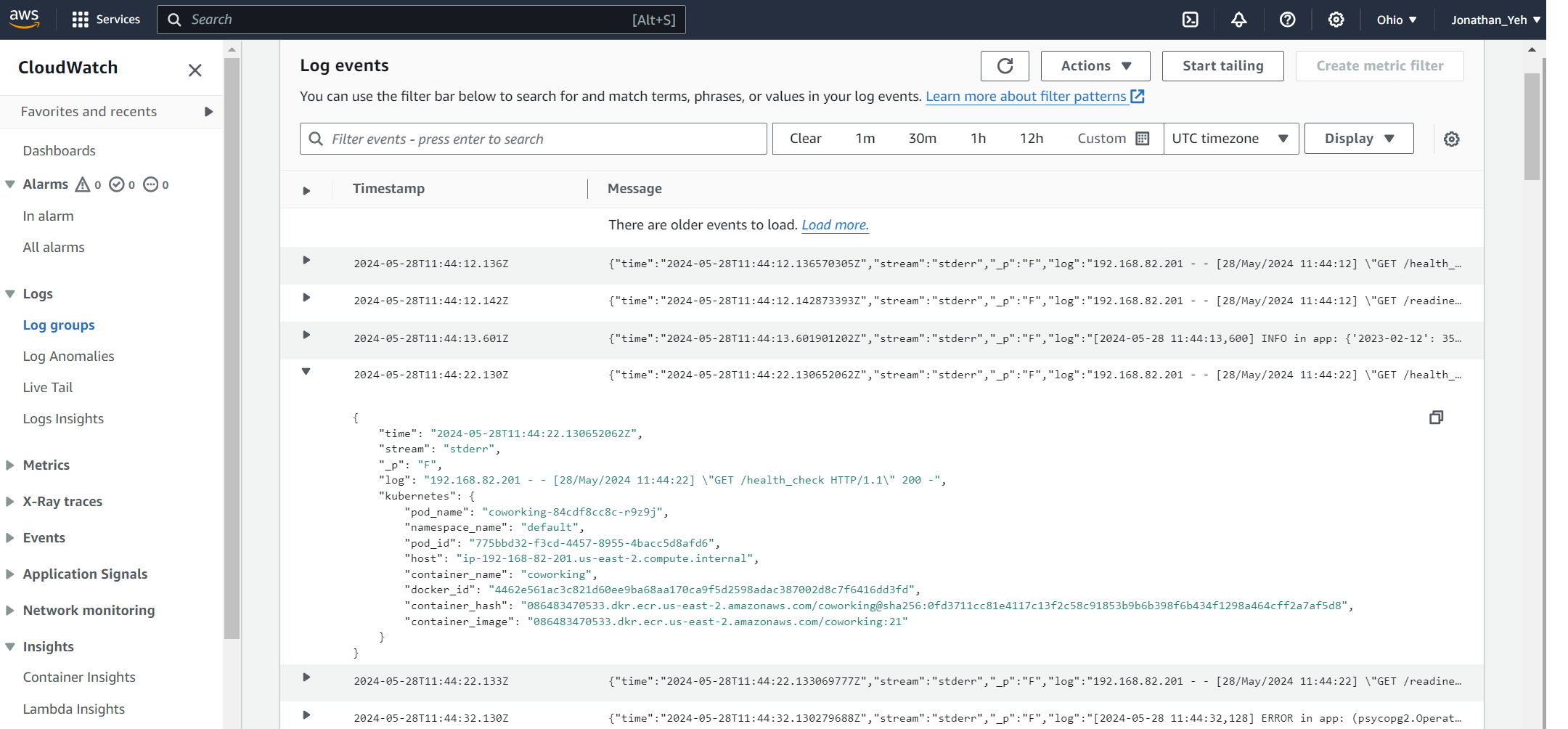

- AWS CloudWatch - monitor activity and logs in EKS

- GitHub - pull and clone code

See the following.

In the analytics/ directory:

- Install dependencies

pip install -r requirements.txt

- Run the application (see below regarding environment variables)

<ENV_VARS> python app.py

There are multiple ways to set environment variables in a command. They can be set per session by running `export KEY=VAL` in the command line, or they can be prepended to your command.

- `DB_USERNAME`

- `DB_PASSWORD`

- `DB_HOST` (defaults to `127.0.0.1`)

- `DB_PORT` (defaults to `5432`)

- `DB_NAME` (defaults to `postgres`)
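The defaults in the list above can be sketched in Python as follows. This is only an illustration: `load_db_config` is a hypothetical helper, not taken from `app.py`, and it assumes that the two variables without a listed default (`DB_USERNAME` and `DB_PASSWORD`) are required.

```python
import os
from typing import Dict, Mapping

# Defaults as listed in this README; the other two variables have none.
DEFAULTS = {"DB_HOST": "127.0.0.1", "DB_PORT": "5432", "DB_NAME": "postgres"}
# Assumption: variables without a default must be provided by the caller.
REQUIRED = ("DB_USERNAME", "DB_PASSWORD")


def load_db_config(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Hypothetical helper: resolve the DB_* variables, applying defaults."""
    missing = [key for key in REQUIRED if not env.get(key)]
    if missing:
        raise KeyError("missing required variables: " + ", ".join(missing))
    config = {key: env[key] for key in REQUIRED}
    for key, default in DEFAULTS.items():
        # Fall back to the documented default when the variable is unset.
        config[key] = env.get(key, default)
    return config


# Usage with an explicit mapping instead of the real environment.
config = load_db_config(
    {"DB_USERNAME": "username_here", "DB_PASSWORD": "password_here"}
)
print(config["DB_HOST"], config["DB_PORT"], config["DB_NAME"])
```

Passing a plain dictionary, as in the usage lines, makes it easy to check which values the application would see without touching the shell session.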

If we set the environment variables by prepending them, it would look like the following:

DB_USERNAME=username_here DB_PASSWORD=password_here python app.py

The benefit here is that it's explicitly set. However, note that the `DB_PASSWORD` value is now recorded in the session's history in plaintext. There are several ways to work around this, including setting environment variables in a file and sourcing them in a terminal session.
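The file-based workaround can also be done from Python instead of sourcing the file in a shell. The sketch below assumes a file of plain `KEY=VAL` lines; the function names and the file name `.env` are hypothetical, and the quote handling is deliberately simple.

```python
import os
from typing import Dict


def parse_env_file(text: str) -> Dict[str, str]:
    """Parse KEY=VAL lines; blank lines and '#' comments are skipped."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Accept the "export KEY=VAL" form used for shell sourcing as well.
        if line.startswith("export "):
            line = line[len("export "):]
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError("not a KEY=VAL line: %r" % raw)
        # Simplification: strip surrounding quotes without shell-style parsing.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def load_env_file(path: str = ".env") -> None:
    """Read the file and copy its values into this process's environment."""
    with open(path, encoding="utf-8") as handle:
        os.environ.update(parse_env_file(handle.read()))
```

With this approach the password never appears in the shell history. The file itself still holds the secret in plaintext, so it should be kept out of version control.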

- Verifying The Application

-

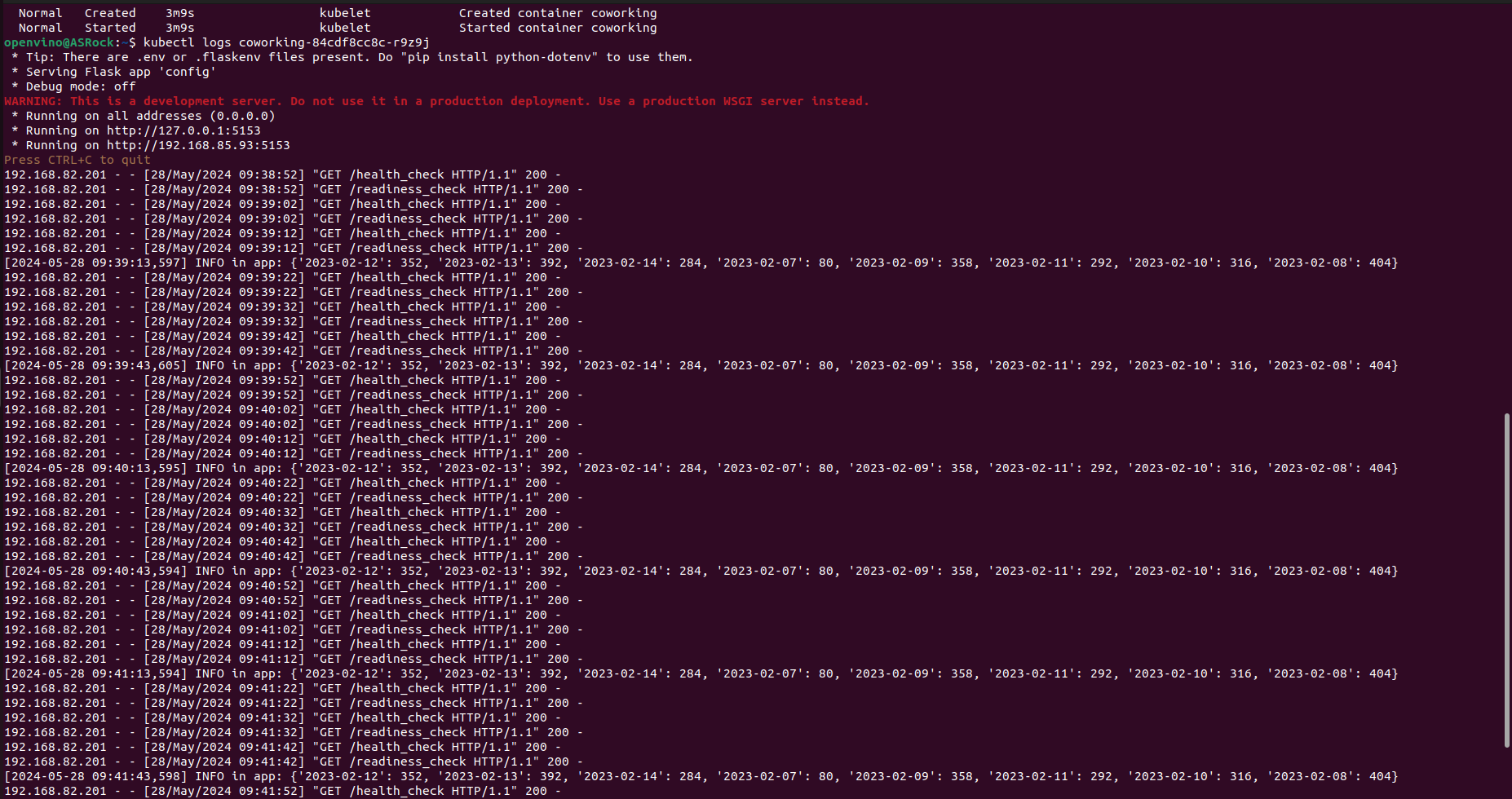

Generate report for check-ins grouped by dates

curl <BASE_URL>/api/reports/daily_usage -

Generate report for check-ins grouped by users

curl <BASE_URL>/api/reports/user_visits
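The same two checks can be scripted with the Python standard library. This is a minimal sketch: the function names are hypothetical, the base URL is whatever `<BASE_URL>` is in your environment, and it assumes the endpoints return JSON, which this README does not state.

```python
import json
from urllib.request import urlopen

# Report paths taken from the curl commands above.
REPORT_PATHS = {
    "daily_usage": "/api/reports/daily_usage",
    "user_visits": "/api/reports/user_visits",
}


def report_url(base_url: str, report: str) -> str:
    """Join the base URL and a report path without doubling the slash."""
    return base_url.rstrip("/") + REPORT_PATHS[report]


def fetch_report(base_url: str, report: str, timeout: float = 5.0):
    """Fetch one report. Assumption: the response body is JSON."""
    with urlopen(report_url(base_url, report), timeout=timeout) as response:
        return json.load(response)
```

Calling `fetch_report(base_url, "daily_usage")` and `fetch_report(base_url, "user_visits")` against a running instance exercises both endpoints. A connection error or a non-2xx status raises an exception from `urlopen`.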

- Set up a Postgres database with a Helm Chart

- Create a

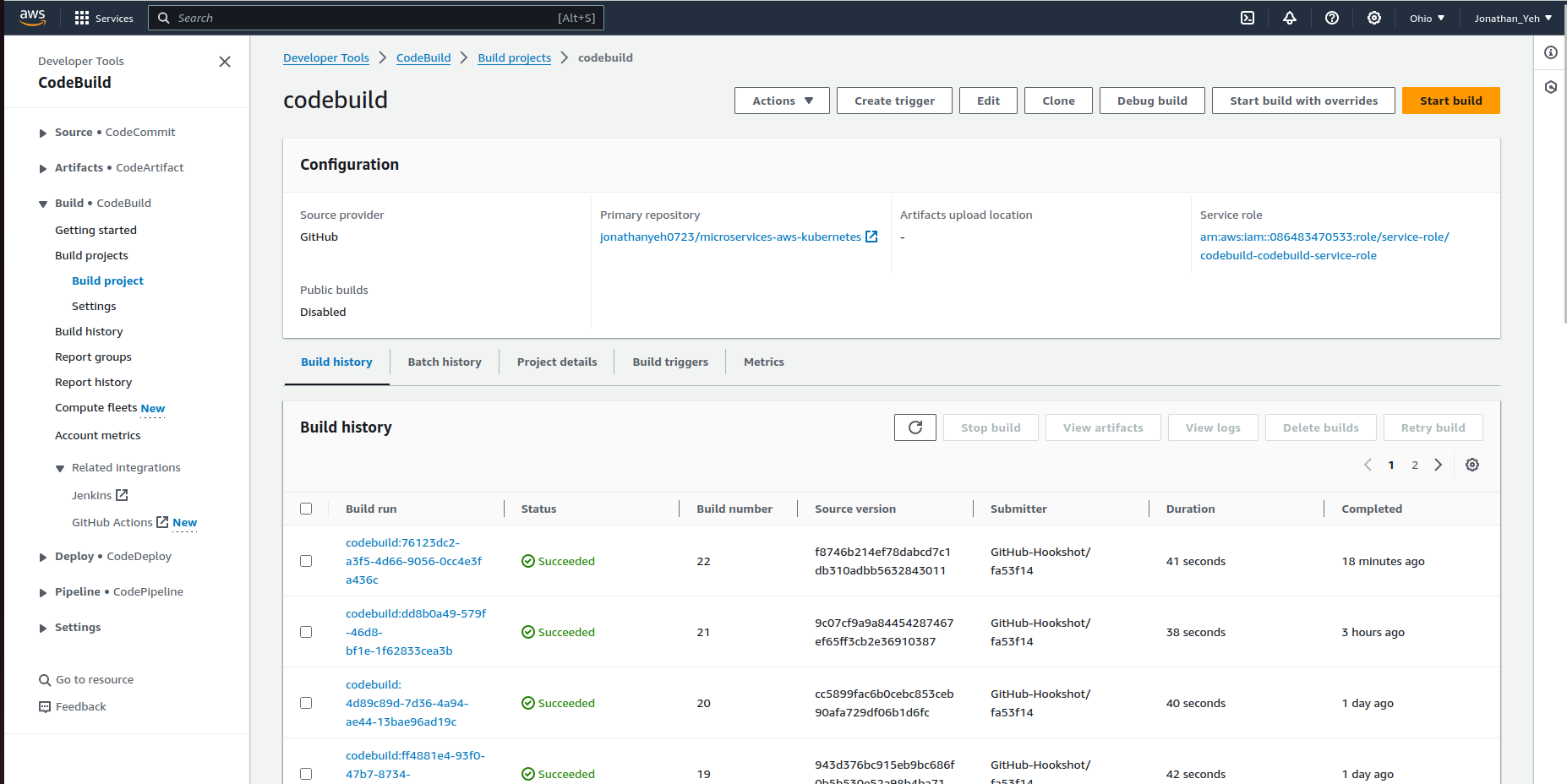

Dockerfilefor the Python application. Use a base image that is Python-based. - Write a simple build pipeline with AWS CodeBuild to build and push a Docker image into AWS ECR

- Create a service and deployment using Kubernetes configuration files to deploy the application

- Check AWS CloudWatch for application logs

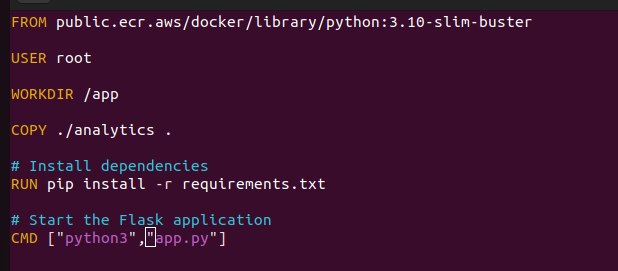

- Dockerfile

- Screenshot of AWS CodeBuild pipeline

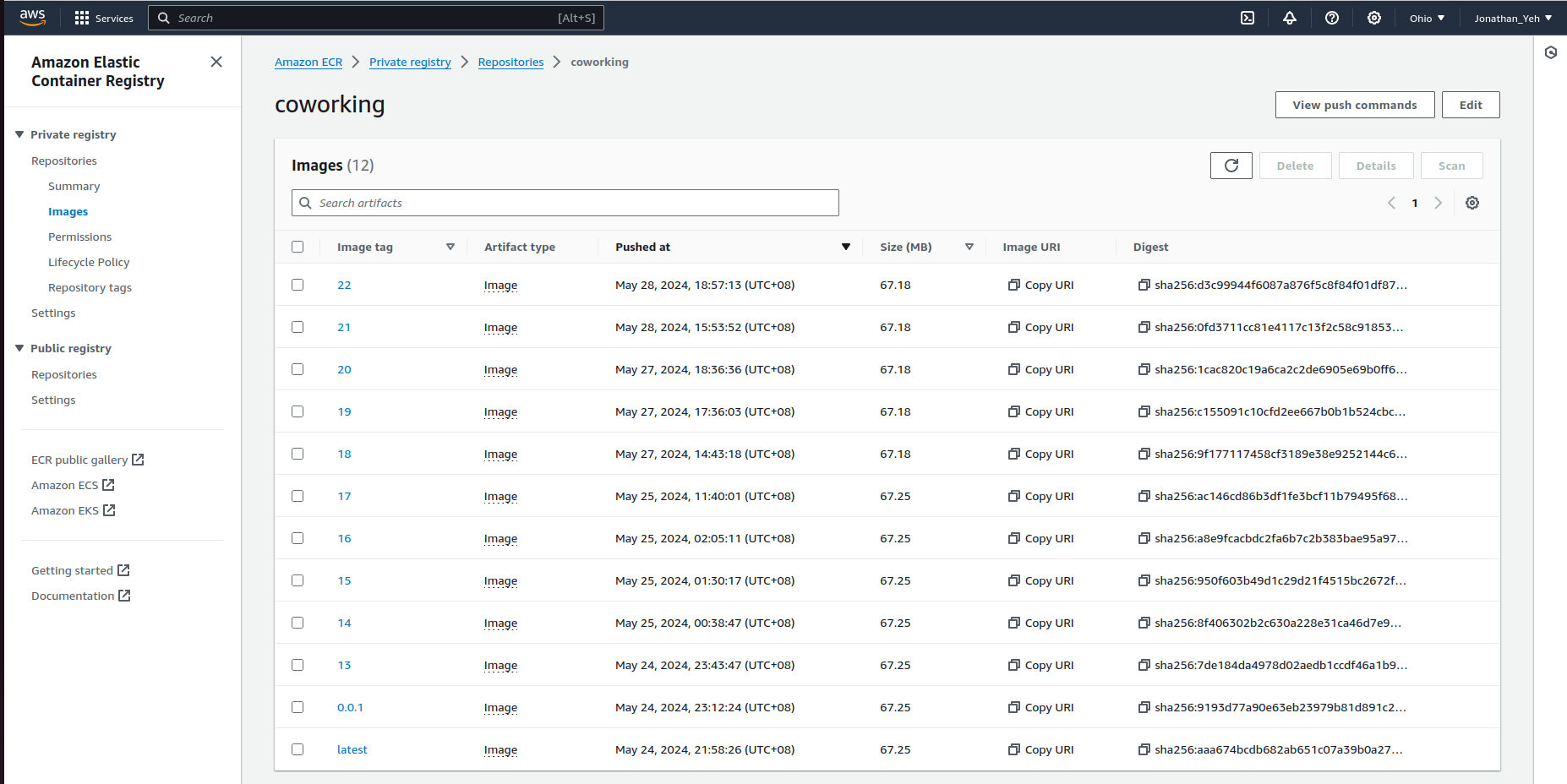

- Screenshot of AWS ECR repository for the application's repository

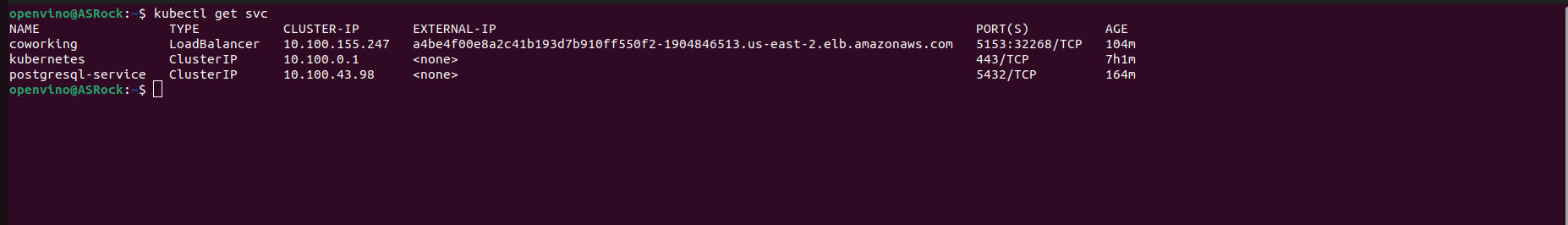

- Screenshot of

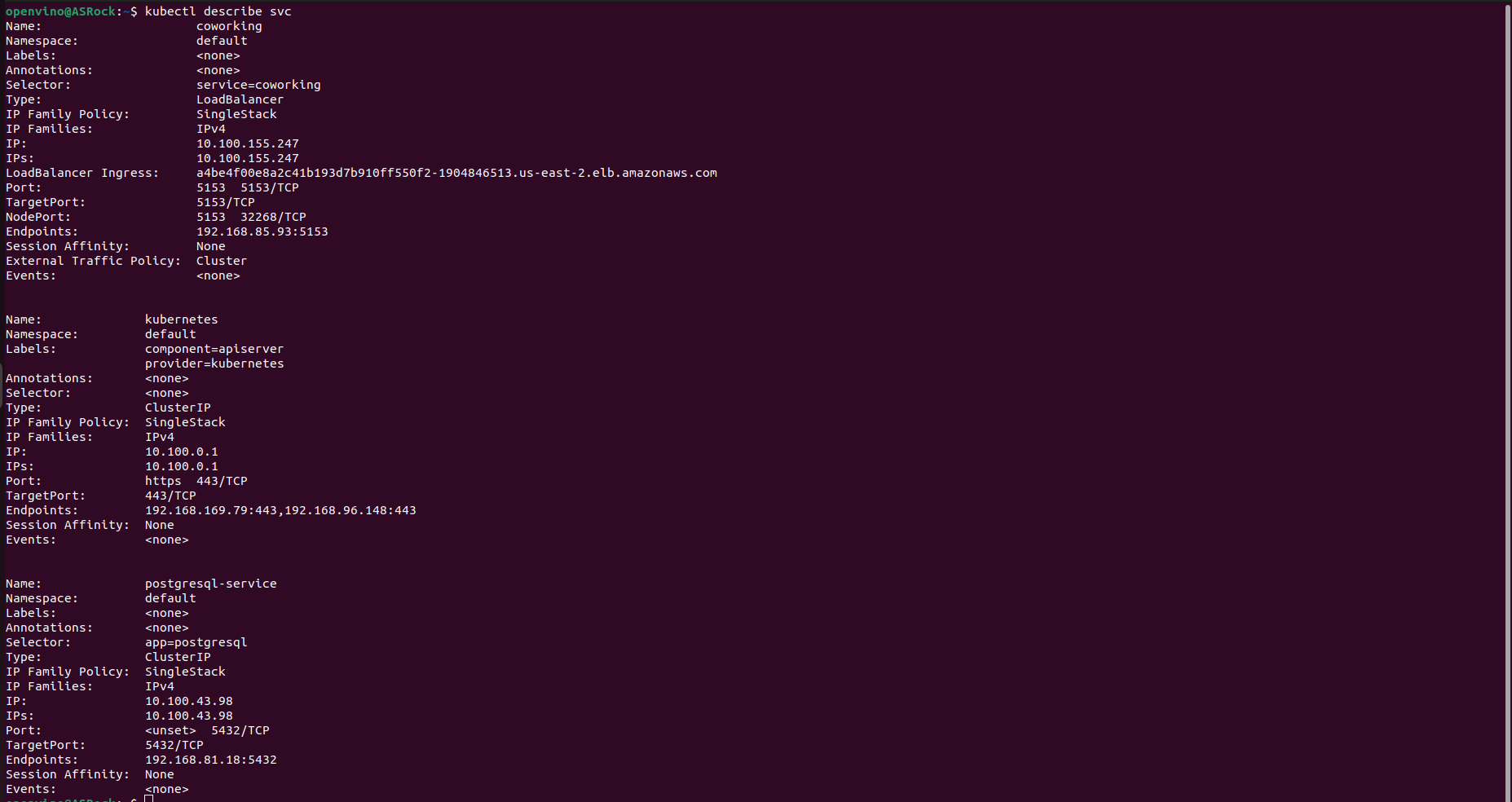

kubectl get svc

- Screenshot of

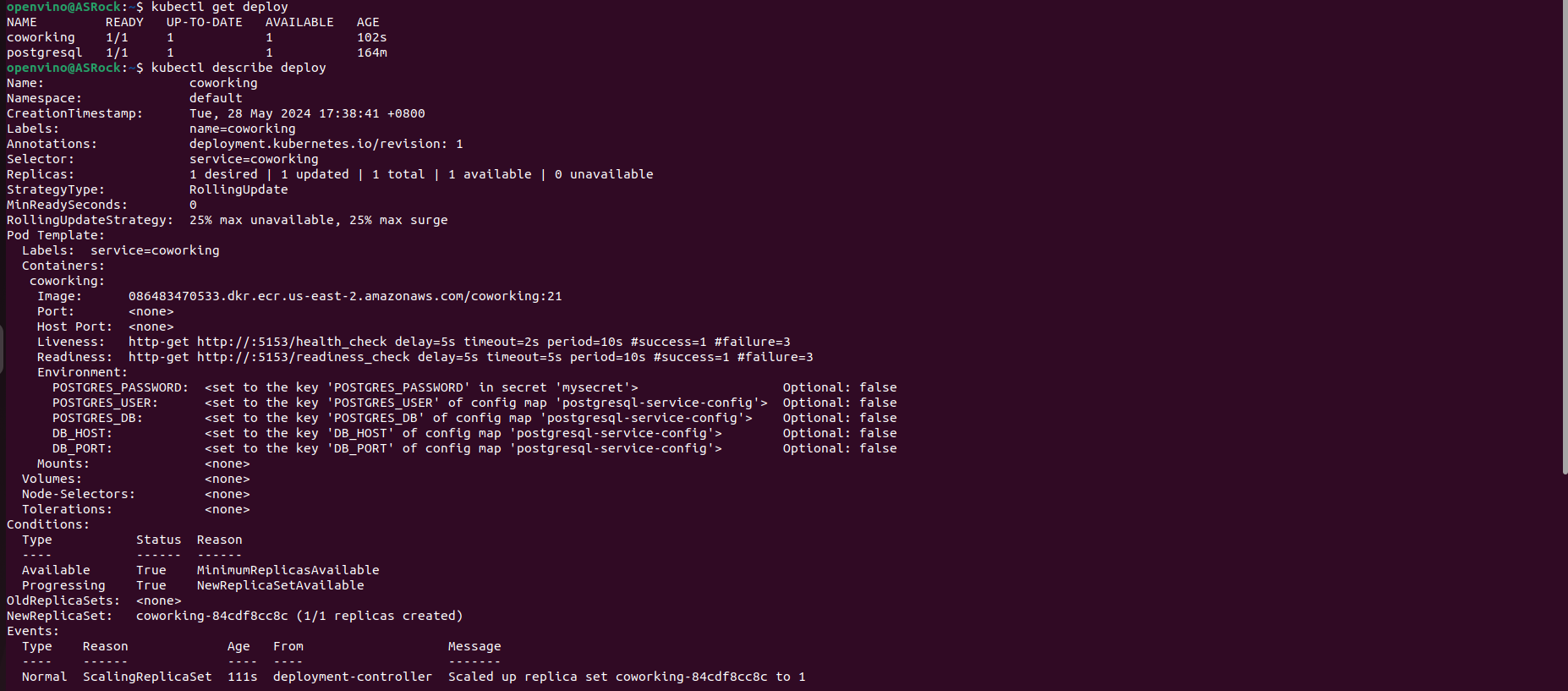

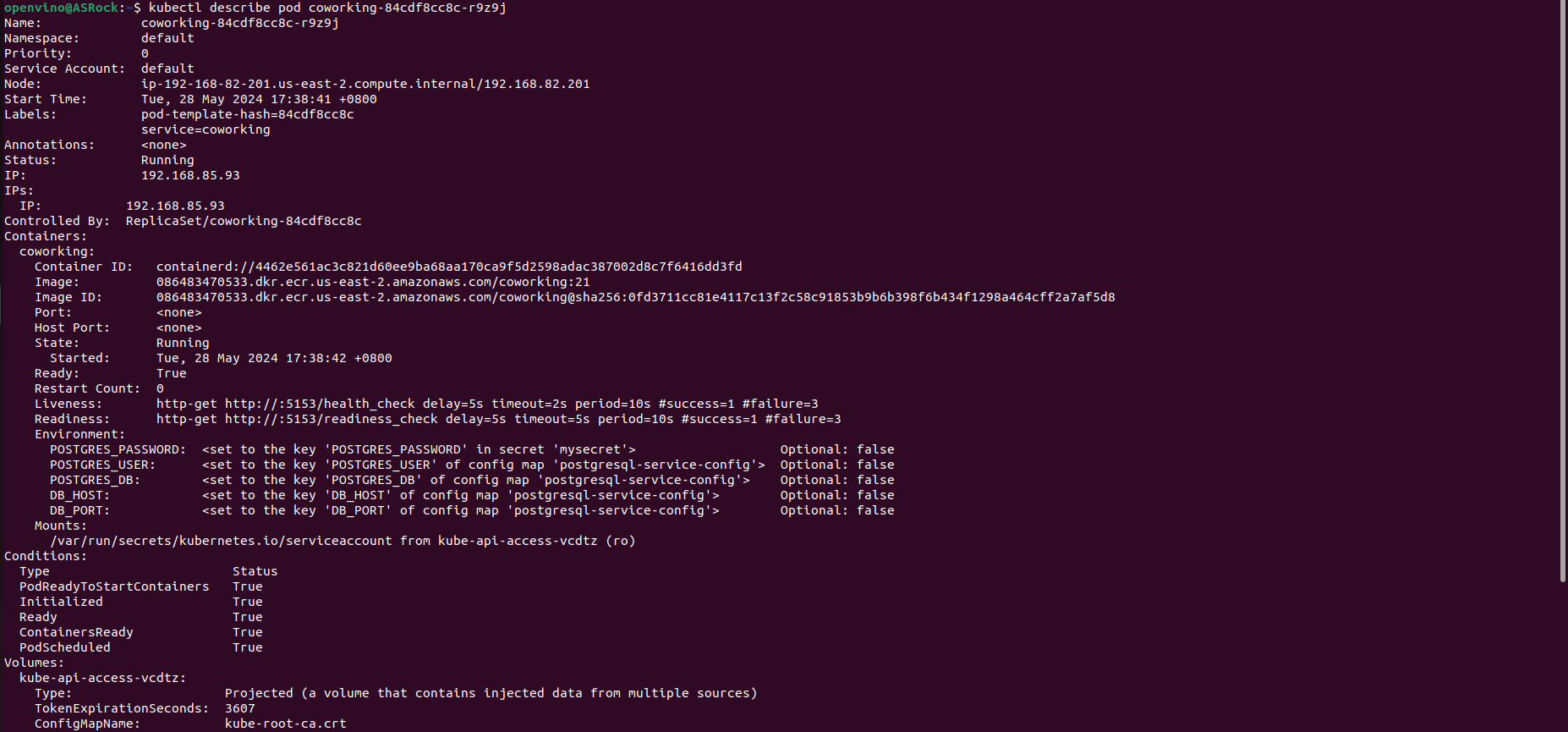

kubectl get pods

- Screenshot of `kubectl describe svc <DATABASE_SERVICE_NAME>`

- Screenshot of

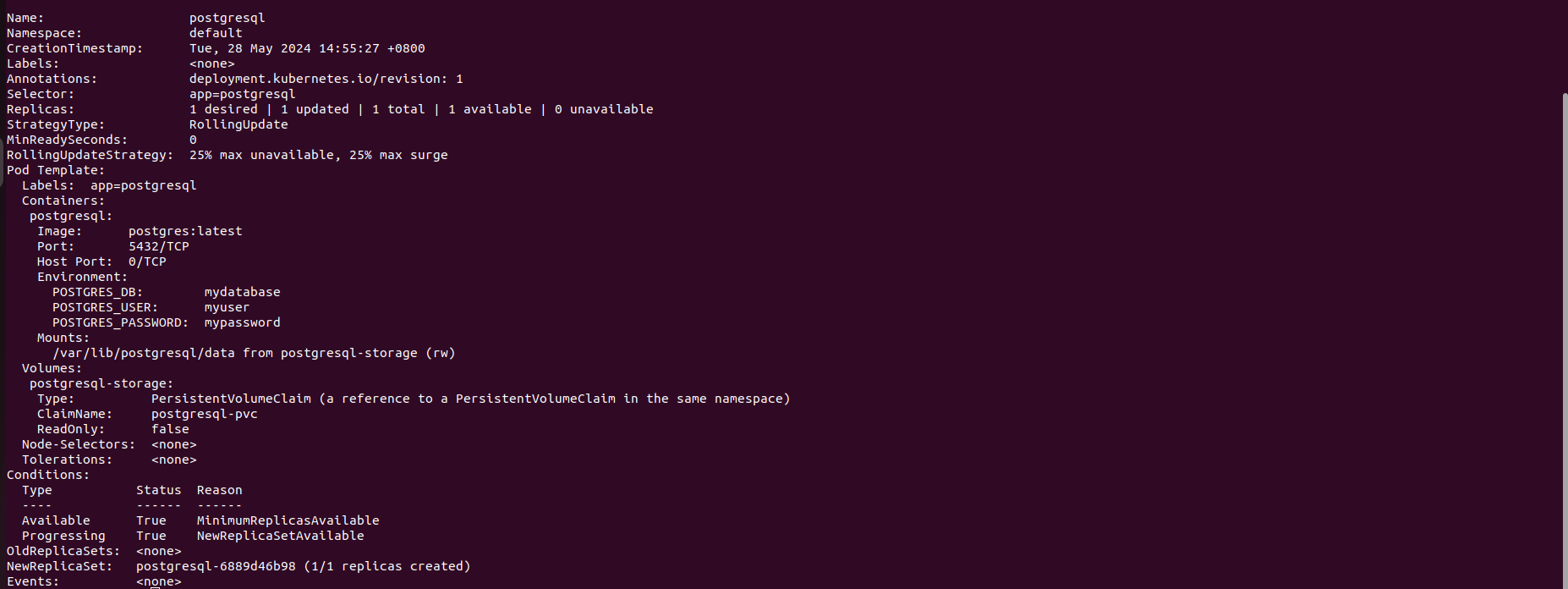

kubectl describe deployment <SERVICE_NAME>

- All Kubernetes config files used for deployment (i.e., YAML files)

Refer to the `deployment` and `DB-YAML` folders.

deployment

├── configmap.yaml

├── coworking.yaml

└── secret.yaml

0 directories, 3 files

├── postgresql-deployment.yaml

├── postgresql-service.yaml

├── pvc.yaml

└── pv.yaml

0 directories, 4 files

- Screenshot of AWS CloudWatch logs for the application

Follow the instructions below for setup:

- Create an AWS EKS cluster in the web interface

- Install the AWS CLI

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip

sudo ./aws/install

aws configure

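- Install `kubectl`

curl -LO https://dl.k8s.io/release/v1.30.0/bin/linux/amd64/kubectl

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

If you do not have root access, install `kubectl` to `~/.local/bin` instead and make sure that directory is on your `PATH`:

chmod +x kubectl

mkdir -p ~/.local/bin

mv ./kubectl ~/.local/bin/kubectl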

- Alternatively, create the EKS cluster from the command line with `eksctl` (a separate tool from the AWS CLI, installed separately)

eksctl create cluster --name my-cluster --region us-east-2 --nodes=2 --version=1.29 --instance-types=t3.micro

- Create Postgresql database

kubectl apply -f db/

- Forward the database port to your machine and run the seed files

kubectl port-forward svc/postgresql-service 5433:5432 &

export DB_PASSWORD=mypassword

PGPASSWORD="$DB_PASSWORD" psql --host 127.0.0.1 -U myuser -d mydatabase -p 5433 < db/1_create_tables.sql

PGPASSWORD="$DB_PASSWORD" psql --host 127.0.0.1 -U myuser -d mydatabase -p 5433 < db/2_seed_users.sql

PGPASSWORD="$DB_PASSWORD" psql --host 127.0.0.1 -U myuser -d mydatabase -p 5433 < db/3_seed_tokens.sql
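The three seed commands above can be wrapped in a small script so the files always run in numeric order. This is a sketch under stated assumptions: the function names are hypothetical, the connection values mirror the commands above, and `-v ON_ERROR_STOP=1` is an addition not present in the original commands, included so that `psql` exits with an error when a statement fails.

```python
import os
import subprocess
from typing import List, Sequence


def seed_order(path: str) -> int:
    """Sort key: the numeric prefix of names such as 1_create_tables.sql."""
    return int(os.path.basename(path).split("_", 1)[0])


def build_psql_command(host: str, port: int, user: str, database: str) -> List[str]:
    # ON_ERROR_STOP=1 is an addition to the README commands (see lead-in).
    return ["psql", "--host", host, "-p", str(port), "-U", user,
            "-d", database, "-v", "ON_ERROR_STOP=1"]


def run_seed_files(files: Sequence[str], password: str,
                   host: str = "127.0.0.1", port: int = 5433,
                   user: str = "myuser", database: str = "mydatabase") -> None:
    """Feed each SQL file to psql on stdin, stopping at the first failure."""
    # PGPASSWORD is passed through the child environment, as in the commands above.
    env = dict(os.environ, PGPASSWORD=password)
    command = build_psql_command(host, port, user, database)
    for path in sorted(files, key=seed_order):
        with open(path, "rb") as sql:
            subprocess.run(command, stdin=sql, env=env, check=True)
```

The port-forward from the previous step must still be running when `run_seed_files` is called, since the script connects to `127.0.0.1:5433`.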

- Deploy the service:

kubectl apply -f deployment/

The deployment includes 3 files:

deployment

├── configmap.yaml

├── coworking.yaml

└── secret.yaml

0 directories, 3 files

- Check the deployed resources

kubectl get svc

kubectl get pods

kubectl describe svc <DATABASE_SERVICE_NAME>

kubectl describe deployment <SERVICE_NAME>
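The pod check can be automated by reading the JSON form of `kubectl get pods`. The sketch below is illustrative only: the function names are hypothetical, and it inspects only `status.phase`, so a pod reported as `Running` may still be failing its readiness checks.

```python
import json
import subprocess
from typing import Dict, List


def pod_phases(pods_json: str) -> Dict[str, str]:
    """Map each pod name to its phase, from `kubectl get pods -o json` output."""
    items = json.loads(pods_json).get("items", [])
    return {item["metadata"]["name"]: item["status"]["phase"] for item in items}


def pods_not_running(pods_json: str) -> List[str]:
    """Names of pods whose phase is anything other than Running."""
    phases = pod_phases(pods_json)
    return sorted(name for name, phase in phases.items() if phase != "Running")


def current_pods_json() -> str:
    """Call kubectl for the current namespace; requires a configured cluster."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-o", "json"],
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    return result.stdout
```

An empty list from `pods_not_running(current_pods_json())` means every pod in the namespace has reached the `Running` phase. Anything else is a cue to look at `kubectl describe` for the listed pods.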

- Dockerfile uses an appropriate base image for the application being deployed. Complex commands in the Dockerfile include a comment describing what they do.

- The Docker images use semantic versioning with three numbers separated by dots, e.g. `1.2.1`, and versioning is visible in the screenshot. See Semantic Versioning for more details.
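A tag can be checked against the three-number form before it is pushed. The sketch below is a hypothetical helper, not part of the project: it covers only the `MAJOR.MINOR.PATCH` core described above and rejects the pre-release and build suffixes that the full Semantic Versioning specification also allows.

```python
import re

# Three dot-separated non-negative integers without leading zeros.
SEMVER_CORE = re.compile(r"(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)")


def is_semver_tag(tag: str) -> bool:
    """True when the whole tag has the form MAJOR.MINOR.PATCH."""
    return SEMVER_CORE.fullmatch(tag) is not None


def bump(tag: str, part: str = "patch") -> str:
    """Return the next version, resetting the lower-order numbers to zero."""
    match = SEMVER_CORE.fullmatch(tag)
    if match is None:
        raise ValueError("not a MAJOR.MINOR.PATCH tag: %r" % tag)
    major, minor, patch = (int(group) for group in match.groups())
    if part == "major":
        return "%d.0.0" % (major + 1)
    if part == "minor":
        return "%d.%d.0" % (major, minor + 1)
    if part == "patch":
        return "%d.%d.%d" % (major, minor, patch + 1)
    raise ValueError("part must be 'major', 'minor' or 'patch'")
```

A build script could call `is_semver_tag` on the tag it is about to push and fail early on values such as `latest` or `1.2`.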