WARNING: This is a pre-release version of CBAS. If you identify an error or bug, please create an issue thread. Better documentation and Jupyter notebook examples are coming soon!

Visit our Slack channel for help and to stay up to date with the latest CBAS news!

CBAS is a suite of tools for phenotyping complex animal behaviors. It is designed to automate the classification of behaviors from active live streams of video data and to provide a simple interface for visualizing and analyzing the results. CBAS currently supports automated inference with state-of-the-art machine learning vision models, including DeepEthogram (DEG) and DeepLabCut (DLC). CBAS also includes an attentive LSTM (A-LSTM) sequence model, which is designed to be more robust and more accurate than the original DEG sequence model.

Written and maintained by Logan Perry, a post-bac in the Jones Lab at Texas A&M University.

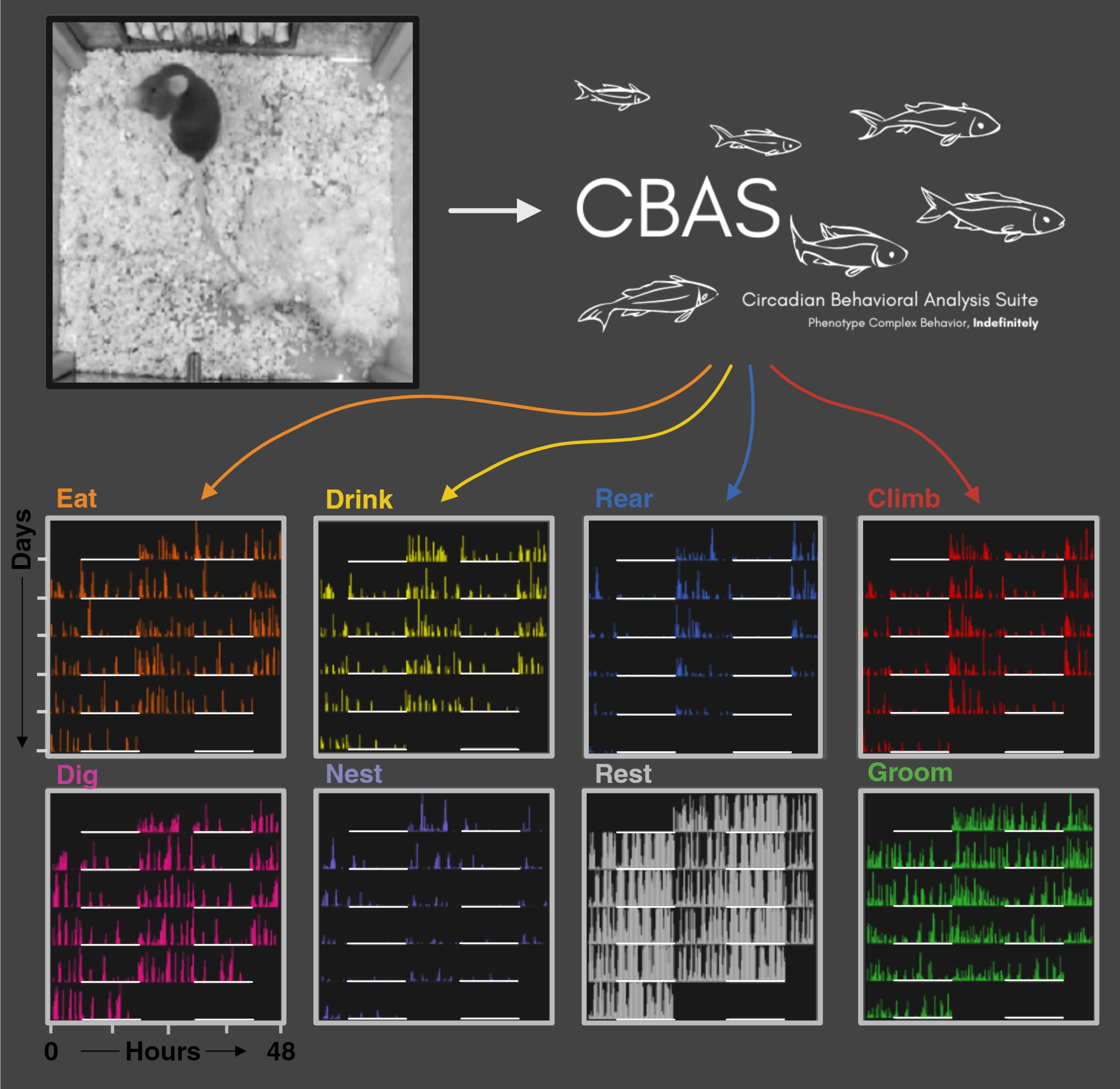

- CBAS was designed with circadian behavior monitoring in mind! Here's a visual of what CBAS can do (the behaviors are not set in stone, and can be changed to fit the user's needs).

- A timelapse gif of CBAS in action (may be slow to load). CBAS is capable of inferring with both DLC and DEG models at the same time!

A headless version of CBAS is coming soon and will be available via PyPI!

Requirements:

- Python 3.7 or later

- Conda

- IPython

- FFMPEG, with the ffmpeg executable in the system PATH

- Standalone installation(s) of DeepEthogram (DEG) and/or DeepLabCut (DLC) with working environments

To create A-LSTM models:

- PyTorch with or without CUDA support
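Before installing, it can help to confirm that the basic requirements above are met. The following is a minimal sketch, not part of CBAS: the function name `check_prerequisites` is hypothetical, and it only checks the Python version and whether the `ffmpeg` and `conda` executables are on the system PATH.

```python
import shutil
import sys


def check_prerequisites(min_python=(3, 7), tools=("ffmpeg", "conda")):
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if sys.version_info < min_python:
        problems.append("Python %d.%d or later is required" % min_python)
    for tool in tools:
        # shutil.which returns None when the executable is not on PATH.
        if shutil.which(tool) is None:
            problems.append("'%s' was not found on the system PATH" % tool)
    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print("Missing requirement:", problem)
```

The check does not verify the DEG or DLC installations, which still need to be tested in their own environments.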

To install without a PyPI release, download and extract the zipped code. Navigate to the CBAS-main/envs folder and type

conda env create -f CBAS.yaml

Enter the environment by typing

conda activate CBAS

If using Windows, navigate to the CBAS-main directory and type

refresh

If using Linux, navigate to the CBAS-main directory and type

python setup.py bdist_wheel

Then, navigate to the dist directory and type

pip install *.whl

Switch to the DEG and DLC environments by typing

conda activate (your DEG env) or conda activate (your DLC env)

Install CBAS in these environments by repeating the above installation steps.

To test the installation, type

ipython

Then, type

from cbas_headless import *

If no errors are thrown, the installation was successful!
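Because CBAS has to be installed separately in the CBAS, DEG, and DLC environments, a non-interactive check can be convenient. The sketch below uses only the standard library to test whether the `cbas_headless` module (the name used in the import above) can be located in the active environment. The helper name `is_installed` is hypothetical. Locating the module does not guarantee that importing it succeeds, so the IPython test above remains the definitive check.

```python
import importlib.util


def is_installed(module_name="cbas_headless"):
    """Return True if a top-level module can be located in the active environment."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    status = "found" if is_installed() else "NOT found"
    print("cbas_headless:", status)
```

Run the script once after activating each environment.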

The headless version of CBAS is designed to be used in IPython or Jupyter notebooks. Example notebooks showing how to use CBAS to automate pre-existing DEG or DLC models are currently under construction. Links to these notebooks will be added below in the near future.

CBAS was built to facilitate inference on live video streams. The video acquisition module provides a simple interface for acquiring video from real-time network streams via RTSP. RTSP is a widely supported protocol for streaming video over a network and is supported by many IP cameras and network video recorders. CBAS uses ffmpeg to acquire video from RTSP streams, so it can record from any RTSP source that ffmpeg supports.
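To illustrate what recording an RTSP stream with ffmpeg can look like, here is a minimal sketch that builds an ffmpeg command for copying a stream into fixed-length files. This is not necessarily the command CBAS runs internally. The function name, camera URL, output pattern, and segment length are all assumptions chosen for illustration; the ffmpeg options themselves (`-rtsp_transport`, `-f segment`, `-segment_time`, `-reset_timestamps`) are standard.

```python
def build_record_command(rtsp_url, output_pattern, segment_seconds=600):
    """Build an ffmpeg command that copies an RTSP stream into fixed-length files."""
    return [
        "ffmpeg",
        "-rtsp_transport", "tcp",   # request TCP transport for the RTSP session
        "-i", rtsp_url,
        "-c", "copy",               # no re-encoding; store the stream as received
        "-f", "segment",            # split the output into consecutive files
        "-segment_time", str(segment_seconds),
        "-reset_timestamps", "1",   # each segment starts near timestamp zero
        output_pattern,
    ]


if __name__ == "__main__":
    # Hypothetical camera URL and output file pattern.
    command = build_record_command("rtsp://192.168.1.10:554/stream", "cam1_%05d.mp4")
    print(" ".join(command))
    # To start recording, pass the list to subprocess.run(command, check=True).
```

With stream copy, ffmpeg can only cut segments at keyframes, so actual segment lengths may differ slightly from the requested value.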

CBAS video acquisition can be used with or without DEG or DLC models. When used with one or more models, CBAS can automatically infer the behavior of animals in real time. CBAS handles model context switching, allowing the user to run inference on video streams with any number or type of vision models. When a recording is finished, CBAS continues inferencing until all recorded videos have been processed and then exits gracefully.
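The control flow described above (infer segments as they appear, then drain the backlog once recording stops) can be sketched as follows. This is an illustration under stated assumptions, not the CBAS implementation: the function and parameter names are hypothetical, models are represented as plain callables, and the sketch ignores the environment switching that real DEG and DLC models require.

```python
from collections import deque


def run_inference_loop(poll_new_segments, recording_active, models):
    """Infer segments as they appear, then drain the backlog once recording stops.

    poll_new_segments: callable returning newly finished segment names (may be empty)
    recording_active:  callable returning True while the recording is still running
    models:            dict mapping a model name to a callable(segment) -> result
    """
    queue = deque()
    results = {}
    while True:
        # Read the recording state before polling so that segments finalized
        # right as the recording stops are still picked up.
        still_recording = recording_active()
        queue.extend(poll_new_segments())
        if not queue:
            if not still_recording:
                break      # recording finished and nothing is left to infer
            continue       # a real loop would sleep here before polling again
        segment = queue.popleft()
        # Every configured model sees every segment.
        results[segment] = {name: infer(segment) for name, infer in models.items()}
    return results
```

In practice the two callables would wrap the file system and the recorder state, for example by listing completed segment files in the recording directory.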

- A visual of how CBAS works to inference videos in real time. CBAS batches videos into segments and iteratively infers new video segments as they are made using DEG models, DLC models, or a combination of both. When a recording is finished, CBAS continues inferencing until all recorded videos have been processed.

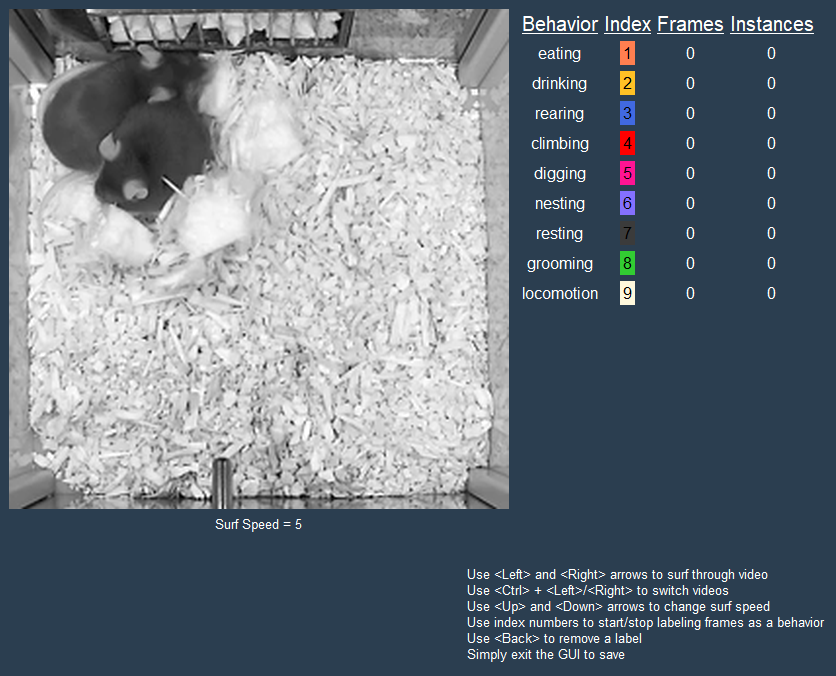

The training set creation module allows the user to manually annotate recorded videos with behaviors of interest. The annotated videos can then be used to train a DEG model or a CBAS sequence model that operates on DeepEthogram outputs.

- The CBAS training set creator simplifies the process of annotating videos for training DEG or CBAS sequence models. Importantly, a user can annotate videos with the behavior of interest quickly and without having to label the entire video. The resulting annotations are saved to a file that can be used to train supervised models.

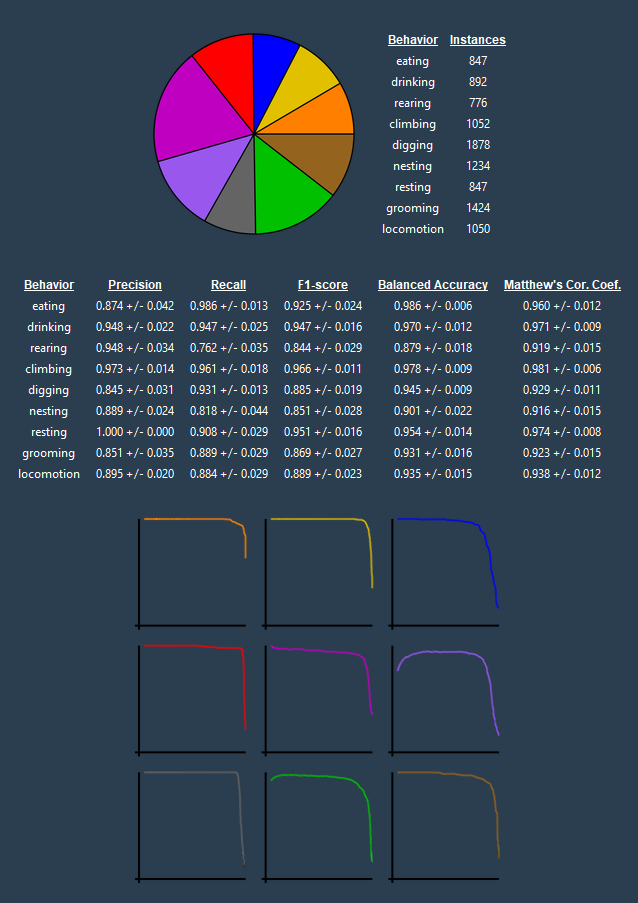

The model validation module allows the user to validate the performance of a DEG or CBAS sequence model on naive test sets. The user can use this module to visualize the model's performance on the videos and to calculate performance metrics (precision, recall, F1-score, balanced accuracy, etc.).
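For reference, the metrics named above can be computed from paired ground-truth and predicted labels. The sketch below uses the standard definitions for a single behavior treated as a binary (present or absent) problem. It is not the code CBAS uses, and the function name `binary_metrics` is hypothetical.

```python
def binary_metrics(y_true, y_pred):
    """Compute standard metrics for one behavior from paired 0/1 frame labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    def ratio(num, den):
        # Report 0.0 instead of dividing by zero when a class is absent.
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    specificity = ratio(tn, tn + fp)
    return {
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
        # For a binary problem: the mean of recall and specificity.
        "balanced_accuracy": (recall + specificity) / 2,
    }
```

Balanced accuracy is useful here because behaviors are often rare, so plain accuracy can look high even when a behavior is never detected.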

- An example of the CBAS model validation module. A user can use this module to visualize the model's performance on the videos, to calculate the model's performance metrics, and to see the class balance of the test set.

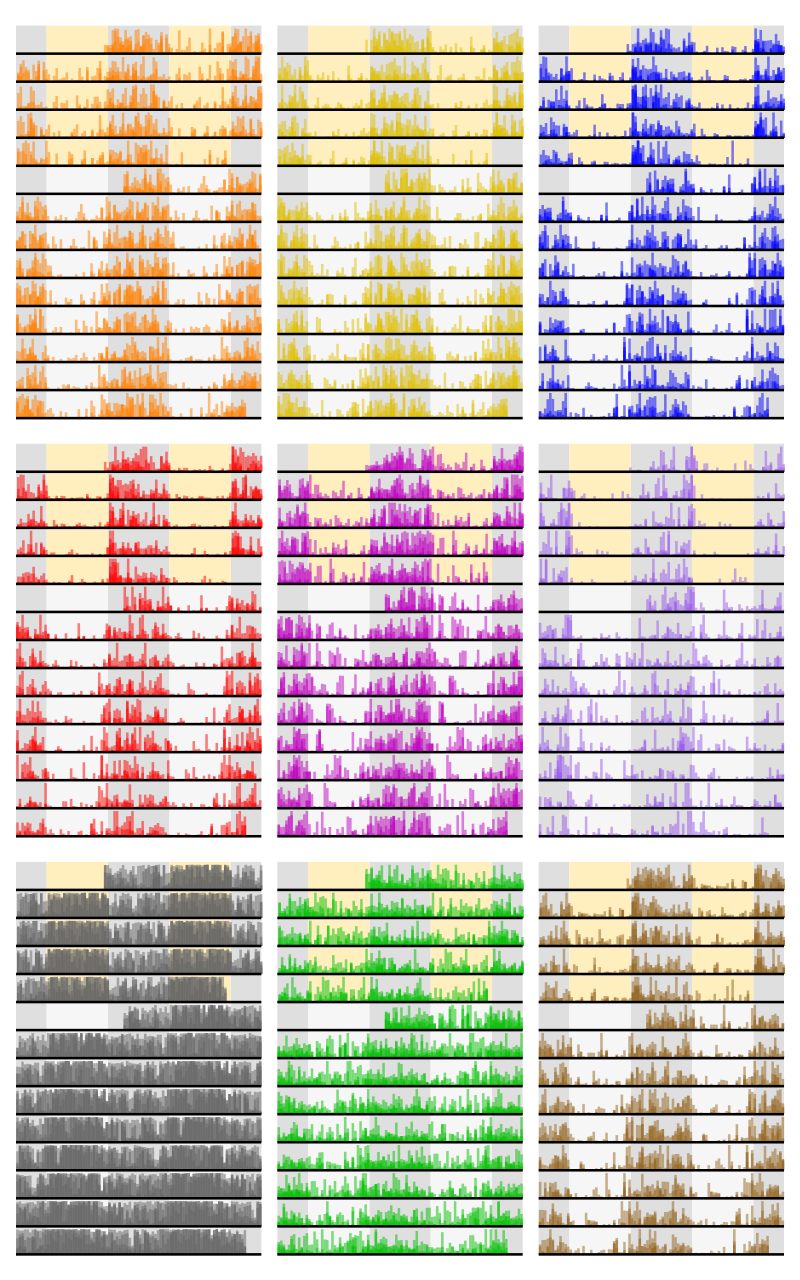

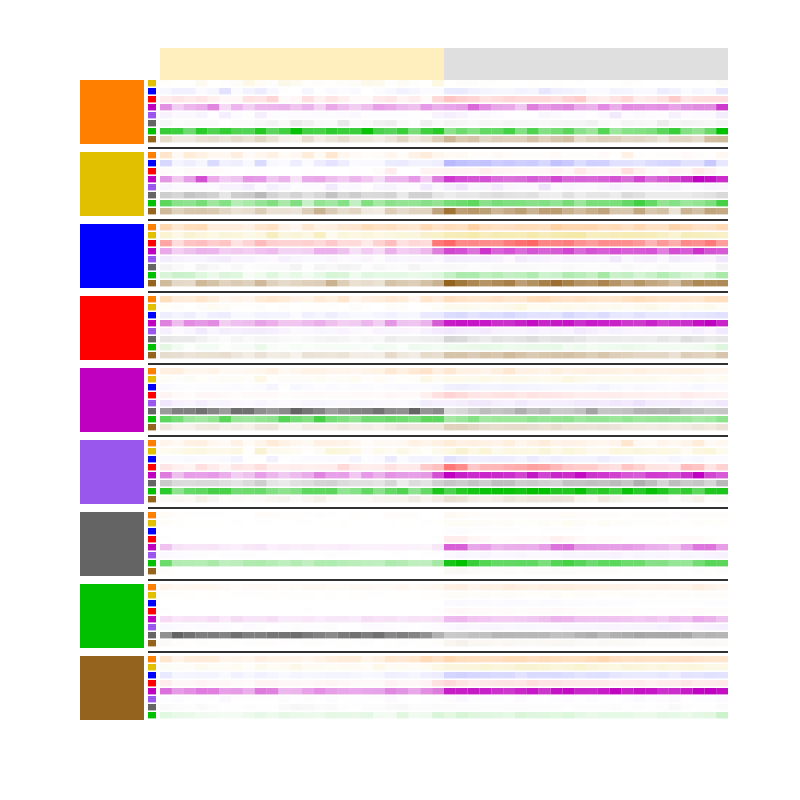

The visualization and analysis module provides circadian plotting tools for visualizing and analyzing the behavior of animals over time. Circadian methods of particular importance are actogram generation, behavior transition raster generation, time series fitting and circadian parameter extraction with CosinorPy integration, and time series export for ClockLab Analysis.
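To show what circadian parameter extraction involves, here is a minimal single-component cosinor fit written with the standard library only. It does not use or reproduce the CosinorPy API. It assumes evenly spaced samples covering a whole number of periods, in which case the least-squares estimates reduce to simple sums. The function name `fit_cosinor` is hypothetical.

```python
import math


def fit_cosinor(times_h, values, period_h=24.0):
    """Estimate mesor M, amplitude A, and acrophase phi (radians) of
    y = M + A * cos(2*pi*t/period + phi).

    Assumes evenly spaced samples that cover a whole number of periods, so the
    cosine and sine regressors are orthogonal to each other and to the mean.
    """
    n = len(values)
    omega = 2 * math.pi / period_h
    mesor = sum(values) / n
    # beta = A*cos(phi) and gamma = -A*sin(phi) under the model above.
    beta = 2 / n * sum(y * math.cos(omega * t) for t, y in zip(times_h, values))
    gamma = 2 / n * sum(y * math.sin(omega * t) for t, y in zip(times_h, values))
    amplitude = math.hypot(beta, gamma)
    acrophase = math.atan2(-gamma, beta)
    return mesor, amplitude, acrophase
```

For unevenly sampled data, or data that does not span whole periods, a general least-squares fit is needed instead of these shortcut sums.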

- Actogram generation: The actogram generation module allows the user to generate actograms of the behavior of animals over time. In this example, actograms are generated by binning the behavior of all animals in the population into 30-minute bins and overlaying the transparent behaviors for each animal in each bin.

- Transition raster generation: Below is an example of a population average transition raster generated by CBAS. The transition raster is generated by binning the behavior of all animals in the population into 30-minute bins and plotting the average behavior transition probability in each bin.

- So much more to be announced soon: Reach out to loganperry@tamu.edu for more information or to request a feature!
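The binning and transition calculations described in the list above can be sketched as follows for a single animal. This is a simplified illustration, not the CBAS implementation: the function names are hypothetical, the input is assumed to be one behavior label per frame, and transitions are counted only when the behavior changes, which is one possible definition. Population averages would be obtained by averaging these per-animal values within each bin.

```python
from collections import Counter, defaultdict


def bin_behavior_fractions(labels, fps, bin_minutes=30):
    """Return, for each time bin, the fraction of frames spent in each behavior."""
    frames_per_bin = max(1, int(fps * 60 * bin_minutes))
    bins = []
    for start in range(0, len(labels), frames_per_bin):
        chunk = labels[start:start + frames_per_bin]
        counts = Counter(chunk)
        bins.append({b: c / len(chunk) for b, c in counts.items()})
    return bins


def transition_probabilities(labels):
    """Return P(next behavior | current behavior), counting behavior changes only."""
    counts = defaultdict(Counter)
    for current, nxt in zip(labels, labels[1:]):
        if current != nxt:  # skip frames where the behavior persists
            counts[current][nxt] += 1
    return {
        current: {nxt: c / sum(row.values()) for nxt, c in row.items()}
        for current, row in counts.items()
    }
```

To build a transition raster, `transition_probabilities` would be applied to the labels inside each 30-minute bin rather than to the whole recording.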

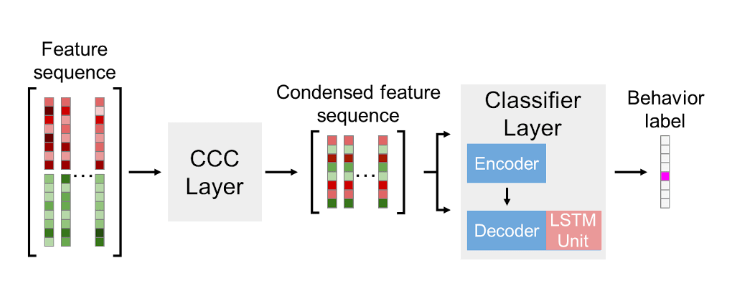

The CBAS sequence model is an attentive LSTM sequence model for DEG feature extractor outputs. Below is a schematic of how this model works. Briefly, the model consists of two layers. The first layer is an encoder layer for cleaning, condensing, and combining the 1024 spatial and flow features that DEG outputs. The second layer is a classification layer that takes as input a sequence of encoded outputs from the first layer and uses them to classify frame sequences into behavior classes.
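The two-stage structure described above can be sketched in PyTorch as follows. This is an illustration only, not the actual CBAS model: the class name, layer sizes, number of classes, the bidirectional LSTM, and the attention-pooling scheme are all assumptions. The only detail taken from the description is a 1024-dimensional feature vector per frame feeding an encoder, followed by a sequence classifier.

```python
import torch
from torch import nn


class AttentiveLSTMSketch(nn.Module):
    """Illustrative two-stage model: frame encoder, then attentive LSTM classifier."""

    def __init__(self, n_features=1024, n_encoded=128, n_hidden=64, n_classes=5):
        super().__init__()
        # Stage 1: condense each frame's feature vector.
        self.encoder = nn.Sequential(nn.Linear(n_features, n_encoded), nn.ReLU())
        # Stage 2: sequence model over the encoded frames.
        self.lstm = nn.LSTM(n_encoded, n_hidden, batch_first=True, bidirectional=True)
        self.attention = nn.Linear(2 * n_hidden, 1)   # one score per frame
        self.classifier = nn.Linear(2 * n_hidden, n_classes)

    def forward(self, features):
        # features: (batch, frames, n_features)
        encoded = self.encoder(features)               # (batch, frames, n_encoded)
        states, _ = self.lstm(encoded)                 # (batch, frames, 2 * n_hidden)
        # Softmax over the frame axis turns scores into attention weights.
        weights = torch.softmax(self.attention(states), dim=1)
        context = (weights * states).sum(dim=1)        # (batch, 2 * n_hidden)
        return self.classifier(context)                # (batch, n_classes) logits


if __name__ == "__main__":
    model = AttentiveLSTMSketch()
    logits = model(torch.zeros(2, 15, 1024))  # 2 sequences of 15 frames each
    print(logits.shape)
```

The attention weights let the classifier emphasize the most informative frames in a sequence instead of relying only on the final LSTM state.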

DeepEthogram is a state-of-the-art vision model for inferring complex behaviors from video data. It was developed by Jim Bohnslav at Harvard. A few components of the original deepethogram package have been included, with modifications, in the platforms/modified_deepethogram directory. The modifications hold the deepethogram model in memory and allow real-time inferencing of video streams. Only the necessary components of the original package were included in the modified version. Please support the original deepethogram package by visiting its repository.

DeepLabCut is a skeleton-based (pose estimation) vision model. It was developed by the A. and M.W. Mathis Labs. Please support the original DeepLabCut package by visiting its repository.

This work was supported by National Institutes of Health Grant R35GM151020 (J.R.J.) and a Research Grant from the Whitehall Foundation (J.R.J.).

This project is licensed under the GNU Lesser General Public License v3.0. See the LICENSE file for details.