Powerful language-agnostic analysis of your source code and git history.


source{d} Engine exposes powerful Universal ASTs to analyze your code and a SQL engine to analyze your git history:

- Code Processing: use git repositories as a dataset.

- Language-Agnostic Code Analysis: automatically identify languages, parse source code, and extract the pieces that matter with language-independent queries.

- Git Analysis: powerful SQL-based analysis on top of your git repositories.

- Querying With Familiar APIs: analyze your code through powerful, friendly APIs, such as SQL, gRPC, and various client libraries.

You can access a rendered version of this documentation at docs.sourced.tech/engine.

- Quick Start

- Other Guides & Examples

- Architecture

- Babelfish UAST

- Clients & Connectors

- Community

- Contributing

- Credits

- License

Follow the steps below to get started with source{d} Engine.

First, install Docker. Follow these instructions based on your OS:

On macOS, follow the instructions at Docker for Mac. You may also use Homebrew:

brew install --cask docker

On Ubuntu Linux, follow the instructions at Docker for Ubuntu Linux:

sudo apt-get update

sudo apt-get install docker-ce

On Arch Linux, follow the instructions at Docker for Arch Linux:

sudo pacman -S docker

On Windows, install Docker Desktop for Windows. Make sure to read its system requirements first.

Please note that Docker Toolbox is not supported on either Windows or macOS. If you are running Docker Toolbox, please consider upgrading to Docker Desktop for Mac or Docker Desktop for Windows, as described above.
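Before moving on, you may want to confirm that the Docker CLI is installed and can reach a running Docker daemon. The sketch below is plain POSIX shell; `require_cmd` and `check_docker` are hypothetical helper names, not commands provided by source{d} Engine or Docker, and the check relies only on `command -v` and `docker info`.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the named command is on PATH.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    return 0
  fi
  echo "error: '$1' was not found on PATH" >&2
  return 1
}

# Hypothetical helper: check that the Docker CLI exists and that
# `docker info` can talk to a running Docker daemon.
check_docker() {
  require_cmd docker || return 1
  if ! docker info >/dev/null 2>&1; then
    echo "error: Docker is installed but the daemon is not reachable" >&2
    return 1
  fi
  echo "Docker is ready"
}
```

Source the functions into your shell and run `check_docker`; a non-zero exit status means Docker still needs attention before you continue.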

Next, download the latest source{d} Engine release for macOS (Darwin), Linux, or Windows.
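On macOS and Linux, the extract-and-install steps that follow can also be scripted. This is a minimal sketch, assuming the archive contains an `engine_<os>_amd64/srcd` entry as in the manual commands; `install_srcd` and `enable_srcd_completion` are hypothetical helper names, and the script does not download anything.

```shell
#!/bin/sh
# Hypothetical helper (not part of srcd): extract a release tarball and copy
# the srcd binary into a bin directory (default: /usr/local/bin).
# Assumes the archive holds an engine_<os>_amd64/srcd entry, as in the
# manual steps (engine_darwin_amd64/srcd, engine_linux_amd64/srcd).
install_srcd() {
  tarball=$1
  bindir=${2:-/usr/local/bin}
  tmpdir=$(mktemp -d) || return 1
  # tar -xf detects gzip compression on its own when reading a file.
  if ! tar -xf "$tarball" -C "$tmpdir"; then
    rm -rf "$tmpdir"
    return 1
  fi
  # Take the first path matching the glob; if nothing matches, $bin keeps the
  # literal pattern and the -f test below fails.
  for bin in "$tmpdir"/engine_*_amd64/srcd; do
    break
  done
  if [ ! -f "$bin" ]; then
    echo "error: no engine_*_amd64/srcd found in $tarball" >&2
    rm -rf "$tmpdir"
    return 1
  fi
  # /usr/local/bin usually needs sudo; a user-owned bindir does not.
  mkdir -p "$bindir" && cp "$bin" "$bindir/srcd" && chmod +x "$bindir/srcd"
  rc=$?
  rm -rf "$tmpdir"
  return "$rc"
}

# Hypothetical helper: append the completion line from the manual steps to a
# startup file (~/.bashrc on Linux, ~/.bash_profile on macOS) unless that
# exact line is already there.
enable_srcd_completion() {
  rcfile=${1:-$HOME/.bashrc}
  line='source <(srcd completion)'
  touch "$rcfile" || return 1
  if grep -qxF "$line" "$rcfile"; then
    return 0
  fi
  printf '\n# source{d} Engine auto completion\n%s\n' "$line" >> "$rcfile"
}
```

For example, `install_srcd ~/replace/path/to/engine_REPLACEVERSION_linux_amd64.tar.gz "$HOME/.local/bin"` installs into a user directory without sudo (that directory must then be on your PATH), and `enable_srcd_completion "$HOME/.bash_profile"` covers the macOS startup file. Running the completion helper twice leaves a single completion line.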

On macOS, double-click the tar file to extract it.

Open your terminal and move the binary to your local bin folder to make it executable from any directory:

sudo mv ~/replace/path/to/engine_darwin_amd64/srcd /usr/local/bin/

If you want to set up bash completion, add the following to your $HOME/.bash_profile file:

# source{d} Engine auto completion

source <(srcd completion)

On Linux, extract the contents of the tar file from your terminal:

tar -xvf ~/replace/path/to/engine_REPLACEVERSION_linux_amd64.tar.gz

Move the binary to your local bin folder to make it executable from any directory:

sudo mv engine_linux_amd64/srcd /usr/local/bin/

If you want to set up bash completion, add the following to your $HOME/.bashrc file:

# source{d} Engine auto completion

source <(srcd completion)

On Windows, please note that from now on we assume the commands are executed in PowerShell, not in cmd. Running them in cmd is not guaranteed to work; proper support may be added in future releases.

To run the following preparatory steps, you need to run PowerShell as administrator.

mkdir 'C:\Program Files\srcd'

# Add the directory to the `%path%` to make it available from anywhere

setx /M PATH "$($env:path);C:\Program Files\srcd"

# Now open a new PowerShell window to apply the changes

Extract the tar file with the tool you prefer, then move srcd.exe into the directory you created:

mv engine_windows_amd64\srcd.exe 'C:\Program Files\srcd'

Now it's time to initialize source{d} Engine and provide it with some repositories to analyze:

# Without a path, Engine operates on the current directory;

# repositories in nested sub-directories are also picked up.

srcd init

# You can also provide a path

srcd init <path>

You can also optionally provide a configuration file through the --config flag as follows:

srcd init --config <config file path>

# Or

srcd init <path> --config <config file path>

See the documentation for the --config flag for details.

Note:

Once Engine is initialized with a working directory, it does not watch for newly created repositories, so if you add (or delete) repositories, you need to run srcd init again.

Also, database indexes are not updated automatically when the underlying data changes; in that case, the index must be recreated manually.

If you want to inspect different datasets, you need to do it separately: initialize one working directory, run your queries, and then switch to the other dataset by running srcd init with the working directory that contains it. Indexes are kept in this situation, so you can switch between datasets without having to recreate them.
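Since Engine does not watch the working directory, adding or removing repositories, or switching datasets, comes down to running srcd init again with the right path. The wrapper below is a hypothetical sketch (`srcd_reinit` is not an srcd command): it checks that the directory contains at least one non-bare git repository, found by looking for `.git` entries at any depth, before calling srcd init. The `SRCD` variable, which defaults to `srcd`, is an assumption added so the wrapper can be tried with a stub.

```shell
#!/bin/sh
# Hypothetical wrapper (not an srcd command): make sure a directory holds at
# least one non-bare git repository, then (re)initialize Engine with it.
# Run it again after adding or deleting repositories, or to switch datasets.
# SRCD can point to another binary; it defaults to `srcd`.
srcd_reinit() {
  workdir=${1:-.}
  if [ ! -d "$workdir" ]; then
    echo "error: '$workdir' is not a directory" >&2
    return 1
  fi
  # Count .git entries at any depth without descending into them.
  repos=$(find "$workdir" -name .git -prune -print | wc -l | tr -d ' ')
  if [ "$repos" -eq 0 ]; then
    echo "error: no git repositories found under '$workdir'" >&2
    return 1
  fi
  echo "found $repos repositories under '$workdir'" >&2
  "${SRCD:-srcd}" init "$workdir"
}
```

For example, running `srcd_reinit ~/datasets/project-a` and later `srcd_reinit ~/datasets/project-b` (hypothetical paths) switches between two datasets; as noted above, existing indexes are kept across the switch, but indexes on changed data still have to be recreated by hand.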

Note for macOS:

Docker for Mac requires enabling file sharing for any path outside of /Users.

For the full list of the commands supported by srcd and those that have been planned, please read commands.md.

Note for Windows: Docker for Windows requires shared drives. In addition, it's important to use a workdir that does not contain any sub-directory that the user running srcd cannot read. For example, using C:\Users as the workdir will most likely not work. For more details, see the related issue.

source{d} Engine provides interfaces to query code repositories and to parse code into Universal Abstract Syntax Trees.

This section covers a selection of the available commands and interfaces.

Note: source{d} Engine will download and install Docker images on demand. Therefore, the first time you run some of these commands, they might take a bit of time to start up. Subsequent runs will be faster.

To launch the web client for the SQL interface, run the following command and start executing queries:

# Launch the query web client

srcd web sql

This should open the web interface in your browser. You can also access it directly at http://localhost:8080.

If you prefer to work from the command line, you can open a SQL REPL that lets you execute queries against your repositories:

# Launch the query CLI REPL

srcd sql

If you want to run a single query directly, you can also pass it as an argument:

# Run query via CLI

srcd sql "SHOW tables;"

Note:

Engine's SQL supports a UAST function that returns a Universal AST for the selected source text. UAST values are returned as binary blobs, and are best visualized in the web SQL interface rather than in the CLI, where they appear as raw binary data.
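For longer queries, such as the examples further down, it can be handier to keep each query in a file. This is a minimal sketch, assuming srcd sql accepts a multi-line query as its single argument in the same way as the `srcd sql "SHOW tables;"` example; `srcd_sql_file` and the `SRCD` override are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper (not an srcd command): read a query from a file and run
# it as `srcd sql "<query>"`, the same form as `srcd sql "SHOW tables;"`.
# Assumes the file holds a single statement.
# SRCD can point to another binary; it defaults to `srcd`.
srcd_sql_file() {
  if [ ! -r "$1" ]; then
    echo "error: cannot read query file '$1'" >&2
    return 1
  fi
  # $(cat ...) keeps internal newlines and drops only trailing ones.
  "${SRCD:-srcd}" sql "$(cat "$1")"
}
```

For example, saving the "Top 10 repositories" query below as top10.sql (a hypothetical file name) lets you rerun it with `srcd_sql_file top10.sql`.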

You may also connect directly to gitbase using any MySQL compatible client. Use the login user root, no password, and database name gitbase.

# Start the component if needed

srcd start srcd-cli-gitbase

mysql --user=root --host=127.0.0.1 --port=3306 gitbase

Sometimes you may want to parse files directly as UASTs.

To see which languages are available, check the table of supported languages.

If you want a playground to see examples of the UAST, or run your own, you can launch the parse web client.

# Launch the parse web client

srcd web parse

This should open the web interface in your browser. You can also access it directly at http://localhost:8081.
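The srcd parse uast command shown next handles one file per invocation. To parse every file with a given extension under a directory, you could loop over them as in this hypothetical sketch. It assumes that srcd parse uast writes the UAST to standard output; `srcd_parse_all` and the `SRCD` override are illustrative names, not part of srcd.

```shell
#!/bin/sh
# Hypothetical helper (not an srcd command): parse every *.<ext> file under a
# directory with `srcd parse uast --lang=<lang>` and save each result as
# <outdir>/<relative path>.uast. Assumes the UAST is printed to stdout.
# SRCD can point to another binary; it defaults to `srcd`.
srcd_parse_all() {
  srcdir=${1%/} ext=$2 lang=$3 outdir=$4
  find "$srcdir" -type f -name "*.$ext" | while IFS= read -r file; do
    rel=${file#"$srcdir"/}
    mkdir -p "$outdir/$(dirname "$rel")"
    # </dev/null keeps the command from consuming the file list on stdin.
    "${SRCD:-srcd}" parse uast --lang="$lang" "$file" </dev/null \
      >"$outdir/$rel.uast" || echo "warning: could not parse $file" >&2
  done
}
```

For example, `srcd_parse_all ./my-project java java ./uasts` would write one .uast file per Java source file; the language name passed to --lang is an assumption here, so check the output of srcd parse drivers for the exact names.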

Alternatively, you can also start parsing files on the command line:

# Parse file via CLI

srcd parse uast /path/to/file.java

To parse a file specifying the programming language:

srcd parse uast --lang=LANGUAGE /path/to/file

To see the installed language drivers:

srcd parse drivers

Understand which tables are available for you to query:

gitbase> show tables;
+--------------+
| TABLE        |
+--------------+
| blobs        |
| commit_blobs |
| commit_files |
| commit_trees |
| commits      |
| files        |
| ref_commits  |
| refs         |
| remotes      |
| repositories |
| tree_entries |
+--------------+

gitbase> DESCRIBE TABLE commits;
+---------------------+-----------+
| NAME                | TYPE      |
+---------------------+-----------+
| repository_id       | TEXT      |
| commit_hash         | TEXT      |
| commit_author_name  | TEXT      |
| commit_author_email | TEXT      |
| commit_author_when  | TIMESTAMP |
| committer_name      | TEXT      |
| committer_email     | TEXT      |
| committer_when      | TIMESTAMP |
| commit_message      | TEXT      |
| tree_hash           | TEXT      |
| commit_parents      | JSON      |
+---------------------+-----------+

Show me the repositories I am analyzing:

SELECT * FROM repositories;

Top 10 repositories by commit count in HEAD:

SELECT repository_id, commit_count
FROM (
    SELECT r.repository_id, COUNT(*) AS commit_count
    FROM ref_commits r
    WHERE r.ref_name = 'HEAD'
    GROUP BY r.repository_id
) AS q
ORDER BY commit_count DESC
LIMIT 10;

Query all files from HEAD:

SELECT cf.file_path, f.blob_content
FROM ref_commits r
NATURAL JOIN commit_files cf
NATURAL JOIN files f
WHERE r.ref_name = 'HEAD'
    AND r.history_index = 0;

Retrieve the UAST for all files from HEAD:

SELECT * FROM (
    SELECT cf.file_path,
        UAST(f.blob_content, LANGUAGE(f.file_path, f.blob_content)) AS uast
    FROM ref_commits r
    NATURAL JOIN commit_files cf
    NATURAL JOIN files f
    WHERE r.ref_name = 'HEAD'
        AND r.history_index = 0
) t WHERE uast != '';

Query for all LICENSE & README files across history:

SELECT repository_id, blob_content
FROM files
WHERE file_path = 'LICENSE'
    OR file_path = 'README.md';

You can find further sample queries in the examples folder.

Here is a collection of documentation, guides, and examples of the components exposed by source{d} Engine:

- gitbase documentation: table schemas, syntax, functions, examples.

- Babelfish documentation: specifications, usage, examples.

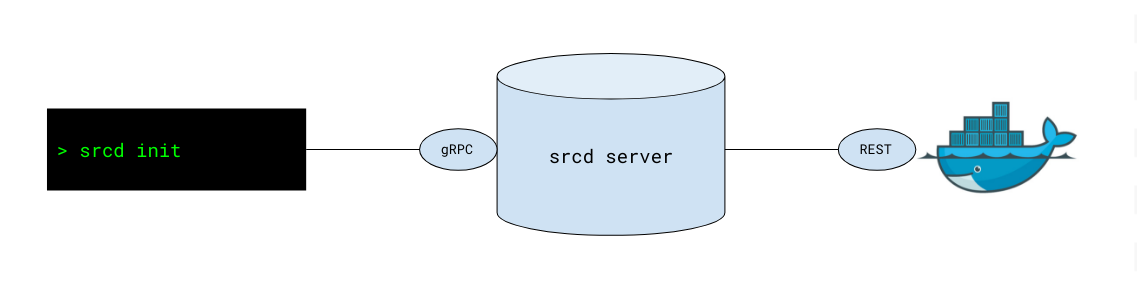

source{d} Engine functions as a command-line interface tool that provides easy access to the components of the source{d} stack for Code As Data.

It consists of a daemon managing all of the services, which are packaged as Docker containers:

- enry: language classifier

- babelfish: universal code parser

  - daemon: Babelfish server

  - language drivers: parsers + normalizers for programming languages

  - babelfish-web: web client for Babelfish server

- gitbase: SQL database interface to Git repositories

  - gitbase-web: web client for gitbase

For more details on the architecture of this project, read docs/architecture.md.
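Because each service runs in its own Docker container, you can inspect them with standard Docker commands. This sketch assumes the component containers have names containing `srcd-cli-`, as the srcd-cli-gitbase component name used in the MySQL example suggests; `list_engine_containers` and the `DOCKER` override are hypothetical. Note that Docker's `name` filter matches substrings, not only prefixes.

```shell
#!/bin/sh
# Hypothetical helper: list containers (running or stopped) whose names
# contain "srcd-cli-", showing name, image, and status separated by tabs.
# DOCKER can point to another binary; it defaults to `docker`.
list_engine_containers() {
  "${DOCKER:-docker}" ps --all \
    --filter "name=srcd-cli-" \
    --format '{{.Names}}\t{{.Image}}\t{{.Status}}'
}
```

Running `list_engine_containers` after a query or parse command gives a quick view of which components Engine has started so far.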

One of the most important components of source{d} Engine is the UAST, which stands for Universal Abstract Syntax Tree.

UASTs are a normalized form of a programming language's AST, annotated with language-agnostic roles and transformed with language-agnostic concepts (e.g. Functions, Imports etc.).

These enable advanced static analysis of code and easy feature extraction for statistics or Machine Learning on Code.

To parse a file for a UAST using source{d} Engine, head to the Parsing Code section of this document.

To see which languages are available, check the table of Babelfish supported languages.

For connecting to the language parsing server (Babelfish) and analyzing the UAST, there are several language clients currently supported and maintained:

The Gitbase Spark connector, which aims to allow easy integration with Spark & PySpark, is under development:

- Gitbase Spark Connector - coming soon!

source{d} has an amazing community of developers and contributors who are interested in Code As Data and/or Machine Learning on Code. Please join us! 👋

Contributions are welcome and very much appreciated 🙌 Please refer to our Contribution Guide for more details.

This software uses code from open source packages. We'd like to thank the contributors for all their efforts: