
PyTorch implementation of a Conditional Generative Adversarial Network (cGAN) for image-to-image translation, applied to the task of image colorization.

The following implementations have been used as reference:

- Pix2Pix Implementation on PyTorch. 2018

- Colorizing B&W Images with U-Net and conditional GAN. Moein Shariatnia. 2020

The network has been trained with 2 separate datasets:

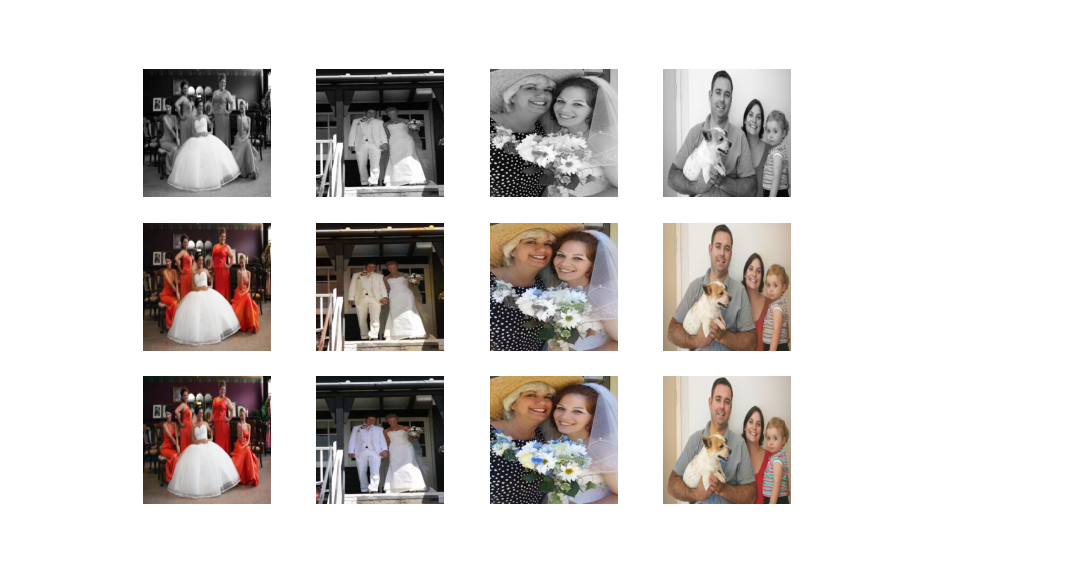

- Images of Groups: Color images of group portraits taken at weddings and other formal social events. Because this dataset has fewer samples, the network reaches acceptable results in less time but generalizes worse. Suitable for old family photos.

- 4902 samples in total (4850 for training)

- COCO: Varied color images from a subset of COCO Train 2017. Because this dataset has more samples and they are more diverse, the network generalizes better, but it takes much longer to reach acceptable results and performs worse on family portraits. Suitable for old photos featuring animals, vehicles or other classes included in the COCO dataset.

- 7750 samples in total (7700 for training)

The grayscale images present in each dataset were removed during preprocessing.

Before entering the network, the images are resized to 256x256, normalized and converted to Lab color space.

The real luminance channel (L) will be used as input to the generator and the discriminator.

The real color channels (ab) will be used to calculate the error with respect to the output obtained by the generator.

The data generator in charge of providing each batch performs the following tasks:

- Read the RGB images from memory.

- Randomly flip horizontally (data augmentation).

- Convert the image from RGB to Lab.

- Normalize the L-channel values from [0,100] to [0,1].

- Normalize the values of the ab channels from [-128,128] to [-1,1].

- Convert the numpy matrix to PyTorch tensor (whc -> cwh).

- Separate luminance (L) and color (ab) channels.
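The steps above can be sketched as follows. This is a minimal sketch, not the repository's data generator: the function name `lab_to_tensors` and its `flip` argument are hypothetical, and it assumes the image has already been read and converted to a Lab array in (height, width, channel) layout, for example with `skimage.color.rgb2lab`.

```python
import numpy as np
import torch


def lab_to_tensors(lab, flip=False):
    """Turn a Lab image of shape (H, W, 3) into normalized (L, ab) tensors.

    Hypothetical helper: assumes L is in [0, 100] and ab in [-128, 128].
    """
    lab = np.array(lab, dtype=np.float32)  # work on a copy
    if flip:
        lab = lab[:, ::-1, :]  # horizontal flip (data augmentation)
    lab[..., 0] /= 100.0   # L: [0, 100] -> [0, 1]
    lab[..., 1:] /= 128.0  # ab: [-128, 128] -> [-1, 1]
    # Channels-last array -> channels-first tensor
    tensor = torch.from_numpy(np.ascontiguousarray(lab)).permute(2, 0, 1)
    # Separate luminance (1 channel) and color (2 channels)
    return tensor[:1], tensor[1:]
```

In a dataset class, the flip would typically be drawn at random per sample, e.g. `lab_to_tensors(lab, flip=random.random() < 0.5)`.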

The discriminator uses the PatchGAN architecture: its output is a single-filter convolutional layer of size 15x15 that indicates how real each patch (section) of the image is. PatchGAN can be understood as a form of texture/style loss. According to the Pix2Pix authors, using a 16x16 PatchGAN is sufficient to promote sharp outputs.
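To illustrate how a 15x15 patch map can arise from a 256x256 input, here is a minimal sketch of a PatchGAN-style discriminator. The class name, channel widths and the exact layer stack are assumptions chosen so that the output shape matches the one described above; the repository's actual layers may differ.

```python
import torch
import torch.nn as nn


def conv_block(in_ch, out_ch, stride, norm=True):
    """4x4 convolution, optional batch norm, LeakyReLU (illustrative helper)."""
    layers = [nn.Conv2d(in_ch, out_ch, kernel_size=4, stride=stride,
                        padding=1, bias=not norm)]
    if norm:
        layers.append(nn.BatchNorm2d(out_ch))
    layers.append(nn.LeakyReLU(0.2, inplace=True))
    return nn.Sequential(*layers)


class PatchDiscriminator(nn.Module):
    """Hypothetical PatchGAN: scores each patch of an (L, ab) pair."""

    def __init__(self, in_channels=3, base=64):
        super().__init__()
        self.model = nn.Sequential(
            conv_block(in_channels, base, stride=2, norm=False),  # 256 -> 128
            conv_block(base, base * 2, stride=2),                 # 128 -> 64
            conv_block(base * 2, base * 4, stride=2),             # 64 -> 32
            conv_block(base * 4, base * 8, stride=2),             # 32 -> 16
            # Single-filter output layer: 16 -> 15, raw logits (no sigmoid)
            nn.Conv2d(base * 8, 1, kernel_size=4, stride=1, padding=1),
        )

    def forward(self, L, ab):
        # The discriminator is conditioned on L by concatenating it with ab
        return self.model(torch.cat([L, ab], dim=1))
```

Each of the 15x15 output values is a logit for one overlapping region of the input, which is why the output is left without a sigmoid when paired with a logits-based loss.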

The loss function of the discriminator is binary cross-entropy with logits (BCEWithLogitsLoss).
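As a minimal sketch of how this loss can be applied to a patch map, the hypothetical helper below compares every patch logit against a target of 1 for real pairs and 0 for generated pairs. Averaging the two terms follows the Pix2Pix convention and is an assumption here, not something the description above states.

```python
import torch
import torch.nn as nn

criterion = nn.BCEWithLogitsLoss()


def discriminator_loss(real_logits, fake_logits):
    """Hypothetical helper: BCE-with-logits over per-patch predictions."""
    # Every patch of a real pair should be classified as real (target 1)
    real_loss = criterion(real_logits, torch.ones_like(real_logits))
    # Every patch of a generated pair should be classified as fake (target 0)
    fake_loss = criterion(fake_logits, torch.zeros_like(fake_logits))
    return 0.5 * (real_loss + fake_loss)
```

Because the targets are built with `ones_like` and `zeros_like`, the same function works for any patch-map size.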

The generator is a U-Net whose encoder is a ResNet18 pretrained on ImageNet (U-ResNet). The FastAI library is used to implement this network.

The loss function used is the mean absolute error (MAE or L1 loss) between the ab channels produced by the generator and the ab channels of the image whose L channel was passed as input to the generator.

The Adam optimizer is used for both the discriminator and the generator. The hyperparameters are the same as those specified in the original Pix2Pix paper.
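For reference, the Pix2Pix paper reports a learning rate of 0.0002 and momentum parameters of 0.5 and 0.999 for Adam. A minimal sketch of the optimizer setup under that assumption follows; `make_optimizers` is a hypothetical helper and the two small modules are stand-ins for the real networks.

```python
import torch
import torch.nn as nn


def make_optimizers(generator, discriminator, lr=2e-4, betas=(0.5, 0.999)):
    """Hypothetical helper: one Adam optimizer per network (Pix2Pix values)."""
    opt_g = torch.optim.Adam(generator.parameters(), lr=lr, betas=betas)
    opt_d = torch.optim.Adam(discriminator.parameters(), lr=lr, betas=betas)
    return opt_g, opt_d


# Stand-in modules, only to show the call; not the actual architectures
generator = nn.Conv2d(1, 2, kernel_size=1)
discriminator = nn.Conv2d(3, 1, kernel_size=1)
opt_g, opt_d = make_optimizers(generator, discriminator)
```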

Starting GAN training with a generator that already colorizes images reasonably well from the first epoch makes training more stable.

To this end, the generator is first pretrained in isolation for 20 epochs. After pretraining, the generator has an initial notion of how to colorize images.
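The pretraining stage can be sketched as a plain supervised loop that uses only the L1 loss on the ab channels. The function name, the `batches` argument and the learning rate are assumptions made for illustration; only the 20 epochs and the L1 loss come from the description above.

```python
import torch
import torch.nn as nn


def pretrain_generator(generator, batches, epochs=20, lr=1e-4):
    """Hypothetical pretraining loop: generator alone, L1 loss on ab.

    batches: iterable of (L, ab) tensor pairs, e.g. a DataLoader.
    lr is an illustrative value, not taken from the source.
    Returns the mean L1 loss per epoch.
    """
    optimizer = torch.optim.Adam(generator.parameters(), lr=lr)
    criterion = nn.L1Loss()
    generator.train()
    history = []
    for _ in range(epochs):
        total, count = 0.0, 0
        for L, ab in batches:
            optimizer.zero_grad()
            loss = criterion(generator(L), ab)  # predicted ab vs. real ab
            loss.backward()
            optimizer.step()
            total += loss.item()
            count += 1
        history.append(total / max(count, 1))
    return history
```

No discriminator is involved at this stage, so the generator only learns to match the average colors of the training images.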

Then, the generator and the discriminator are trained together for 100 epochs.
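One iteration of the joint training can be sketched as below. This assumes the Pix2Pix formulation, in which the generator minimizes an adversarial term plus the L1 term weighted by 100; that weighting and the function name `gan_step` are assumptions, since the description above does not spell out how the two terms are combined. The discriminator here is assumed to take the concatenated Lab tensor.

```python
import torch
import torch.nn as nn

bce = nn.BCEWithLogitsLoss()
l1 = nn.L1Loss()


def gan_step(generator, discriminator, opt_g, opt_d, L, real_ab, lambda_l1=100.0):
    """Hypothetical single training step following the Pix2Pix recipe."""
    fake_ab = generator(L)

    # 1) Discriminator update: real pairs -> 1, generated pairs -> 0
    opt_d.zero_grad()
    real_logits = discriminator(torch.cat([L, real_ab], dim=1))
    # detach() keeps this update from back-propagating into the generator
    fake_logits = discriminator(torch.cat([L, fake_ab.detach()], dim=1))
    loss_d = 0.5 * (bce(real_logits, torch.ones_like(real_logits))
                    + bce(fake_logits, torch.zeros_like(fake_logits)))
    loss_d.backward()
    opt_d.step()

    # 2) Generator update: fool the discriminator and stay close to real ab
    opt_g.zero_grad()
    fake_logits = discriminator(torch.cat([L, fake_ab], dim=1))
    loss_g = (bce(fake_logits, torch.ones_like(fake_logits))
              + lambda_l1 * l1(fake_ab, real_ab))
    loss_g.backward()
    opt_g.step()

    return loss_d.item(), loss_g.item()
```

The gradients that the generator update leaves on the discriminator's parameters are cleared by `opt_d.zero_grad()` at the start of the next step.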

For both datasets, good results are obtained for the test samples.

However, all images used for training, in both datasets, are sharp and free of the cracks and other damage found in older photos. When a damaged image is passed to the network, it is not able to colorize it correctly.

This could be addressed by adding artificial stripes and artifacts to the L channel of the images during training, modifying the generator to also produce the L channel, and using all 3 channels in the L1 loss. The discriminator would receive the damaged version of the L channel and a Lab image. This way the network would be able to both colorize and repair damaged old photos.
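As a rough illustration of the artificial damage idea, the sketch below paints a few random vertical stripes onto a normalized L channel. The function name, the stripe shape and all parameters are hypothetical; real scratches and cracks are more varied, and the generator and loss changes described above are not covered here.

```python
import torch


def add_stripes(L, n_stripes=3, max_width=3, value=1.0, generator=None):
    """Hypothetical augmentation: draw vertical stripes on an L channel.

    L: tensor of shape (1, H, W) with values in [0, 1].
    Returns a damaged copy; the input tensor is left untouched.
    """
    damaged = L.clone()
    width_total = damaged.shape[-1]
    for _ in range(n_stripes):
        # Random stripe width in [1, max_width] and random left edge
        width = torch.randint(1, max_width + 1, (1,), generator=generator).item()
        x = torch.randint(0, width_total - width + 1, (1,), generator=generator).item()
        damaged[:, :, x:x + width] = value  # bright stripe over full height
    return damaged
```

During training, the damaged tensor would be the generator input while the clean L and ab channels serve as the target.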

Finally, even better results can be obtained by using a larger number of training samples and training for more epochs, especially for the COCO dataset.