An ML model that classifies whether a quality complaint is valid or invalid. The model is trained on a fictional dataset of quality complaints and their corresponding labels.

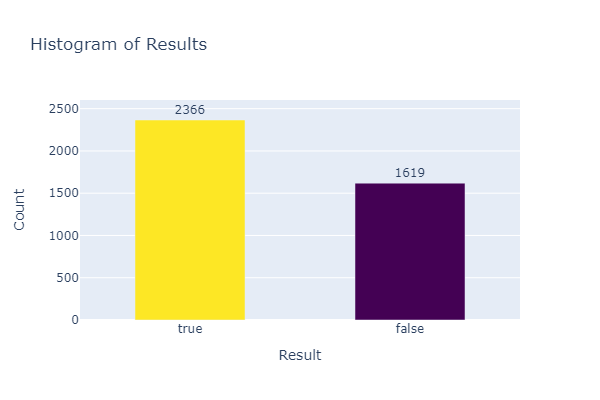

The fictional dataset consists of quality complaints and their corresponding results. The results are binary, where True indicates a valid complaint and False indicates an invalid complaint.

The data columns are as follows:

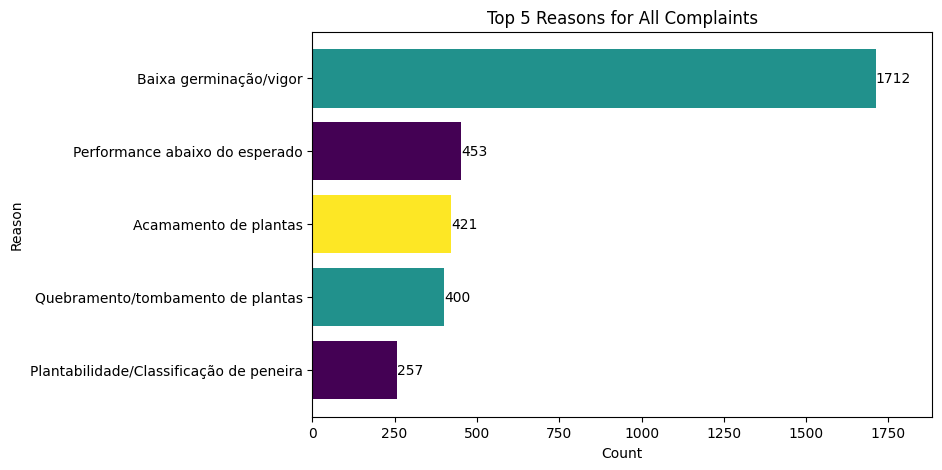

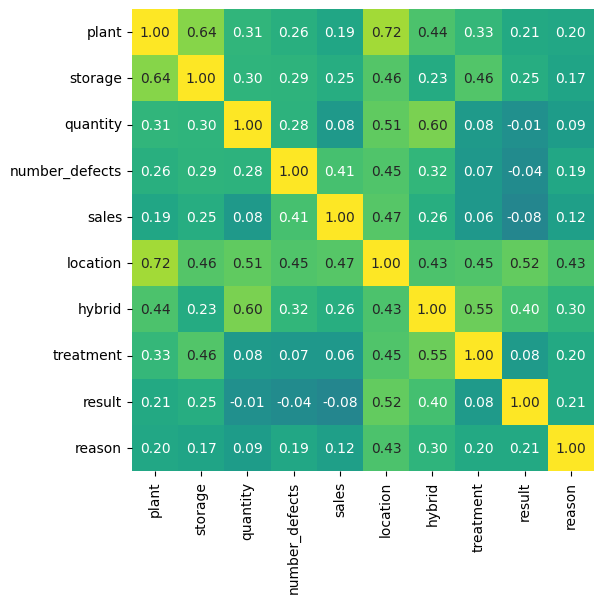

- `plant`: The plant where the product was made
- `storage`: The storage location of the product
- `quantity`: The quantity of the product
- `number_defects`: The number of defects in the product
- `sales`: The volume of sales
- `location`: The location of the complaint
- `hybrid`: The hybrid (commercial name) of the product
- `treatment`: The treatment of the product
- `reason`: The reason for the complaint
- `result`: The result of the complaint (True for valid, False for invalid)

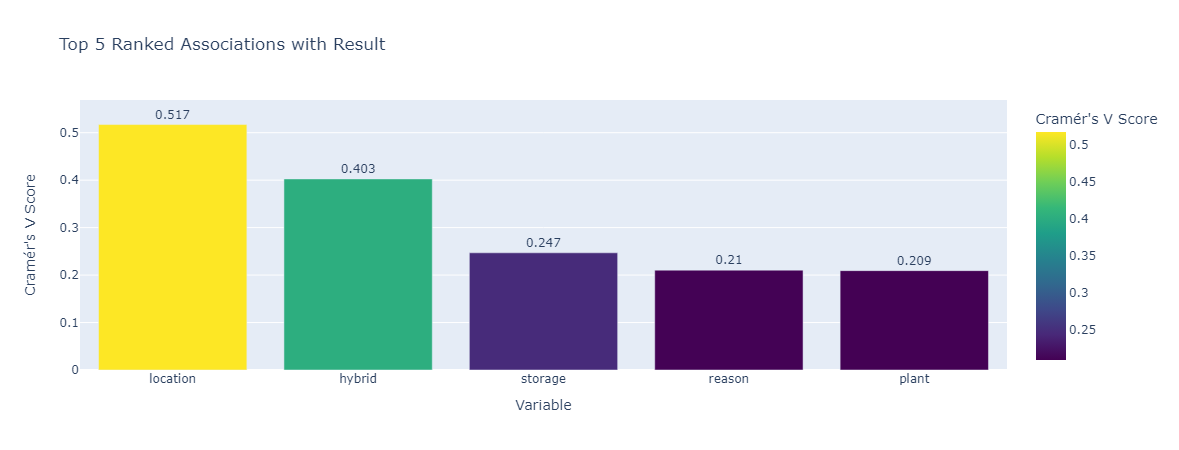

The Chi-Squared Test of Independence is used to determine if there is a significant association between two categorical variables. The null hypothesis (H0) is that the variables are independent, and the alternative hypothesis (H1) is that the variables are dependent.

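The formula is:

$$\chi^2 = \sum_{i=1}^{r} \sum_{j=1}^{k} \frac{(O_{ij} - E_{ij})^2}{E_{ij}}, \qquad E_{ij} = \frac{R_i \, C_j}{n}$$

where $O_{ij}$ is the observed count in row $i$ and column $j$ of the contingency table, $E_{ij}$ is the count expected under independence, $R_i$ and $C_j$ are the row and column totals, $n$ is the total number of observations, and $r$ and $k$ are the numbers of rows and columns. The statistic has $(r - 1)(k - 1)$ degrees of freedom.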
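The statistic can be computed directly from a contingency table of counts. The sketch below is a minimal pure-Python version; `contingency_table` and `chi_squared` are hypothetical helper names, and it computes the plain statistic without any continuity correction. In practice `scipy.stats.chi2_contingency` returns the statistic together with the p-value, but note that it applies Yates' continuity correction by default when there is one degree of freedom (`correction=True`).

```python
from collections import Counter


def contingency_table(xs, ys):
    """Cross-tabulate two categorical columns into a list-of-lists table of counts."""
    rows = sorted(set(xs))
    cols = sorted(set(ys))
    counts = Counter(zip(xs, ys))
    return [[counts[(r, c)] for c in cols] for r in rows]


def chi_squared(table):
    """Return (chi-squared statistic, degrees of freedom) for an r x k table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence: row total * column total / n.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof


# Hypothetical example: is the treatment column associated with the result?
treatment = ["A", "A", "B", "B"]
result = [True, True, False, False]
stat, dof = chi_squared(contingency_table(treatment, result))
print(stat, dof)  # 4.0 1
```

Building the table from the observed values guarantees that no row or column total is zero; a hand-built table with an empty row or column would need to drop it first to avoid dividing by a zero expected count.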

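Cramér's V is a scaled version of the chi-squared test statistic that measures the strength of the association between two categorical variables, ranging from 0 (no association) to 1 (perfect association).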

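The formula is:

$$V = \sqrt{\frac{\chi^2 / n}{\min(k - 1,\ r - 1)}}$$

where $\chi^2$ is the chi-squared statistic, $n$ is the total number of observations, and $r$ and $k$ are the numbers of rows and columns of the contingency table.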
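Given a chi-squared statistic, the scaling to Cramér's V is a one-liner. This is a minimal sketch; `cramers_v` is a hypothetical helper name, and it implements the plain (not bias-corrected) version of the statistic.

```python
import math


def cramers_v(chi2, n, n_rows, n_cols):
    """Cramér's V from a chi-squared statistic, sample size n, and table shape (r x k)."""
    min_dim = min(n_rows - 1, n_cols - 1)
    if min_dim == 0:
        raise ValueError("Cramér's V needs at least 2 rows and 2 columns")
    return math.sqrt((chi2 / n) / min_dim)


# A 2x2 table with chi2 = 4.0 and n = 4 is perfectly associated.
print(cramers_v(4.0, 4, 2, 2))  # 1.0
```

Dividing by $n$ removes the dependence on sample size, and dividing by $\min(k - 1, r - 1)$ removes the dependence on table shape, which is what makes V comparable across feature pairs with different numbers of categories.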

The dataset is split into training and testing sets, with 80% of the data used for training and 20% for testing. The following models are trained on the dataset:

- LightGBM

- Random Forest
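An 80/20 split followed by model training might look like the sketch below, using scikit-learn. It is not the project's actual code: the data is a synthetic stand-in from `make_classification` (assuming the complaint features have already been numerically encoded), and the hyperparameters, `stratify=y`, and `random_state` values are illustrative assumptions. The categorical columns (`plant`, `storage`, `location`, `hybrid`, `treatment`, `reason`) would need encoding (for example with `OneHotEncoder` or `OrdinalEncoder`) before `RandomForestClassifier` can use them. `lightgbm.LGBMClassifier` follows the same scikit-learn `fit`/`predict_proba` interface and could be swapped in for the LightGBM run.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the encoded complaint features and the binary result label.
X, y = make_classification(n_samples=500, n_features=8, random_state=0)

# 80% training / 20% testing; stratify keeps the valid/invalid ratio similar in both sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

model = RandomForestClassifier(n_estimators=200, random_state=42)
model.fit(X_train, y_train)

# Predicted probability of the positive class ("valid complaint"), needed for ROC-AUC.
proba_valid = model.predict_proba(X_test)[:, 1]
```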

The models are evaluated using the following metrics:

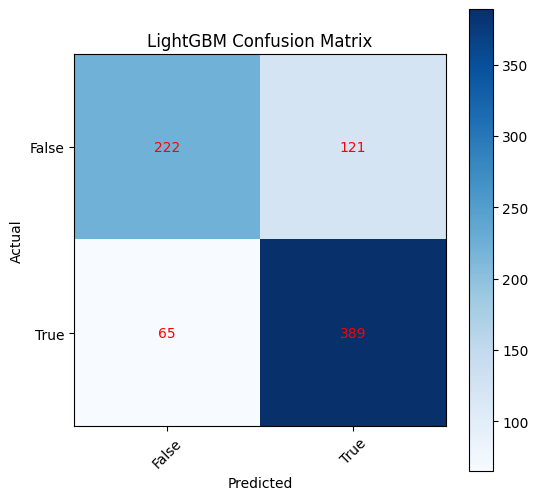

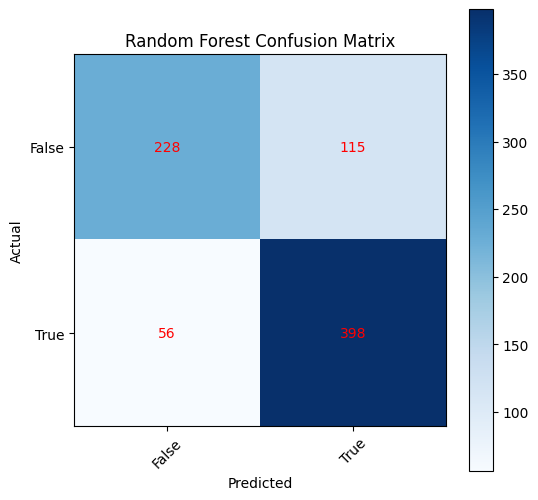

- Accuracy: The proportion of predictions that are correct (the number of correct predictions divided by the total number of predictions).

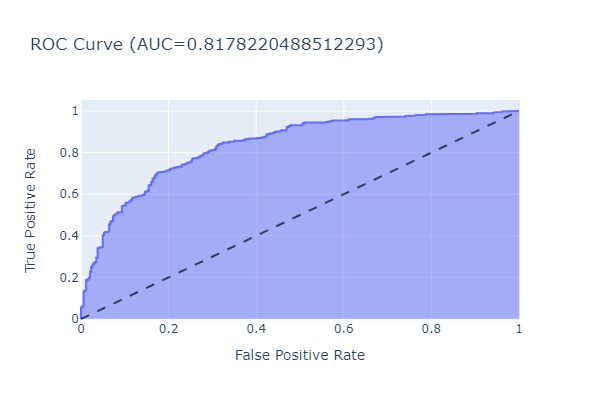

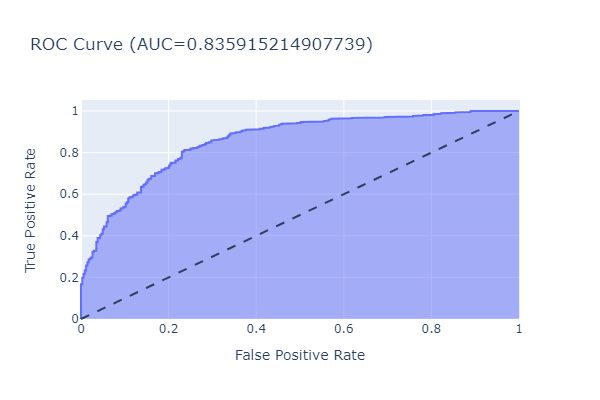

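- ROC-AUC: The area under the receiver operating characteristic (ROC) curve, which plots the true positive rate against the false positive rate across all classification thresholds. A value of 0.5 corresponds to random guessing and 1.0 to perfect separation of valid and invalid complaints.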
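Both metrics are simple enough to compute by hand. The sketch below uses hypothetical helper names; `roc_auc` relies on the fact that the area under the ROC curve equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half. The library equivalents are `sklearn.metrics.accuracy_score` and `sklearn.metrics.roc_auc_score`.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true, scores):
    """ROC-AUC as the probability that a random positive outranks a random negative.

    Ties count as half a win. This pairwise version is O(P * N), fine for illustration.
    """
    pos = [s for t, s in zip(y_true, scores) if t]
    neg = [s for t, s in zip(y_true, scores) if not t]
    if not pos or not neg:
        raise ValueError("ROC-AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


print(accuracy([True, False, True, True, False], [True, False, False, True, False]))  # 0.8
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

Accuracy uses hard class predictions, whereas ROC-AUC uses the predicted scores (for example, the probability of a valid complaint), so it does not depend on any single decision threshold.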

LightGBM is a gradient boosting framework that uses tree-based learning algorithms. It is designed for speed and efficiency, using histogram-based split finding and leaf-wise tree growth, and is widely used in machine learning competitions.
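The core idea of gradient boosting for binary classification is to start from the base-rate log-odds and repeatedly fit a small tree to the negative gradient of the log-loss, which is simply `y - p`. The sketch below illustrates only that idea on a single numeric feature; it is not LightGBM's algorithm. LightGBM adds histogram binning, leaf-wise growth of deeper trees, and second-order (gradient and Hessian) leaf values, whereas this sketch uses depth-1 stumps whose leaf values are plain mean residuals. The function names, `n_rounds`, and `learning_rate` are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_regression_stump(x, r):
    """Depth-1 regression tree on one numeric feature, fit to targets r by least squares."""
    best = None
    for t in sorted(set(x)):
        left = [ri for xi, ri in zip(x, r) if xi <= t]
        right = [ri for xi, ri in zip(x, r) if xi > t]
        if not right:  # the largest threshold leaves the right side empty
            continue
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # all x values identical: fall back to a constant prediction
        mean = sum(r) / len(r)
        return lambda v: mean
    _, t, lm, rm = best
    return lambda v: lm if v <= t else rm


def gradient_boosting(x, y, n_rounds=50, learning_rate=0.3):
    """Binary log-loss boosting: each stump fits the pseudo-residuals y - p."""
    p0 = sum(y) / len(y)
    f0 = math.log(p0 / (1 - p0))  # initial log-odds; assumes both classes are present
    stumps = []

    def raw_score(v):
        return f0 + learning_rate * sum(s(v) for s in stumps)

    for _ in range(n_rounds):
        residuals = [yi - sigmoid(raw_score(xi)) for xi, yi in zip(x, y)]
        stumps.append(fit_regression_stump(x, residuals))
    return lambda v: sigmoid(raw_score(v))


predict = gradient_boosting([1, 2, 3, 4, 5, 6, 7, 8], [0, 0, 0, 0, 1, 1, 1, 1])
print(predict(1) < 0.5 < predict(8))  # True
```

The learning rate shrinks each stump's contribution, so the model approaches the training labels gradually rather than in one step; this shrinkage is also a standard regularization knob in LightGBM.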

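Random Forest is an ensemble learning method that constructs many decision trees during training, each on a bootstrap sample of the data and considering a random subset of features when splitting, and outputs the mode of the classes predicted by the individual trees (a majority vote).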
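The two sources of randomness (bootstrap sampling and random feature subsets) plus the majority vote can be shown in a few lines. This is a deliberately simplified sketch with hypothetical names: each tree is a depth-1 stump chosen by Gini impurity, whereas real random forests grow deep trees and draw a fresh feature subset at every split. Note also that scikit-learn's `RandomForestClassifier` averages the trees' predicted class probabilities instead of taking a hard majority vote.

```python
import random
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def fit_stump(X, y, features):
    """Best single split (feature, threshold) by weighted Gini impurity over the given features."""
    best = None
    for f in features:
        for t in sorted({row[f] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                left_label = Counter(left).most_common(1)[0][0]
                right_label = Counter(right).most_common(1)[0][0]
                best = (score, f, t, left_label, right_label)
    if best is None:  # no valid split: predict the majority class everywhere
        majority = Counter(y).most_common(1)[0][0]
        return lambda row: majority
    _, f, t, left_label, right_label = best
    return lambda row: left_label if row[f] <= t else right_label


def random_forest(X, y, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample (with replacement)
        features = rng.sample(range(d), max_features)  # random feature subset
        trees.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], features))
    # The forest predicts the mode of the individual trees' votes.
    return lambda row: Counter(tree(row) for tree in trees).most_common(1)[0][0]
```

For example, `random_forest([[i, i] for i in range(10)], [0] * 5 + [1] * 5)` returns a predictor that labels `[0, 0]` as class 0 and `[9, 9]` as class 1. Averaging many trees trained on different resamples reduces variance compared with a single tree, which is the main reason the ensemble generalizes better.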

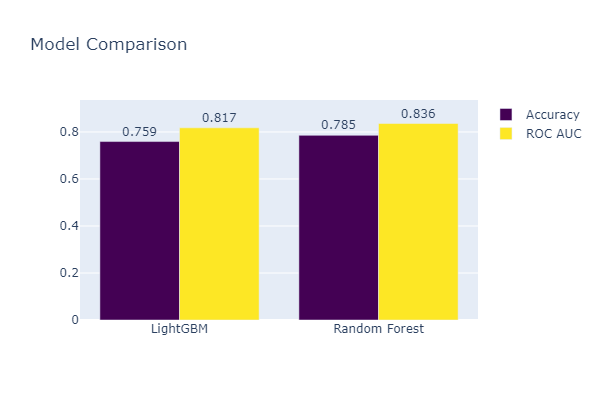

The results are as follows:

| Model | Accuracy | ROC-AUC |
|---|---|---|
| LightGBM | 0.759 | 0.817 |
| Random Forest | 0.785 | 0.836 |

The Random Forest model outperforms the LightGBM model on both metrics: accuracy of 0.785 vs. 0.759 and ROC-AUC of 0.836 vs. 0.817.