Code for the post "Setting up end-to-end tests for cloud data pipelines".

This is what our data pipeline architecture looks like.

For our local setup, we will use:

- An open-source SFTP server

- A Moto server to mock S3 and Lambda

- Postgres as a substitute for AWS Redshift

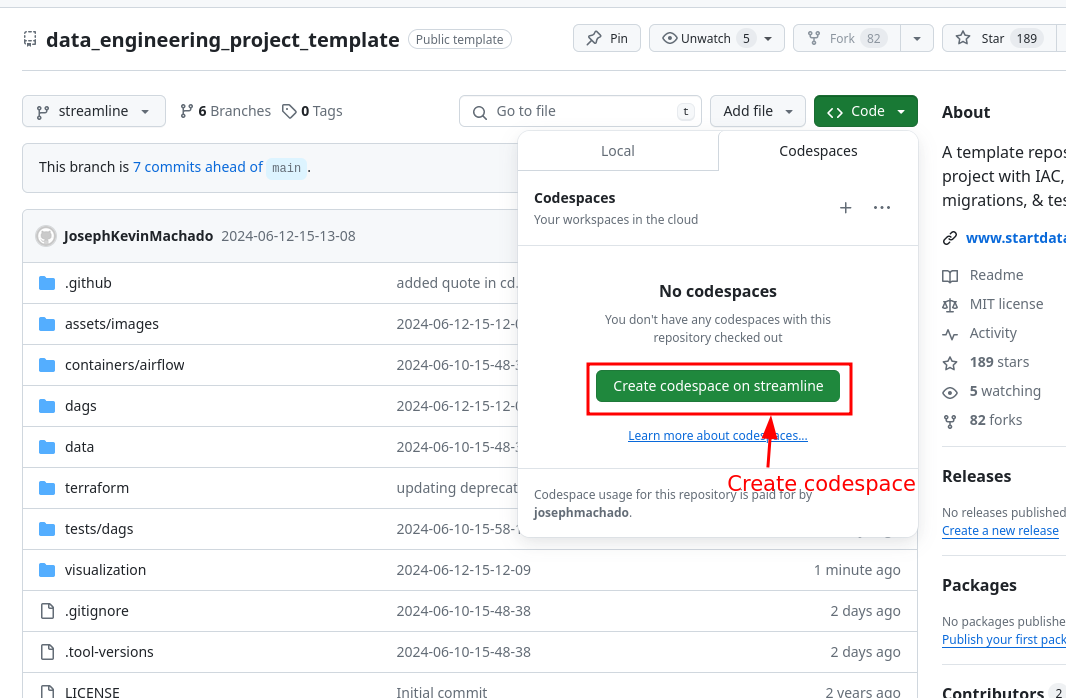
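One way a test suite can target these local substitutes instead of AWS is to read each service endpoint from an environment variable and fall back to a local default. The sketch below illustrates that idea only and is not code from this repository: the variable names, ports, and credentials are all hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class LocalEndpoints:
    """Where the tests expect each local stand-in to listen (all values hypothetical)."""

    sftp_host: str
    sftp_port: int
    moto_url: str  # Moto server standing in for S3 and Lambda
    warehouse_dsn: str  # Postgres standing in for Redshift


def load_endpoints(env: Optional[Mapping[str, str]] = None) -> LocalEndpoints:
    """Build endpoints from environment variables, using local defaults when unset."""
    env = os.environ if env is None else env
    return LocalEndpoints(
        sftp_host=env.get("SFTP_HOST", "localhost"),
        sftp_port=int(env.get("SFTP_PORT", "2222")),  # hypothetical port
        moto_url=env.get("MOTO_URL", "http://localhost:3000"),  # hypothetical port
        warehouse_dsn=env.get(
            "WAREHOUSE_DSN",
            "postgresql://user:password@localhost:5432/warehouse",  # placeholder credentials
        ),
    )
```

A value like `moto_url` would typically be passed as the `endpoint_url` argument when creating a boto3 client, so the same pipeline code can talk to either the mock or the real service.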

You can run this data pipeline using GitHub codespaces. Follow the instructions below.

- Create codespaces by going to the e2e_datapipeline_test repository, cloning(or fork) it and then clicking on

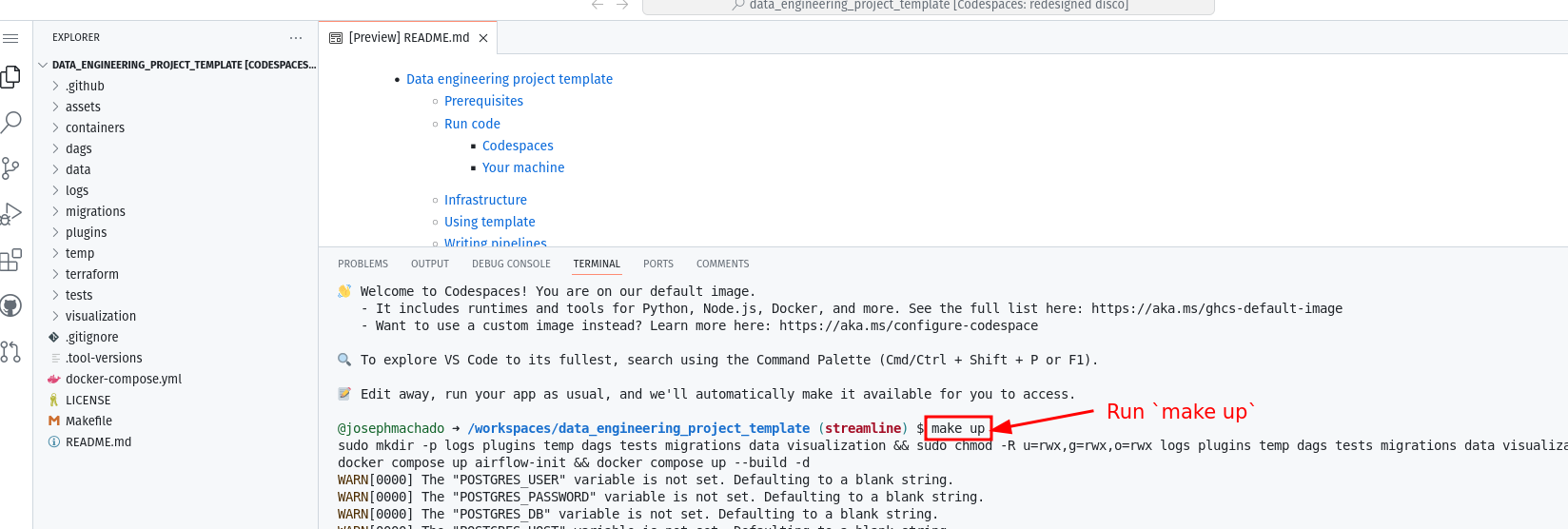

Create codespaces on mainbutton. - Wait for codespaces to start and for codespaces to automatically install the libraries in

requirements.txt, then in the terminal typemake up && export PYTHONPATH=${PYTHONPATH}:./src. - Wait for the above to complete.

- Now you can run our event pipeline end to end test using

pytestcommand and you can clean up code with themake cicommand

NOTE: The screenshots below, show the general process to start codespaces, please follow the instructions shown above for this project.

Note: Make sure to switch off your codespaces instance when you are done, since free usage is limited; see the GitHub Codespaces documentation for details.

To run locally, you will need git, Python 3, Docker, and make.

Clone the repository, create a virtual environment, set the Python path, spin up the containers, and run the tests as shown below.

git clone https://github.com/josephmachado/e2e_datapipeline_test.git

cd e2e_datapipeline_test

python -m venv ./env

source env/bin/activate # use virtual environment

pip install -r requirements.txt

make up # spins up the SFTP, Motoserver, Warehouse docker containers

export PYTHONPATH=${PYTHONPATH}:./src # set path to enable imports

We can run our tests using pytest.

pytest # runs all tests under the ./test folder

Clean up

make ci

make down # spins down the docker containers

deactivate # stop using the virtual environment
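Containers started by `make up` can take a few seconds before they accept connections, so tests run immediately afterwards may fail on a refused connection. A small readiness check along the lines of this sketch can help. It is not part of the repository, and the host and port you pass in depend on how the containers are configured.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once a TCP connection to host:port succeeds, False if timeout elapses."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: wait briefly, then retry.
            time.sleep(interval)
    return False
```

For example, calling `wait_for_port("localhost", 5432)` before `pytest` would block until a service answers on that port; 5432 is only an assumed value for the warehouse container. An open port shows that the service is listening, not that it is fully initialized, so treat this as a coarse check.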