The entire application is governed through the docker-compose.yml file and is built with docker compose:

- Install `docker` and `docker-compose`; this may need a restart of your system, since Docker is a very low-level program.

- Run `docker compose up --build` as either a user with permissions to docker, or with `sudo`/`doas`. The build flag is required if the backend or frontend code has been changed; additionally, `-d` will make it detach from the terminal.

- Wait for the scraper in the backend to complete scraping pages; this may take about 15 minutes.

- Run `docker compose restart`; this is required so that the parser will run and so that the vector store can create new embeddings.

- ???

- PROFIT!!!

The backend is built with Clojure, a functional programming language based on Lisp which runs on the Java Virtual Machine.

This part serves multiple purposes: it is responsible for scraping the course pages from KU as well as the statistics from STADS.

The backend also serves the frontend, contains the "datascript" database, and is responsible for occasionally refreshing various services (this feature is partially broken at the moment).

This service, the vector store, is responsible for the semantic searches used in the `get_course_overviews` route. Instead of using trigrams or full-text search, we decided to use vector searches for the lower latency.
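To illustrate the idea behind a vector search, here is a minimal sketch in Python. It is not the code this service runs: the embeddings are made-up three-dimensional vectors, the course ids are hypothetical, and the brute-force loop stands in for whatever index the real vector store uses. It only shows the principle that courses are ranked by how close their embedding is to the query embedding.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def search(query_vec, index, top_k=2):
    """Return the ids of the top_k entries most similar to query_vec."""
    scored = [(cosine_similarity(query_vec, vec), cid) for cid, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [cid for _, cid in scored[:top_k]]


# Hypothetical toy embeddings; real embeddings come from a model
# and have hundreds of dimensions.
index = {
    "linear-algebra": [0.9, 0.1, 0.0],
    "machine-learning": [0.7, 0.6, 0.1],
    "medieval-history": [0.0, 0.1, 0.9],
}
query = [0.8, 0.3, 0.0]  # hypothetical embedding of the user's search text

print(search(query, index))
```

Unlike trigram or full-text matching, the ranking here depends only on the embeddings, so a query can match a course that shares no words with it.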

This service is the parser that takes the scraped course pages and parses them into a format we can use in the database for searching and for serving to the frontend.
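As a rough picture of what such a parsing step can look like, here is a minimal sketch using only the Python standard library. Everything in it is an assumption made for illustration: the page layout (a title in `h1`, text in `p` tags), the `CoursePageParser` and `parse_course_page` names, and the output fields are hypothetical and do not reflect the actual parser or database schema.

```python
from html.parser import HTMLParser


class CoursePageParser(HTMLParser):
    """Collect the <h1> title and <p> paragraphs from a (hypothetical) course page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.paragraphs = []
        self._current = None  # tag currently being captured, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "p"):
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        if self._current is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            # Collapse runs of whitespace left over from the HTML layout.
            text = " ".join("".join(self._buffer).split())
            if tag == "h1":
                self.title = text
            elif text:
                self.paragraphs.append(text)
            self._current = None


def parse_course_page(html):
    """Turn raw HTML into a small dict that could be stored and searched."""
    parser = CoursePageParser()
    parser.feed(html)
    parser.close()
    return {"title": parser.title, "description": " ".join(parser.paragraphs)}


# Hypothetical scraped page.
page = "<html><body><h1>Linear Algebra</h1><p>Vectors and\n matrices.</p><p>7.5 ECTS.</p></body></html>"
course = parse_course_page(page)
print(course)
```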

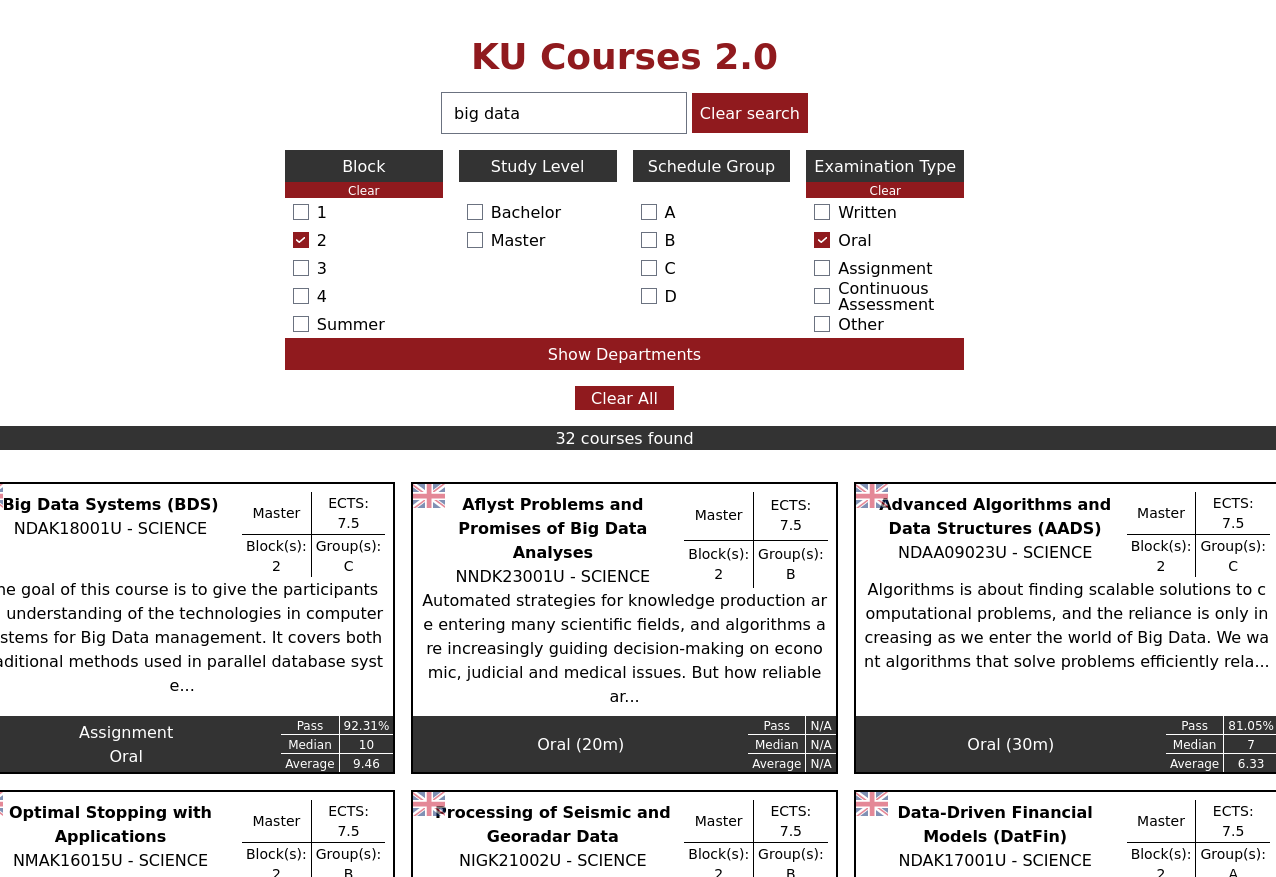

The frontend is built in Svelte/TypeScript. It is a highly responsive SPA that shows the courses as cards, which can be clicked to get a more detailed view of the course.

- Thanks to Jákup Lützen for creating the original course parser in Python.

- Thanks to Kristian Pedersen for creating the original frontend, and help in designing the architecture and first database schema.

- Thanks to Zander Bournonville for creating the statistics parser.