Official implementation of UniRef++, an extended version of UniRef (ICCV 2023).

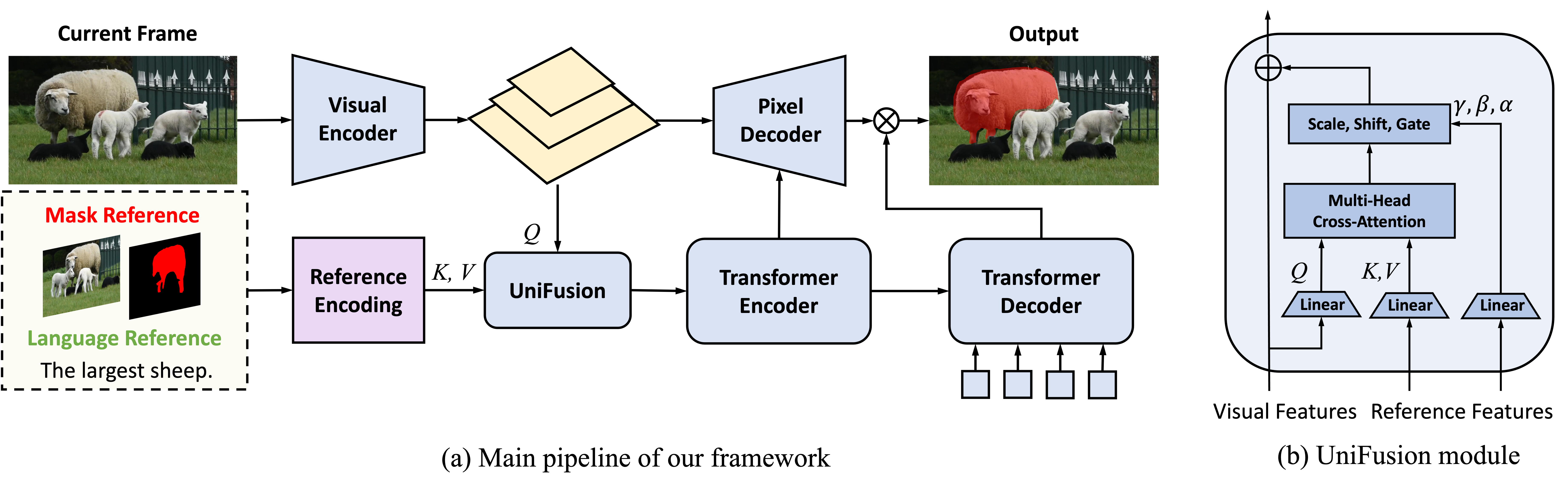

- UniRef/UniRef++ is a unified model for four object segmentation tasks, namely referring image segmentation (RIS), few-shot segmentation (FSS), referring video object segmentation (RVOS) and video object segmentation (VOS).

- At the core of UniRef++ is the UniFusion module, which injects various kinds of reference information (e.g., language expressions or annotated masks) into the network. We implement it with FlashAttention for high efficiency.

- UniFusion can serve as a plug-in component for foundation models such as SAM.
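To illustrate what "injecting reference information into the network" can look like, here is a minimal single-head cross-attention sketch in NumPy. Flattened visual features act as queries, and reference features (for example, text-token embeddings for RIS, or features of an annotated image or frame for FSS/VOS) act as keys and values. This is a hypothetical illustration, not the UniFusion code from this repository: the function name `fuse_reference`, the single head, the residual connection, and the random weights are all assumptions.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def fuse_reference(visual, reference, w_q, w_k, w_v, w_o):
    """Hypothetical single-head cross-attention fusion (NOT the repo's UniFusion).

    visual:    (N, C) flattened visual features, used as queries
    reference: (M, C) reference features, used as keys and values
    w_*:       (C, C) projection matrices (assumed; random in the demo below)
    Returns (N, C) visual features with reference information added residually.
    """
    q = visual @ w_q                      # (N, C)
    k = reference @ w_k                   # (M, C)
    v = reference @ w_v                   # (M, C)
    scale = 1.0 / np.sqrt(q.shape[-1])
    attn = softmax(q @ k.T * scale)       # (N, M), each row sums to 1
    return visual + (attn @ v) @ w_o      # residual injection of reference info


rng = np.random.default_rng(0)
C = 8
visual = rng.standard_normal((16, C))     # e.g. a 4x4 feature map, flattened
reference = rng.standard_normal((5, C))   # e.g. 5 text-token embeddings
weights = [0.1 * rng.standard_normal((C, C)) for _ in range(4)]

fused = fuse_reference(visual, reference, *weights)
print(fused.shape)  # (16, 8)
```

In PyTorch 2.x, `torch.nn.functional.scaled_dot_product_attention` computes the same softmax(QKᵀ/√d)V product and can dispatch to a FlashAttention kernel on supported GPUs and dtypes, which is one common way to get the kind of efficiency mentioned above.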

- Add Training Guide

- Add Evaluation Guide

- Add Data Preparation

- Release Model Checkpoints

- Release Code

video_demo.mp4


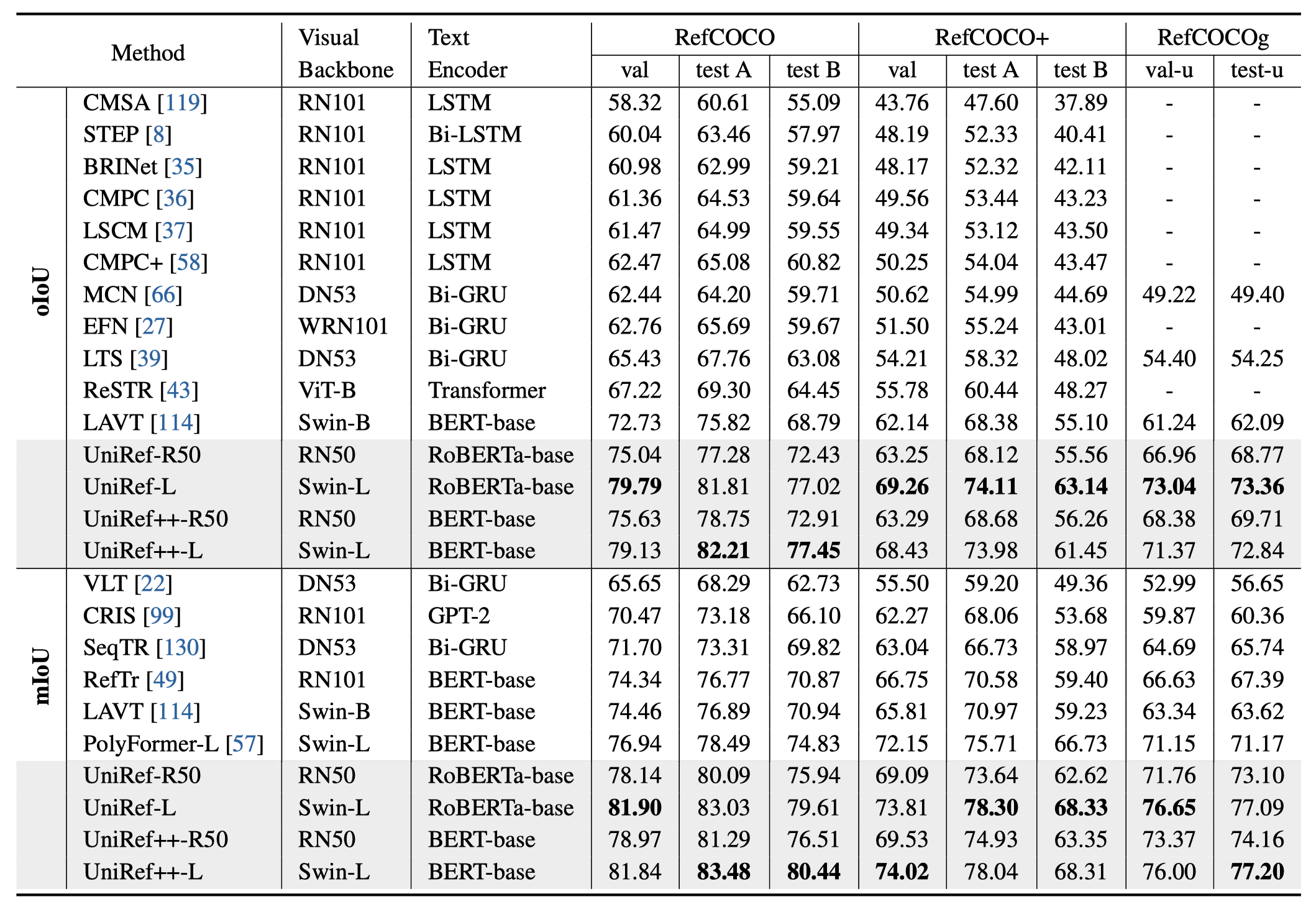

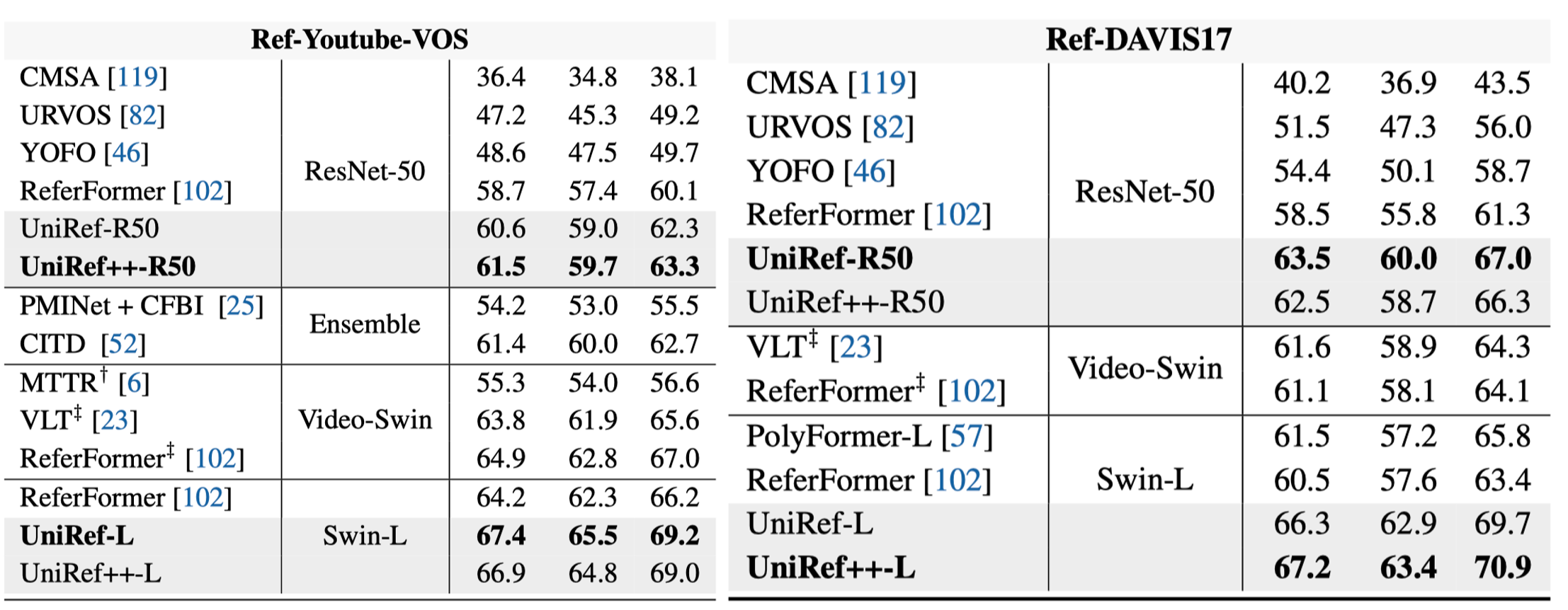

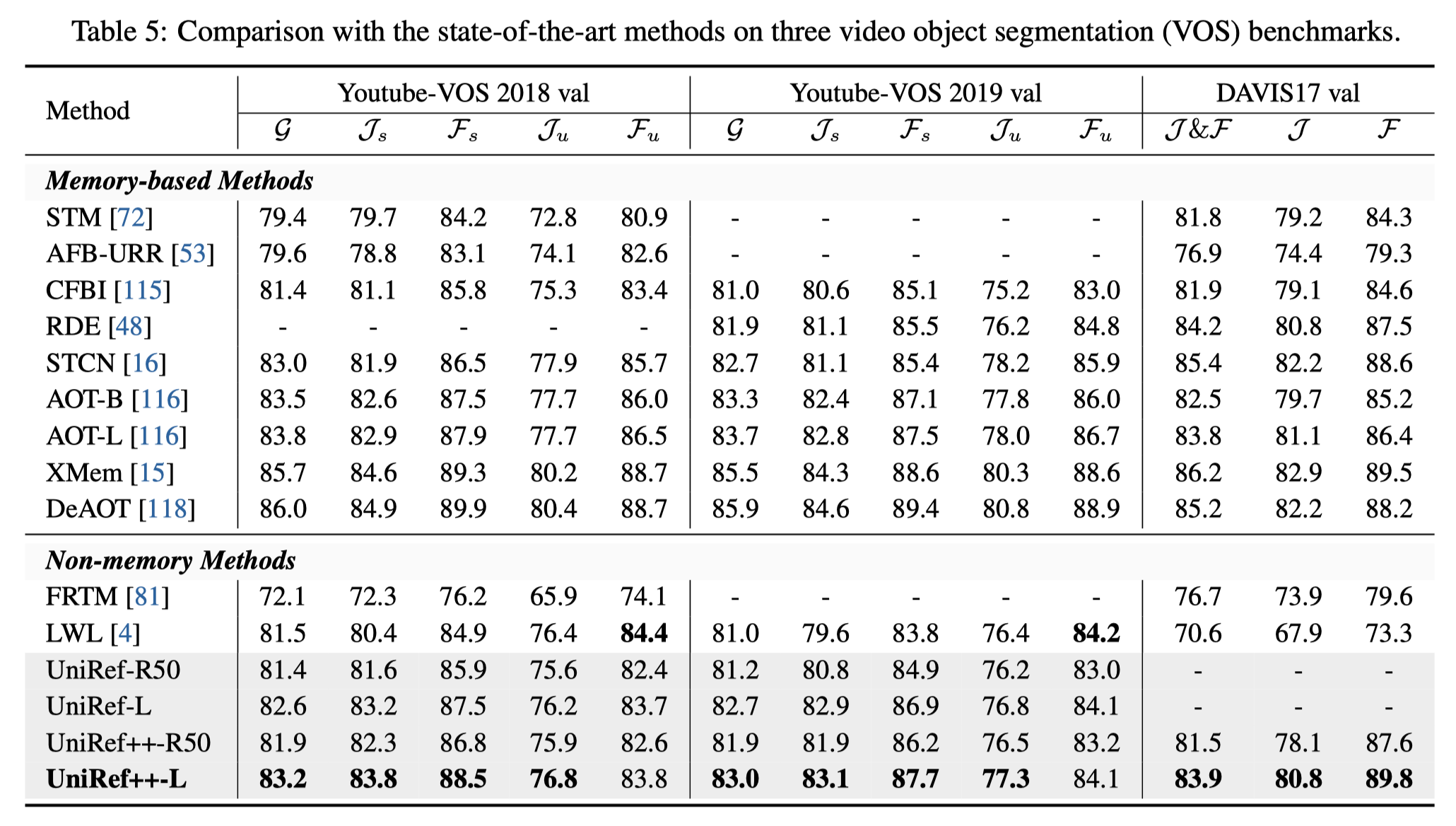

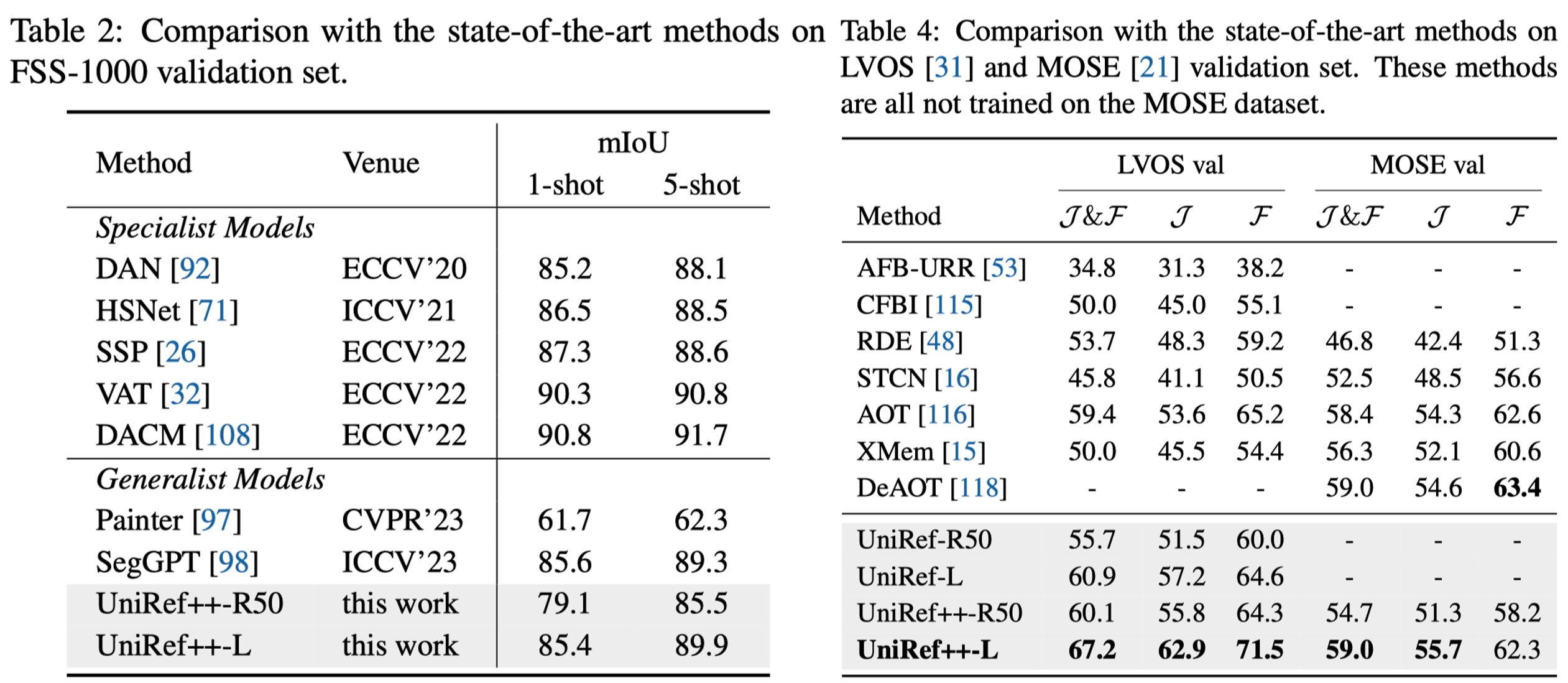

All results are reported on the validation sets.

| Model | RefCOCO | FSS-1000 | Ref-Youtube-VOS | Ref-DAVIS17 | Youtube-VOS18 | DAVIS17 | LVOS | Checkpoint |
|---|---|---|---|---|---|---|---|---|
| UniRef++-R50 | 75.6 | 79.1 | 61.5 | 63.5 | 81.9 | 81.5 | 60.1 | model |
| UniRef++-Swin-L | 79.1 | 85.4 | 66.9 | 67.2 | 83.2 | 83.9 | 67.2 | model |

Please see INSTALL.md for installation.

Please see DATA.md for data preparation.

Please see EVALUATION.md for evaluation.

If you find this project useful in your research, please consider citing:

    @article{wu2023uniref++,
      title={UniRef++: Segment Every Reference Object in Spatial and Temporal Spaces},
      author={Wu, Jiannan and Jiang, Yi and Yan, Bin and Lu, Huchuan and Yuan, Zehuan and Luo, Ping},
      journal={arXiv preprint arXiv:2312.15715},
      year={2023}
    }

    @inproceedings{wu2023uniref,
      title={Segment Every Reference Object in Spatial and Temporal Spaces},
      author={Wu, Jiannan and Jiang, Yi and Yan, Bin and Lu, Huchuan and Yuan, Zehuan and Luo, Ping},
      booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
      pages={2538--2550},
      year={2023}
    }

This project is built on the UNINEXT codebase. We also refer to the repositories Detectron2, Deformable DETR, STCN, and SAM. Thanks for their awesome work!