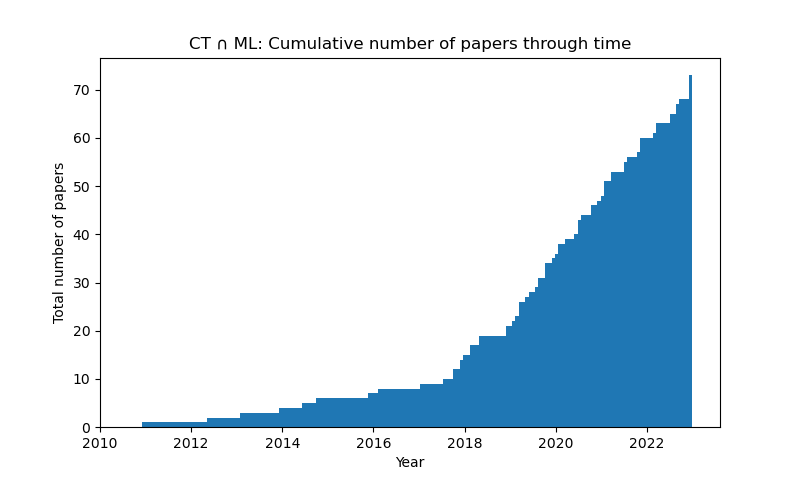

Category theory has been finding increasing applications in machine learning. This repository aims to list all of the relevant papers, grouped by field.

For an introduction to the ideas behind category theory, check out this link.

Some papers may be missing, and some appear under more than one field. Feel free to contribute to this list, preferably by creating a pull request.

- Categorical Foundations of Gradient-Based Learning

- Backprop as Functor

- Lenses and Learners

- Reverse Derivative Ascent

- Dioptics

- Learning Functors using Gradient Descent (longer version here)

- Compositionality for Recursive Neural Networks

- Deep neural networks as nested dynamical systems

- Neural network layers as parametric spans

- Categories of Differentiable Polynomial Circuits for Machine Learning

- Graph Neural Networks are Dynamic Programmers

- Natural Graph Networks

- Local Permutation Equivariance For Graph Neural Networks

- Sheaf Neural Networks

- Sheaf Neural Networks with Connection Laplacians

- Neural Sheaf Diffusion: A Topological Perspective on Heterophily and Oversmoothing in GNNs

- Graph Convolutional Neural Networks as Parametric CoKleisli morphisms

- Learnable Commutative Monoids for Graph Neural Networks

- Functorial String Diagrams for Reverse-Mode Automatic Differentiation

- Differentiable Causal Computations via Delayed Trace

- Simple Essence of Automatic Differentiation

- Reverse Derivative Categories

- Towards formalizing and extending differential programming using tangent categories

- Correctness of Automatic Differentiation via Diffeologies and Categorical Gluing

- Denotationally Correct, Purely Functional, Efficient Reverse-mode Automatic Differentiation

- Higher Order Automatic Differentiation of Higher Order Functions

- Space-time tradeoffs of lenses and optics via higher category theory

- Markov categories

- Markov Categories and Entropy

- Infinite products and zero-one laws in categorical probability

- A Convenient Category for Higher-Order Probability Theory

- Bimonoidal Structure of Probability Monads

- Representable Markov Categories and Comparison of Statistical Experiments in Categorical Probability

- De Finetti's construction as a categorical limit

- A Probability Monad as the Colimit of Spaces of Finite Samples

- A Probabilistic Dependent Type System based on Non-Deterministic Beta Reduction

- Probability, valuations, hyperspace: Three monads on Top and the support as a morphism

- Categorical Probability Theory

- Information structures and their cohomology

- Computable Stochastic Processes

- Compositional Semantics for Probabilistic Programs with Exact Conditioning

- A category theory framework for Bayesian Learning

- Causal Theories: A Categorical Perspective on Bayesian Networks

- Bayesian machine learning via category theory

- A Categorical Foundation for Bayesian probability

- Bayesian Open Games

- Causal Inference by String Diagram Surgery

- Disintegration and Bayesian Inversion via String Diagrams

- Categorical Stochastic Processes and Likelihood

- Bayesian Updates Compose Optically

- Automatic Backward Filtering Forward Guiding for Markov processes and graphical models

- A Channel-Based Perspective on Conjugate Priors

- A Type Theory for Probabilistic and Bayesian Reasoning

- Denotational validation of higher-order Bayesian inference

- The Geometry of Bayesian Programming

- Relational Reasoning for Markov Chains in a Probabilistic Guarded Lambda Calculus

- On Characterizing the Capacity of Neural Networks using Algebraic Topology

- Persistent-Homology-based Machine Learning and its Applications - A Survey

- Topological Expressiveness of Neural Networks

- Approximating the convex hull via metric space magnitude

- Practical applications of metric space magnitude and weighting vectors

- Weighting vectors for machine learning: numerical harmonic analysis applied to boundary detection

- The magnitude vector of images

- Neural Networks, Types, and Functional Programming

- Towards Categorical Foundations of Learning

- Graph Convolutional Neural Networks as Parametric CoKleisli morphisms

- Optics vs Lenses, Operationally

- Meta-learning and Monads

- Automata Learning: A Categorical Perspective

- A Categorical Framework for Learning Generalised Tree Automata

- Generalized Convolution and Efficient Language Recognition

- General supervised learning as change propagation with delta lenses

- From Open Learners to Open Games

- Learners' Languages

- A Constructive, Type-Theoretic Approach to Regression via Global Optimisation

- Characterizing the invariances of learning algorithms using category theory

- Functorial Manifold Learning

- Diegetic representation of feedback in open games

- Assessing the Unitary RNN as an End-to-End Compositional Model of Syntax

- Classifying Clustering Schemes

- Category Theory for Quantum Natural Language Processing

- Categorical Hopfield Networks